↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Unsupervised Visible-Infrared Person Re-identification (USVI-ReID) is a challenging task due to the lack of annotations and the significant differences between visible and infrared images. Existing methods often rely on simple cluster-based contrastive learning, focusing primarily on the common features of individuals and neglecting the inherent divergence and variety in the data. This leads to inaccurate pseudo-label generation and hinders performance.

This paper introduces a novel method called Progressive Contrastive Learning with Hard and Dynamic Prototypes (PCLHD) to address these limitations. PCLHD generates hard prototypes by selecting samples furthest from cluster centers, emphasizing divergence. It also employs dynamic prototypes, randomly chosen from clusters, to capture the data’s variety. The method incorporates a progressive learning strategy, gradually focusing on divergence and variety as training progresses. Experiments on two standard datasets (SYSU-MM01 and RegDB) show that PCLHD outperforms existing USVI-ReID methods, demonstrating its effectiveness in improving the accuracy and reliability of person re-identification in cross-modal scenarios.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in unsupervised person re-identification because it addresses the limitations of existing methods by focusing on commonality, divergence, and variety within data clusters. The proposed PCLHD method significantly improves the accuracy of person matching in visible-infrared images, opening new avenues for research in this challenging area and enhancing real-world applications such as surveillance and security systems.

Visual Insights#

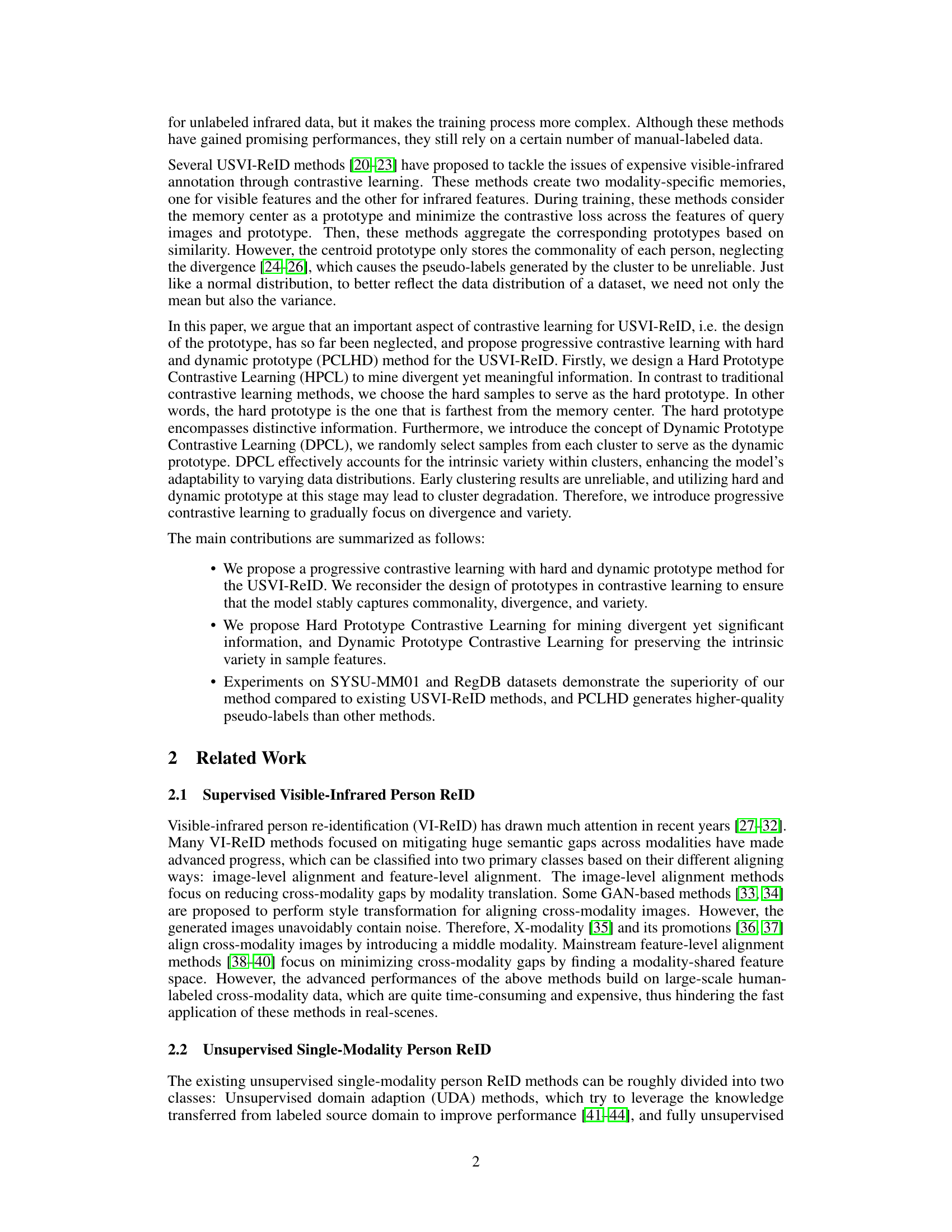

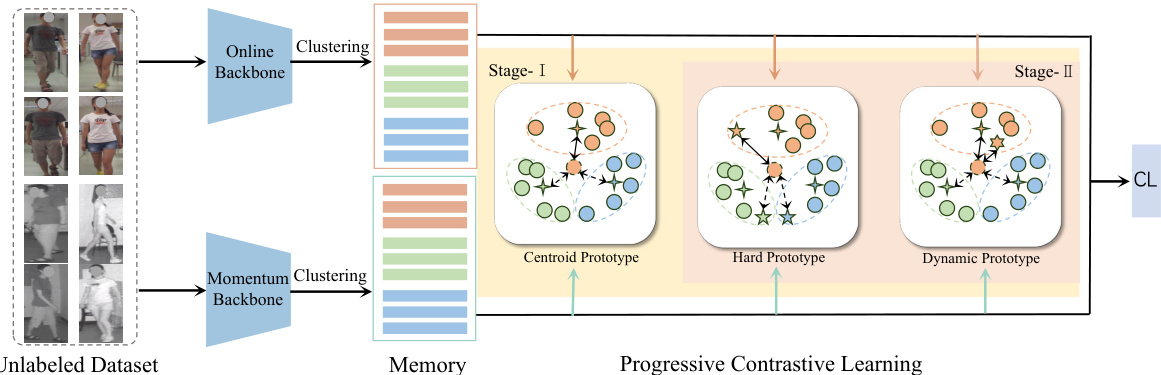

The figure illustrates the proposed PCLHD framework, which consists of two stages. Stage I employs contrastive learning with centroid prototypes to learn a discriminative representation. Stage II introduces contrastive learning with hard and dynamic prototypes to enhance the model’s focus on divergence and variety within clusters. The framework starts with an unlabeled dataset which is passed to both an online and momentum backbone network. The output of these networks populates the memory and is then passed to the progressive contrastive learning stage. This stage integrates the centroid, hard, and dynamic prototype approaches to optimize the model.

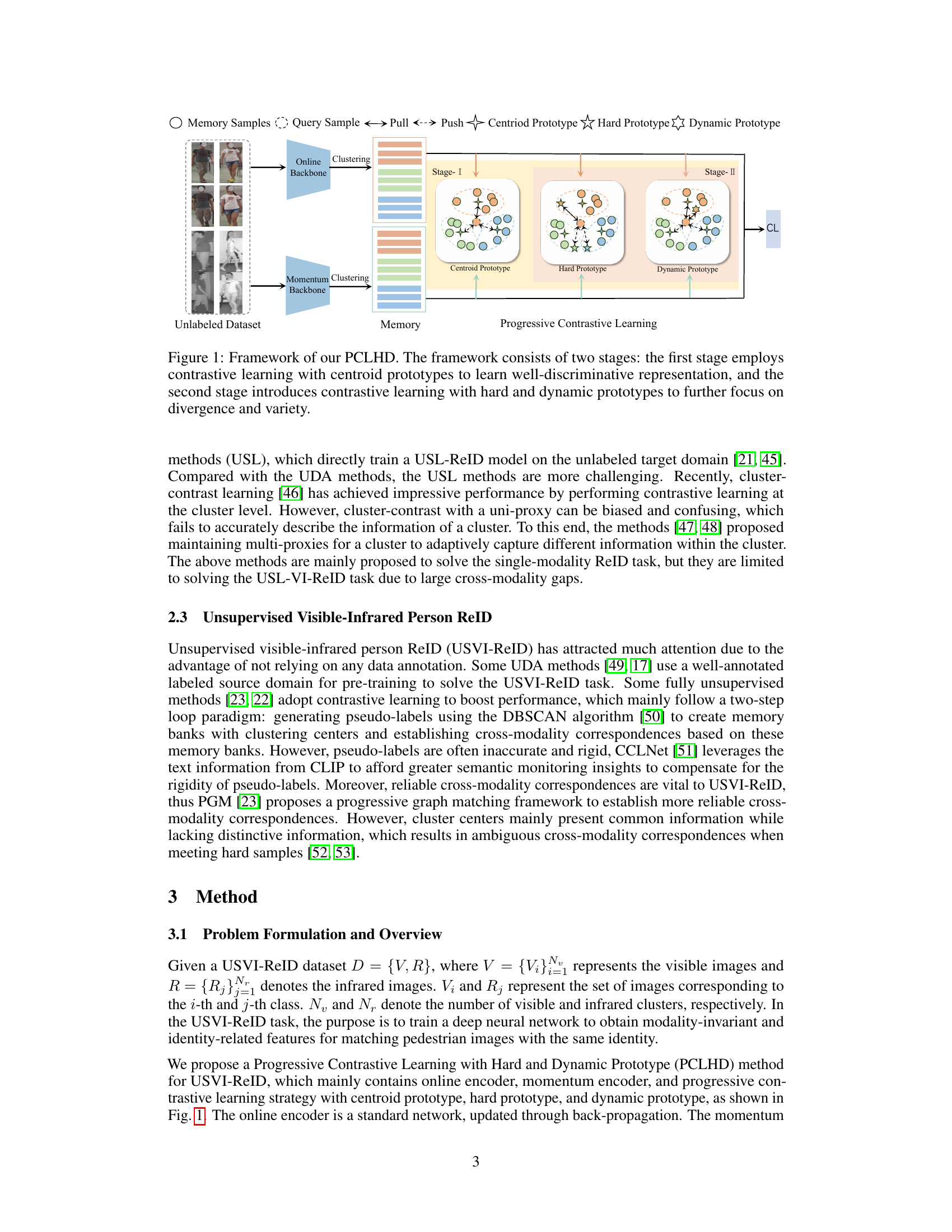

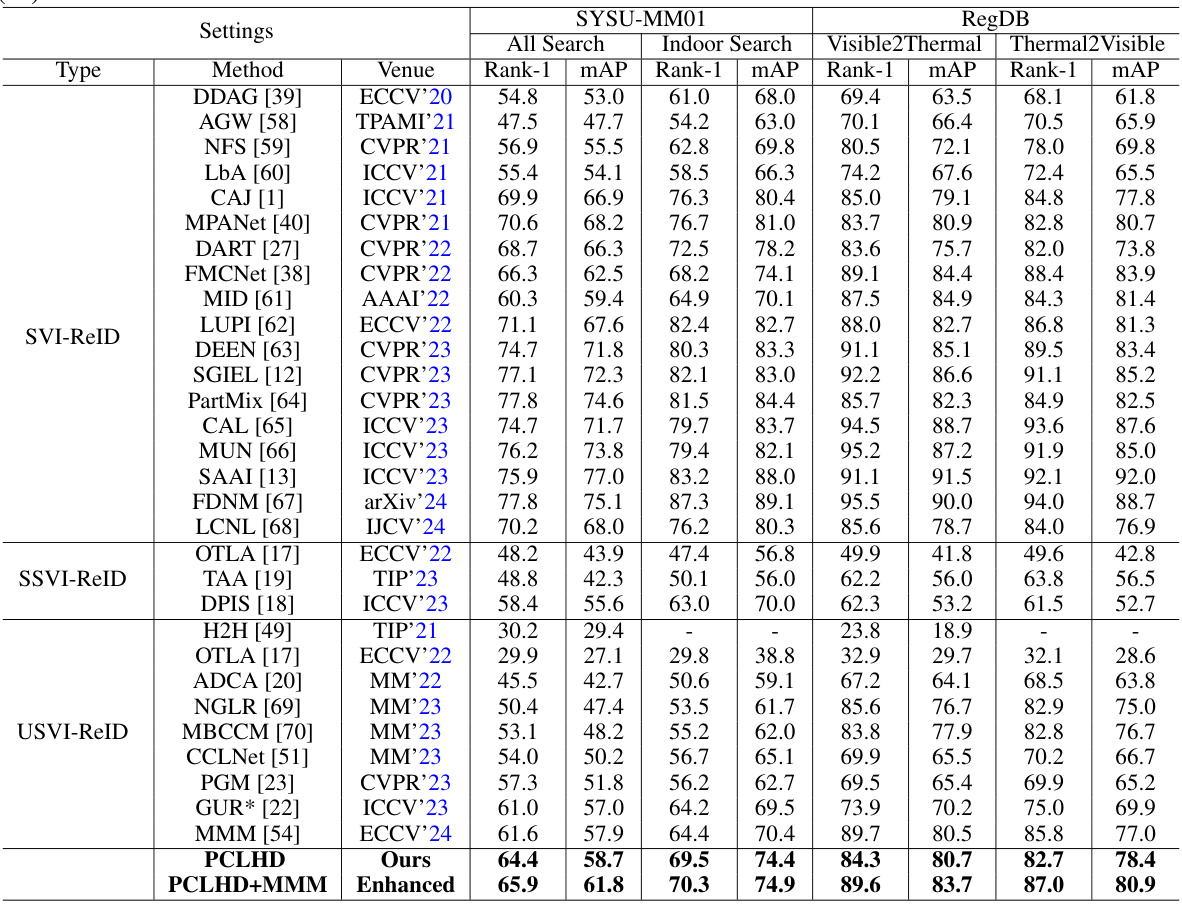

This table compares the proposed PCLHD method with various state-of-the-art methods for visible-infrared person re-identification on two benchmark datasets: SYSU-MM01 and RegDB. It shows results for supervised (SVI-ReID), semi-supervised (SSVI-ReID), and unsupervised (USVI-ReID) approaches. Evaluation metrics include Rank-1 accuracy and mean Average Precision (mAP). A separate column indicates results when camera information is excluded.

In-depth insights#

USVI-ReID Challenges#

Unsupervised Visible-Infrared Person Re-identification (USVI-ReID) presents significant challenges due to the inherent difficulties in matching individuals across disparate visible and infrared modalities without labeled data. The lack of annotations necessitates the development of unsupervised learning strategies, making model training substantially harder than in supervised settings. Cross-modality discrepancies, stemming from differences in spectral properties and imaging techniques, create a large semantic gap. Furthermore, variations in viewpoint, pose, illumination, and occlusion within each modality add complexity. Addressing these challenges requires robust feature extraction and representation learning techniques to bridge the modality gap and ensure identity preservation, while effectively managing the inherent uncertainty in unsupervised learning. Consequently, methods are needed that are particularly adept at handling hard examples and outliers, which are common in unlabeled data. Finally, evaluating performance is complicated due to the absence of ground truth labels, necessitating careful consideration of appropriate evaluation metrics.

PCLHD Framework#

The PCLHD framework, a progressive contrastive learning approach for unsupervised visible-infrared person re-identification (USVI-ReID), tackles the limitations of existing cluster-based methods by focusing on commonality, divergence, and variety. It ingeniously introduces hard prototypes (furthest from cluster centers) to highlight divergence and dynamic prototypes (randomly sampled from clusters) to capture variety. This dual prototype strategy, combined with a progressive learning scheme, allows the model to gradually shift attention from commonality to divergence and variety, leading to more robust and reliable pseudo-label generation and improved performance. The progressive aspect is crucial, preventing early cluster degradation and enabling stable learning of discriminative features. This framework presents a significant advancement in USVI-ReID by addressing the inherent challenges of modality discrepancy and unsupervised learning.

Hard & Dynamic Prototypes#

The innovative approach of using hard and dynamic prototypes in contrastive learning significantly enhances unsupervised visible-infrared person re-identification (USVI-ReID). Traditional methods rely on centroid prototypes, capturing only the commonality within a cluster. Hard prototypes, selected as the furthest samples from the cluster center, explicitly address divergence by highlighting distinctive features, improving discrimination. Dynamic prototypes, randomly sampled from clusters, introduce variability, making the model more robust to varying data distributions and preventing overfitting to a single representative. This dual-prototype strategy, combined with progressive learning, allows the model to gradually shift its focus from commonality to the crucial aspects of divergence and variety, leading to superior performance and higher-quality pseudo-labels in USVI-ReID.

Progressive Learning#

Progressive learning, in the context of unsupervised visible-infrared person re-identification (USVI-ReID), is a crucial strategy to gradually shift the model’s focus from commonality to divergence and variety. Early stages of USVI-ReID models primarily learn shared features (commonality) from visible and infrared images to improve initial clustering accuracy. However, this can lead to unreliable pseudo-labels and suboptimal performance. The progressive approach introduces hard and dynamic prototypes at later stages. Hard prototypes emphasize divergence by focusing on samples furthest from cluster centers, while dynamic prototypes, selected randomly from clusters, promote variety by encouraging the model to capture intra-class differences. This progressive strategy prevents premature overemphasis on commonality which leads to better cluster stability and ultimately, enhanced performance in matching individuals across modalities. It’s a refinement that addresses limitations inherent in simpler contrastive learning methods by explicitly incorporating and managing the subtle interplay between shared and distinct features across the different modalities.

Future Directions#

Future research directions for unsupervised visible-infrared person re-identification (USVI-ReID) could involve exploring alternative clustering methods beyond DBSCAN to handle extremely large-scale datasets more effectively. Hierarchical clustering or other scalable techniques could significantly improve performance. Another key area would be developing more robust and reliable methods for generating pseudo-labels, potentially using techniques that incorporate both global and local context. Improving the handling of hard samples and outliers is also crucial. Methods focusing on uncertainty estimation or self-training could enhance the quality of pseudo-labels. Finally, research could explore advanced cross-modality alignment strategies. This includes investigating more sophisticated feature fusion techniques or exploring novel representation learning methods designed specifically for the challenges of aligning visible and infrared data. Attention mechanisms or transformer-based architectures could be beneficial in this context. Further, research should systematically investigate the impact of various hyperparameters and their optimal settings on the proposed methodology.

More visual insights#

More on figures

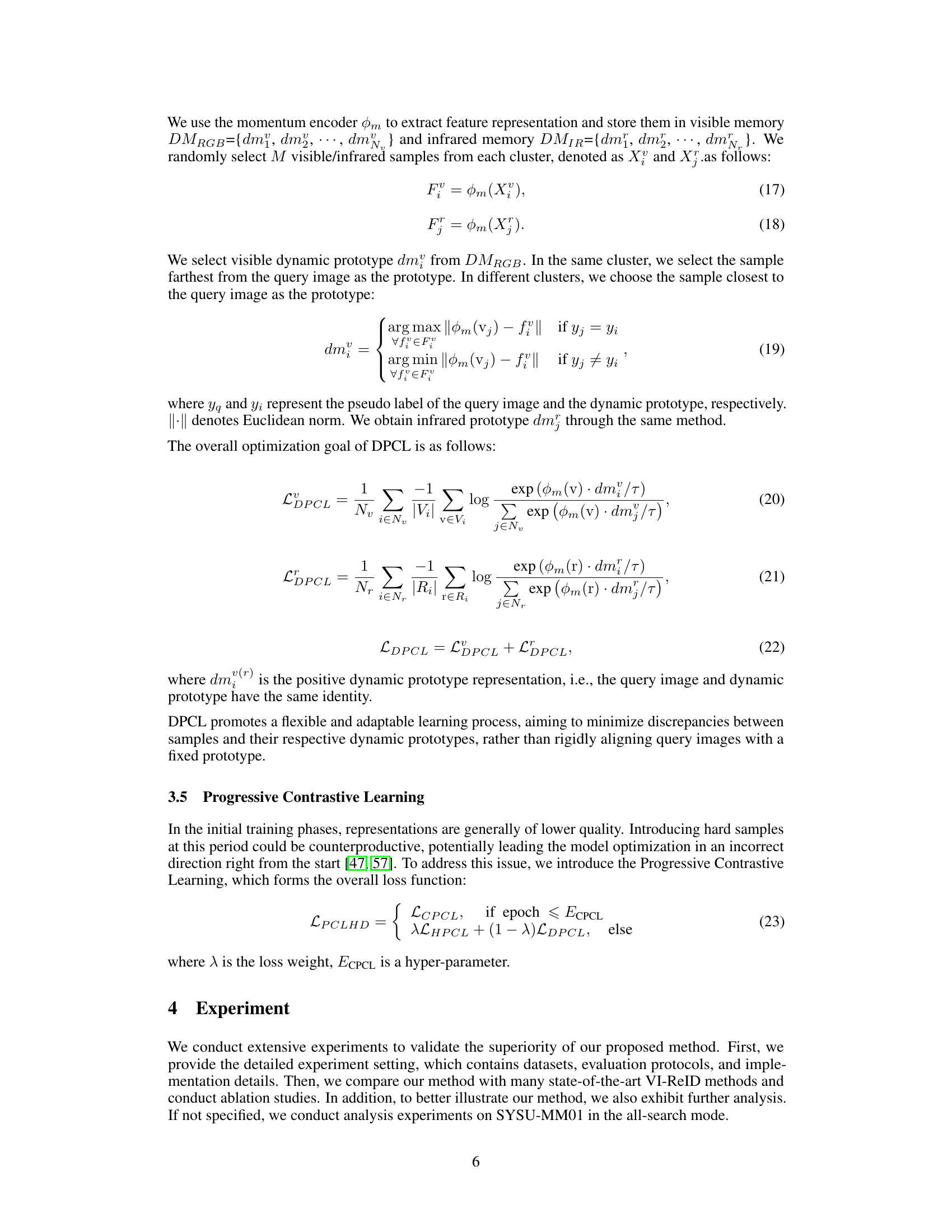

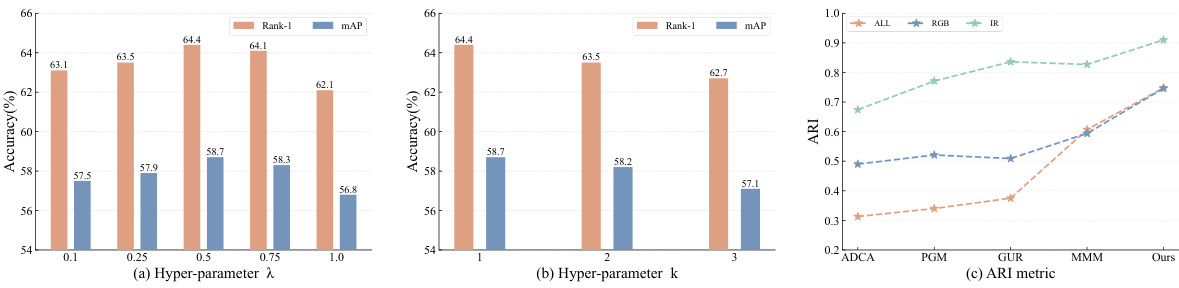

This figure presents the ablation study results to validate the impact of different hyperparameters and components in the proposed PCLHD method. Panel (a) shows the effect of varying the hyperparameter λ (weighting parameter balancing Hard and Dynamic Prototype Contrastive Learning loss functions) on Rank-1 accuracy and mAP. Panel (b) illustrates the influence of changing hyperparameter k (number of hard samples) on model performance. Panel (c) compares the Adjusted Rand Index (ARI) metric, measuring clustering quality, of the PCLHD method against several other USVI-ReID methods.

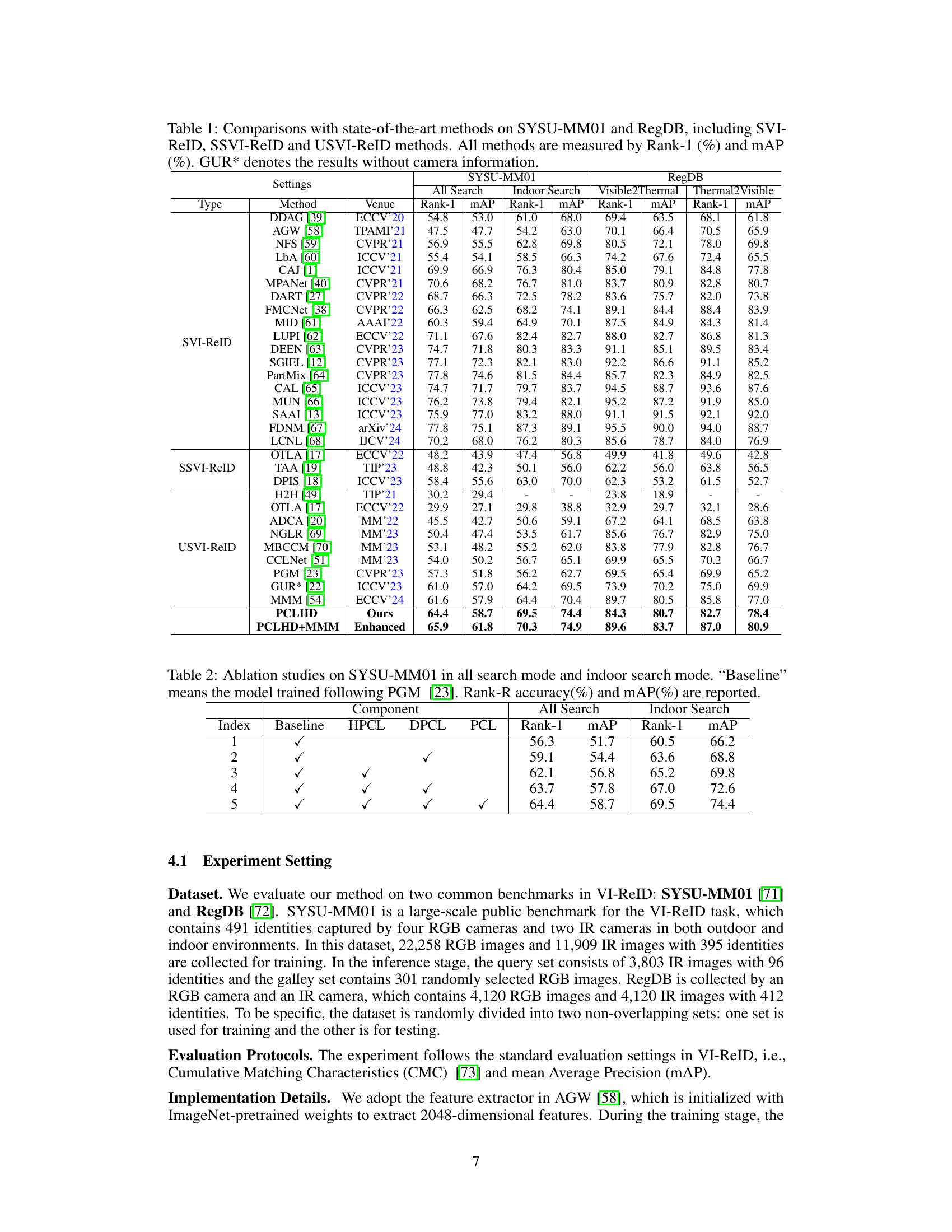

This figure visualizes the t-SNE embeddings of visible and infrared features for 10 randomly selected identities. Different colors represent different identities. Circles represent visible features while pentagrams represent infrared features. The visualization aims to show how well the proposed PCLHD method clusters samples of the same identity compared to a baseline method. Well-clustered samples indicate effective feature extraction and identity discrimination.

Full paper#