TL;DR#

Diffusion models produce impressive results but generate slowly, because sampling requires many iterative steps along curved trajectories. Existing acceleration methods often either degrade sampling quality or rely on unstable training procedures such as adversarial training. An efficient way to speed up sampling without sacrificing quality is therefore needed.

PeRFlow addresses these challenges with a piecewise reflow operation that straightens the sampling trajectories. By dividing the sampling process into several time windows and straightening the trajectory within each one, PeRFlow avoids the cost of simulating entire trajectories during training. The result is fast, high-quality image generation that is compatible with a wide range of diffusion model pipelines. PeRFlow outperforms existing methods in FID, image quality, and generation diversity, and its plug-and-play design makes it easy to integrate into different workflows.

Key Takeaways#

Why does it matter?#

This paper is relevant to researchers working on diffusion models because it presents PeRFlow, a method that substantially accelerates generation while maintaining high-quality results. Its plug-and-play compatibility makes it easy to adopt across diffusion model workflows, opening up possibilities for future research and applications in diverse areas.

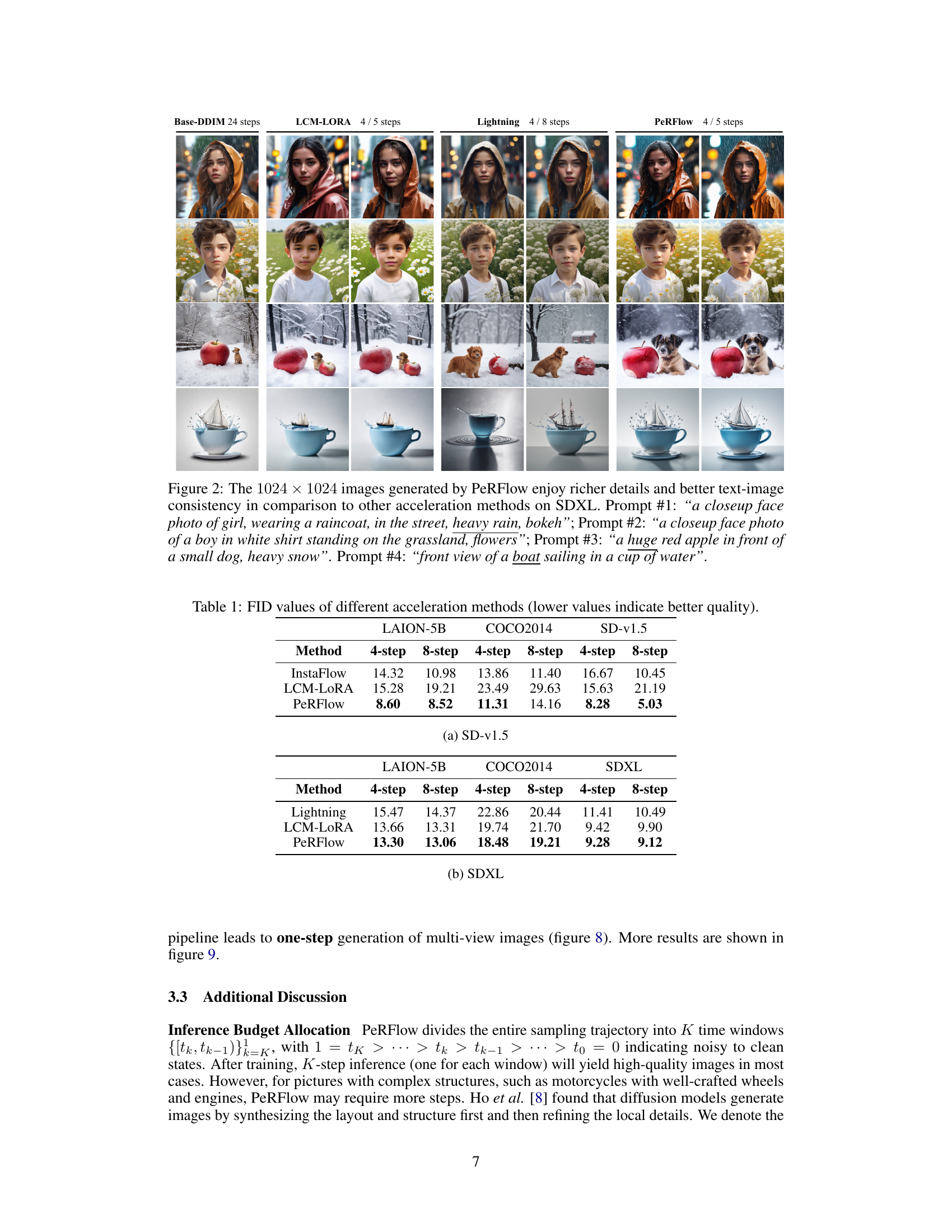

Visual Insights#

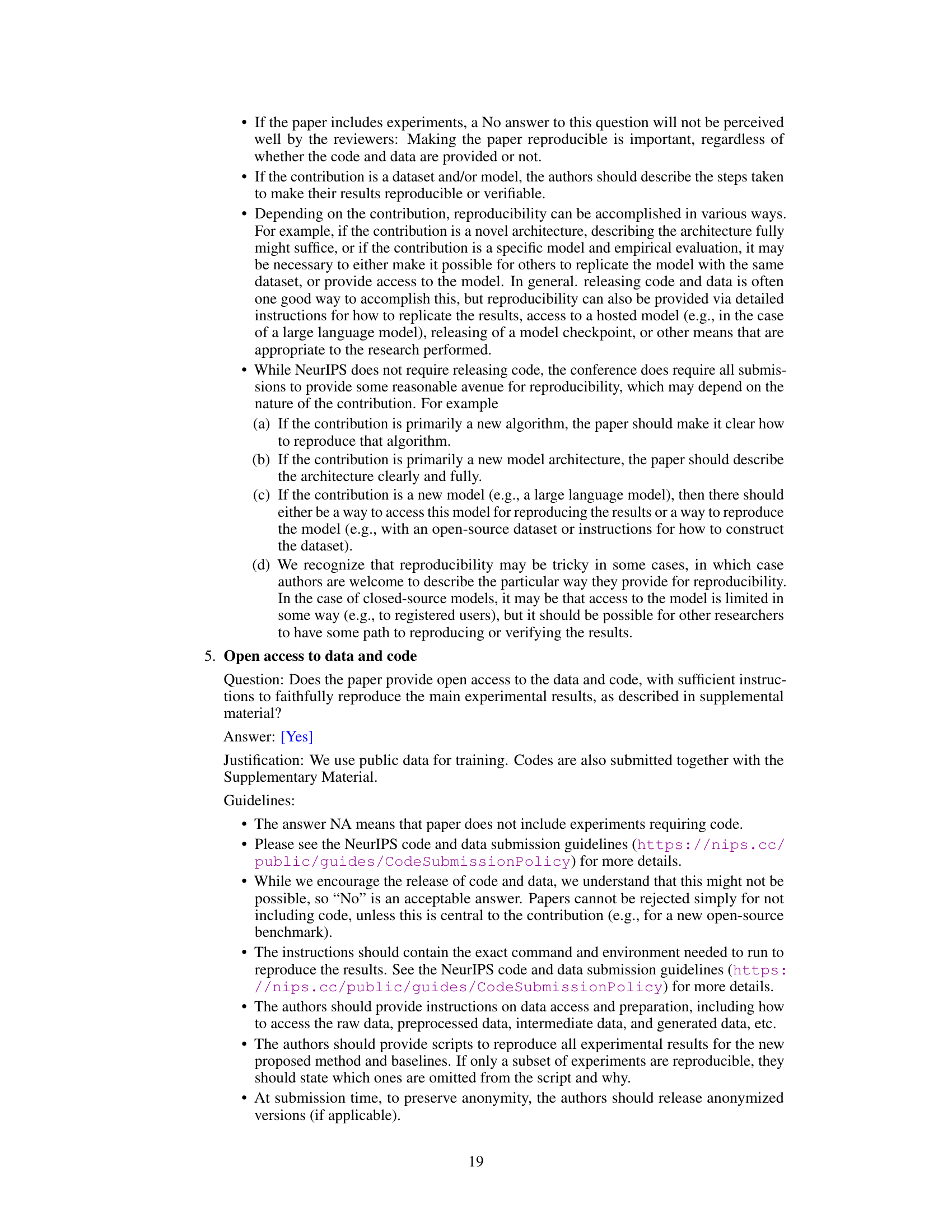

This figure illustrates the core idea of PeRFlow, a method to accelerate diffusion models. It shows how the original probability flow, whose curved trajectories require many sampling steps, is divided into several time windows. Within each window, the ‘reflow’ operation straightens the trajectories, producing a piecewise linear flow that needs far fewer sampling steps. This divide-and-conquer approach simplifies the sampling process and accelerates generation.

Figure 1: Our few-step generator PeRFlow is trained by a divide-and-conquer strategy. We divide the ODE trajectories into several intervals and perform reflow in each time window to straighten the sampling trajectories.

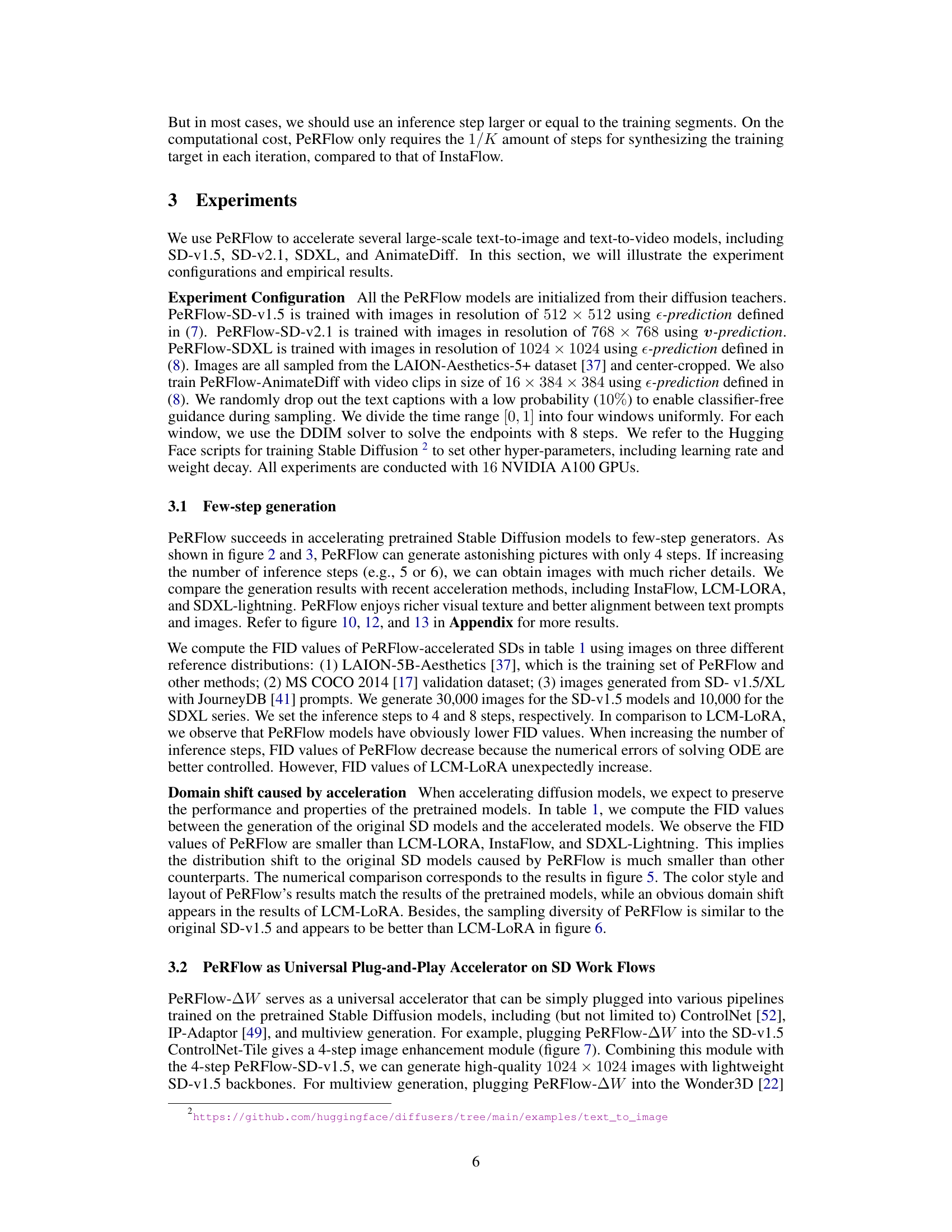

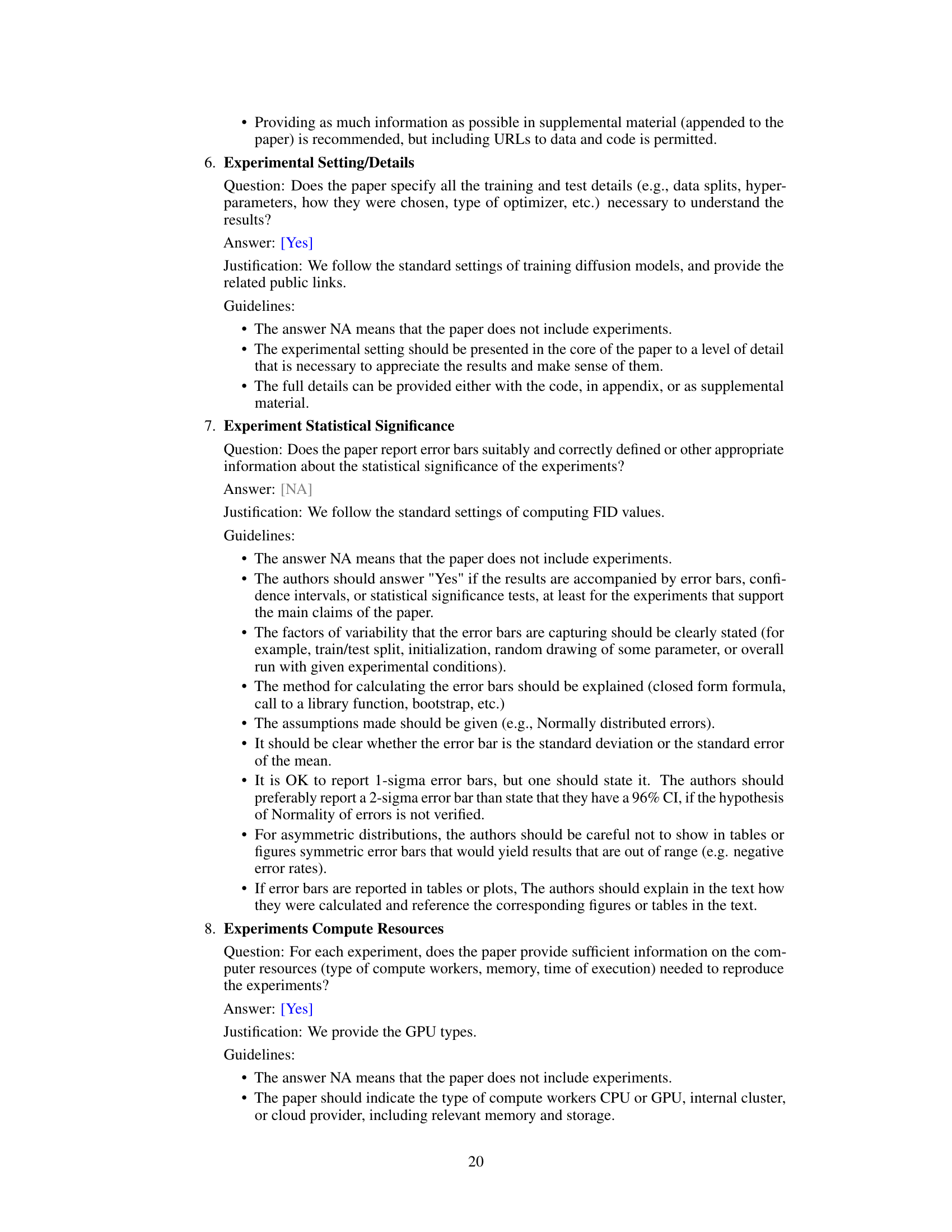

This table compares the Fréchet Inception Distance (FID) of several acceleration methods, including InstaFlow, LCM-LoRA, and PeRFlow, against three reference sets: LAION-5B, COCO2014, and images generated by SD-v1.5. Lower FID indicates that the generated images are statistically closer to the reference images. The table reports FID for both 4-step and 8-step generation, showing that quality improves with more steps and that PeRFlow outperforms the other acceleration methods.

Table 1: FID values of different acceleration methods (lower values indicate better quality).
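FID, the metric in Table 1, is the Fréchet distance between two Gaussians fitted to image embeddings of the generated and reference sets: the squared distance between their means plus Tr(S1 + S2 - 2 (S1 S2)^(1/2)) for their covariances S1 and S2. The sketch below computes this from arbitrary feature arrays with NumPy. It is illustrative only: standard FID uses 2048-dimensional Inception-v3 pooling features, and the feature extractor, sample counts, and preprocessing behind Table 1 are not given here. The function names are hypothetical.

```python
import numpy as np


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Frechet distance between N(mu1, sigma1) and N(mu2, sigma2):
    ||mu1 - mu2||^2 + Tr(sigma1 + sigma2 - 2 (sigma1 sigma2)^(1/2))."""
    # Tr((sigma1 sigma2)^(1/2)) equals the sum of square roots of the eigenvalues
    # of the symmetric PSD matrix sigma1^(1/2) sigma2 sigma1^(1/2), which avoids
    # taking a general (non-symmetric) matrix square root.
    w, V = np.linalg.eigh(sigma1)
    s1_half = (V * np.sqrt(np.clip(w, 0.0, None))) @ V.T
    eig = np.clip(np.linalg.eigvalsh(s1_half @ sigma2 @ s1_half), 0.0, None)
    diff = mu1 - mu2
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * np.sqrt(eig).sum())


def fid_from_features(feats_a, feats_b):
    # feats_*: (n_samples, dim) arrays of image embeddings (extractor not specified here).
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    return frechet_distance(mu_a, cov_a, mu_b, cov_b)


# Toy usage on random "embeddings": a mean shift increases the distance.
rng = np.random.default_rng(0)
real = rng.standard_normal((1000, 8))
fake = rng.standard_normal((1000, 8)) + 0.5
print(fid_from_features(real, fake))
```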

In-depth insights#

Piecewise Flow#

Piecewise flow methods offer a compelling way to improve the efficiency of generative models, particularly diffusion models. Dividing a complex, continuous flow trajectory into shorter segments and making each segment approximately linear simplifies sampling: a straight segment can be traversed in a single step, so the number of inference steps, and with it the computational cost, drops substantially. A core advantage is that pretrained diffusion models can be reused, with dedicated parameterizations inheriting their knowledge; this speeds up convergence and improves the transfer of learned knowledge. Challenges remain, however. The number of segments trades computational efficiency against approximation accuracy, and the best segmentation may depend on the specific model and data modality. Approximating a continuous flow with a piecewise linear one also introduces numerical error that must be kept under control.
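To make the piecewise idea concrete, the sketch below uses a toy 2-D rotation field as a hypothetical stand-in for a pretrained model's curved probability-flow ODE, split into K = 4 equal time windows (here t = 0 is noise and t = 1 is data, an assumed convention). A naive sampler takes one Euler step per window on the curved field and drifts off the true trajectory. If each window is straightened, so that the velocity inside a window is the constant chord (z_end - z_start) / (t_end - t_start), one step per window lands on the window endpoints. The chord is computed from the exact toy flow purely for illustration; in a piecewise reflow model it plays the role of the target the student learns to predict.

```python
import numpy as np

OMEGA = np.pi / 2  # toy angular speed: trajectories sweep a quarter circle over t in [0, 1]


def teacher_velocity(x, t):
    # Hypothetical curved "teacher" field: rotation about the origin, so exact
    # trajectories are circular arcs rather than straight lines.
    return OMEGA * np.array([-x[1], x[0]])


def teacher_flow(x, t0, t1):
    # Exact solution of the toy ODE from t0 to t1: rotate x by OMEGA * (t1 - t0).
    a = OMEGA * (t1 - t0)
    rot = np.array([[np.cos(a), -np.sin(a)], [np.sin(a), np.cos(a)]])
    return rot @ x


def euler(v, x, ts):
    # Naive few-step sampler: one Euler step per interval of the grid ts.
    for t0, t1 in zip(ts[:-1], ts[1:]):
        x = x + (t1 - t0) * v(x, t0)
    return x


def piecewise_straight(x, ts):
    # One step per window using the chord velocity (z_end - z_start) / (t1 - t0),
    # i.e. what an ideally straightened flow would output at each window start.
    # Here the chord is read off the exact toy flow for illustration.
    for t0, t1 in zip(ts[:-1], ts[1:]):
        chord_v = (teacher_flow(x, t0, t1) - x) / (t1 - t0)
        x = x + (t1 - t0) * chord_v
    return x


K = 4
ts = np.linspace(0.0, 1.0, K + 1)           # window boundaries; t = 0 noise, t = 1 data (assumed)
x_noise = np.array([1.0, 0.0])
target = teacher_flow(x_noise, 0.0, 1.0)    # exact endpoint, approximately (0, 1)
naive = euler(teacher_velocity, x_noise, ts)  # K Euler steps on the curved field
straight = piecewise_straight(x_noise, ts)    # K steps on straightened windows
print(np.linalg.norm(naive - target), np.linalg.norm(straight - target))
```

By construction the straightened sampler reproduces the exact window endpoints; the point is that K velocity evaluations suffice once trajectories are straight within each window, whereas the same K steps on the curved field accumulate visible error.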

Reflow Training#

Reflow training, in the context of diffusion models, is an approach to accelerating sampling. It straightens the curved trajectories of the original diffusion process through piecewise linearization: the sampling process is divided into smaller time windows, and a reflow operation is applied within each window so that trajectories become nearly linear and can be traversed in a few steps. Its key advantage is that it avoids generating and storing a large synthetic dataset, a costly and time-consuming step in other reflow-style training, because the pretrained model only needs to be simulated over one short window at a time. This makes training more efficient and scalable while still yielding high-quality samples in far fewer steps. However, its effectiveness depends on the number and size of the time windows, which may require experimentation to balance speed against generation quality. The parameterization chosen for the reflow model also affects the results and its compatibility with pretrained diffusion models.
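The sketch below illustrates how one batch of piecewise-reflow training targets could be assembled, under loudly stated assumptions: a toy rotation field stands in for the pretrained teacher, t = 0 is noise and t = 1 is data, and the window-start state is obtained by mixing real data with noise along a rectified-flow-style path. For each sample it picks a random window, solves the teacher ODE across that window only (so no full-trajectory synthetic dataset is needed), and returns a point on the straight chord between the window endpoints together with the constant chord velocity as the regression target. All names are hypothetical; this is not the paper's implementation.

```python
import numpy as np

OMEGA = np.pi / 2


def teacher_velocity(x, t):
    # Hypothetical stand-in for a pretrained model's probability-flow velocity:
    # a rotation field on 2-D points, batched over rows of x (t is unused).
    return OMEGA * np.stack([-x[:, 1], x[:, 0]], axis=1)


def solve_teacher(x, t0, t1, n_steps=100):
    # Fine-grained Euler solve over ONE window only (t0 -> t1), which is far
    # cheaper than simulating the whole trajectory from noise to data.
    dt = (t1 - t0) / n_steps
    for i in range(n_steps):
        x = x + dt * teacher_velocity(x, t0 + i * dt)
    return x


def make_reflow_batch(x_data, K, rng):
    """Build one batch of piecewise-reflow targets (illustrative sketch).

    Assumed conventions: t = 0 is noise, t = 1 is data, and the marginal at
    time t is t * data + (1 - t) * noise (a rectified-flow-style path).
    """
    n = x_data.shape[0]
    ts = np.linspace(0.0, 1.0, K + 1)          # K equal time windows
    k = rng.integers(0, K, size=n)             # one random window per sample
    t0, t1 = ts[k][:, None], ts[k + 1][:, None]
    noise = rng.standard_normal(x_data.shape)
    z0 = t0 * x_data + (1.0 - t0) * noise      # window start from perturbed real data
    z1 = solve_teacher(z0, t0, t1)             # window end from the teacher ODE
    s = rng.uniform(size=(n, 1))               # relative position inside the window
    t = t0 + s * (t1 - t0)
    x_t = (1.0 - s) * z0 + s * z1              # point on the straight chord
    v_target = (z1 - z0) / (t1 - t0)           # constant chord velocity
    return x_t, t, v_target, z0, z1


def reflow_loss(student, x_t, t, v_target):
    # Mean squared error between the student's predicted velocity and the chord.
    return float(np.mean((student(x_t, t) - v_target) ** 2))


# Toy usage: loss of a trivial "student" that always predicts zero velocity.
rng = np.random.default_rng(0)
x_t, t, v_target, z0, z1 = make_reflow_batch(rng.standard_normal((8, 2)), K=4, rng=rng)
print(reflow_loss(lambda x, tt: np.zeros_like(x), x_t, t, v_target))
```

In practice the student would be a neural network, presumably initialized from the pretrained model, trained by minimizing this loss with gradient descent; the NumPy version only shows the data flow.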

Universal Accelerator#

The concept of a “universal accelerator” for diffusion models refers to a method or module that substantially speeds up generation across diverse models and applications. This implies a degree of model-agnosticism: the acceleration technique is not tied to a specific architecture or checkpoint. The key advantage is broad applicability, accelerating many workflows with minimal adjustment. Achieving this likely requires designing around the mathematical structure shared by diffusion processes rather than model-specific details. A successful universal accelerator must preserve, or even improve, image quality and diversity while sharply reducing computational cost. If it does, it could make high-quality image generation more accessible across domains and reduce the energy cost of inference.
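The text does not spell out how a model-agnostic plug-in would be delivered. One simple mechanism, assumed here for illustration and similar in spirit to merging LoRA weights, is to ship the accelerator as a weight difference between an accelerated checkpoint and its base model, then add that difference to a customized checkpoint fine-tuned from the same base. The function name and the toy parameter names are hypothetical, and real checkpoints would hold framework tensors rather than NumPy arrays.

```python
from typing import Dict

import numpy as np

StateDict = Dict[str, np.ndarray]


def merge_accelerator_delta(custom: StateDict, base: StateDict, accelerated: StateDict) -> StateDict:
    """Transfer an accelerator to a customized model by adding (accelerated - base).

    Assumes all three checkpoints share parameter names and shapes; parameters
    the accelerator does not cover are copied unchanged.
    """
    merged: StateDict = {}
    for name, w in custom.items():
        if name in base and name in accelerated:
            if w.shape != base[name].shape or w.shape != accelerated[name].shape:
                raise ValueError(f"shape mismatch for parameter {name!r}")
            merged[name] = w + (accelerated[name] - base[name])
        else:
            merged[name] = w.copy()
    return merged


# Toy usage with made-up two-element "weights".
base = {"unet.w": np.array([1.0, 2.0])}
accelerated = {"unet.w": np.array([1.5, 2.0])}
custom = {"unet.w": np.array([3.0, 3.0]), "extra.b": np.array([0.0])}
print(merge_accelerator_delta(custom, base, accelerated))
```

The design choice here is that the customization (custom - base) and the acceleration (accelerated - base) are treated as roughly additive; whether that holds well for a given model is an empirical question.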

Few-Step Sampling#

The concept of “few-step sampling” in diffusion models addresses the computational inefficiency of generating high-quality samples. Traditional samplers need many iterative steps to reverse the diffusion process, which makes generation expensive. Few-step sampling aims to cut the number of steps drastically while achieving comparable or even better results. Approaches include distilling pretrained diffusion models into faster few-step models, modifying the sampling process itself (e.g., with advanced ODE solvers or piecewise linear approximations), and training strategies designed for efficient knowledge transfer. The central challenge is to preserve sample quality and diversity with as few steps as possible, which requires capturing the essential dynamics of the diffusion process in a handful of iterations. Progress here is key to bringing diffusion models to resource-constrained environments and real-time applications.
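Why few steps are hard on curved trajectories can be seen with a one-dimensional toy ODE. The sketch below integrates dx/dt = -x, whose velocity changes along the path, with fixed-step Euler and compares the result with the exact value exp(-1); the error shrinks only as the number of steps grows. A constant-velocity (straight) trajectory is integrated exactly in a single step, which is the property that trajectory-straightening methods exploit. Both ODEs are illustrative choices, not taken from the paper.

```python
import math


def euler(v, x0, n_steps, t0=0.0, t1=1.0):
    # Fixed-step Euler integration of dx/dt = v(x, t) from t0 to t1.
    h = (t1 - t0) / n_steps
    x, t = x0, t0
    for _ in range(n_steps):
        x = x + h * v(x, t)
        t += h
    return x


def curved(x, t):
    # Velocity changes along the path: dx/dt = -x, exact solution x0 * exp(-t).
    return -x


def straight(x, t):
    # Constant velocity: the trajectory is the straight line x0 - 0.6 * t.
    return -0.6


exact_curved = math.exp(-1.0)  # x(1) for x0 = 1
exact_straight = 1.0 - 0.6     # x(1) for x0 = 1

errors = {n: abs(euler(curved, 1.0, n) - exact_curved) for n in (1, 2, 4, 8, 64)}
print(errors)                                           # error shrinks only as steps grow
print(abs(euler(straight, 1.0, 1) - exact_straight))    # one step is already exact
```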

Future Works#

Future research on the piecewise rectified flow (PeRFlow) method for accelerating diffusion models could focus on several areas. Improving the efficiency of the reflow operation is important: optimization techniques beyond the current divide-and-conquer approach could speed up training and reduce computational cost. It would also be valuable to study how the number of time windows (K) affects performance and to develop adaptive strategies that choose K based on data complexity. Further work on parameterization could improve compatibility with diverse pretrained diffusion models and strengthen knowledge transfer. Finally, applying PeRFlow to new tasks and modalities beyond image generation, such as video or 3D generation, would broaden its impact and test its versatility as a general-purpose acceleration framework.

More visual insights#

More on figures

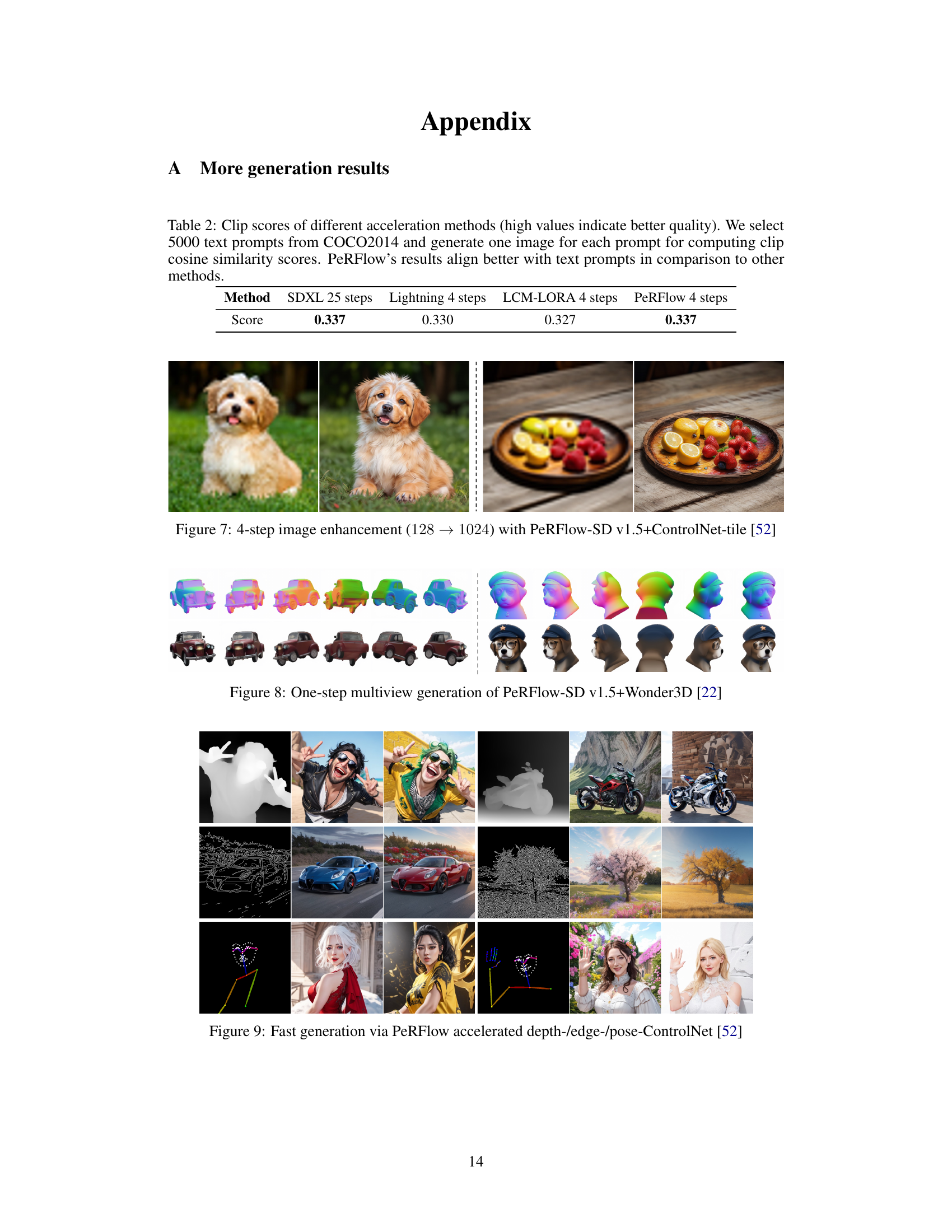

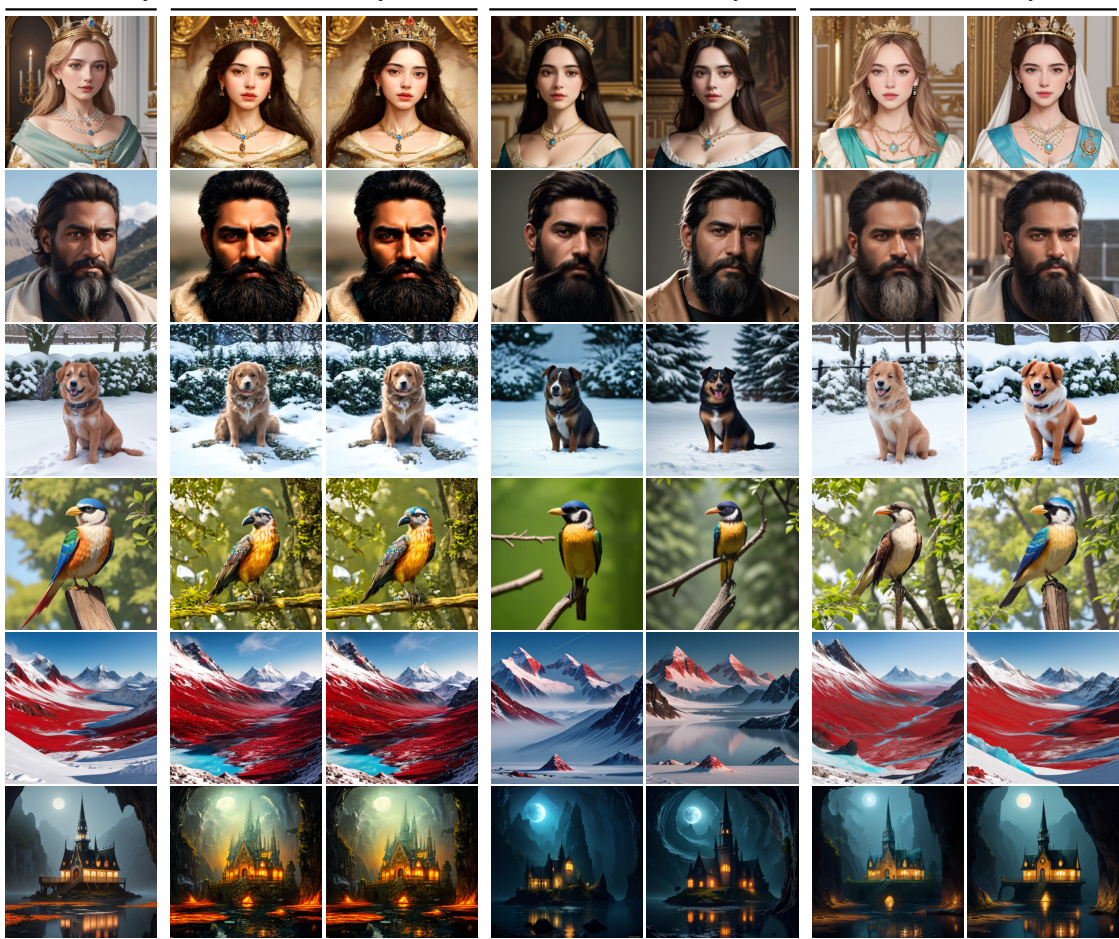

This figure compares images generated by PeRFlow and LCM-LoRA from the same prompts, highlighting that PeRFlow produces samples with more diverse styles and features than LCM-LoRA.

Figure 6: Three random samples from two models with the same prompts. PeRFlow has better sampling diversity compared to LCM-LORA.

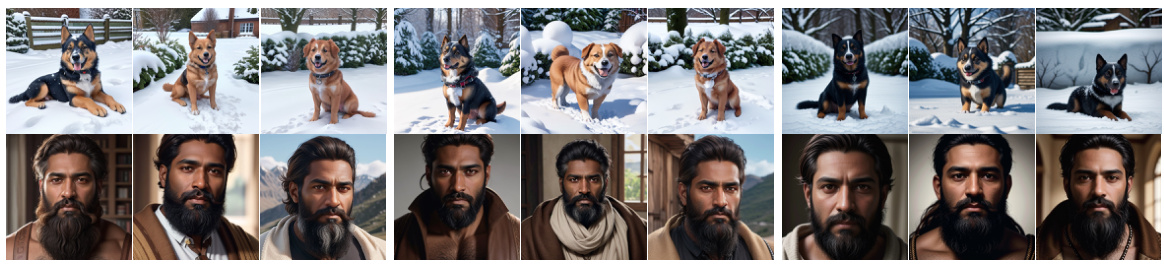

This figure compares images generated by PeRFlow and LCM-LoRA when used with customized Stable Diffusion (SD) models. PeRFlow shows better compatibility: its outputs stay more consistent with the style of the customized models (ArchitectureExterior and Disney PixarCartoon) than those of LCM-LoRA.

Figure 5: PeRFlow has better compatibility with customized SD models compared to LCM-LORA. The top is ArchitectureExterior and the bottom is Disney PixarCartoon.

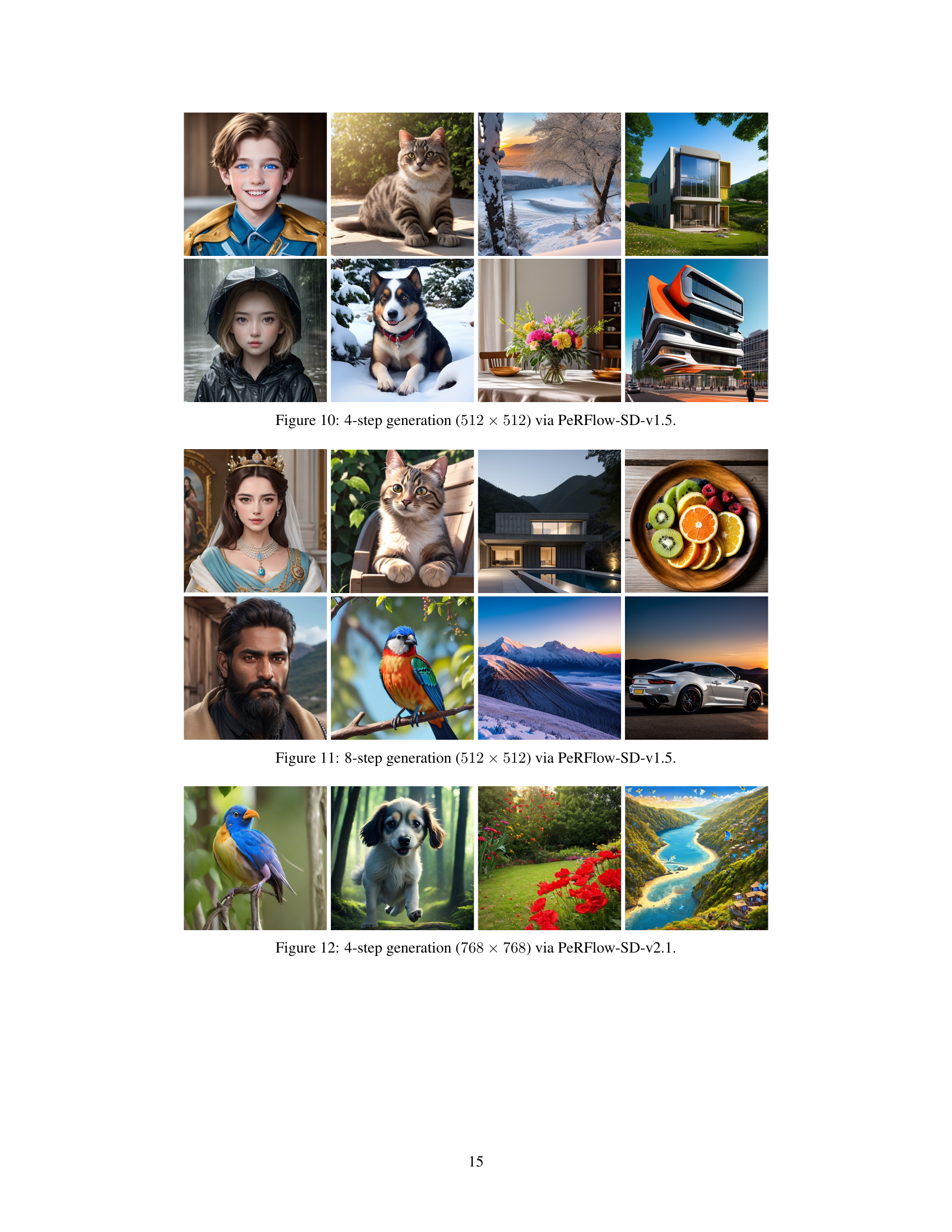

This figure demonstrates one-step multiview image generation with PeRFlow integrated into the Wonder3D pipeline. Acting as a plug-and-play accelerator, PeRFlow produces multiple views of a single object in just one inference step, highlighting the speed gains of the method.

Figure 8: One-step multiview generation of PeRFlow-SD v1.5+Wonder3D [22]

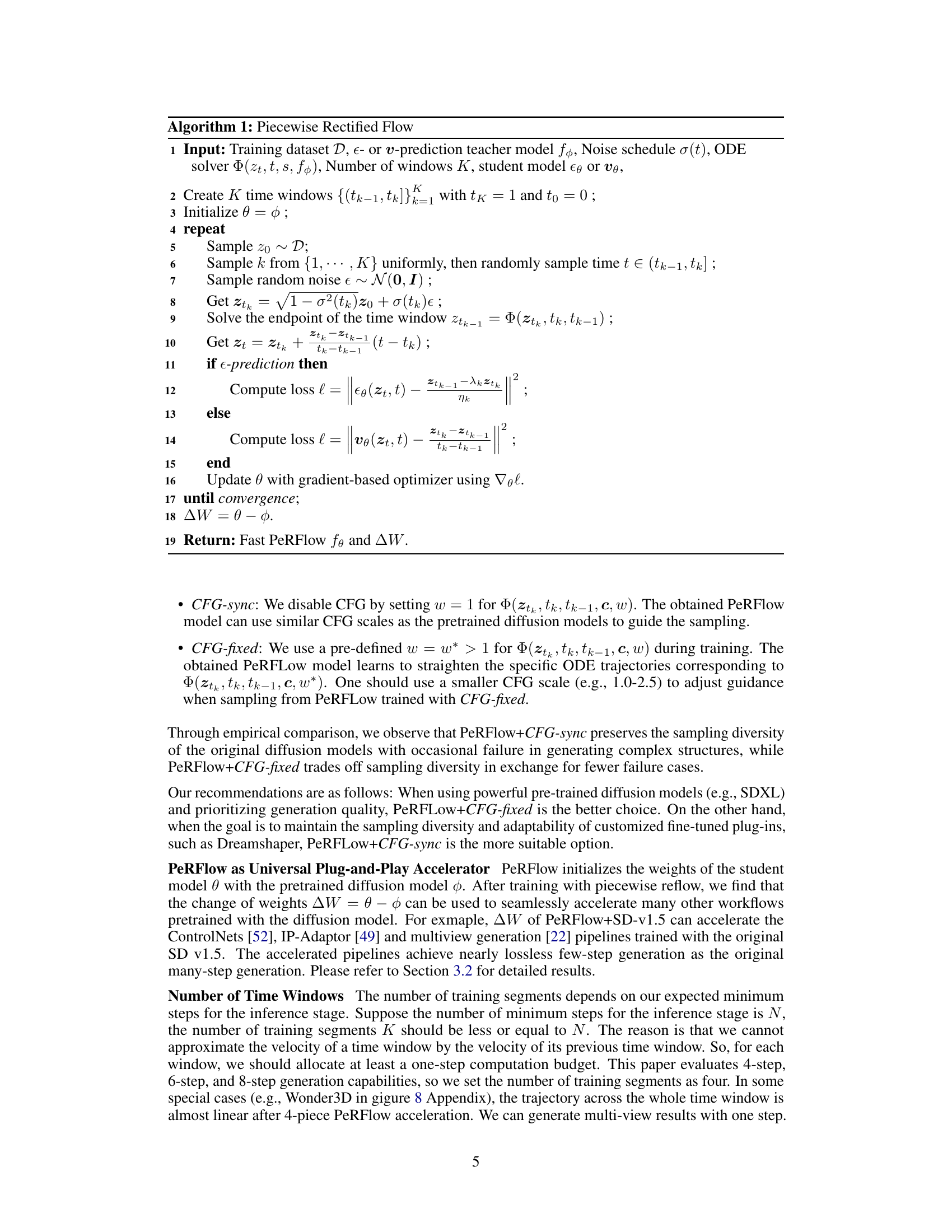

This figure compares the image generation quality of PeRFlow with other state-of-the-art acceleration methods, such as LCM-LoRA and Lightning, on the SDXL model. Across four different prompts, PeRFlow’s results show richer detail and closer alignment with the text prompts.

Figure 2: The 1024 × 1024 images generated by PeRFlow enjoy richer details and better text-image consistency in comparison to other acceleration methods on SDXL. Prompt #1: 'a closeup face photo of girl, wearing a raincoat, in the street, heavy rain, bokeh'; Prompt #2: 'a closeup face photo of a boy in white shirt standing on the grassland, flowers'; Prompt #3: 'a huge red apple in front of a small dog, heavy snow'. Prompt #4: 'front view of a boat sailing in a cup of water'.

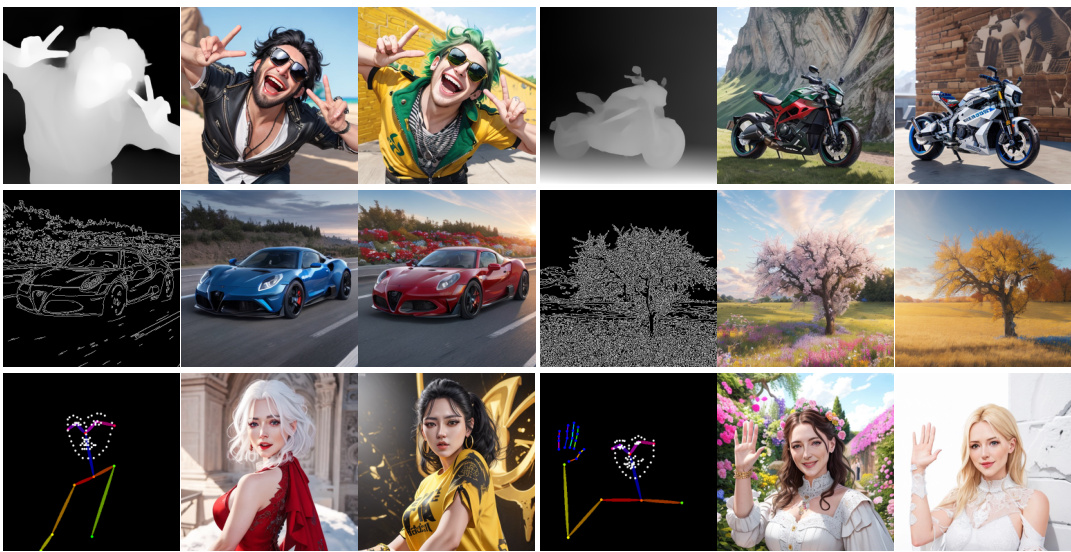

This figure demonstrates fast image generation with PeRFlow-accelerated ControlNet under depth, edge, and pose conditioning, showing that PeRFlow is compatible with ControlNet. The results highlight the quality and speed achieved by incorporating PeRFlow into existing image generation workflows.

Figure 9: Fast generation via PeRFlow accelerated depth-/edge-/pose-ControlNet [52]

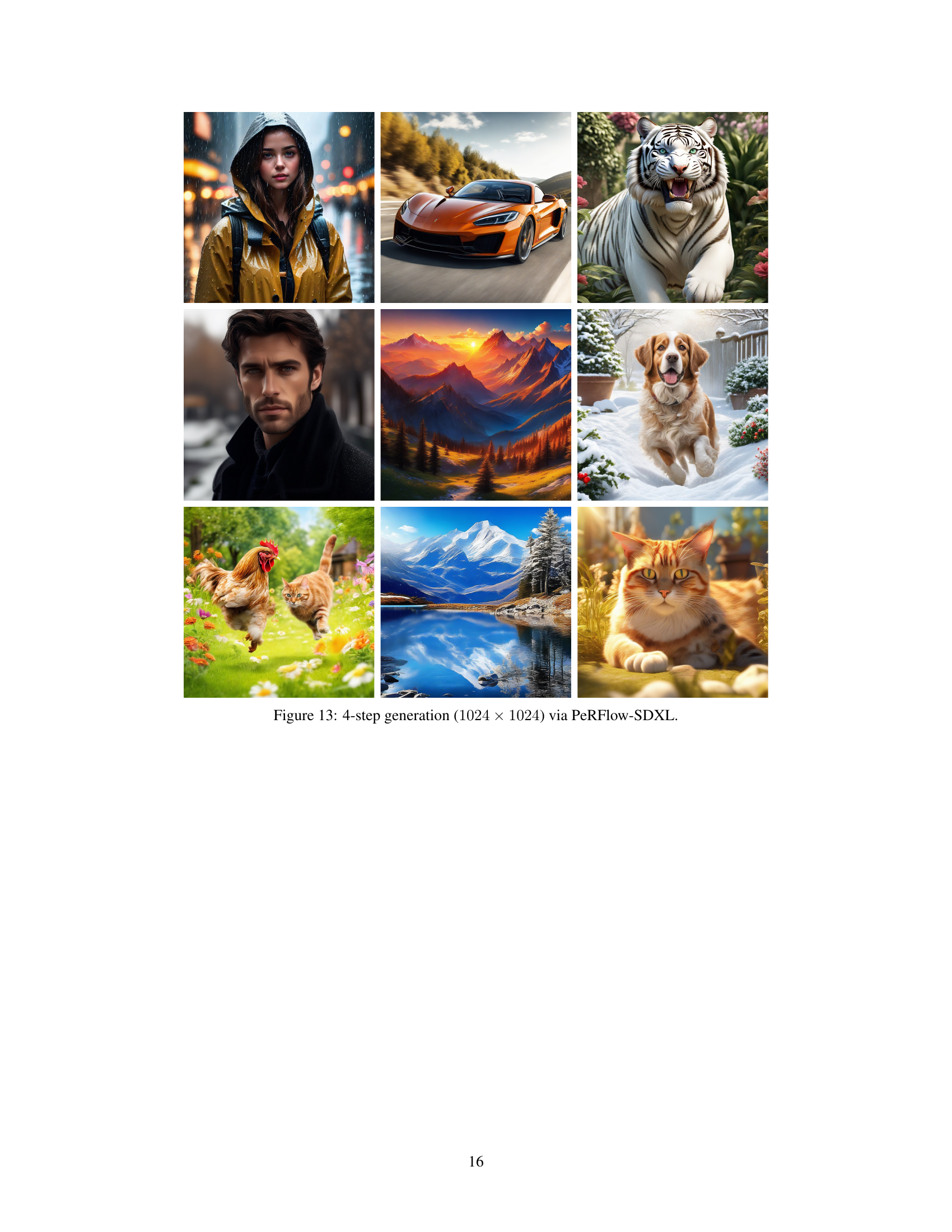

This figure showcases high-quality 1024 × 1024 images generated in only four inference steps by PeRFlow-SDXL, an SDXL model accelerated with PeRFlow. Subjects include a woman, a sports car, a tiger, a man, mountains, a dog, a rooster with a cat, and a lake with mountains. The visual quality highlights PeRFlow’s effectiveness at accelerating high-resolution image generation.

Figure 13: 4-step generation (1024 × 1024) via PeRFlow-SDXL.
