↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Visible-infrared person re-identification (VIReID) is crucial in security monitoring, but its vulnerability to adversarial attacks remains largely unexplored. Existing research on adversarial attacks primarily focuses on single-modality systems, neglecting the unique challenges of VIReID, such as modality differences and the need for robust ranking mechanisms. This creates a significant gap in understanding and improving the security of these systems. The lack of focus on the alignment of adversarial features across different modalities (visible and infrared) also poses a major obstacle.

This paper addresses these issues by introducing a novel feature-level adversarial attack method for VIReID. This method uses universal adversarial perturbations and a frequency-spatial attention module to generate adversarial features that are consistent across modalities. The study also incorporates an auxiliary quadruple adversarial loss to enhance the distinctions between visible and infrared features, further disrupting the system’s ranking. Extensive experiments on SYSU-MM01 and RegDB benchmarks showcase the effectiveness of this approach, demonstrating the vulnerability of existing VIReID systems and highlighting the need for improved security measures.

Key Takeaways#

Why does it matter?#

This paper is critical for researchers in computer vision and security, particularly those working on person re-identification. It highlights a significant vulnerability in visible-infrared person re-identification (VIReID) systems, a technology with widespread applications in security and surveillance. By proposing effective adversarial attacks, the research opens new avenues for developing more robust and secure VIReID systems, advancing the field of model security and improving the reliability of real-world applications.

Visual Insights#

This figure illustrates the security vulnerabilities of Visible-Infrared Person Re-identification (VIReID) systems. It shows two scenarios: (a) where an infrared image of a person with added adversarial noise (indicated by a colorful bar at the bottom) is incorrectly matched to a visible image of a different person, and (b) a visible image with adversarial noise is incorrectly matched to an infrared image of a different person. Correct matches are indicated in green, and incorrect matches (due to adversarial attacks) are indicated in red. This highlights the risk of attackers manipulating images to deceive the VIReID system.

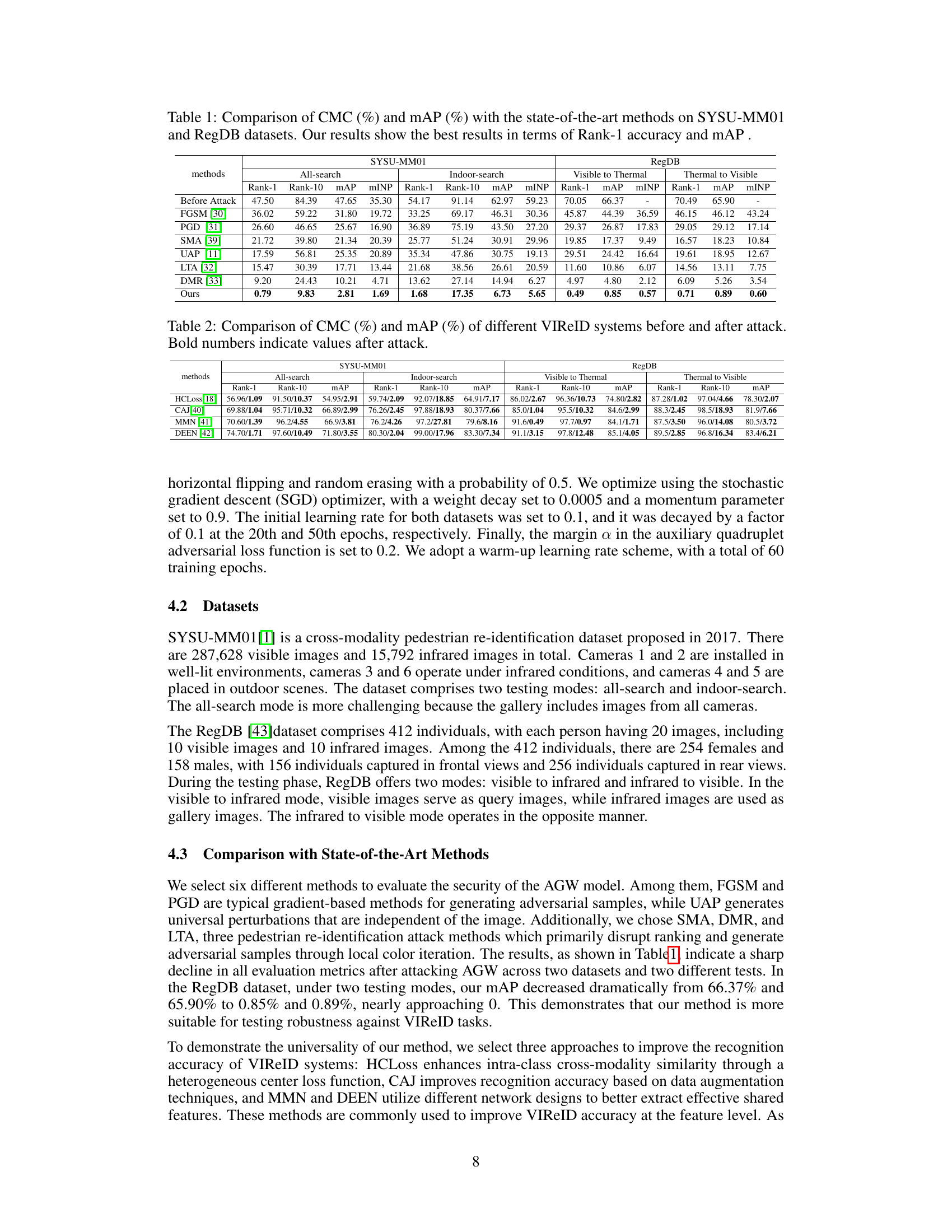

This table compares the performance of the proposed method against other state-of-the-art methods on two benchmark datasets, SYSU-MM01 and RegDB, using two metrics: Cumulative Matching Characteristic (CMC) and mean Average Precision (mAP). It shows results for both ‘all-search’ and ‘indoor-search’ modes on SYSU-MM01, and ‘visible to thermal’ and ’thermal to visible’ settings for RegDB. The table highlights the superiority of the proposed method in terms of Rank-1 accuracy and mAP.

In-depth insights#

VIReID Security Risks#

VIReID systems, while beneficial for security applications, are vulnerable to adversarial attacks. The core risk lies in the ability of malicious actors to manipulate visible or infrared images, causing the system to misidentify individuals or disrupt its ranking process. Feature-level attacks, in particular, are concerning because they can subtly alter image characteristics, making the manipulation difficult to detect with the naked eye. The successful execution of such attacks can lead to serious consequences in security applications, such as false identification of suspects or failure to track criminals. Mitigating these risks requires a multi-pronged approach, including developing more robust VIReID models, implementing defensive mechanisms against adversarial attacks, and exploring the use of more secure image acquisition techniques. Research into the security of VIReID systems is still in its early stages, and more work is needed to fully understand the vulnerabilities and develop effective countermeasures.

Feature-Level Attacks#

Feature-level adversarial attacks offer a potent methodology for evaluating the robustness of visible-infrared person re-identification (VIReID) systems. Unlike attacks manipulating the pixel-level data, feature-level attacks focus on modifying the learned feature representations within the model itself. This approach is particularly relevant to VIReID, due to the inherent modality differences between visible and infrared images which create unique challenges for generating effective adversarial examples. By manipulating features, these attacks aim to disrupt the system’s ranking mechanism, potentially causing mismatches between modalities. Successful feature-level attacks reveal vulnerabilities that traditional pixel-level approaches might miss. Moreover, such attacks can be more generalizable to different VIReID models and environments because they operate on high-level features representing the core data patterns rather than the raw data itself. Understanding these attacks and designing robust defenses against them is crucial for enhancing the security and reliability of VIReID systems in real-world applications where adversarial examples could potentially lead to severe misidentification or system failure.

FSAM and LAQAL#

The proposed approach integrates two key modules: Frequency-Spatial Attention Module (FSAM) and Auxiliary Quadruple Adversarial Loss (LAQAL). FSAM aims to enhance adversarial feature alignment between visible and infrared modalities by unifying frequency and spatial information. This is crucial because the modalities capture different aspects of an image, requiring a method to reconcile their differences for effective adversarial attacks. By combining frequency domain analysis (FFT) with spatial attention mechanisms, FSAM ensures consistency and focuses on essential regional features. LAQAL, on the other hand, amplifies the distinction between modalities, further disrupting the ranking system’s ability to correctly identify individuals. It leverages an auxiliary loss function, combining features from different modalities and stages of the network to enhance modality differences while maintaining intra-class similarity. The synergy between FSAM and LAQAL is powerful: FSAM generates consistent adversarial features, and LAQAL exploits these features to produce incorrect ranking results. This combination successfully disrupts visible-infrared person re-identification systems.

Cross-Modality UAP#

Cross-modality Universal Adversarial Perturbations (UAPs) represent a significant advancement in adversarial attacks against visible-infrared person re-identification (VIReID) systems. Standard UAPs, designed for single-modality scenarios, often fail to generalize effectively across the visible and infrared spectrums due to the inherent differences in image characteristics. Cross-modality UAPs aim to overcome this limitation by generating perturbations that are effective regardless of the input modality. This requires careful consideration of how to align features across modalities, ensuring the attack remains robust to variations in imaging conditions and sensor noise. A key challenge lies in crafting a perturbation that maintains consistency in the shared feature space of the two modalities. The effectiveness of cross-modality UAPs is crucial for assessing the robustness and security of VIReID systems, as it helps evaluate the vulnerability of these systems to real-world adversarial attacks. Research into cross-modality UAPs would focus on methods for generating such perturbations, optimizing their effectiveness, and analyzing their generalizability across different VIReID models and datasets. The results of such research can inform the development of more robust and secure VIReID systems, thereby strengthening their applicability in critical security and surveillance applications.

Future VIReID Research#

Future research in Visible-Infrared Person Re-identification (VIReID) should prioritize addressing robustness against adversarial attacks. Current systems are vulnerable, and feature-level attacks, particularly those exploiting modality differences, require stronger defenses. Developing more effective attention mechanisms that integrate frequency and spatial information consistently across visible and infrared modalities is crucial. Furthermore, research should explore more sophisticated loss functions that better account for cross-modal relationships and ranking challenges. Addressing the scarcity of high-quality, large-scale VIReID datasets with diverse scenarios and environmental conditions is essential for advancing the field. Finally, exploring novel architectures and algorithms that naturally handle modality heterogeneity will improve generalization and accuracy. The ethical implications of VIReID security must also be considered to ensure responsible development and deployment.

More visual insights#

More on figures

This figure illustrates the overall architecture of the proposed method for feature-level adversarial attacks and ranking disruption in Visible-Infrared Person Re-identification (VIReID). It shows a dual-stream ResNet network as the backbone, with universal adversarial perturbation (UAP) added to visible and infrared images. The Frequency-Spatial Attention Module (FSAM) extracts frequency-domain spatial features, and the Auxiliary Quadruple Adversarial Loss (LAQAL) amplifies modality differences to disrupt ranking results. The figure also includes a detailed diagram of the FSAM module, showing the FFT, spatial attention module (SAM), and IFFT processes.

This figure shows the decomposition and reconstruction of visible and infrared images using Fast Fourier Transform (FFT). Subfigure (a) displays the original visible and infrared images of a pedestrian. Subfigure (b) shows the reconstruction using only the amplitude information from the FFT, highlighting the overall brightness and contrast. Subfigure (c) shows the reconstruction using only the phase information from the FFT, emphasizing the structural details and shape of the pedestrian.

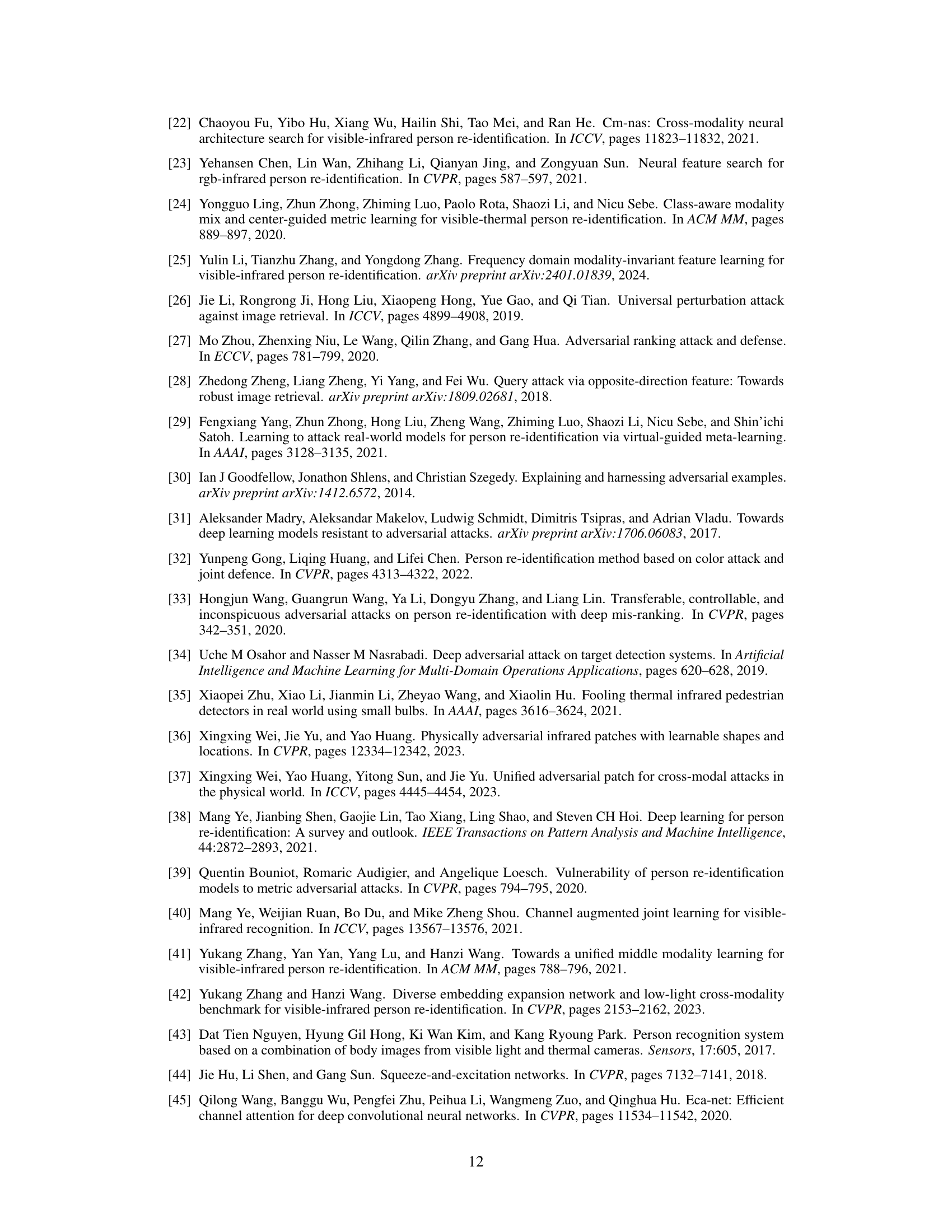

This figure shows the t-SNE visualization of the features before and after the adversarial attack. Each point represents a pedestrian image feature from either the visible or infrared modality. Different colors indicate different identities. Before the attack (left), features from the same identity cluster tightly together, and visible and infrared features are closely related. After the attack (right), the feature clusters are significantly more dispersed, showing how the attack separates features across modalities and within identities. This demonstrates that the attack effectively disrupts the relationship between visible and infrared features, impacting the re-identification performance.

More on tables

This table presents a comparison of Cumulative Matching Characteristic (CMC) and mean Average Precision (mAP) metrics for various Visible-Infrared Person Re-identification (VIReID) systems. It shows the performance of these systems before and after being subjected to an adversarial attack. The bold numbers highlight the performance drop after the attack, demonstrating the vulnerability of these VIReID systems to adversarial attacks.

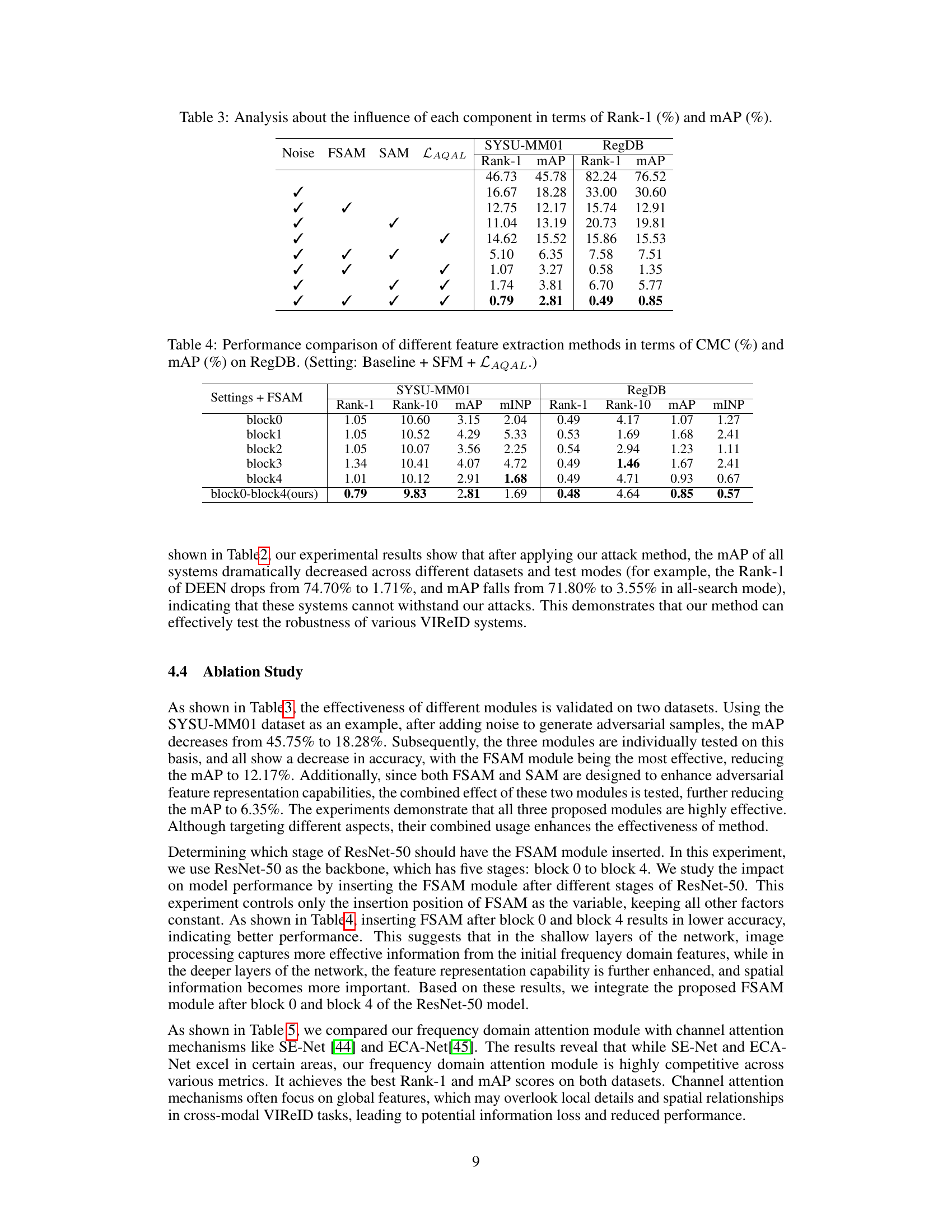

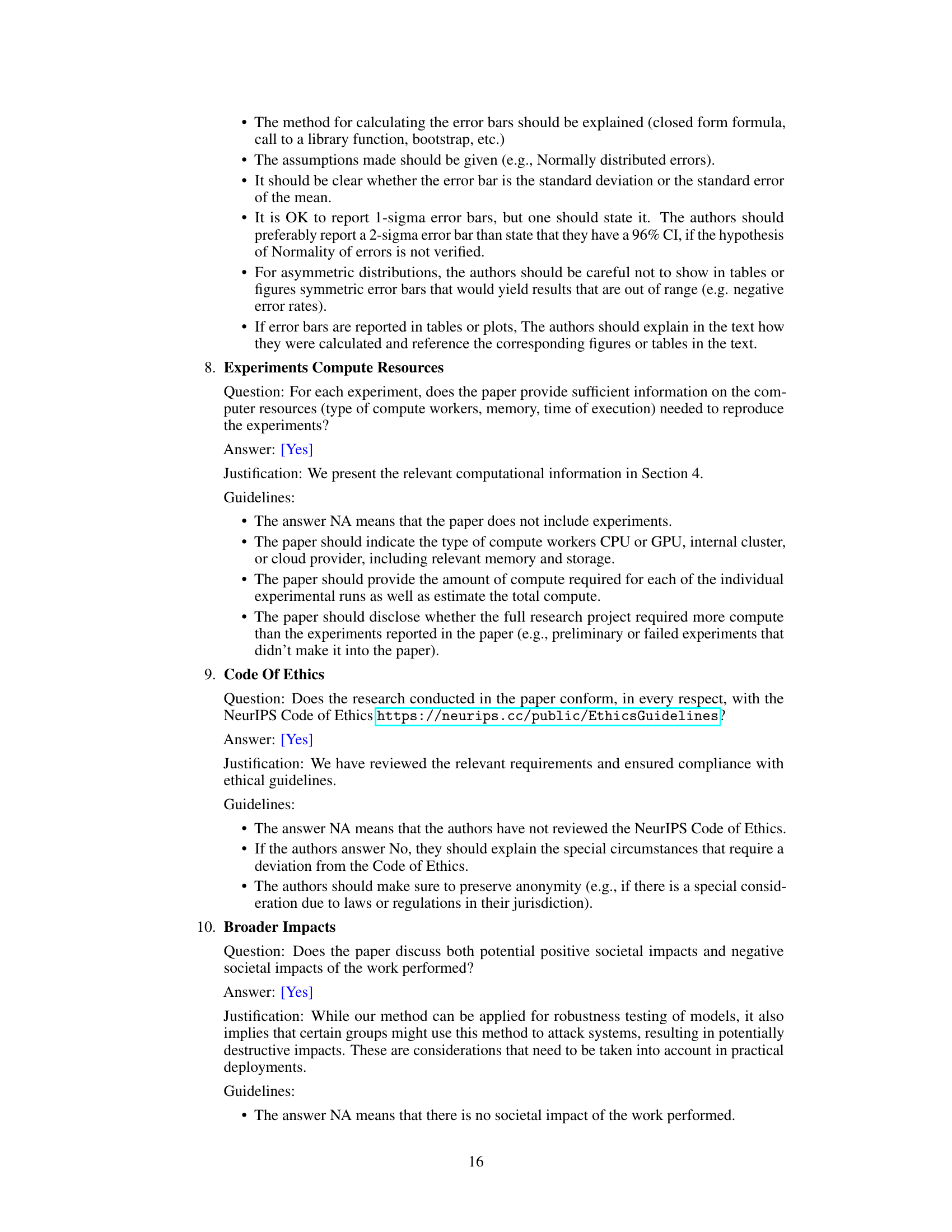

This table presents the ablation study results, showing the impact of each component (noise addition, Frequency-Spatial Attention Module (FSAM), Spatial Attention Module (SAM), and Auxiliary Quadruple Adversarial Loss (LAQAL)) on the performance metrics (Rank-1 accuracy and mean Average Precision (mAP)) of the proposed adversarial attack method on the SYSU-MM01 and RegDB datasets. Each row represents a different combination of components, with a checkmark indicating the inclusion of a particular component. The results show the effectiveness of each component in disrupting the ranking of the VIReID system.

This table presents the performance comparison of different feature extraction methods on the RegDB dataset. The performance is measured in terms of CMC and mAP. The settings used for this comparison include the baseline model, the spatial focusing module (SFM), and the auxiliary quadruple adversarial loss (LAQAL). Different feature extraction methods (block0-block4) are compared to determine which is the most effective. The ‘block0-block4(ours)’ row represents the results obtained using the proposed method.

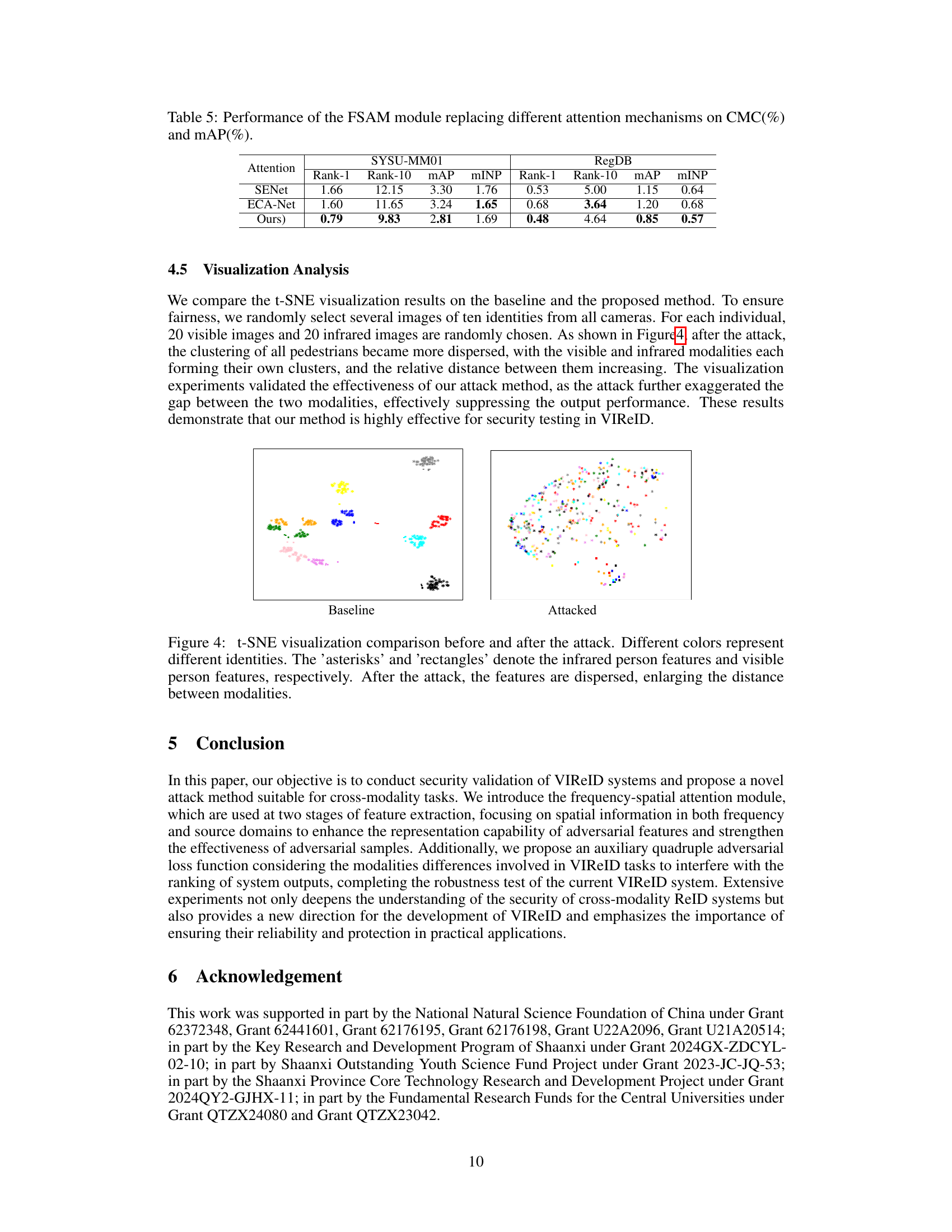

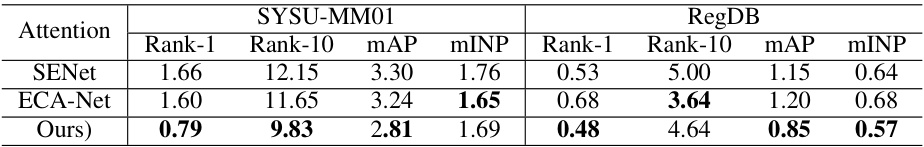

This table presents the comparison of the proposed Frequency-Spatial Attention Module (FSAM) against other attention mechanisms (SENet and ECA-Net). The performance is evaluated using CMC and mAP metrics on SYSU-MM01 and RegDB datasets, demonstrating the effectiveness of FSAM in improving the accuracy of visible-infrared person re-identification.

Full paper#