↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

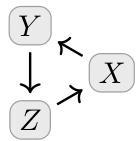

Probabilistic graphical models, while useful, primarily capture conditional independencies. They struggle to effectively represent other important qualitative properties like functional dependencies and causal relationships, particularly within cyclic structures. This leads to limitations in modeling complex real-world systems where such relationships are prevalent.

This research introduces “Qualitative Independent-Mechanism (QIM)-compatibility,” a novel framework for defining compatibility between probability distributions and directed hypergraphs. This approach allows for a richer representation of qualitative relationships, encompassing functional dependencies and causality, even in cyclic graphs. The framework connects to information theory, offering new insights and resolving long-standing conceptual challenges. The authors also propose methods for determining compatibility and explore the connections between causal models, information theory, and the proposed compatibility notion.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working with probabilistic graphical models because it introduces a novel framework for understanding and modeling qualitative aspects of probability distributions beyond standard conditional independence. This framework connects causal modeling, information theory, and independence concepts in a unified way, opening avenues for analyzing complex systems, and resolving long-standing issues in information theory. The proposed QIM-compatibility offers a powerful tool for both theoretical and applied research, enabling more expressive model design and analysis.

Visual Insights#

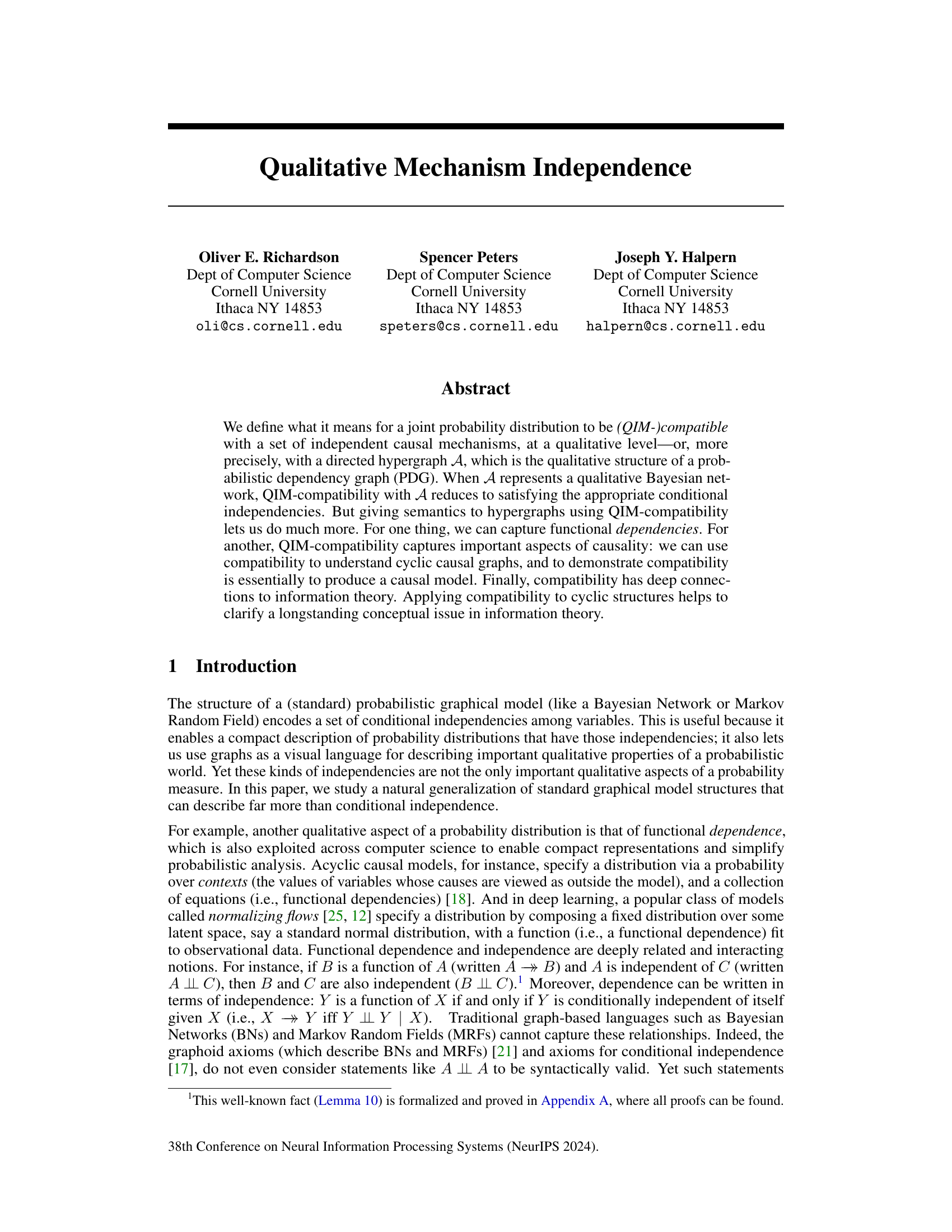

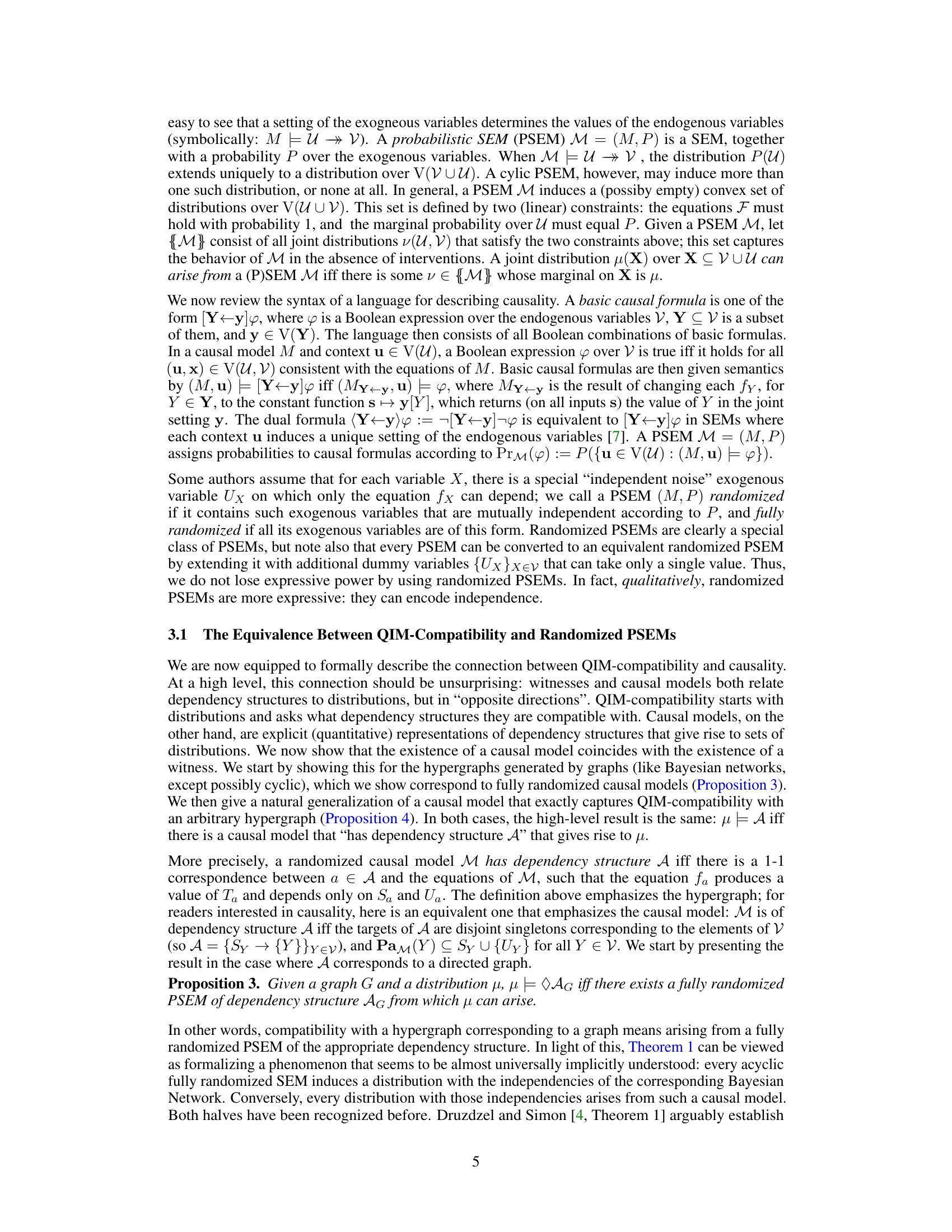

This figure shows the components of the information profile vector Iμ for three variables, X, Y, Z. The components represent different information-theoretic quantities: conditional entropy (H(Y|X,Z), H(Y|X), H(Z|X), H(Z|Y)), mutual information (I(X;Y|Z), I(X;Z|Y), I(Y;Z|X), I(X;Y), I(X;Z), I(Y;Z)), and interaction information I(X;Y;Z). These quantities measure various aspects of independence and dependence between the variables. The figure is referenced in the context of explaining how an arbitrary conjunction of conditional (in)dependencies can be expressed as a constraint Iμ · v ≥ 0, with the appropriate choice of coefficient vector v.

In-depth insights#

QIM-Causality Bridge#

A hypothetical ‘QIM-Causality Bridge’ section in a PDF research paper would likely explore the deep connection between Qualitative Independent Mechanism (QIM) compatibility and causality. It would likely demonstrate how QIM-compatibility, a framework for representing qualitative structures in probability distributions using directed hypergraphs, can be used to understand and model causal relationships. This bridge would likely involve showing how a QIM-compatible distribution implies the existence of a causal model that is consistent with that distribution. A crucial aspect would be establishing an equivalence between QIM-compatibility witnesses and causal models, demonstrating that the existence of a witness implies a causal model exists and vice-versa. Furthermore, the section might discuss how QIM-compatibility extends causal reasoning beyond acyclic graphs, handling cyclic causal structures in a principled way. The exploration of interventions within this framework would be key, showing how QIM-compatibility aligns with Pearl’s do-calculus, providing a probabilistic interpretation of causal interventions. Finally, the bridge might discuss the implications of this connection for various applications of causal inference and probabilistic modeling.

Cyclic Models’ Semantics#

The semantics of cyclic causal models present a significant challenge in causal inference. Traditional methods, often rooted in directed acyclic graphs (DAGs), struggle to directly address cycles. The concept of Qualitative Independent-Mechanism (QIM) compatibility offers a novel approach by focusing on the qualitative structure of independent mechanisms rather than strictly on conditional independencies. This allows for a more nuanced understanding of cyclic structures, representing them as directed hypergraphs. QIM-compatibility focuses on the existence of a causal model explaining the observed distribution, where the model’s structure aligns with the hypergraph. This approach extends beyond conditional independence to encompass functional dependencies and deep connections with information theory. One of the key advantages is the capacity to define compatibility for cyclic models, providing a framework for interpreting probabilities and interactions in scenarios where traditional causal models would break down. The challenge remains in computationally establishing QIM-compatibility, but the information-theoretic connections offer promising avenues for future research.

Witness-Model Equivalence#

The concept of ‘Witness-Model Equivalence’ in a probabilistic graphical model framework centers on the interchangeability between a probabilistic distribution (the witness) and a causal model that generates it. A witness is a probability distribution that satisfies certain conditional independence and functional dependence constraints encoded in a directed hypergraph. A causal model, typically a Structural Equation Model (SEM), explicitly represents the causal relationships among variables through a system of equations. The equivalence lies in the assertion that the existence of a witness for a given hypergraph structure implies the existence of a causal model (and vice-versa) that explains the same probabilistic relationships. This equivalence bridges the gap between qualitative descriptions of probabilistic dependence and quantitative causal explanations. The strength of this equivalence lies in enabling a deeper understanding of causality even in cyclic systems, where standard graphical models struggle. Witness-model equivalence provides a powerful tool for analyzing complex probabilistic structures by relating them to intuitive causal interpretations. However, the mapping between witnesses and models isn’t always unique, introducing complexities in scenarios involving zero-probability events or cyclic causal relationships.

Info-Theoretic Constraints#

The heading ‘Info-Theoretic Constraints’ suggests a section exploring how information theory can mathematically formalize and bound the qualitative relationships captured in a probabilistic graphical model. The authors likely leverage concepts like entropy, mutual information, and conditional independence to define constraints on probability distributions that are compatible with a given model structure. A key insight might involve expressing conditional independencies and functional dependencies as information-theoretic inequalities. This allows the framework to move beyond the limitations of traditional graphical models, particularly in handling cyclic causal structures where standard conditional independence assumptions fail. The core idea is to translate the qualitative structure of causal mechanisms into quantitative information-theoretic constraints that any compatible probability distribution must satisfy. This approach provides a powerful tool for reasoning about complex causal relationships, going beyond standard graphical models in terms of expressiveness and applicability.

Future Research#

The “Future Research” section of a PDF research paper on probabilistic graphical models would ideally delve into several promising avenues. Extending QIM-compatibility to handle continuous variables and more complex relationships beyond functional dependencies is crucial. The current work lays a foundation, but richer semantics are needed. Developing efficient algorithms for determining QIM-compatibility is a significant computational challenge, and a major focus of future work should be to address the scalability issue. Investigating the implications of QIM-compatibility for causal inference in cyclic systems is also vital; the theoretical framework opens exciting opportunities for causal reasoning beyond traditional directed acyclic graphs. Finally, exploring deeper connections to information theory could yield fundamental insights into the nature of information and dependencies in probabilistic settings. This includes investigating the relationship between QIM-compatibility and other information-theoretic measures, and potentially developing novel measures.

More visual insights#

More on figures

This figure shows the information theoretic quantities involved in the information profile of a distribution μ, such as conditional entropy, mutual information, and conditional mutual information. The quantities are represented as areas in a Venn diagram, illustrating how these quantities interact and relate to one another.

This figure illustrates the information profile of μ for three variables, X, Y, Z, in the form of a Venn diagram. Each area in the Venn diagram represents a component of the information profile, which are components of a coefficient vector used in conjunction with the qualitative PDG scoring function (IDef). These components are: the conditional entropy of Y given X and Z (H(Y|X,Z)), the conditional entropy of Z given X and Y (H(Z|X,Y)), the mutual information between X and (Y,Z) (I(X; YZ)), the interaction information between X, Y, and Z (I(X; Y; Z)), the conditional mutual information of Y and Z given X (I(Y;Z|X)), the entropy of X (H(X)), the entropy of Y (H(Y)), the entropy of Z (H(Z)), the joint entropy of X and Y (H(XY)), the joint entropy of Y and Z (H(YZ)), and the joint entropy of X and Z (H(XZ)). The values are shown in the areas of the Venn diagram and used to express an arbitrary conjunction of (conditional) independencies as a constraint Iμ · v ≥ 0.

This figure illustrates the information profile of μ for three variables X, Y, and Z. It shows how various information-theoretic quantities, such as conditional entropy and mutual information, can be represented as components of a vector. The figure highlights the connection between these information-theoretic quantities and the concept of (in)dependence, demonstrating that an arbitrary conjunction of (conditional) (in)dependencies can be expressed as a constraint on the information profile.

This figure shows a vector representation of the information profile of a probability distribution μ over three variables X, Y, and Z. The components of the vector are conditional entropies (H(Y|X,Z), H(Y|X), H(Y|Z)), mutual informations (I(X;Y|Z), I(X;Y;Z), I(X;Z|Y), I(Y;Z|X)), and the joint entropy H(X,Y,Z). These quantities capture the dependencies among the variables, reflecting how far the distribution is from functional dependence or conditional independence.

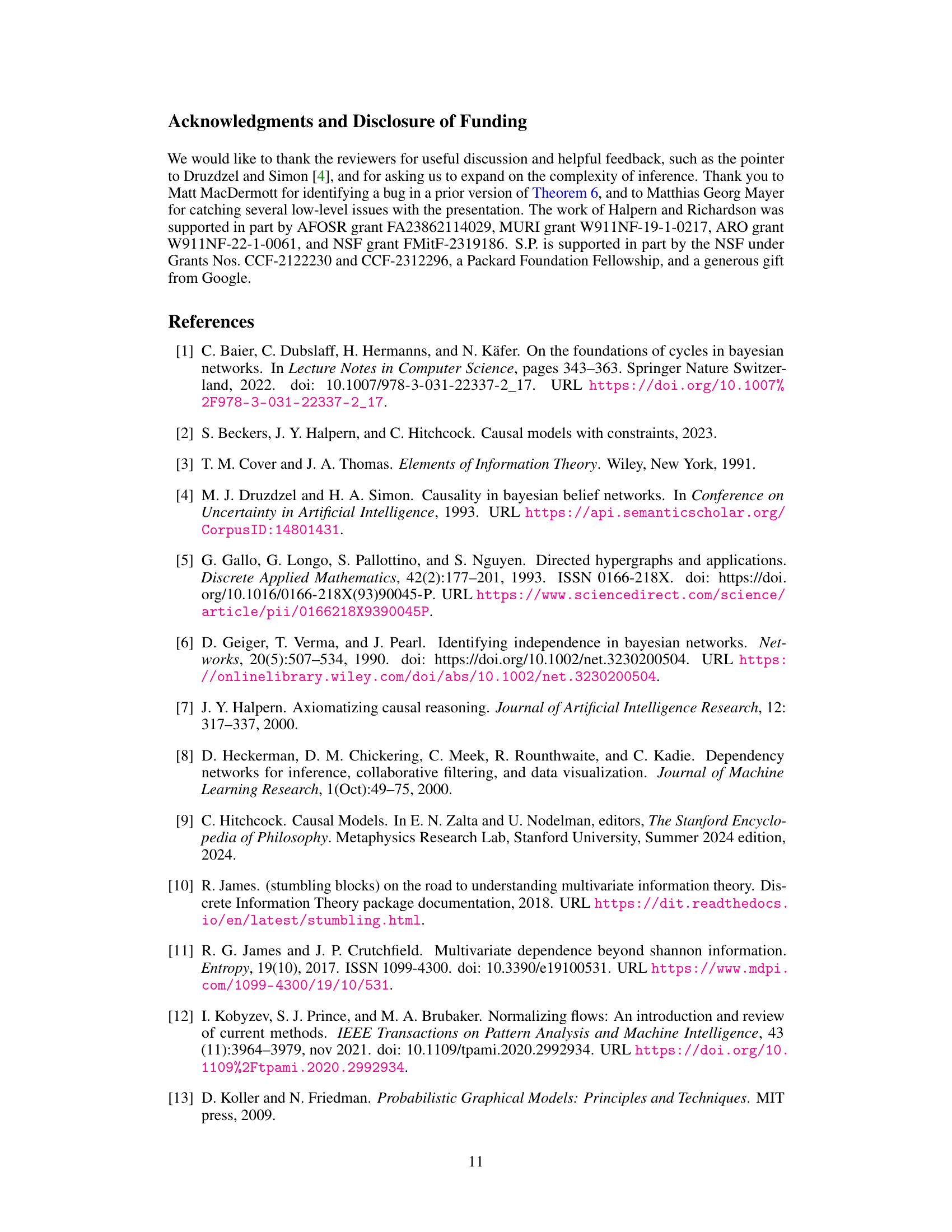

This figure illustrates how the structural deficiency IDefA, which is a measure of how far a distribution is from being compatible with a hypergraph, varies across different hypergraph structures. The circles represent variables, and the intersections and overlaps visualize how information is shared between them. Green areas indicate that changing the distribution in those areas improves the fit, while red areas indicate that changing it worsens the fit. Grey areas are neutral. Only the boxed blue structures are expressible as Bayesian networks.

Full paper#