↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Stackelberg games model strategic interactions where one player commits to a strategy before the other. Existing algorithms often ignore the additional information (side information) available to players, like weather or traffic, which significantly impacts optimal strategies. This paper studies such scenarios, formalizing them as Stackelberg games with side information, which can vary round to round. This makes applying existing algorithms inadequate.

This research shows that in fully adversarial settings (where both contexts and follower types are chosen by an adversary), no-regret learning is impossible. However, it presents new algorithms achieving no-regret learning when either the sequence of follower types or contexts is stochastic. The paper also extends these algorithms to bandit feedback settings where the follower’s type is unobservable. These results are important for real-world applications of Stackelberg games where contextual information is crucial, particularly in security and resource allocation.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in AI, game theory, and security. It addresses the limitations of existing Stackelberg game algorithms by incorporating side information, a critical aspect often overlooked. This opens new avenues for online learning in complex real-world scenarios where context matters, impacting fields like security and resource allocation. The impossibility result and proposed solutions provide a deeper theoretical understanding and practical guidance.

Visual Insights#

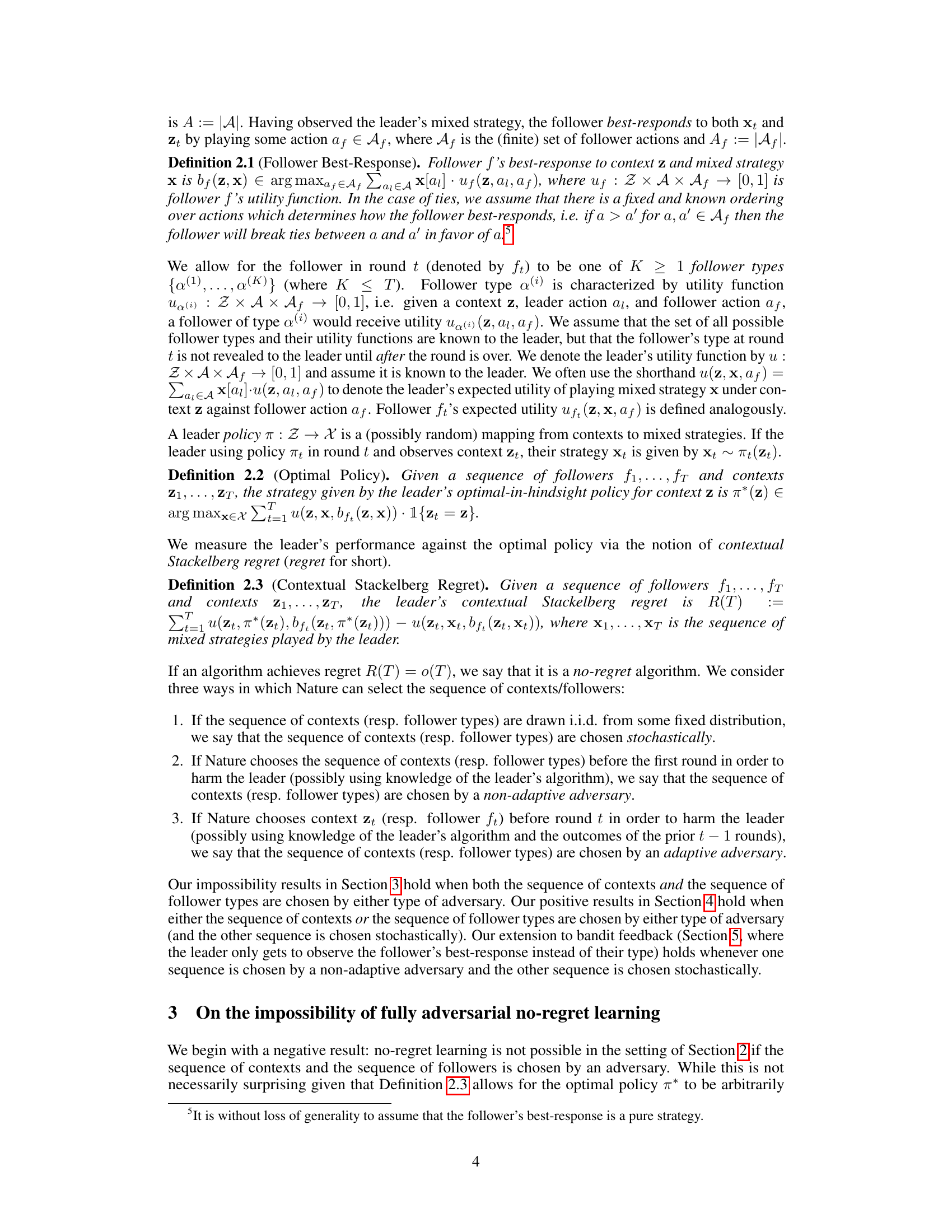

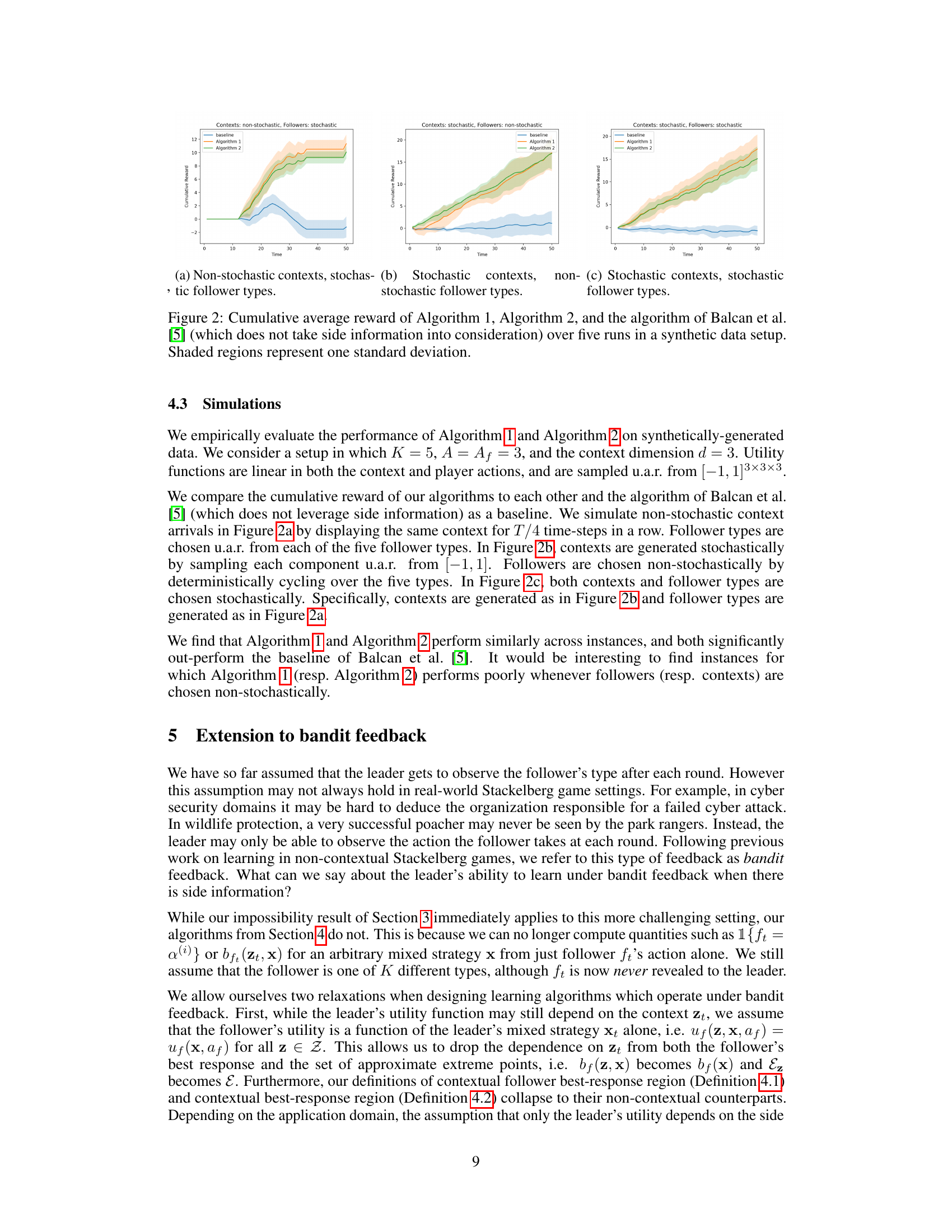

This figure summarizes the reduction from the online linear thresholding problem to the contextual Stackelberg game setting. It illustrates how a regret minimizer for the Stackelberg game with side information can be used as a black box to achieve no regret in the online linear thresholding problem. The reduction involves three functions, h1, h2, and h3, that map between the inputs and outputs of the two problems.

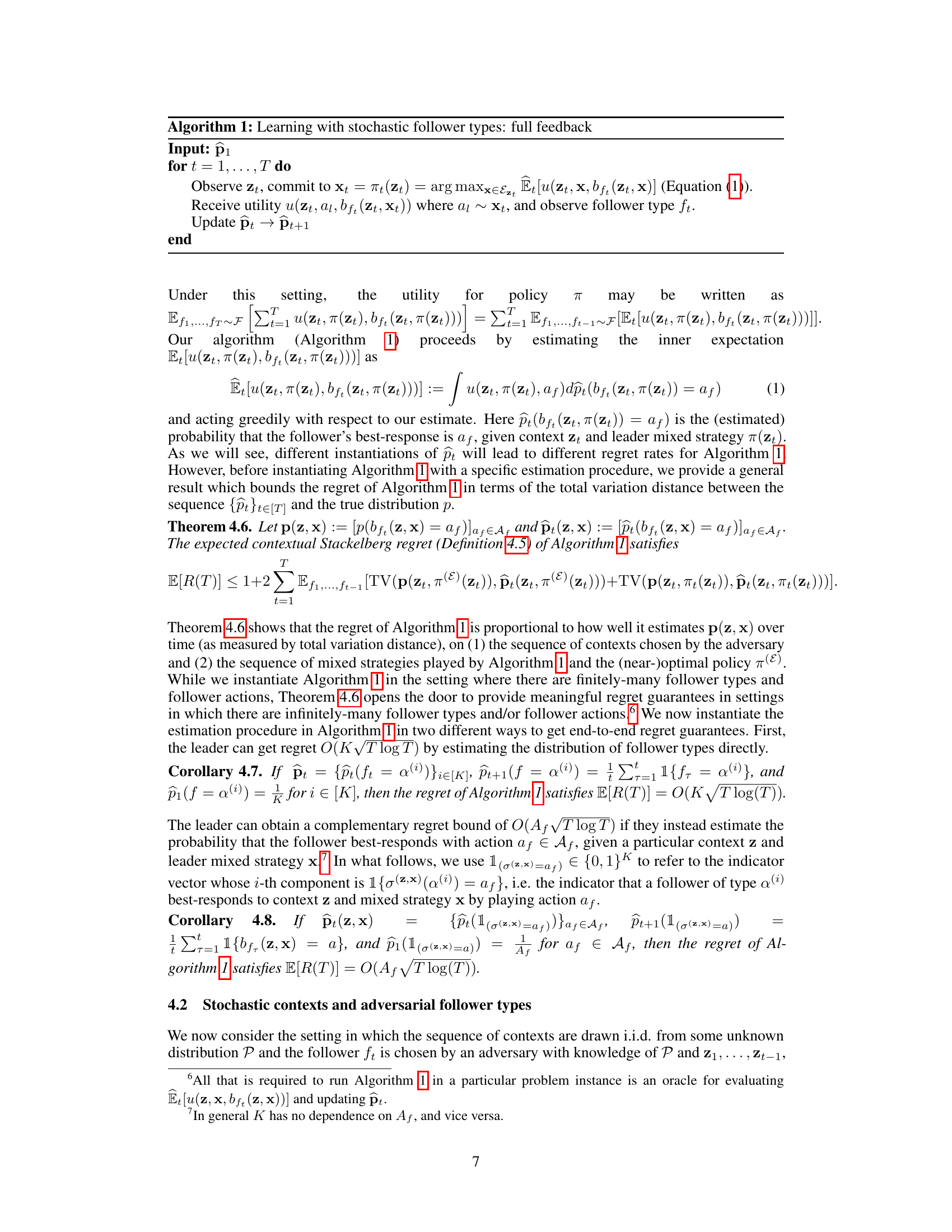

This table summarizes the upper and lower bounds on the contextual Stackelberg regret achieved by the proposed algorithms under various settings. The settings vary in how the sequence of followers and contexts are chosen (fully adversarial, stochastic followers/adversarial contexts, stochastic contexts/adversarial followers) and in whether full or bandit feedback is available. The table shows that no-regret learning is impossible in the fully adversarial setting but is achievable in the other settings with the provided regret bounds. The bandit feedback setting considers a relaxation where only the leader’s utility depends on side information.

Full paper#