↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR

Current QR code generation methods struggle to balance aesthetics, scannability, and the preservation of facial features when incorporating personal identity. Existing image and style transfer techniques often compromise either visual appeal or scannability. Generative models, while capable of high-quality images, lack precise control over the inclusion and placement of facial data. This leads to unsatisfactory QR codes that either fail to scan or look unnatural.

Face2QR addresses this with a three-stage pipeline. First, ID-refined QR integration (IDQR) combines background styles with the facial image in a unified Stable Diffusion framework. Second, ID-aware QR ReShuffle (IDRS) rearranges QR modules so that they do not conflict with facial features. Third, ID-preserved Scannability Enhancement (IDSE) improves scanning robustness through latent code optimization. Together, the three stages produce scannable QR codes that preserve facial features within a balanced visual design.

Key Takeaways

Why does it matter?

This paper matters because it tackles a hard problem in QR code design: integrating personalized information, specifically facial images, while preserving both aesthetic appeal and scannability. It does so with three techniques: ID-refined QR integration, ID-aware QR reshuffling, and ID-preserved scannability enhancement. The work is relevant to current trends in personalized digital identities and generative design, and it opens the way to further research on embedding complex information in visually appealing, functional QR codes. The evaluation and results support the effectiveness of the approach, making it a useful reference for researchers in computer vision, image processing, and QR code technology.

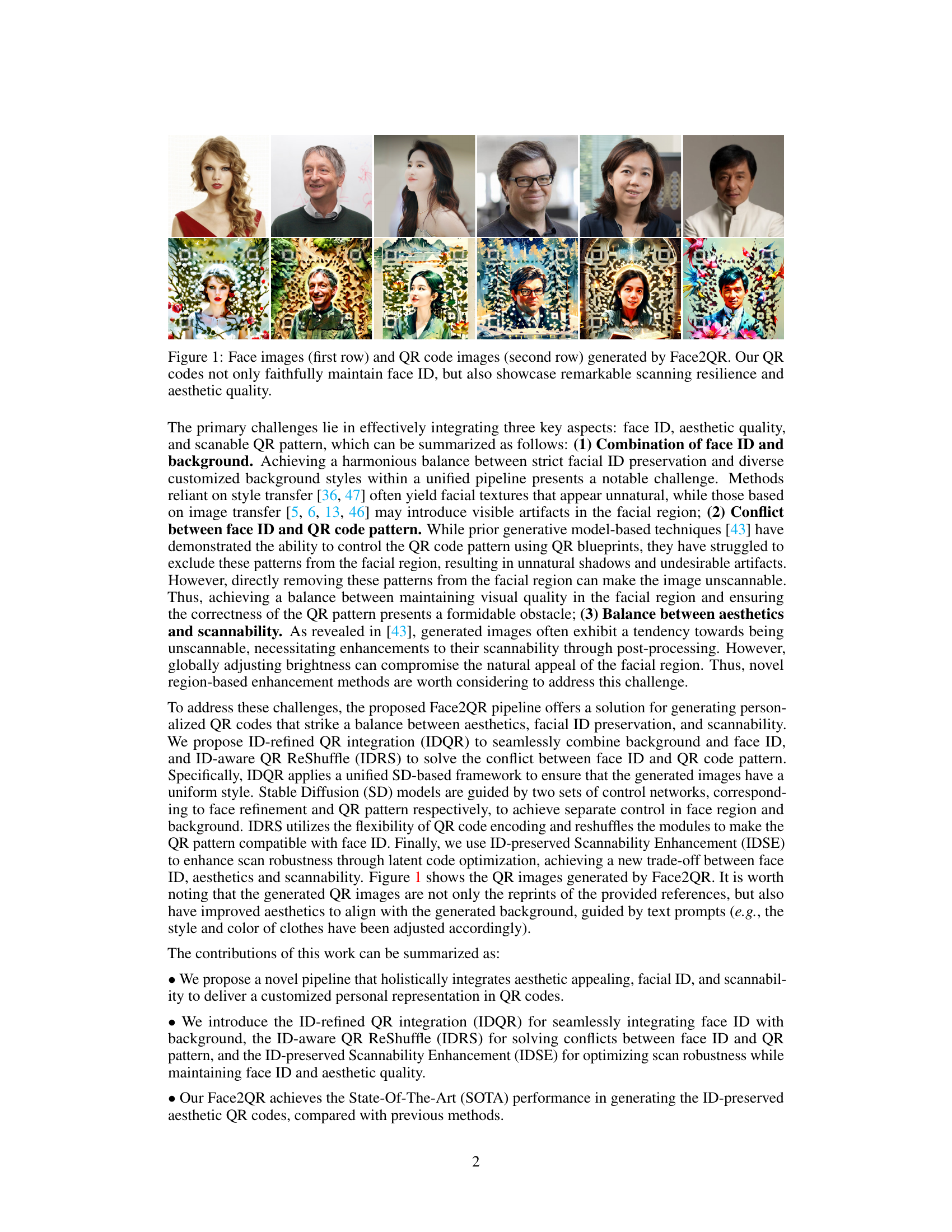

Visual Insights

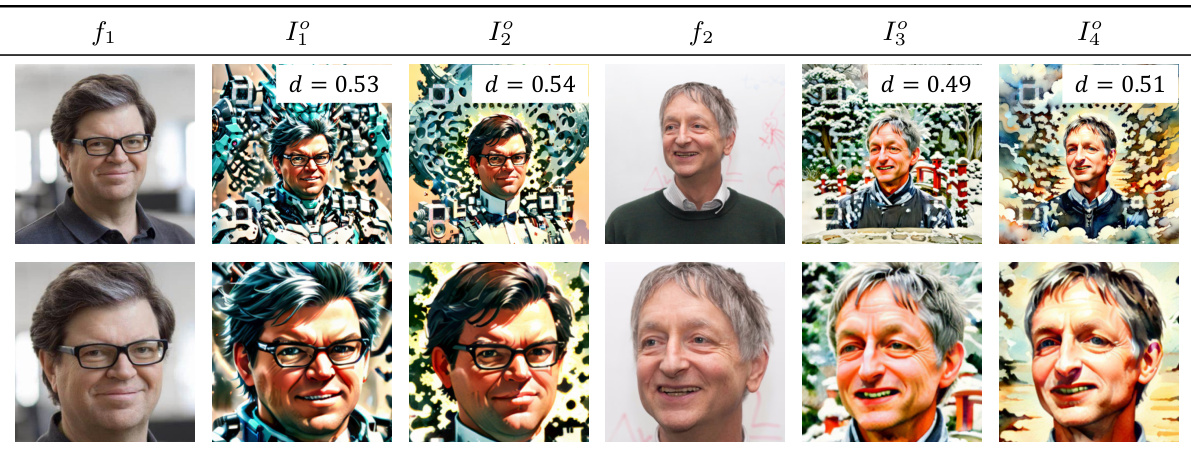

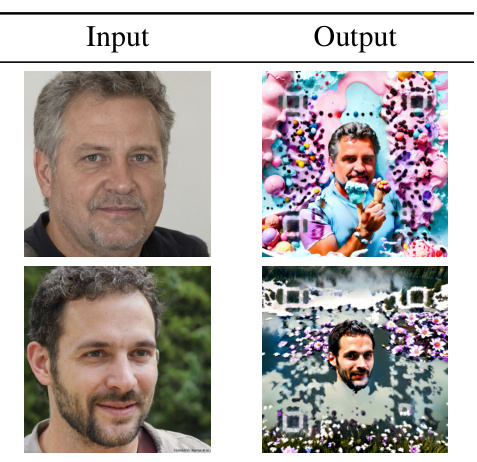

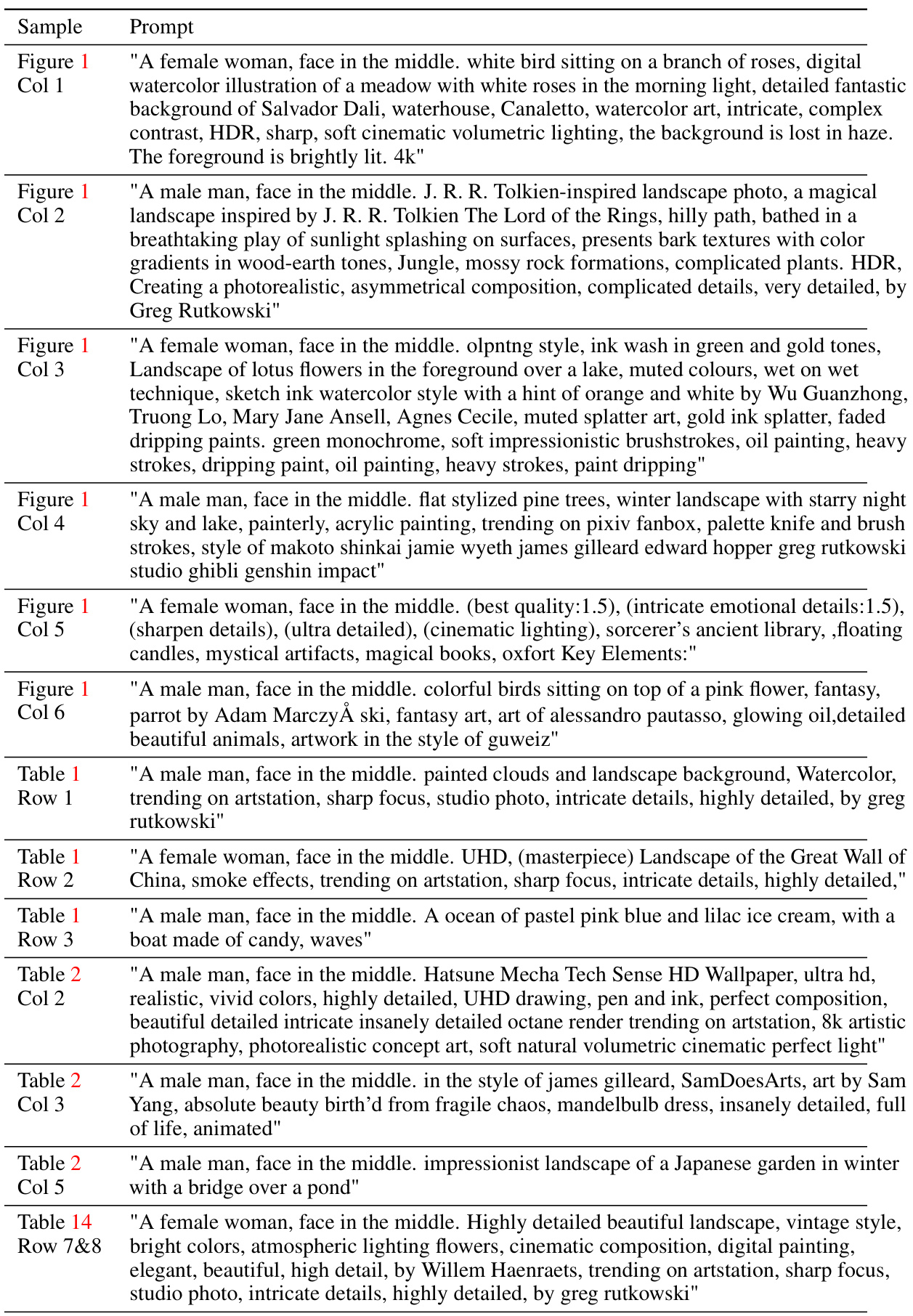

This figure shows the results of applying the Face2QR method. The top row displays six different input face images, and the bottom row presents the corresponding QR codes generated by Face2QR. The QR codes are not just functional but also aesthetically pleasing, integrating the face image seamlessly into a visually appealing design while maintaining high scannability.

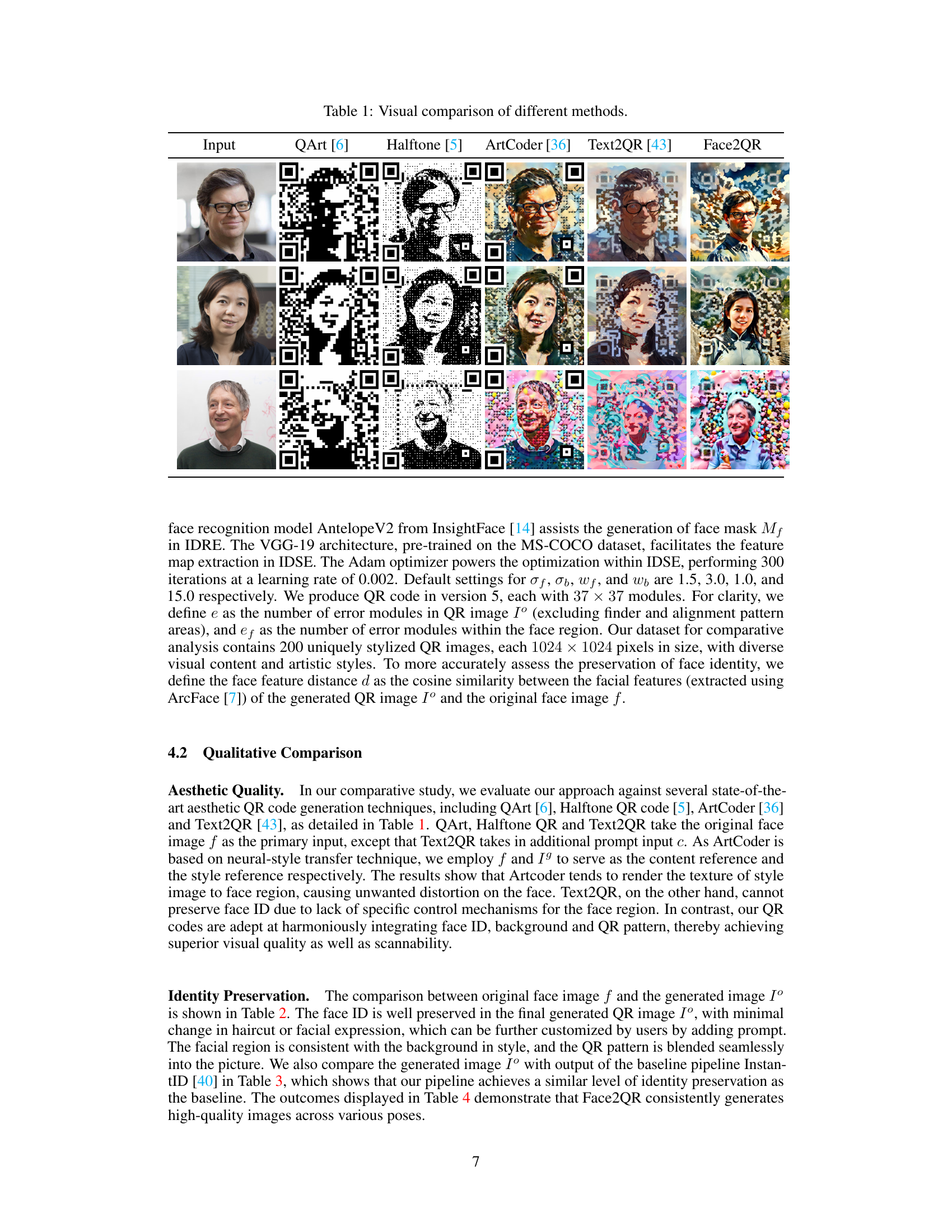

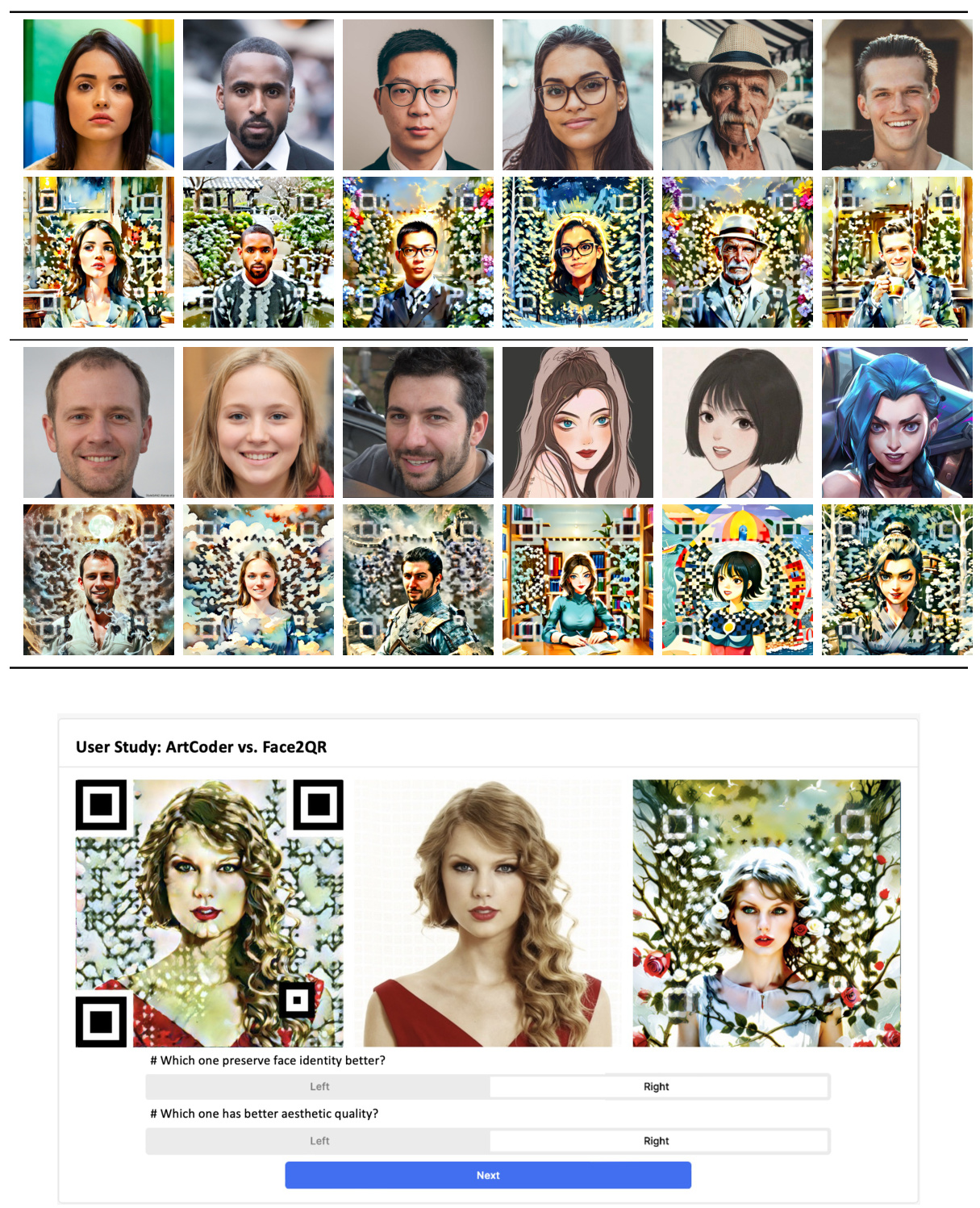

This table provides a visual comparison of the results of Face2QR against other state-of-the-art aesthetic QR code generation techniques, including QArt, Halftone QR code, ArtCoder and Text2QR. It shows the input images and generated QR codes for each method, allowing a visual comparison of aesthetic quality and facial feature preservation.

In-depth insights

Aesthetic QR Codes

Aesthetic QR codes represent a fascinating intersection of art and technology. The core challenge lies in balancing visual appeal with scannability. Early attempts often prioritized one over the other, resulting in either visually unappealing codes or codes that were difficult for scanners to read. Current approaches employ various techniques including style transfer and generative models to improve aesthetics. However, integrating human faces, a common personalization method, introduces further complexity, requiring sophisticated methods to avoid compromising both visual quality and scannability. Preserving facial recognition features while enhancing visual appeal remains a major challenge that current research is actively addressing, with techniques such as QR module reshuffling and latent code optimization being employed. The ultimate goal is to seamlessly integrate artistic expression into functional QR codes, creating personalized and visually engaging experiences for users.

ID-Preserving Tech

ID-preserving technologies are crucial for balancing personalization and privacy in applications involving facial recognition. The core challenge lies in integrating individual identity information into applications while preventing unauthorized access or misidentification. Successful ID-preserving techniques must be robust against adversarial attacks, which aim to manipulate or counterfeit identity data, and should incorporate strong security measures such as encryption and access control. Privacy concerns are paramount, requiring methods that minimize the storage and processing of sensitive biometric data, employing techniques like differential privacy or federated learning. Furthermore, ethical considerations are vital, ensuring the responsible use of such technologies and preventing potential biases or discriminatory outcomes. Transparency and user control are also necessary features, allowing individuals to understand how their data is being used and giving them control over its access and use. Future directions include developing more efficient and accurate ID-preserving techniques, creating robust methods for verifying identity without compromising privacy, and establishing ethical guidelines for the design and deployment of these technologies. The goal is to enable personalized experiences while upholding privacy standards.

Unified SD Framework

A unified SD (Stable Diffusion) framework, in the context of a research paper on QR code generation, likely refers to a single, integrated system leveraging the power of Stable Diffusion to address multiple challenges simultaneously. Instead of using separate models or pipelines for aesthetics, face preservation, and scannability, a unified framework would streamline the process. This approach probably involves using control networks within the SD architecture to independently guide different aspects of the image generation, ensuring a harmonious balance between a visually appealing QR code, accurate facial recognition, and reliable scannability. The advantage is in efficiency and coherence: the different features are generated concurrently rather than sequentially, reducing artifacts and improving overall quality. Key innovations within such a framework might include methods for intelligently integrating QR code patterns with facial features to avoid conflicts, and techniques for enhancing scannability without compromising aesthetics. This unified framework represents a significant advancement over prior methods that tackle these aspects in isolation.

Scannable QR Designs

Scannable QR code design presents a unique challenge: balancing aesthetic appeal with robust scannability. Existing methods often compromise one for the other, particularly when integrating complex images like faces. A key consideration is the robustness of the QR code to variations in lighting, angle, and distance. Optimizing the contrast and size of the QR modules is crucial. Additionally, error correction capabilities built into the QR code standard help mitigate issues with partially obscured or damaged modules. However, excessive manipulation for artistic purposes can negatively impact these error correction capabilities. Therefore, advanced techniques are needed to strike a balance. This might involve employing adaptive halftoning or other intelligent encoding strategies that prioritize preserving scannability in key areas while allowing for creative freedom in less critical regions. Furthermore, post-processing techniques focused on enhancing contrast and sharpening the edges of the QR code modules without altering the underlying data can significantly improve scan rates.
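One way to make "scannability" measurable is to compare the modules a reader would see against the modules the code is supposed to contain. The sketch below is an illustration only, not the paper's evaluation code: it assumes a square grayscale image stored as nested lists, a fixed module size in pixels, and a simple center-pixel threshold, whereas real scanners use more elaborate sampling. The names `sample_modules` and `module_error_rate` are hypothetical.

```python
from typing import List

Matrix = List[List[int]]  # 1 = dark module, 0 = light module


def sample_modules(gray: List[List[int]], module_px: int, threshold: int = 128) -> Matrix:
    """Binarize an image by reading the pixel at the center of each module.

    Assumption: `gray` is a square image with values in 0..255 whose side
    length is a multiple of `module_px`.
    """
    n = len(gray) // module_px
    half = module_px // 2
    return [
        [1 if gray[r * module_px + half][c * module_px + half] < threshold else 0
         for c in range(n)]
        for r in range(n)
    ]


def module_error_rate(decoded: Matrix, target: Matrix) -> float:
    """Fraction of modules whose sampled value differs from the target code."""
    total = sum(len(row) for row in target)
    wrong = sum(
        d != t
        for d_row, t_row in zip(decoded, target)
        for d, t in zip(d_row, t_row)
    )
    return wrong / total
```

A lower error rate leaves more headroom for the standard's built-in error correction, which is why artistic changes that flip many modules tend to hurt scanning.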

Future Enhancements

Future enhancements for Face2QR could focus on several areas. The first is robustness of the generated QR codes against image degradations such as noise, blur, and compression. This could involve more advanced error correction coding, perhaps using deep learning models to optimize QR code layouts dynamically. The second is efficiency. The current pipeline, while effective, may be computationally expensive, especially for high-resolution images, so more efficient architectures and algorithms or hardware acceleration (such as GPUs) are worth investigating. Alternative generative models might also give faster or higher-quality outputs: Stable Diffusion is a solid base, but other models designed for controlled generation could offer advantages. Finally, broader customization could increase the system's appeal, for example more granular control over the visual style, additional customization parameters, or direct incorporation of user-provided design elements. Addressing these points would strengthen Face2QR's position in personalized, aesthetic QR code generation.

More visual insights

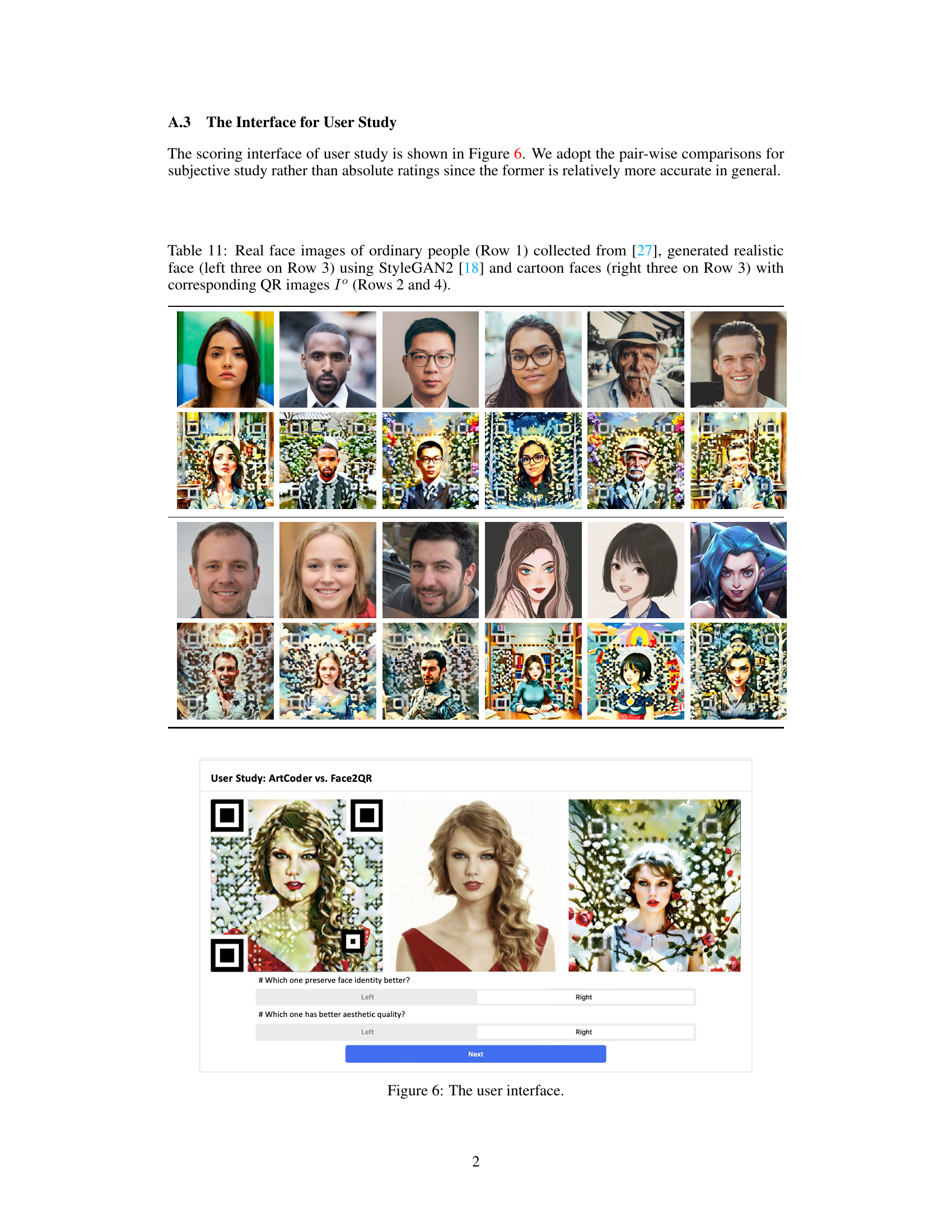

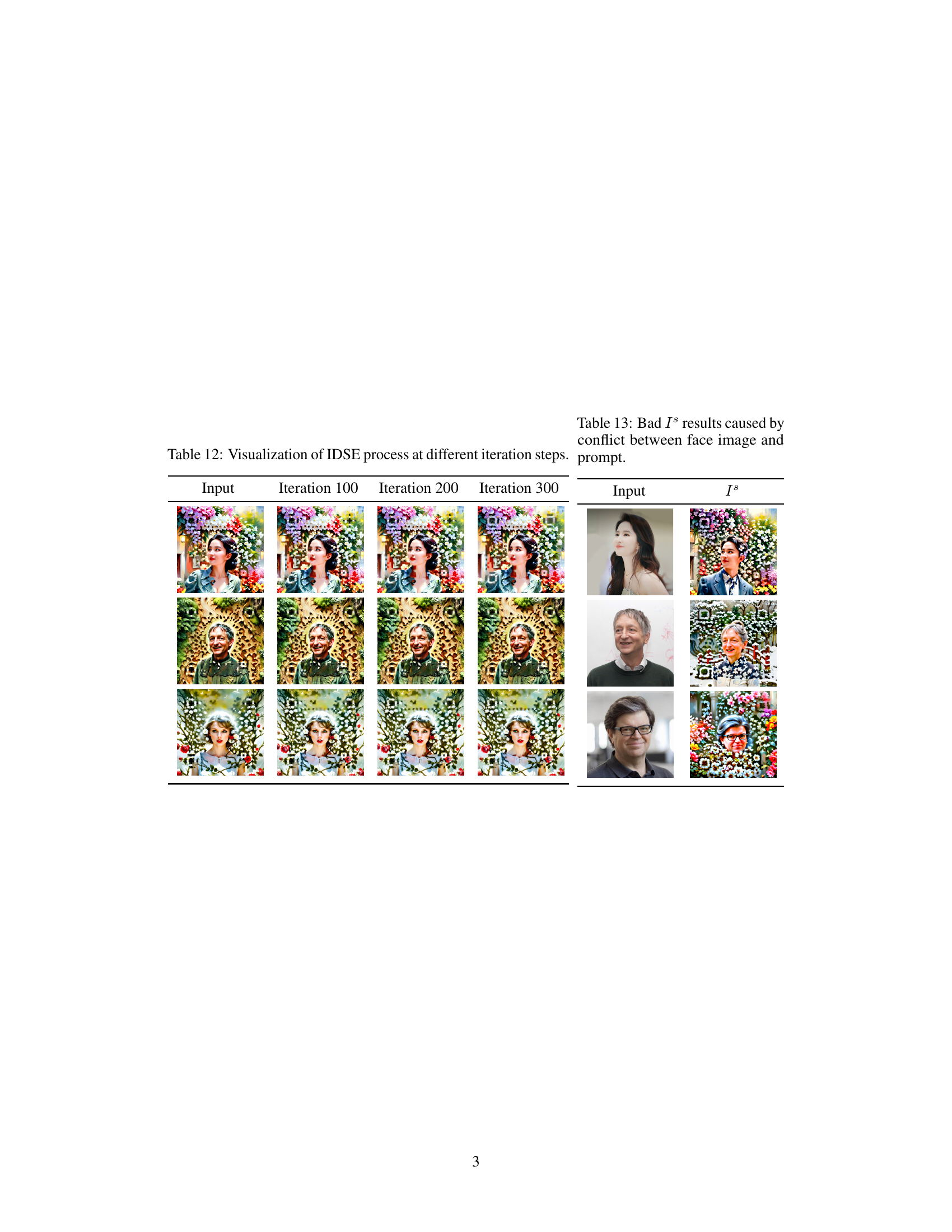

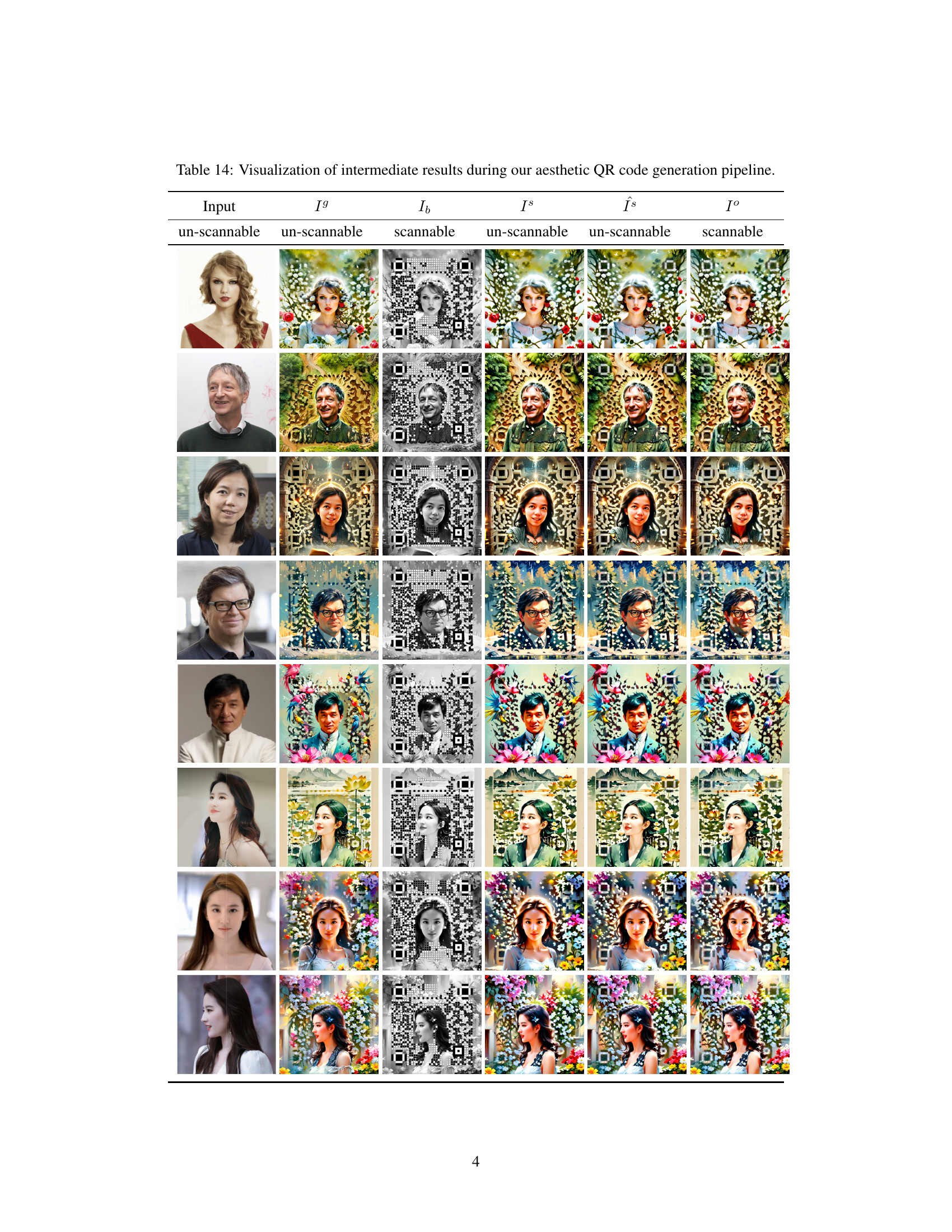

More on figures

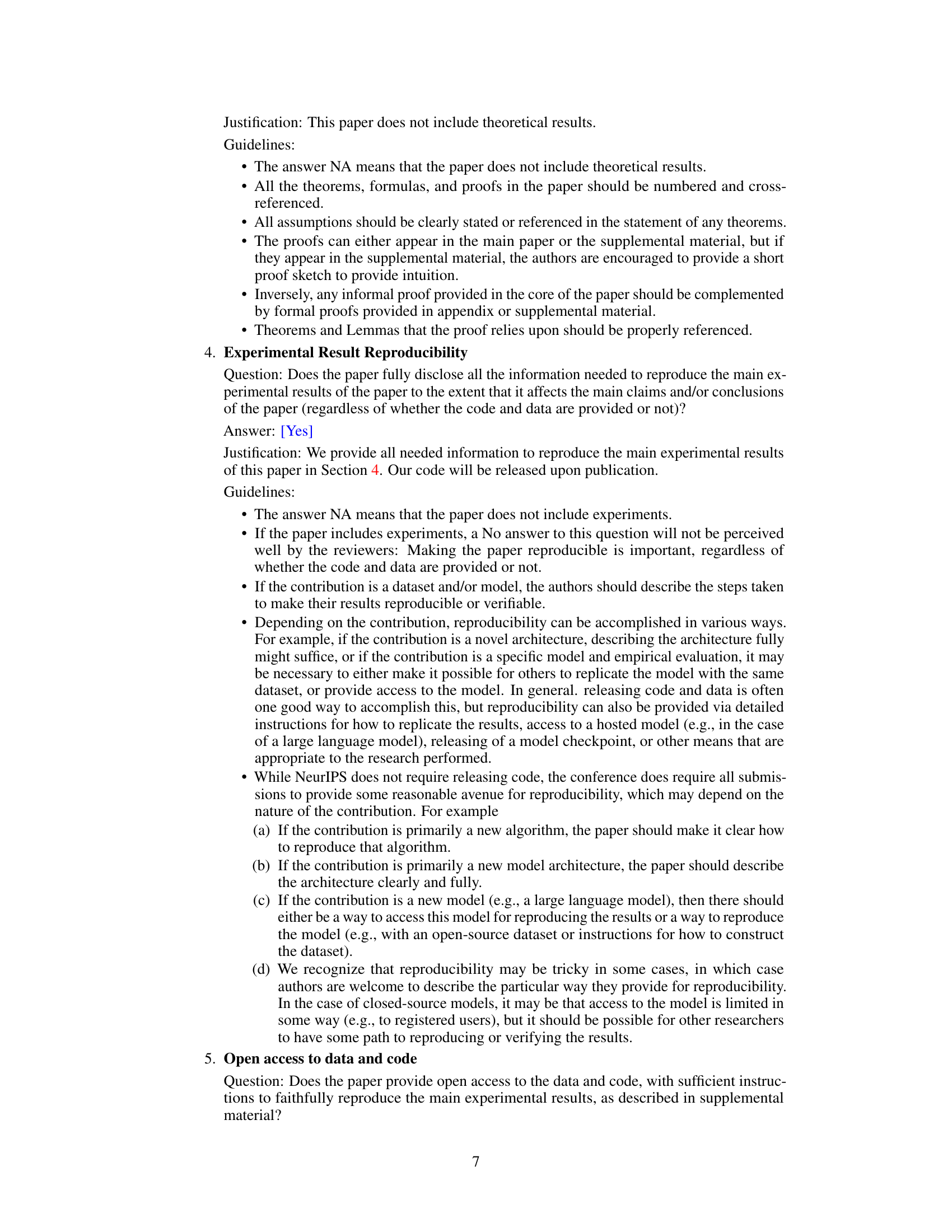

This figure illustrates the Face2QR pipeline, which consists of three stages: ID-refined QR integration (IDQR), ID-aware QR ReShuffle (IDRS), and ID-preserved Scannability Enhancement (IDSE). The pipeline takes as input a facial image, text prompts, and a QR code message. IDQR generates an initial QR image by integrating the facial image with a customizable background, using a Stable Diffusion model guided by control networks. IDRS addresses the conflict between face and QR code patterns by reshuffling the QR modules while preserving facial features. Finally, IDSE enhances the QR code’s scannability using latent code optimization. The figure visually shows the process flow and error rates at each stage, highlighting the reduction in errors from 43.85% to 0.01%.

This figure illustrates the Face2QR pipeline, a three-stage process for generating personalized QR codes that preserve facial identity while maintaining aesthetic appeal and scannability. Stage 1 (IDQR) integrates the face and background using a Stable Diffusion model. Stage 2 (IDRS) resolves conflicts between the face and QR code by rearranging modules. Stage 3 (IDSE) enhances scannability via latent code optimization. The figure visually shows the process with images and error rates at each stage.

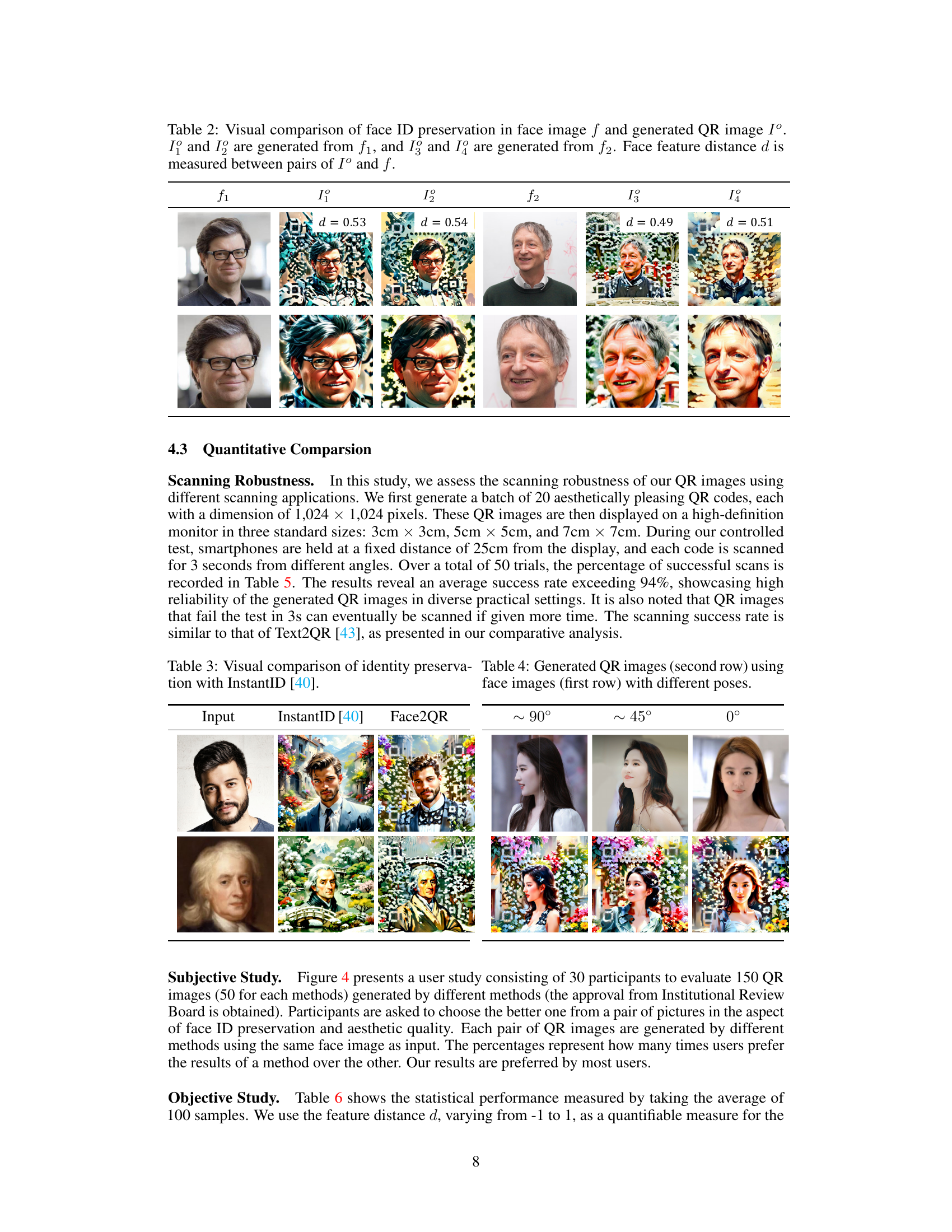

This figure displays a comparison between input face images and their corresponding QR codes generated using the Face2QR framework. The top row shows the input face images while the bottom row presents the QR codes, each integrated with the corresponding face image. The QR codes demonstrate high scanning resilience and pleasing aesthetic quality, showcasing the ability of Face2QR to seamlessly blend face identity, aesthetics and scannability.

This figure illustrates the Face2QR pipeline, which consists of three stages: ID-refined QR integration (IDQR), ID-aware QR ReShuffle (IDRS), and ID-preserved Scannability Enhancement (IDSE). IDQR integrates the face and QR code seamlessly; IDRS shuffles QR modules to reduce conflicts with the face while maintaining scannability; and IDSE refines the generated image to further boost scannability. The figure shows the flow of the process and highlights the error rates at each stage, illustrating how Face2QR successfully generates highly scannable QR codes that preserve facial identity.

This figure showcases the results of the Face2QR model. The top row displays input face images, while the bottom row shows the corresponding QR codes generated by the model. The codes are designed to seamlessly integrate the facial image with the QR code patterns, maintaining both facial recognition accuracy and scannability. They are visually appealing and display high-quality results.

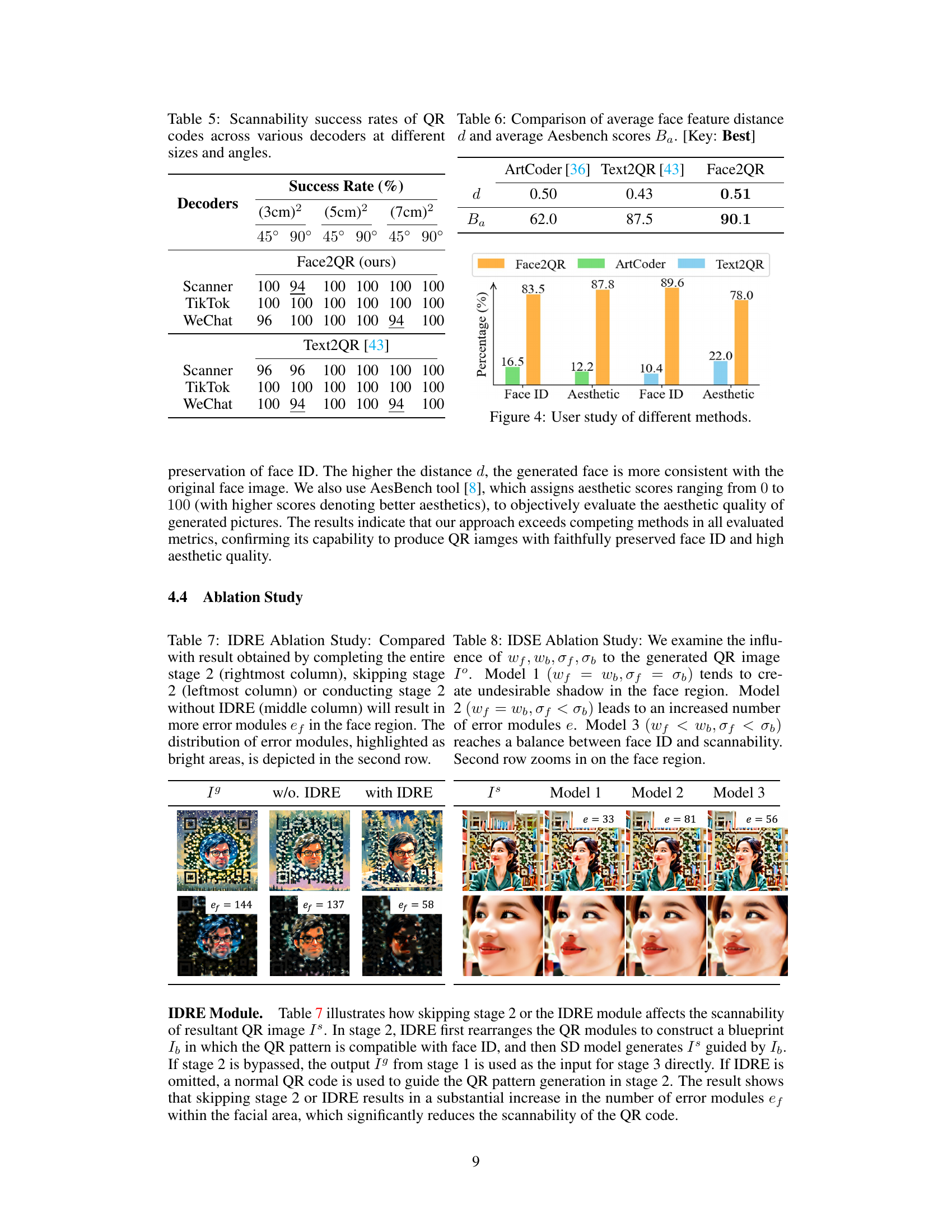

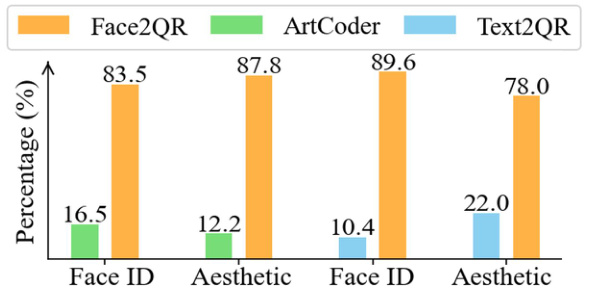

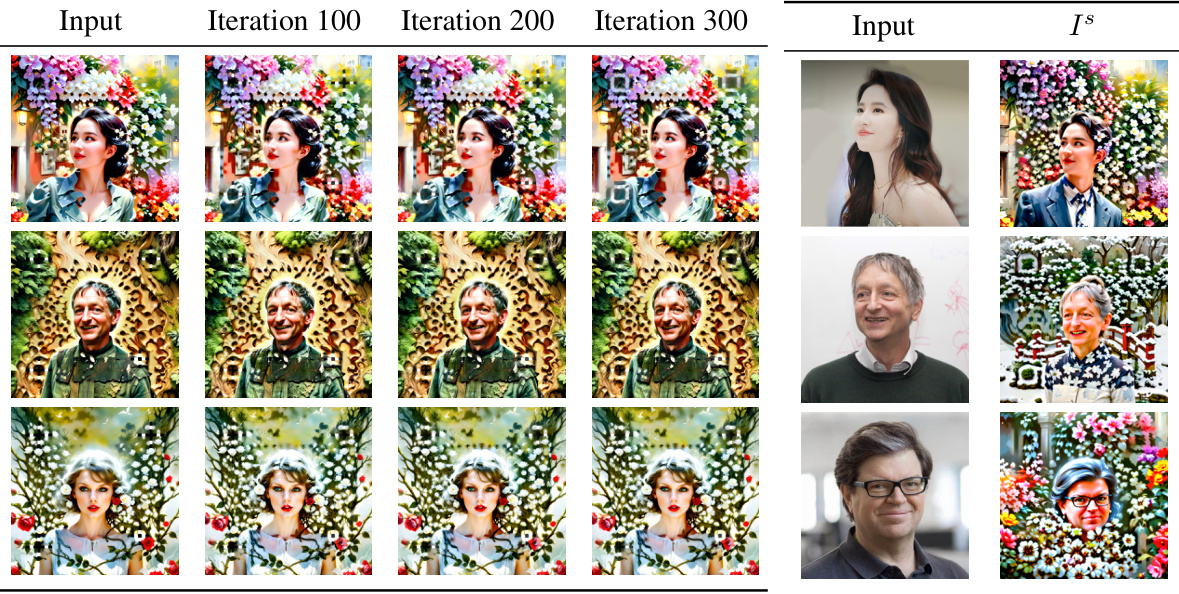

This figure presents the results of a user study comparing Face2QR against ArtCoder and Text2QR. The user study evaluated the performance of each method in terms of both face ID preservation (how well the face is preserved in the generated QR code) and aesthetic quality (how visually appealing the generated QR code is). The bar chart shows the percentage of times each method was preferred by users for face ID preservation and aesthetic quality. Face2QR demonstrates a significant advantage over the other two methods in both categories.

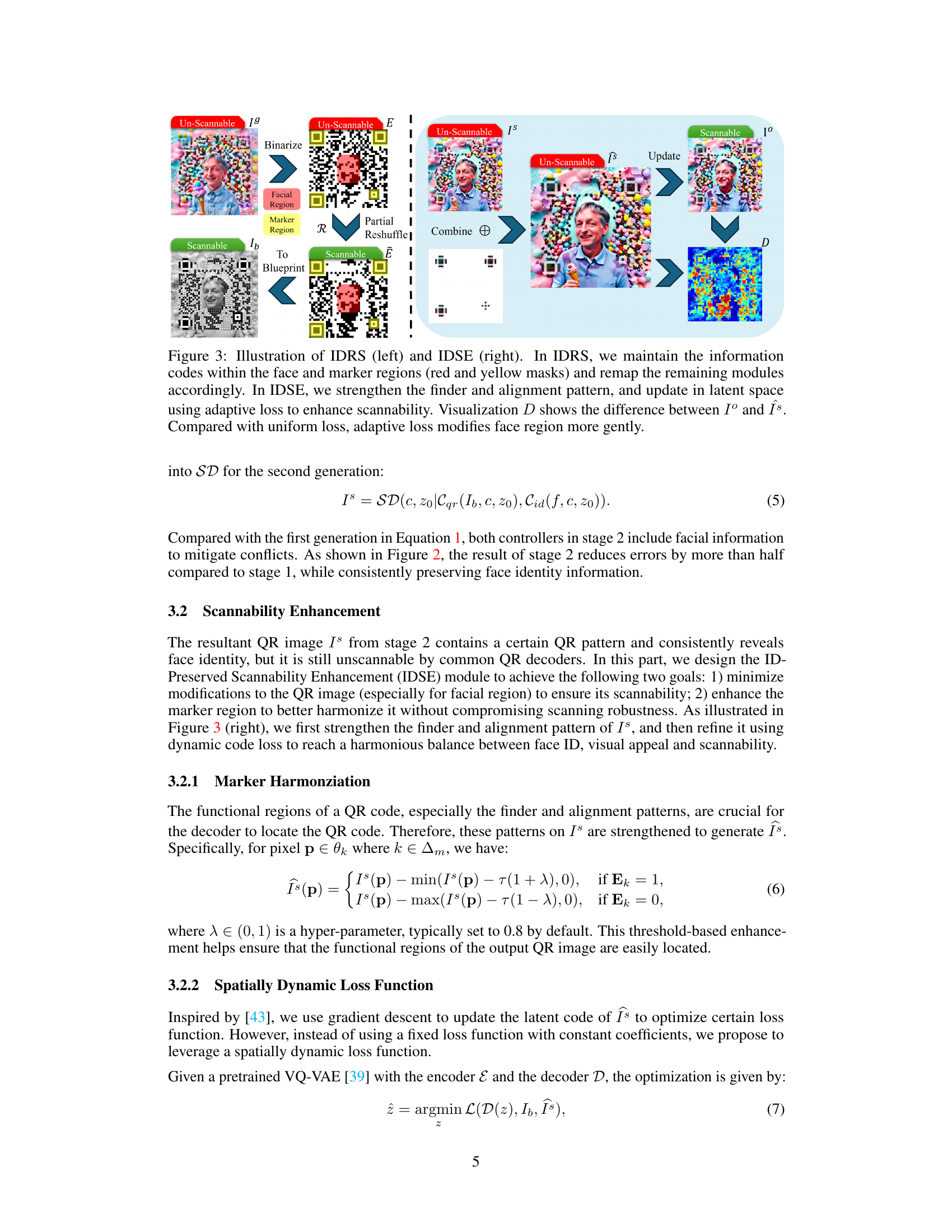

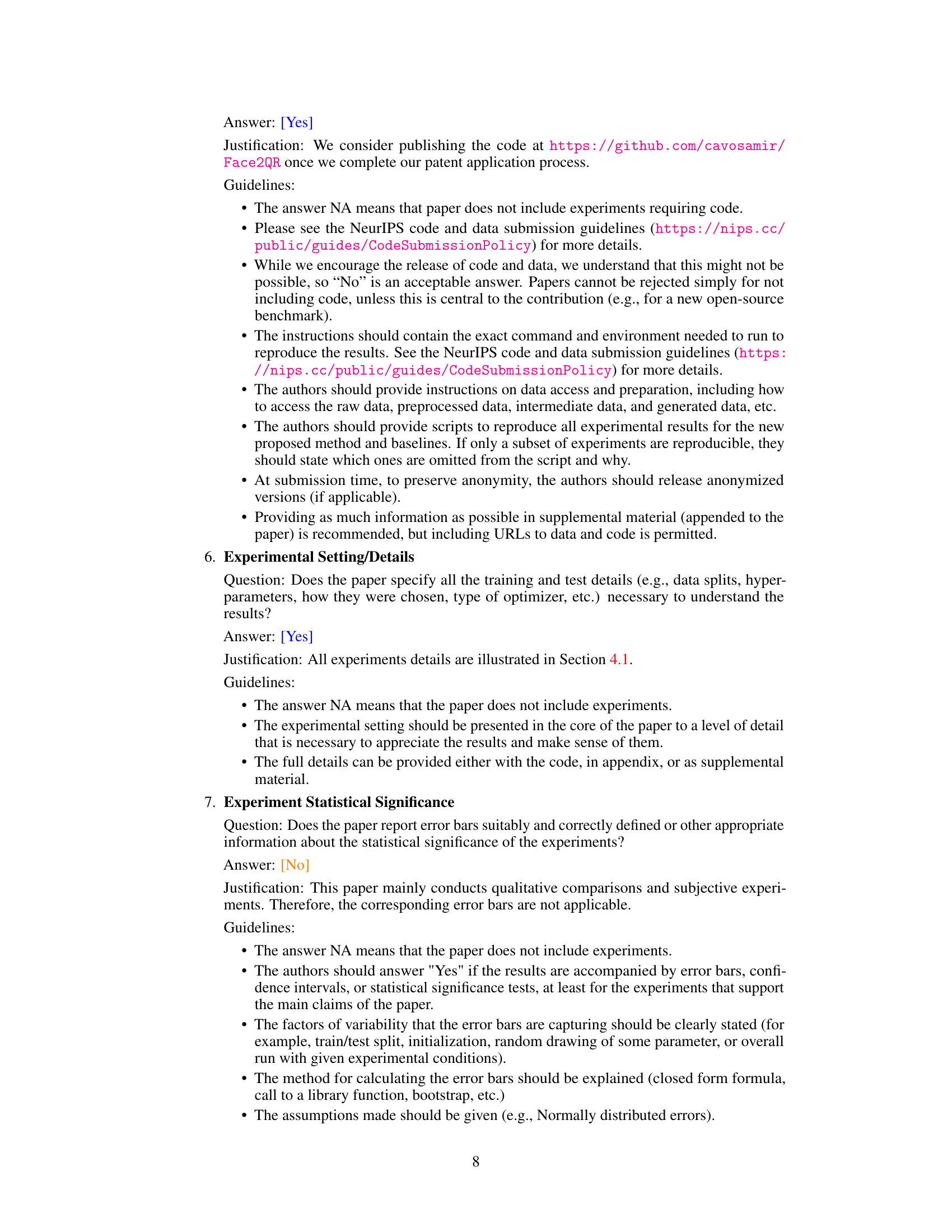

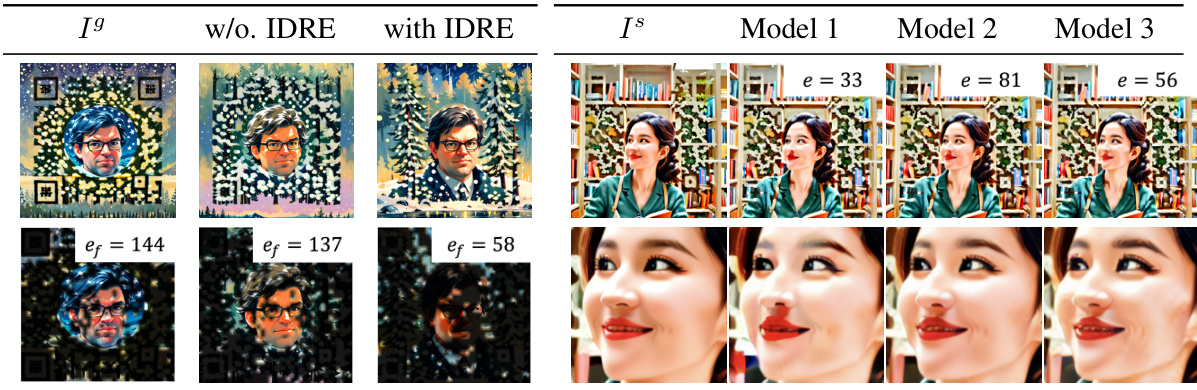

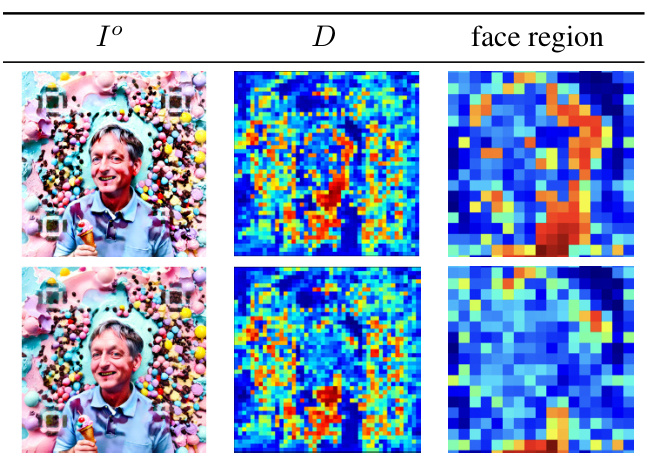

This figure illustrates the ID-Aware QR ReShuffle (IDRS) and ID-Preserved Scannability Enhancement (IDSE) modules. IDRS addresses conflicts between face ID and QR codes by fixing the facial and marker regions and reshuffling other modules. IDSE enhances the QR code scannability by optimizing the latent code using an adaptive loss function, which balances face ID preservation and aesthetic quality. The visualization D highlights how the adaptive loss in IDSE gently modifies the face region, unlike the uniform loss.
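The idea of holding some modules fixed while others are free to move can be sketched as a mask over the module grid. The code below is a simplified illustration and not the authors' IDRS algorithm: it assumes the "marker regions" are the three corner finder patterns (each 7x7 plus a one-module separator, so 8x8 blocks), ignores timing, alignment, and format modules that a real encoder must also keep, and represents the face as a hypothetical rectangular box in module coordinates. It only identifies which modules would be candidates for reshuffling; the reshuffle itself is not shown.

```python
from typing import List, Tuple


def qr_size(version: int) -> int:
    """Side length in modules for a QR version (1..40): 17 + 4 * version."""
    if not 1 <= version <= 40:
        raise ValueError("QR versions range from 1 to 40")
    return 17 + 4 * version


def finder_mask(size: int) -> List[List[bool]]:
    """True for modules in the three 8x8 corner blocks (finder + separator)."""
    mask = [[False] * size for _ in range(size)]
    for r in range(size):
        for c in range(size):
            top, bottom = r < 8, r >= size - 8
            left, right = c < 8, c >= size - 8
            # Finder patterns sit top-left, top-right, and bottom-left.
            if (top and left) or (top and right) or (bottom and left):
                mask[r][c] = True
    return mask


def free_modules(size: int, face_box: Tuple[int, int, int, int]) -> List[Tuple[int, int]]:
    """Modules outside both the finder blocks and the face box.

    `face_box` is (row0, col0, row1, col1), half-open, in module
    coordinates. This rectangle is an assumption made for illustration.
    """
    fixed = finder_mask(size)
    r0, c0, r1, c1 = face_box
    return [
        (r, c)
        for r in range(size)
        for c in range(size)
        if not fixed[r][c] and not (r0 <= r < r1 and c0 <= c < c1)
    ]
```

For a version 1 code (21x21 modules) with a 5x5 face box in the middle, this leaves 224 of the 441 modules as reshuffle candidates under these simplifying assumptions.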

This figure visualizes the difference between QR images before and after applying the IDSE module with uniform and adaptive losses. The first row shows the result using uniform loss, highlighting significant changes in the facial region. The second row, using adaptive loss, demonstrates a gentler modification of the face region, achieving a better balance between face identity preservation and scannability.
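The contrast between the uniform and adaptive losses can be illustrated with a per-module weight map. This is a minimal sketch under stated assumptions, not the paper's loss: it assumes the code loss is a weighted squared error between each module's brightness (0.0 = black, 1.0 = white) and its target, and that the adaptive variant simply scales the weight inside the face region by a hypothetical `face_weight` below 1. The paper's actual formulation may differ.

```python
from typing import List


def make_weights(face_mask: List[List[bool]], face_weight: float = 0.2) -> List[List[float]]:
    """Weight 1.0 outside the face, `face_weight` inside (assumed value)."""
    return [[face_weight if in_face else 1.0 for in_face in row] for row in face_mask]


def weighted_code_loss(
    brightness: List[List[float]],
    target: List[List[int]],
    weights: List[List[float]],
) -> float:
    """Mean weighted squared error between module brightness and target.

    `target` uses 1 for a dark module (ideal brightness 0.0) and 0 for a
    light module (ideal brightness 1.0).
    """
    total, count = 0.0, 0
    for b_row, t_row, w_row in zip(brightness, target, weights):
        for b, t, w in zip(b_row, t_row, w_row):
            ideal = 0.0 if t == 1 else 1.0
            total += w * (b - ideal) ** 2
            count += 1
    return total / count
```

With a uniform weight map (all ones) a wrong module in the face pulls as hard as any other; with the down-weighted map the optimizer has less incentive to alter the face, which matches the gentler modification described above.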

This figure displays the results of the Face2QR model. The top row shows the input face images. The bottom row shows the corresponding QR codes generated by the model. The QR codes seamlessly integrate the faces into aesthetically pleasing and varied backgrounds, while maintaining both the integrity of the facial features and the scannability of the QR code.

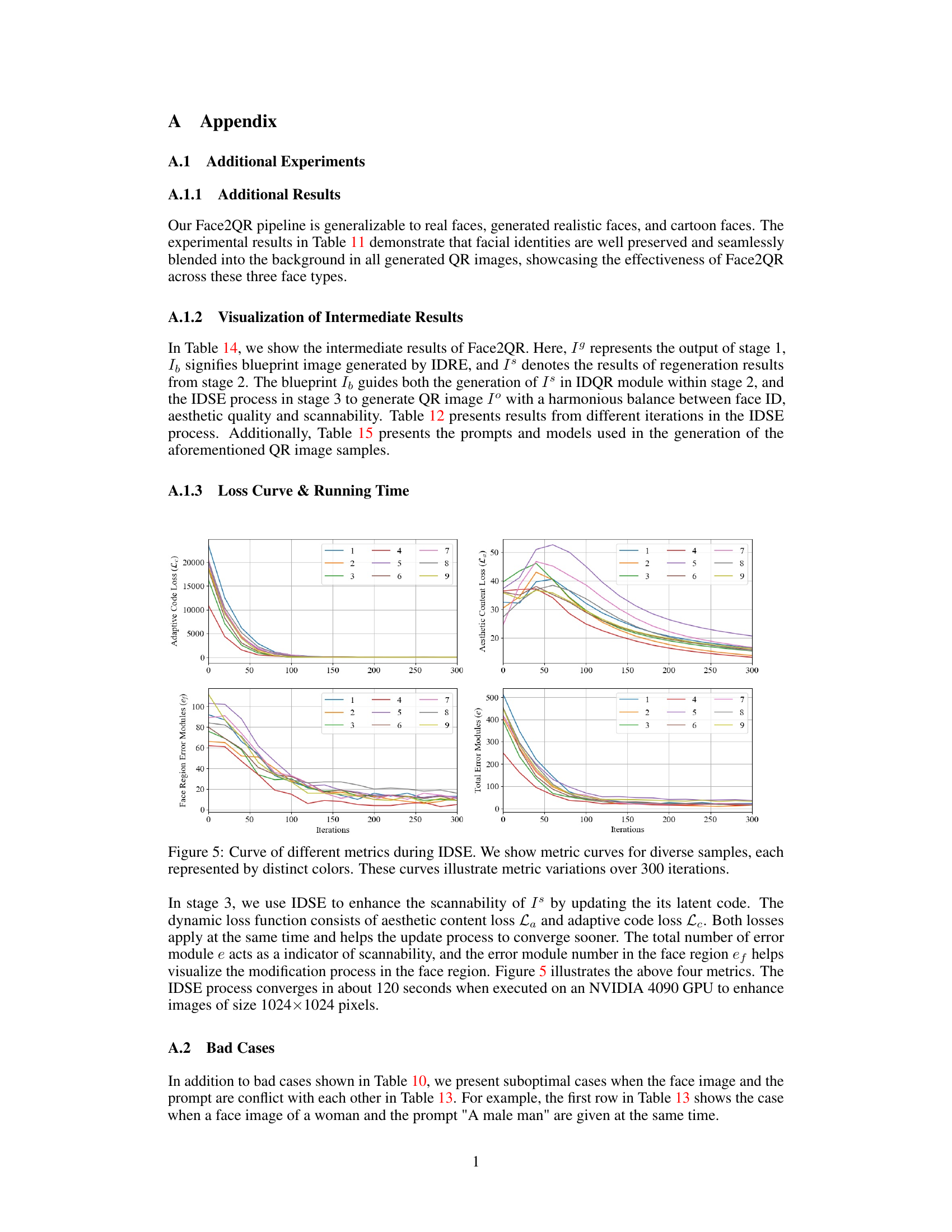

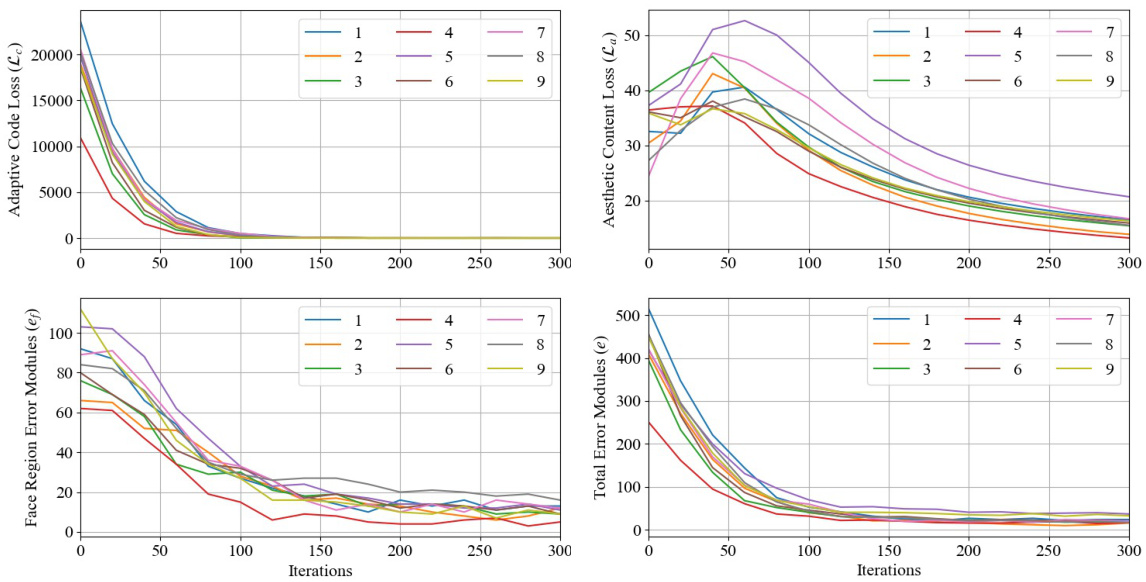

This figure shows the curves of four metrics during the IDSE (ID-preserved Scannability Enhancement) process. Each curve represents a different sample, and the curves track the changes in Adaptive Code Loss (Lc), Aesthetic Content Loss (La), Face Region Error Modules (ef), and Total Error Modules (e) over 300 iterations. The plot provides a visualization of how these metrics evolve during the optimization process of the IDSE module, highlighting the convergence towards a balanced compromise between face ID preservation, aesthetic quality, and QR scannability.
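The two error counts tracked in these curves can be sketched as follows. The sketch assumes that an "error module" is one whose sampled value differs from the target QR matrix and that the face region is given as a boolean mask; the function names and the snapshot format are hypothetical and not taken from the paper.

```python
from typing import List, Tuple

Matrix = List[List[int]]  # 1 = dark module, 0 = light module


def count_error_modules(decoded: Matrix, target: Matrix,
                        face_mask: List[List[bool]]) -> Tuple[int, int]:
    """Return (e_f, e): errors inside the face region and errors overall."""
    e_f = e = 0
    for r, t_row in enumerate(target):
        for c, t in enumerate(t_row):
            if decoded[r][c] != t:
                e += 1
                if face_mask[r][c]:
                    e_f += 1
    return e_f, e


def error_curves(snapshots: List[Matrix], target: Matrix,
                 face_mask: List[List[bool]]) -> Tuple[List[int], List[int]]:
    """Compute the e_f and e series over a list of per-iteration snapshots."""
    pairs = [count_error_modules(s, target, face_mask) for s in snapshots]
    return [p[0] for p in pairs], [p[1] for p in pairs]
```

Plotting the two returned lists against the iteration index would give curves of the same kind as those described for the figure.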

This figure illustrates the Face2QR pipeline, a three-stage process for generating personalized QR codes. The first stage (IDQR) integrates face and background using a unified Stable Diffusion framework. The second stage (IDRS) rearranges QR modules to resolve conflicts between the face and QR code, ensuring scannability and visual harmony. The third stage (IDSE) enhances scanning robustness via latent code optimization, balancing face ID, aesthetics, and QR functionality. The figure visually represents this flow and highlights the error rate reduction at each stage.

This figure illustrates the Face2QR pipeline, a three-stage process for creating personalized QR codes. Stage 1 (ID-Refined QR Integration, IDQR) generates an initial QR code by combining background styles with the input face image using a Stable Diffusion model. This stage, however, may lead to conflicts between the face and QR code patterns. Stage 2 (ID-Aware QR ReShuffle, IDRS) addresses these conflicts by rearranging QR modules to maintain image quality and scannability. Finally, Stage 3 (ID-Preserved Scannability Enhancement, IDSE) optimizes the QR code’s scannability without sacrificing aesthetics or face recognition.

This figure illustrates the Face2QR pipeline, a three-stage process for generating personalized QR codes. Stage 1 (ID-refined QR Integration, IDQR) combines background and face ID using a unified Stable Diffusion framework. Stage 2 (ID-aware QR ReShuffle, IDRS) addresses conflicts between face IDs and QR patterns by rearranging QR modules while preserving facial features. Finally, Stage 3 (ID-preserved Scannability Enhancement, IDSE) optimizes latent codes to improve scanning robustness. The figure highlights the error rates at each stage, showing a significant reduction in errors after the IDRS and IDSE stages.

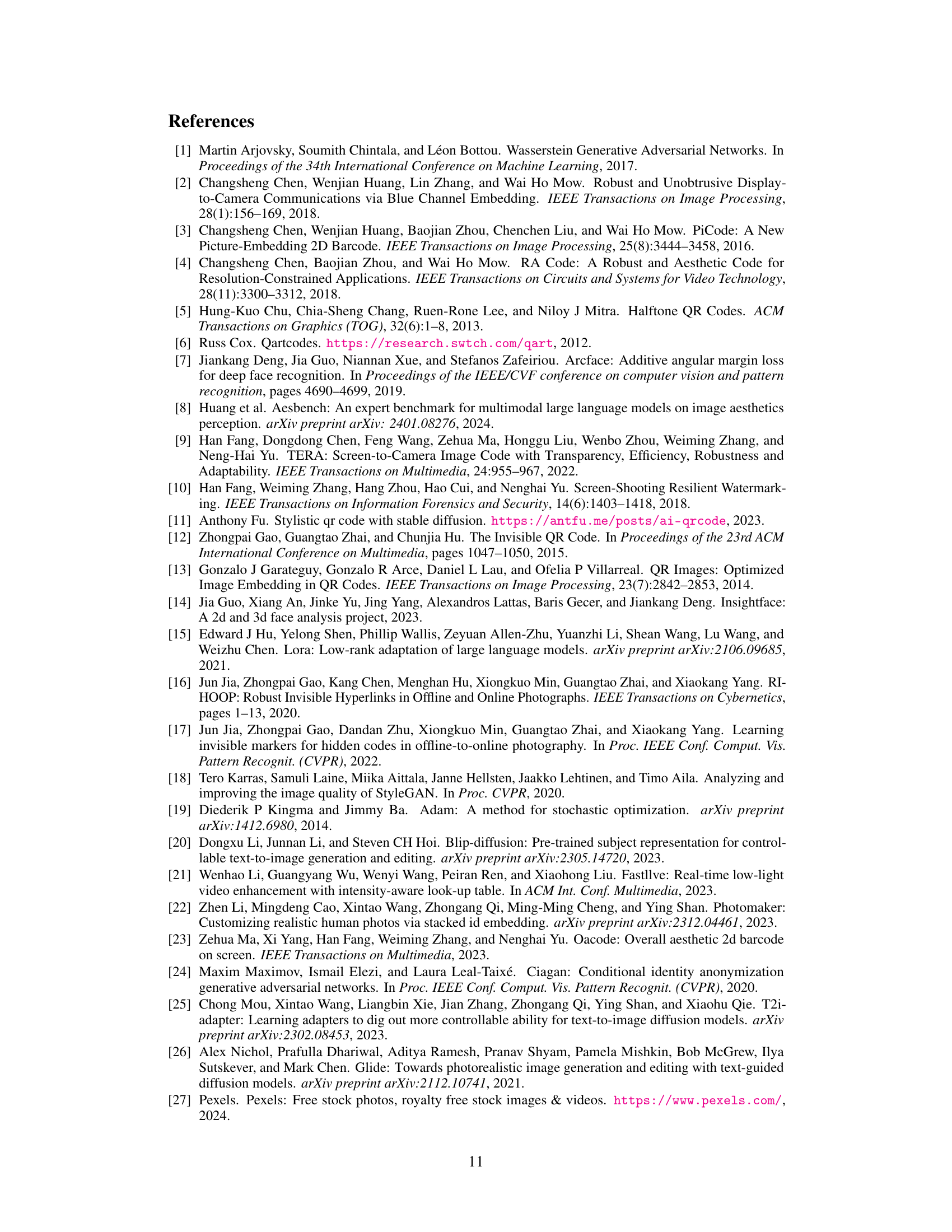

More on tables

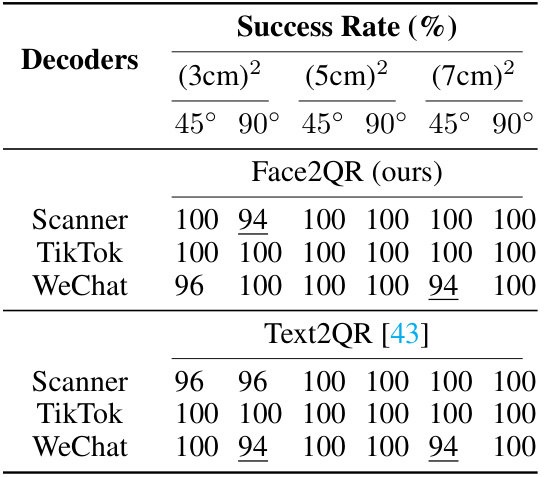

This table presents the quantitative results of testing the scannability of QR codes generated by Face2QR and compares them with the results of Text2QR. The success rate is measured across three different sizes of QR codes (3cm x 3cm, 5cm x 5cm, and 7cm x 7cm) and two angles (45° and 90°). Three different QR code scanners (Scanner, TikTok, and WeChat) were used to assess the scannability. The high success rates demonstrate the robustness and reliability of the generated QR codes in various practical settings.

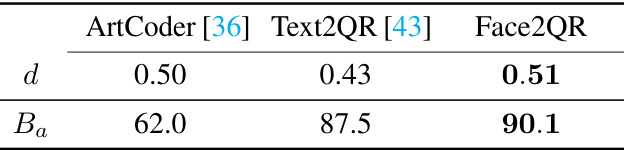

This table quantitatively compares Face2QR with ArtCoder and Text2QR in terms of face ID preservation and aesthetic quality. Face feature distance (d) measures how far the facial features in the generated QR code are from those in the original image; because it is a distance, a lower value indicates better preservation. The Aesbench score (Ba) provides an objective aesthetic quality assessment, with higher scores representing better aesthetics. Face2QR performs best on both metrics.

This table presents a qualitative comparison of Face2QR against other state-of-the-art aesthetic QR code generation techniques, including QArt, Halftone QR code, ArtCoder, and Text2QR. It visually demonstrates the differences in aesthetic quality, face ID preservation, and QR code pattern integration achieved by each method. The input images are the same for all methods, allowing for a direct comparison of their outputs.
