↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Image deep features, while rich in information, have limitations in directly quantifying image attributes like quality or style. Existing methods often rely on comparing features or analyzing their distributions, which can be inflexible or require human-labeled data. The challenge lies in finding a robust and adaptable way to capture diverse image characteristics without relying on manual labeling or inflexible feature comparison techniques.

This paper introduces Deep Degradation Response (DDR), a novel image descriptor that addresses these limitations. DDR leverages the changes in image deep features caused by controlled degradation as a flexible descriptor. The method excels as an unsupervised learning objective in image restoration tasks and outperforms other methods in blind image quality assessment. The text-driven approach enables flexible degradation control, showing promising results across various applications.

Key Takeaways#

Why does it matter?#

This paper is important because it introduces a novel and versatile image descriptor, DDR, which outperforms existing methods in image quality assessment and enhances performance in image restoration tasks. It opens up new avenues for unsupervised learning in computer vision by using the image’s response to degradation as a learning objective. This approach is highly relevant to the current research trends in both image quality assessment and image restoration, making it a valuable resource for researchers in these fields.

Visual Insights#

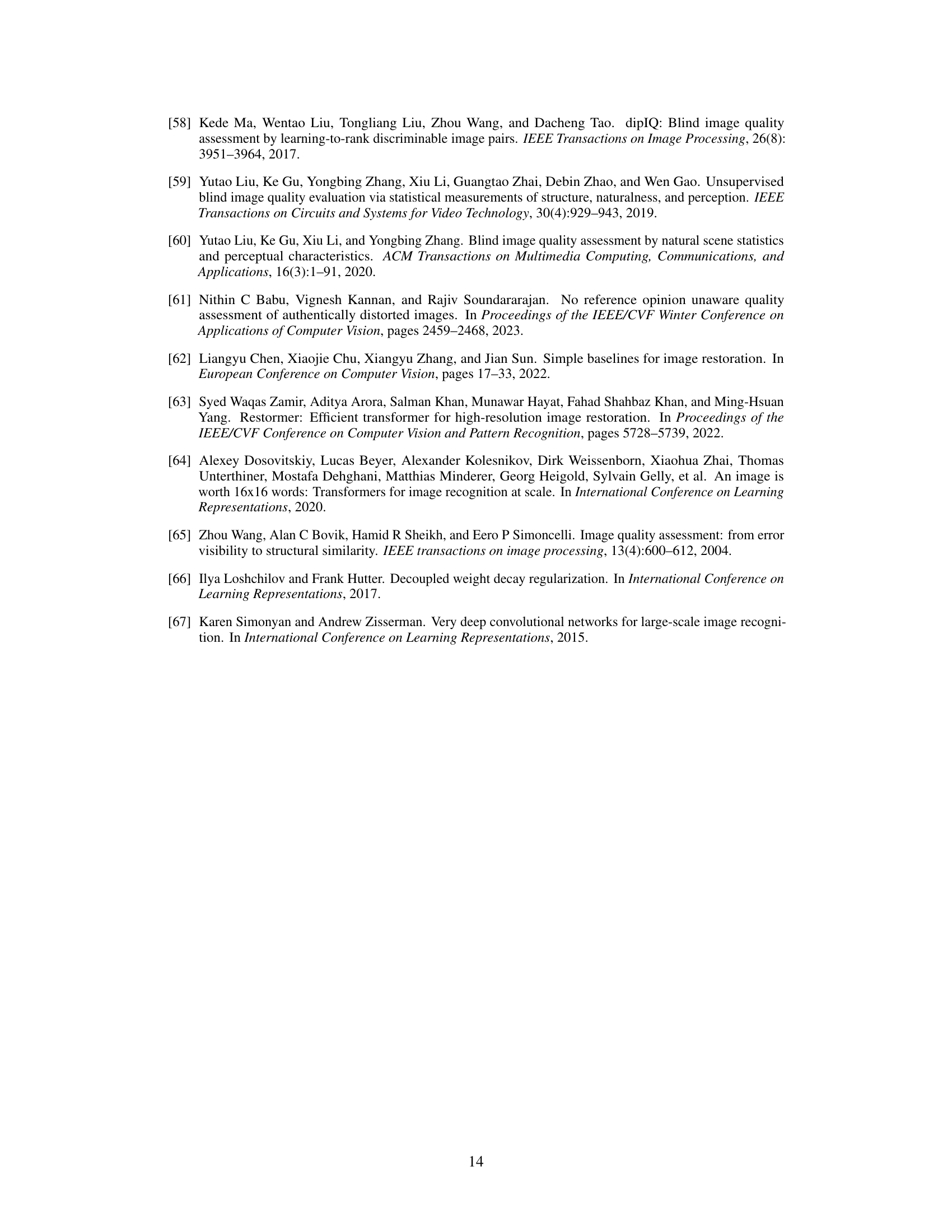

This figure shows examples of how different images respond to the same Gaussian blur. The top row shows original images, and the bottom row shows the same images after blurring. The numbers below each pair represent the LPIPS distance between the original and blurred versions. LPIPS is a metric that measures perceptual similarity, so lower numbers indicate smaller changes in deep features; the images with simpler textures change less dramatically after blurring.

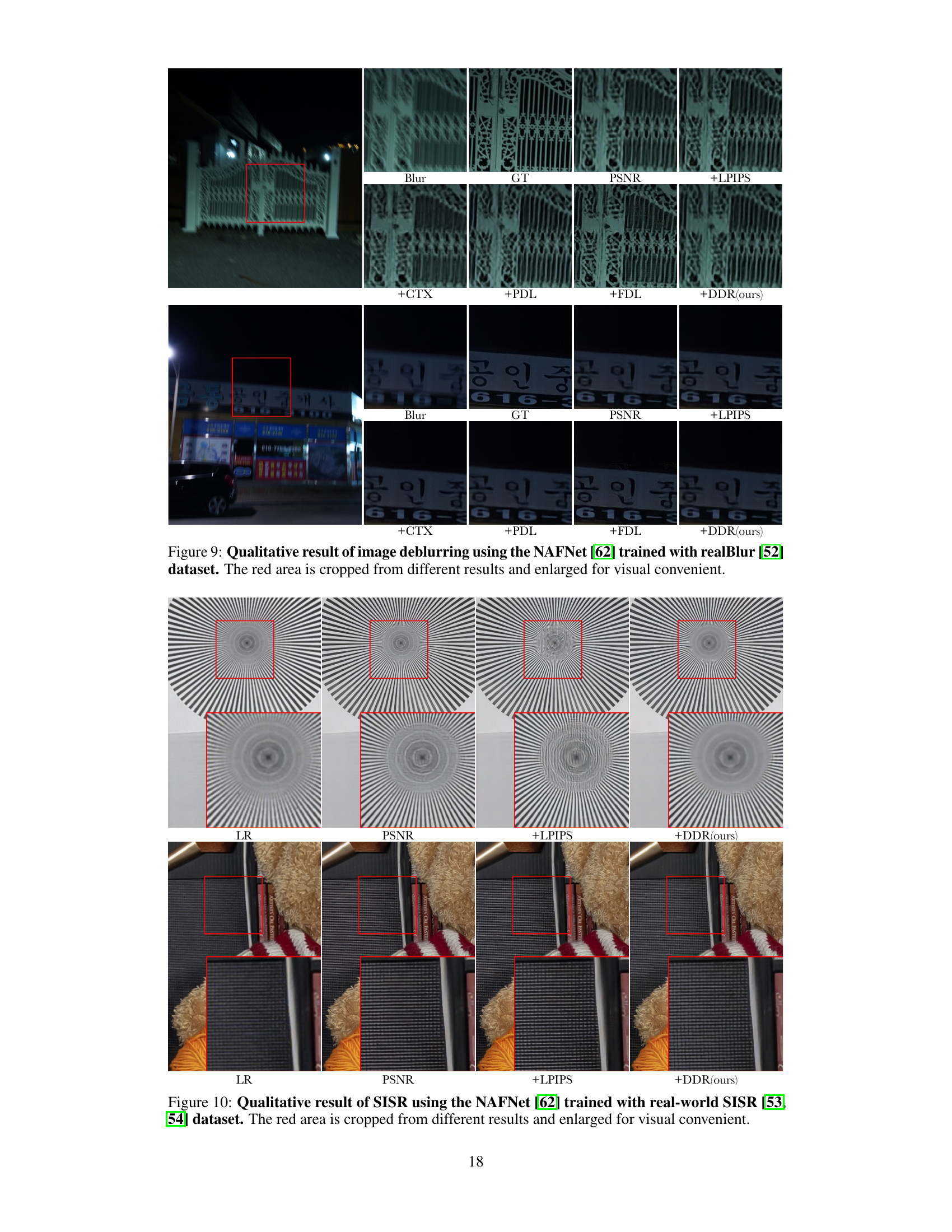

This table compares the Spearman rank correlation coefficient (SRCC) of different blind image quality assessment (BIQA) metrics with human judgment scores for blurred images from the LIVE dataset. It highlights the superior performance of the proposed DDRblur metric, which quantifies changes in deep features due to blur, showcasing its strong correlation with human perception of image quality.

In-depth insights#

DDR: A Novel Descriptor#

DDR, as a novel image descriptor, presents a unique approach to image representation by focusing on the deep feature response to various degradations. Instead of directly encoding image content, it quantifies how deep features change under controlled degradations, offering a flexible and adaptive descriptor. This approach’s strength lies in its ability to capture intricate image characteristics often missed by traditional methods, which focus primarily on content analysis. By leveraging text-driven prompts, it allows for flexible and controlled degradation synthesis, making it highly versatile across diverse applications. Its success in blind image quality assessment and as an unsupervised learning objective in image restoration demonstrates its effectiveness in tasks beyond simple image classification. However, future work needs to address potential limitations, such as the feature extractor’s understanding of low-level degradation details and the need for task-specific prompt engineering. The reliance on pre-trained networks also needs consideration. Despite these, DDR’s adaptability and strong performance make it a promising advancement in image analysis.

Feature Degradation#

The concept of “Feature Degradation” in the context of image analysis using deep learning models is crucial. It explores how the representative features extracted by a pre-trained model change under various image degradation conditions (e.g., blur, noise, compression). Understanding this degradation is key to developing robust image descriptors and improving performance in downstream tasks. The impact of degradation varies depending on image content and the type of degradation applied, highlighting the need for adaptive and flexible methods. Quantifying feature degradation allows for the creation of metrics that strongly correlate with perceived image quality, leading to advancements in blind image quality assessment. Furthermore, treating feature degradation as an unsupervised learning objective can enhance image restoration models, providing a new perspective for improving image deblurring, super-resolution, and other related image processing tasks. The effectiveness of a method in measuring and leveraging feature degradation is therefore vital for creating robust and adaptable image processing techniques.

BIQA & Restoration#

The BIQA (Blind Image Quality Assessment) and restoration sections of the paper explore the relationship between image quality and deep features. DDR (Deep Degradation Response) is proposed as a novel BIQA metric, effectively measuring changes in deep features under various degradations. The strength of DDR lies in its flexibility, adapting to different degradation types via text-driven prompts. DDR significantly outperforms existing BIQA methods across multiple datasets, demonstrating its robustness and accuracy in evaluating image quality. Furthermore, the study demonstrates the effectiveness of DDR as an unsupervised learning objective for image restoration. By incorporating DDR during model training, notable improvements are achieved in image deblurring and single-image super-resolution tasks. This suggests DDR can serve as a powerful tool for both evaluating and improving image quality, bridging the gap between perception and representation in image processing.

Text-Driven Degradation#

The concept of “Text-Driven Degradation” presents a novel approach to image manipulation and analysis. Instead of relying on predefined, pixel-level degradation methods (like applying Gaussian blur or adding noise), this technique leverages the power of large language models to control the type and intensity of degradation applied to an image. This is achieved by using textual descriptions as prompts, guiding the model to generate the appropriate degradation in the feature space of the image. This approach is inherently more flexible than traditional methods, offering granular control and allowing for the creation of novel, potentially unanticipated degradation effects. The core innovation is in applying degradation directly to the learned features of an image, bypassing the need for explicit pixel-level manipulation. This approach likely offers improved adaptability to diverse image content, making it less sensitive to variations in image texture and style. The text-driven nature allows users to specify the desired degradation with natural language, enabling greater intuitive control and potentially automating the laborious process of manually generating diverse degraded images. This innovative method has significant implications for various computer vision tasks, including image quality assessment, restoration, and the creation of more realistic synthetic training datasets.

Future Work: DDR+#

DDR+, as a future extension of Deep Degradation Response (DDR), presents exciting avenues for enhancing image analysis and manipulation. Extending DDR to videos would enable temporal analysis of degradation effects, opening doors to improved video restoration and quality assessment. Integrating advanced deep learning architectures, such as transformers or diffusion models, could boost DDR’s performance, allowing for a more nuanced and accurate quantification of degradation. Exploring diverse degradation types beyond those currently considered in DDR could significantly broaden its applicability, encompassing complex scenarios like motion blur or atmospheric effects. Developing a more comprehensive evaluation framework is also critical, comparing DDR+ against a wider range of existing methods and exploring its potential on diverse image datasets and applications. Investigating DDR+’s potential in various computer vision tasks, such as image retrieval and synthesis, is crucial to demonstrate its wide-ranging influence. The core challenge will lie in designing robust and efficient algorithms, but the potential benefits in diverse areas warrant further exploration.

More visual insights#

More on figures

This figure shows the distribution of Deep Degradation Response (DDR) values for images in the LIVEitw dataset under different degradation levels. The x-axis represents the DDR value, and the y-axis represents the number of images with that DDR value. Four different degradation methods are compared: low, optimal, high levels of handcrafted degradation applied directly to the pixel domain of the image, and an adaptive method using text-driven feature-space degradation. The figure demonstrates that the adaptive method produces a DDR distribution similar to the optimal handcrafted method, suggesting its effectiveness in achieving optimal performance for Blind Image Quality Assessment (BIQA).

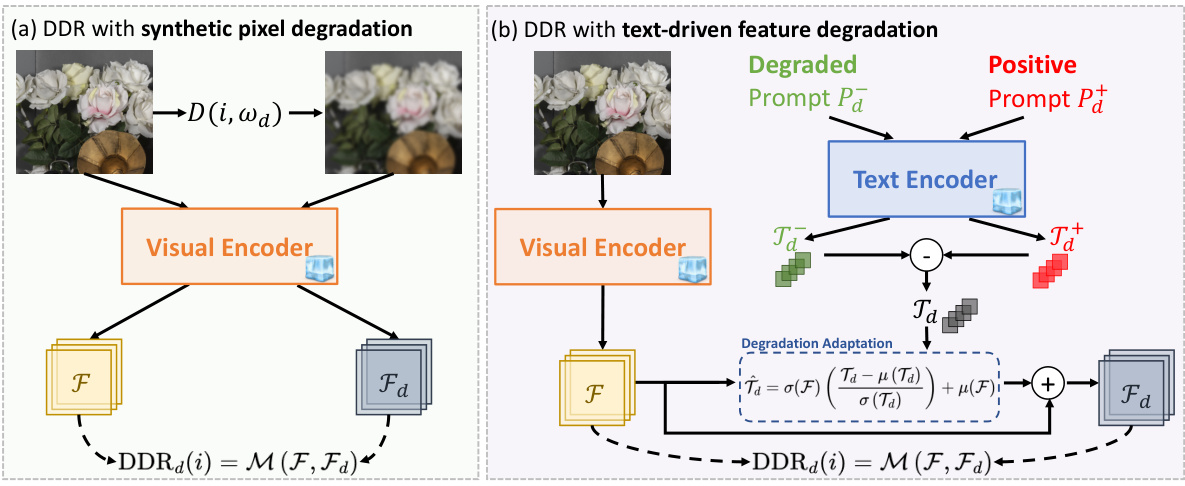

This figure illustrates the two methods used to compute the Deep Degradation Response (DDR). Method (a) uses handcrafted degradation in the pixel domain, applying degradation directly to the image and then extracting features. Method (b) uses text-driven feature degradation, leveraging a text encoder to generate a degradation representation from text prompts and then fusing this representation with the image features to create degraded features. The DDR is then calculated as a distance metric between the original and degraded features.

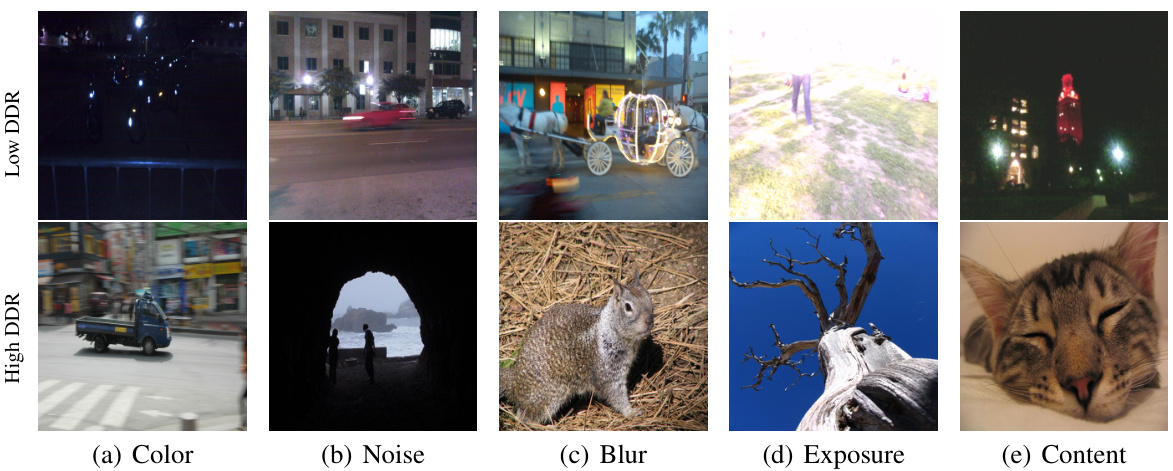

This figure shows example images with high and low DDR values for five different degradation types (color, noise, blur, exposure, and content). The DDR was calculated using a text-driven degradation method in the feature domain. Images with lower DDR values for a specific degradation type show a higher degree of that degradation, suggesting that DDR is correlated with the level of degradation present.

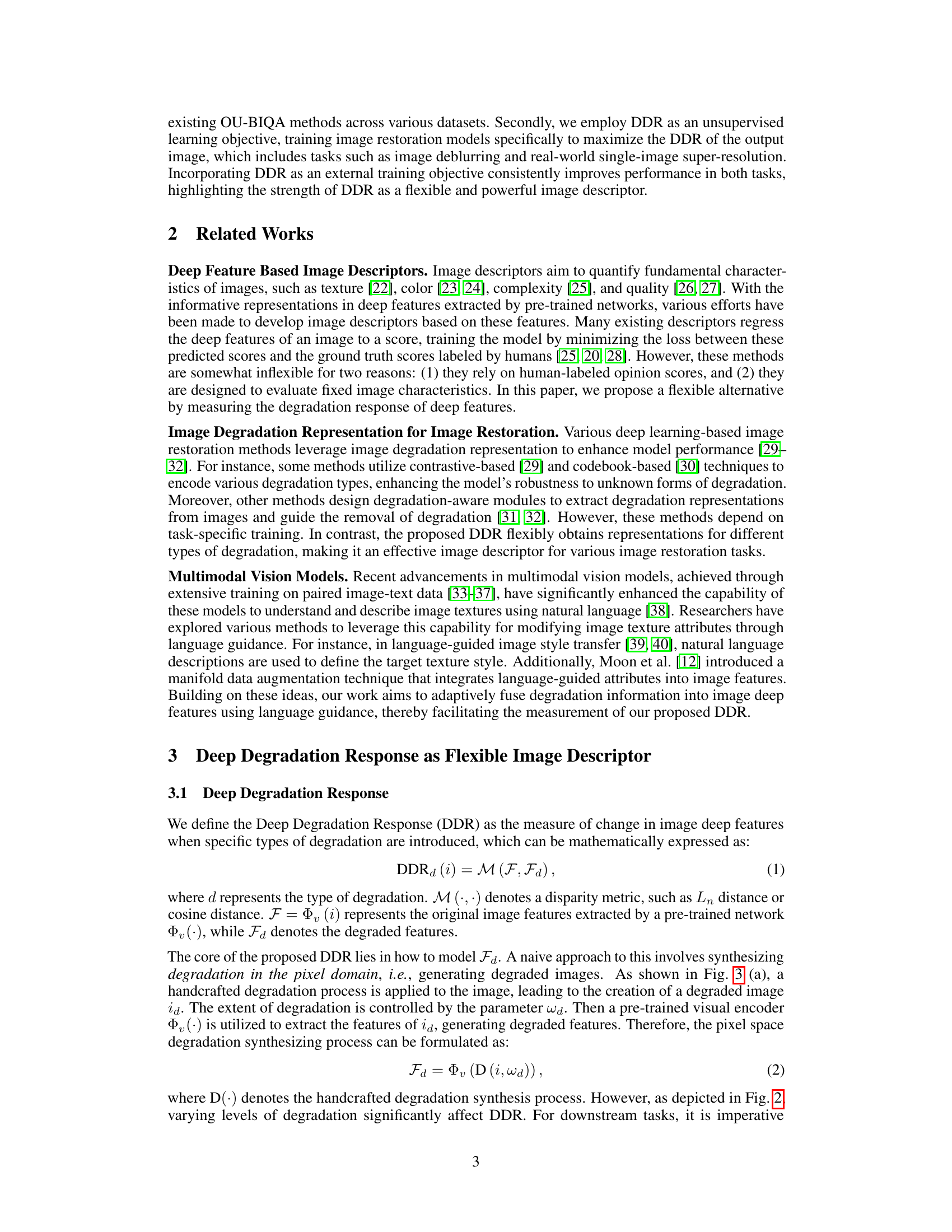

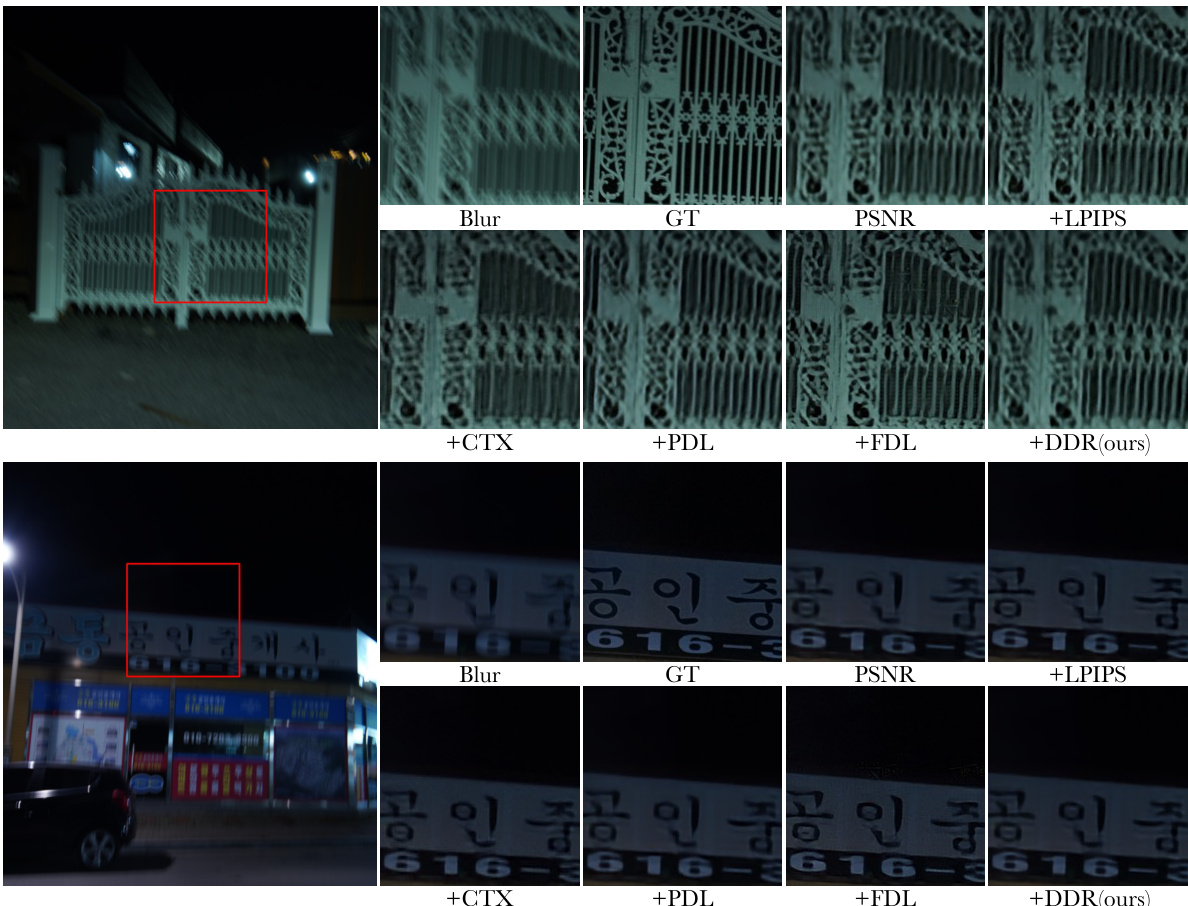

This figure shows a qualitative comparison of image deblurring results using different loss functions. The top row shows an example image and the results obtained using PSNR loss alone, PSNR+LPIPS, PSNR+CTX, PSNR+PDL, PSNR+FDL, and PSNR+DDR (the proposed method). The bottom row shows zoomed-in portions of the same results, highlighting the difference in texture and artifacts. The addition of DDR as a loss function leads to more natural-looking results with fewer artifacts.

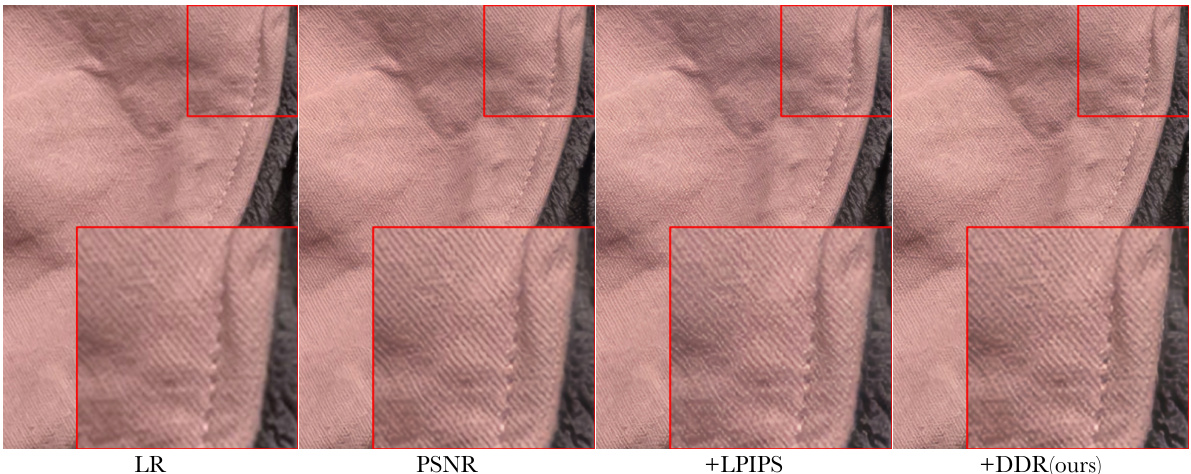

This figure shows a qualitative comparison of single image super-resolution (SISR) results using different loss functions. The leftmost image is the low-resolution (LR) input. The next three images show results using PSNR loss alone, PSNR loss combined with LPIPS perceptual loss, and PSNR loss combined with the proposed DDR loss, respectively. Red boxes highlight areas where the DDR loss produces noticeably sharper and more detailed textures compared to other methods.

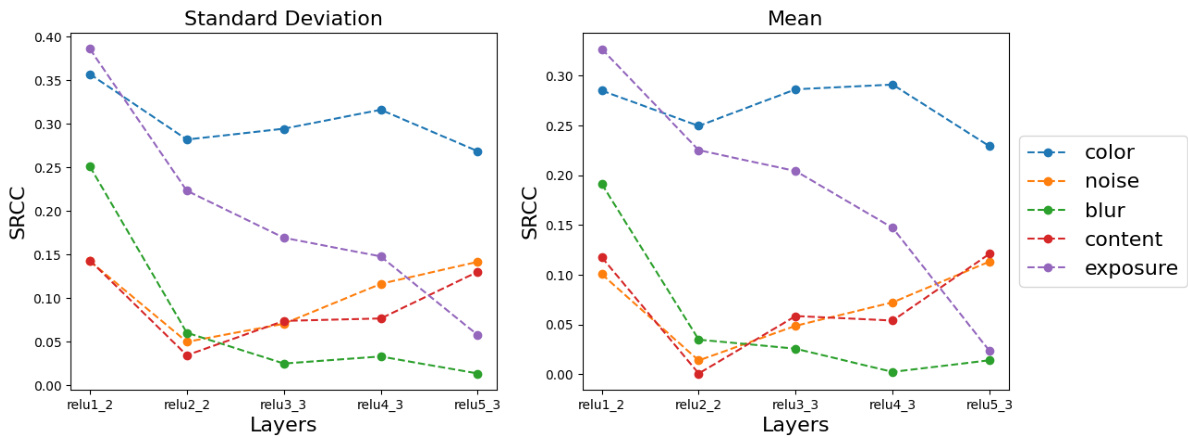

This figure shows the Spearman Rank Correlation Coefficient (SRCC) between the Deep Degradation Response (DDR) and the statistics of deep features extracted from different layers of a pre-trained VGG network. The statistics considered are the mean and standard deviation of the deep features. The figure visualizes how the correlation between DDR and deep feature statistics changes depending on the layer of the network (relu1_2, relu2_2, relu3_3, relu4_3, and relu5_3) and the type of degradation used (color, noise, blur, content, and exposure). The purpose is to show how the DDR correlates with different deep feature characteristics and how this relationship varies based on the type of image degradation.

This figure shows a qualitative comparison of image deblurring results using the NAFNet model trained on the GoPro dataset. Four different loss functions were compared: PSNR (peak signal-to-noise ratio), LPIPS (Learned Perceptual Image Patch Similarity), CTX (Contextual Loss), PDL (Projected Distribution Loss), FDL (Focal Frequency Loss), and the proposed DDR (Deep Degradation Response) loss. The results are displayed as image pairs, showing the blurred input image, ground truth, and outputs from each of the loss functions. A red box highlights a region of interest for easier comparison of the deblurring effects.

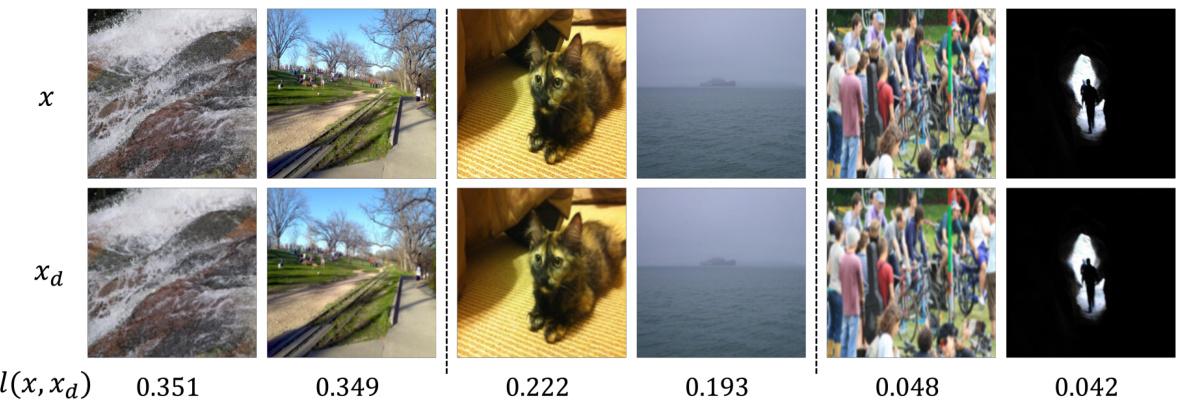

This figure shows the qualitative results of image deblurring using the NAFNet model trained on the RealBlur dataset. It compares the results obtained using different loss functions: PSNR (Peak Signal-to-Noise Ratio), LPIPS (Learned Perceptual Image Patch Similarity), CTX (Contextual Loss), PDL (Projected Distribution Loss), FDL (Focal Frequency Loss), and DDR (Deep Degradation Response). The red boxes highlight zoomed-in areas for a detailed comparison. The image shows DDR loss function outperforms others for producing higher-quality results.

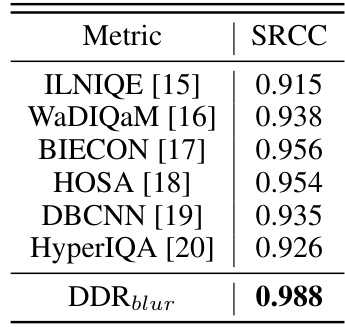

This figure shows a qualitative comparison of single image super-resolution (SISR) results obtained using different loss functions. The top row displays a close-up of a radial pattern image, and the bottom row shows a close-up of a textured image. For each image, four versions are presented: the low-resolution input (LR), results optimized using the PSNR loss function, results optimized using the LPIPS perceptual loss function, and results optimized using the proposed DDR loss function. The red boxes highlight the regions where the differences are most apparent. This figure visually demonstrates that using DDR as a loss function yields more natural and sharper results than using PSNR or LPIPS alone.

More on tables

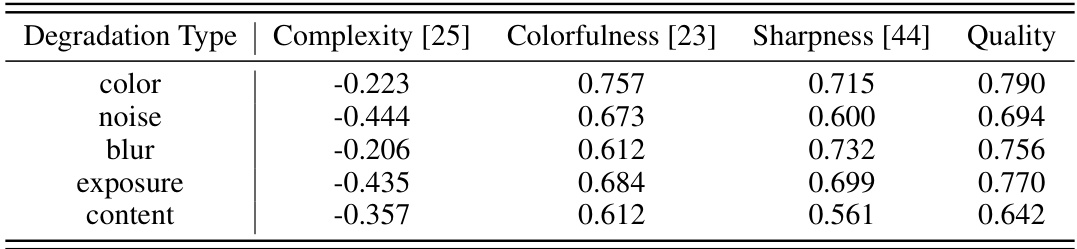

This table shows the Spearman’s Rank Correlation Coefficient (SRCC) between the Deep Degradation Response (DDR) and four image characteristics (Complexity, Colorfulness, Sharpness, and Quality). It demonstrates that the correlation between DDR and these characteristics varies depending on the type of degradation used to calculate DDR (color, noise, blur, exposure, content). For example, DDR correlated strongly with colorfulness when using color degradation and with sharpness when using blur degradation.

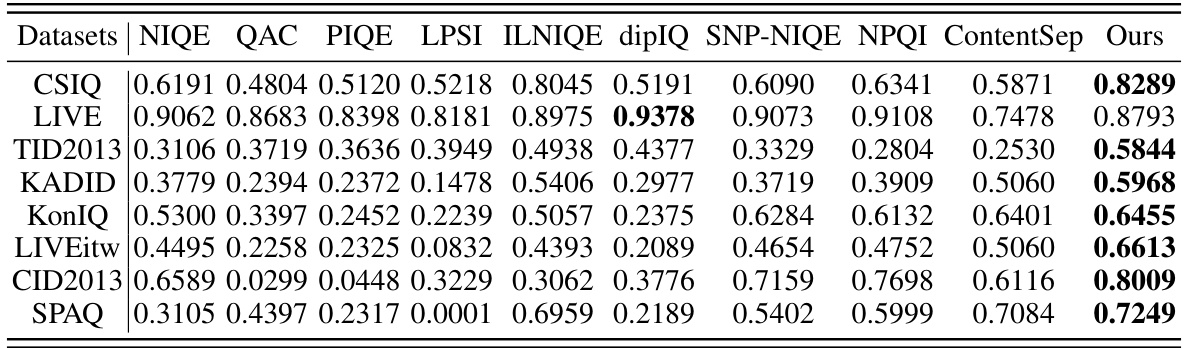

This table presents a quantitative comparison of the proposed DDR method against several state-of-the-art Opinion-Unaware Blind Image Quality Assessment (OU-BIQA) methods. The comparison is done across eight publicly available datasets using the Spearman Rank Correlation Coefficient (SRCC) as the evaluation metric. The table highlights the superior performance of DDR across diverse datasets, showcasing its robustness and efficacy as an image quality assessment tool.

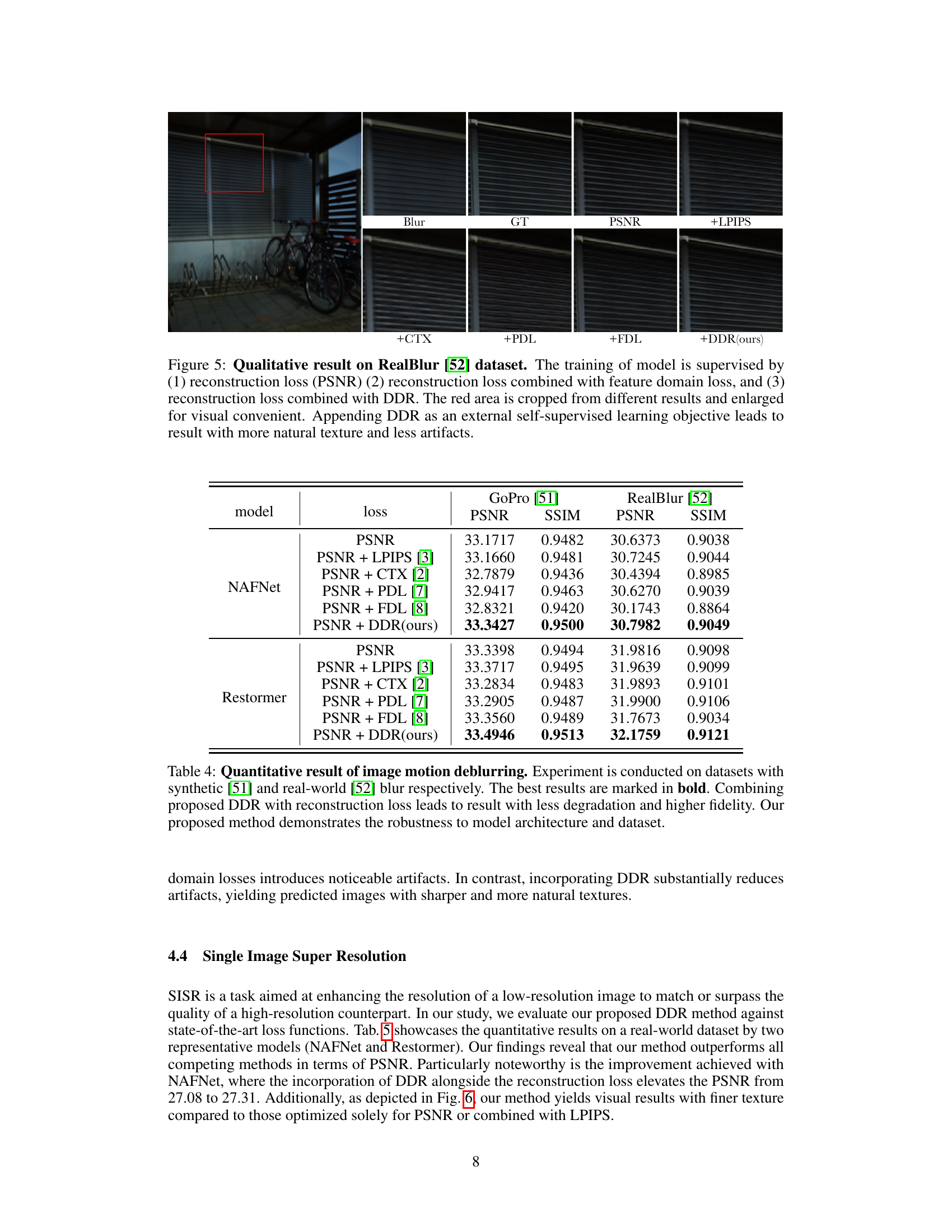

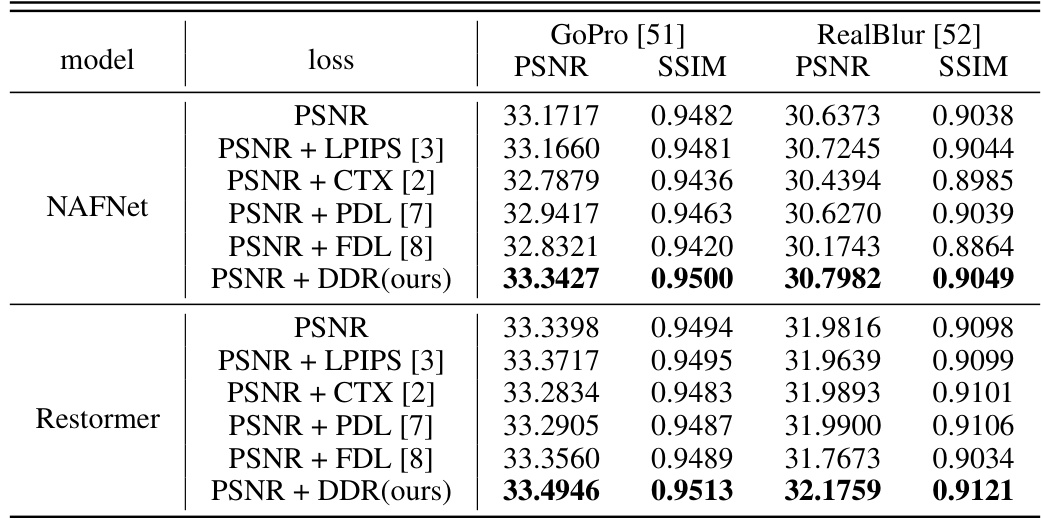

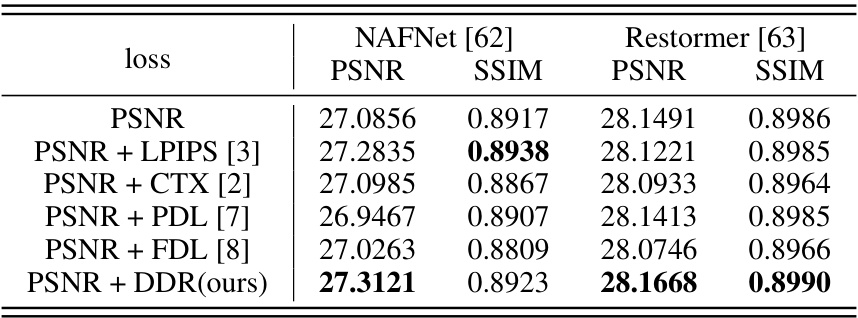

This table presents the quantitative results of image motion deblurring experiments using two different datasets (GoPro [51] and RealBlur [52]). It compares the performance of a standard PSNR loss function against variations including other feature domain losses (LPIPS, CTX, PDL, FDL) and the proposed DDR method. The results show that adding DDR to the PSNR loss consistently improves both PSNR and SSIM scores, demonstrating its effectiveness in enhancing image quality and robustness across various model architectures.

This table presents the quantitative results of single image super-resolution (SISR) experiments conducted on real-world datasets ([53, 54]). It compares the performance of different loss functions, including PSNR (Peak Signal-to-Noise Ratio) and SSIM (Structural Similarity Index), when used for training the NAFNet and Restormer models. The results show the PSNR and SSIM scores achieved by using PSNR alone, PSNR combined with LPIPS, CTX, PDL, FDL, and finally, PSNR combined with the proposed DDR method.

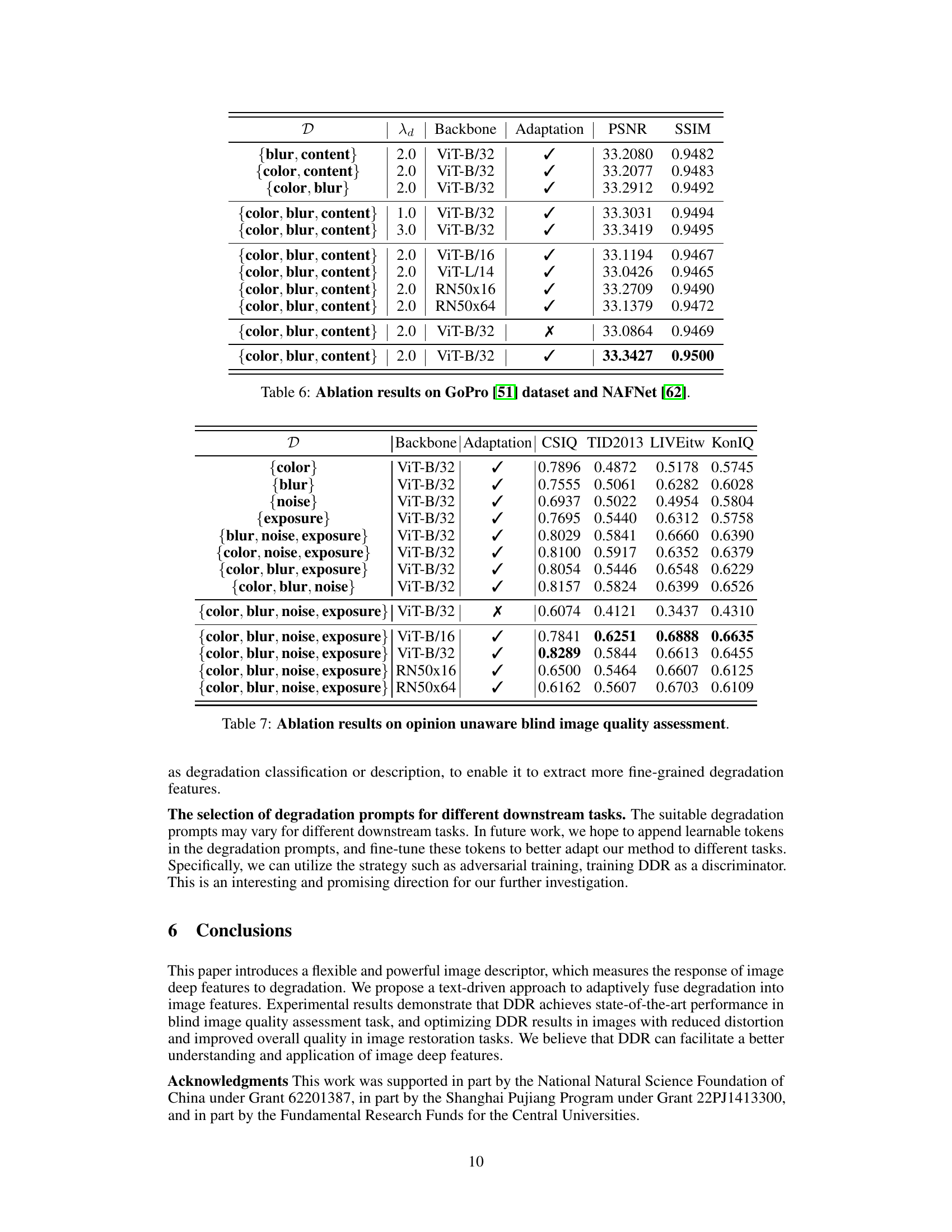

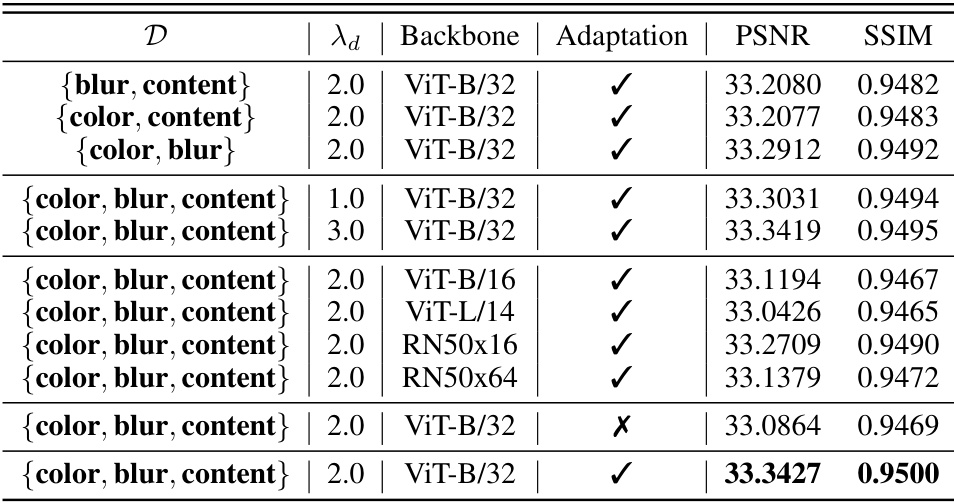

This table presents ablation study results focusing on the impact of different factors on the performance of image deblurring using the NAFNet model trained on the GoPro dataset. The factors varied include the set of degradation types considered (D), the weight of the DDR loss (λd), the backbone network used for feature extraction, and whether degradation adaptation was applied. The table shows the PSNR and SSIM scores achieved under different configurations, demonstrating the contribution of each component to the overall performance.

This table presents the ablation study results for the opinion-unaware blind image quality assessment task. It shows the impact of different factors on the performance of the proposed DDR method, including the selection of degradation types, the backbone architecture, and the usage of degradation adaptation strategy. The results are presented in terms of SRCC scores across four datasets (CSIQ, TID2013, LIVEitw, and KonIQ).

This table presents the Spearman’s Rank Correlation Coefficient (SRCC) between the Deep Degradation Response (DDR) and four image characteristics (complexity, colorfulness, sharpness, and quality) for five different degradation types (color, noise, blur, exposure, and content). It shows how the correlation between DDR and these image attributes changes depending on the type of image degradation considered.

Full paper#