↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Deep neural networks (DNNs) are vulnerable to adversarial attacks—maliciously crafted inputs designed to fool the model. Current imperceptible attacks often rely on incorporating existing paradigms with perception-based losses or generative models, but these can be complex and may not guarantee imperceptibility. The goal is to create attacks that are both effective and undetectable to the human eye.

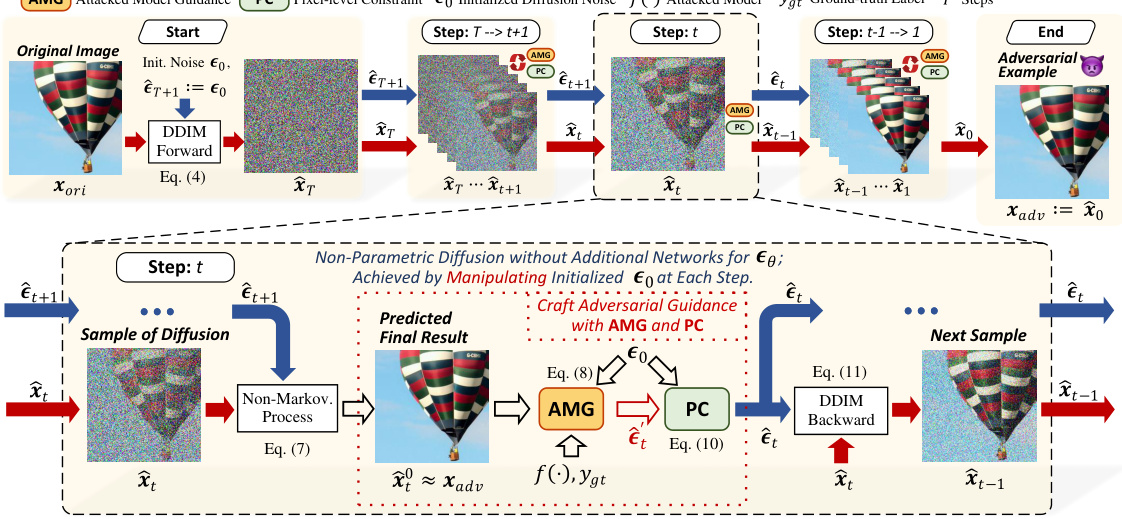

AdvAD offers a novel approach, conceptualizing attacks as a non-parametric diffusion process. Instead of using denoising or generative abilities of diffusion models, AdvAD leverages two modules—Attacked Model Guidance (AMG) and Pixel-level Constraint (PC)—to guide a diffusion process from initial noise to an adversarial example. This approach achieves high attack success rates with significantly lower perturbation strength and improved imperceptibility, outperforming state-of-the-art methods. An enhanced version, AdvAD-X, further demonstrates the extreme capabilities under ideal conditions.

Key Takeaways#

Why does it matter?#

This paper is crucial because it introduces a novel, non-parametric diffusion model for imperceptible adversarial attacks, addressing a critical challenge in adversarial machine learning. Its theoretical framework, high efficacy, and low perturbation strength make it relevant to the broader AI security community, opening avenues for more robust defenses and enhanced attack models. Researchers can leverage this innovative approach to improve the design of both attacks and defenses, thereby contributing to a more secure AI ecosystem.

Visual Insights#

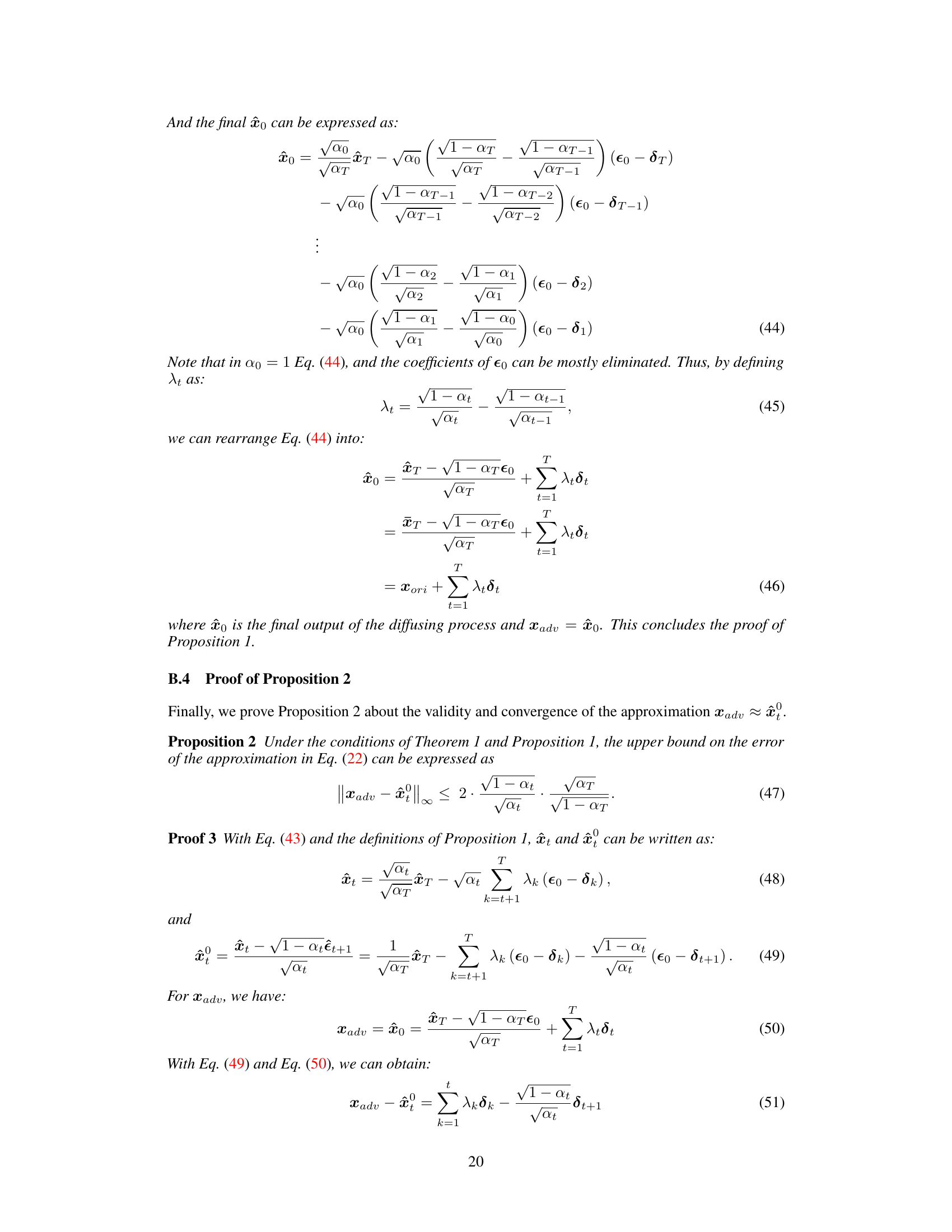

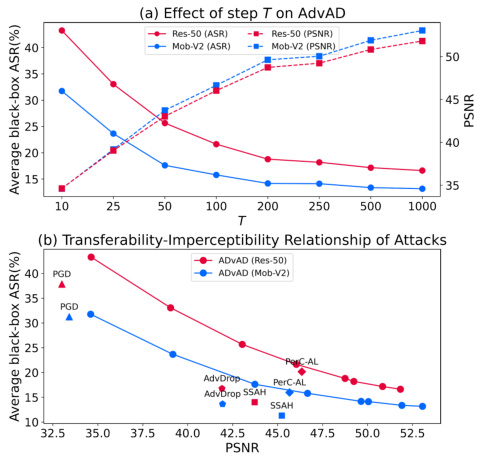

This figure illustrates the AdvAD framework. It shows how the attack process is modeled as a non-parametric diffusion process, starting from an initial noise and gradually evolving towards the adversarial example. At each step, the AMG (Attacked Model Guidance) module uses the attacked model to craft subtle adversarial guidance, which is then refined by the PC (Pixel-level Constraint) module. This ensures that the diffusion process remains close to the original image, maintaining imperceptibility while still achieving a successful attack. The figure visually depicts the process using a hot air balloon as the input image.

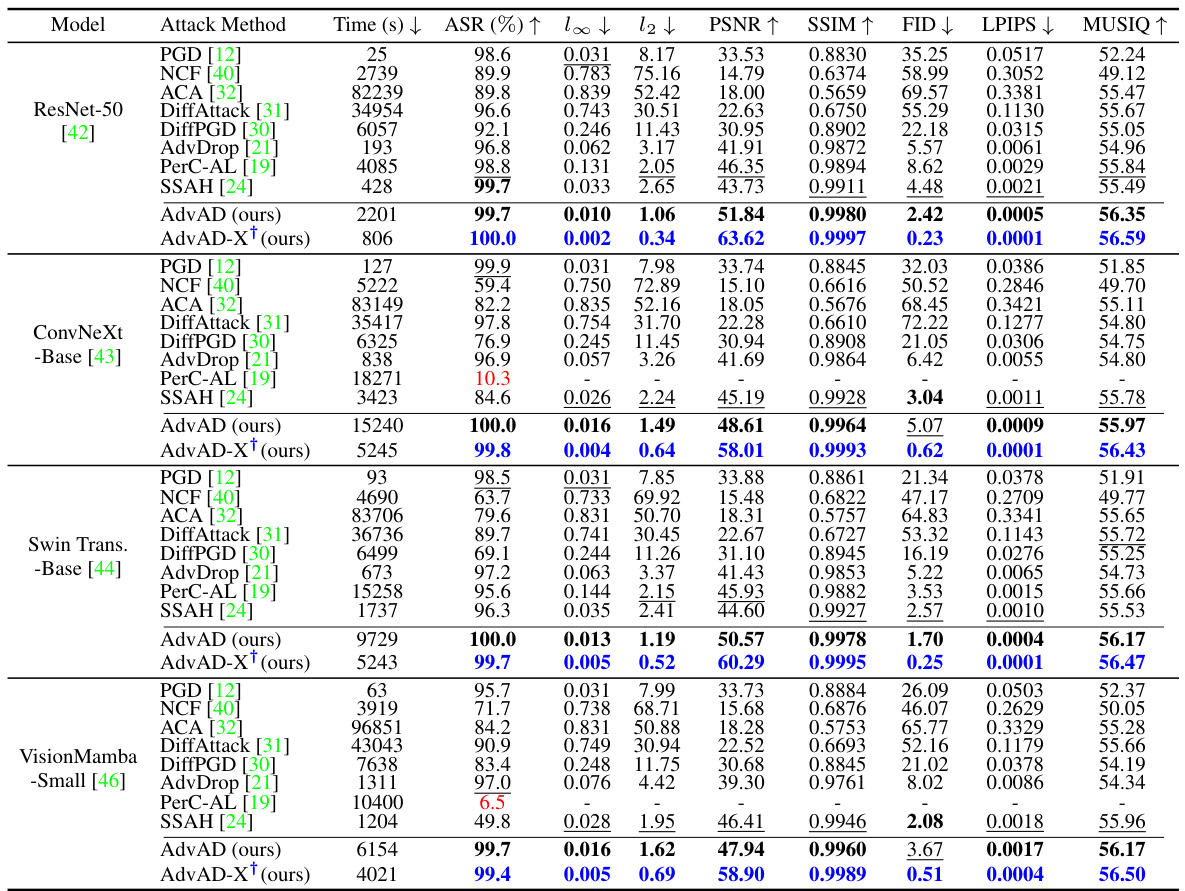

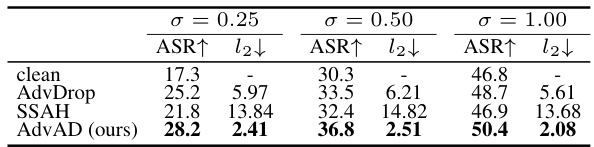

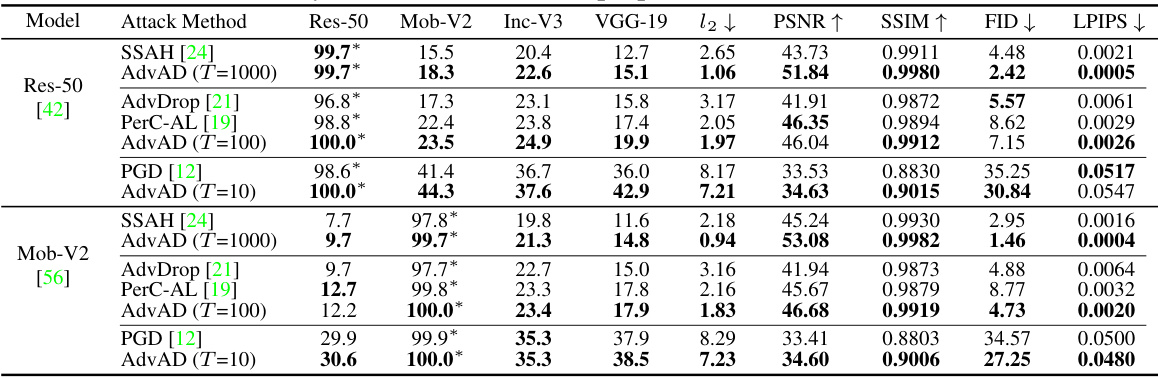

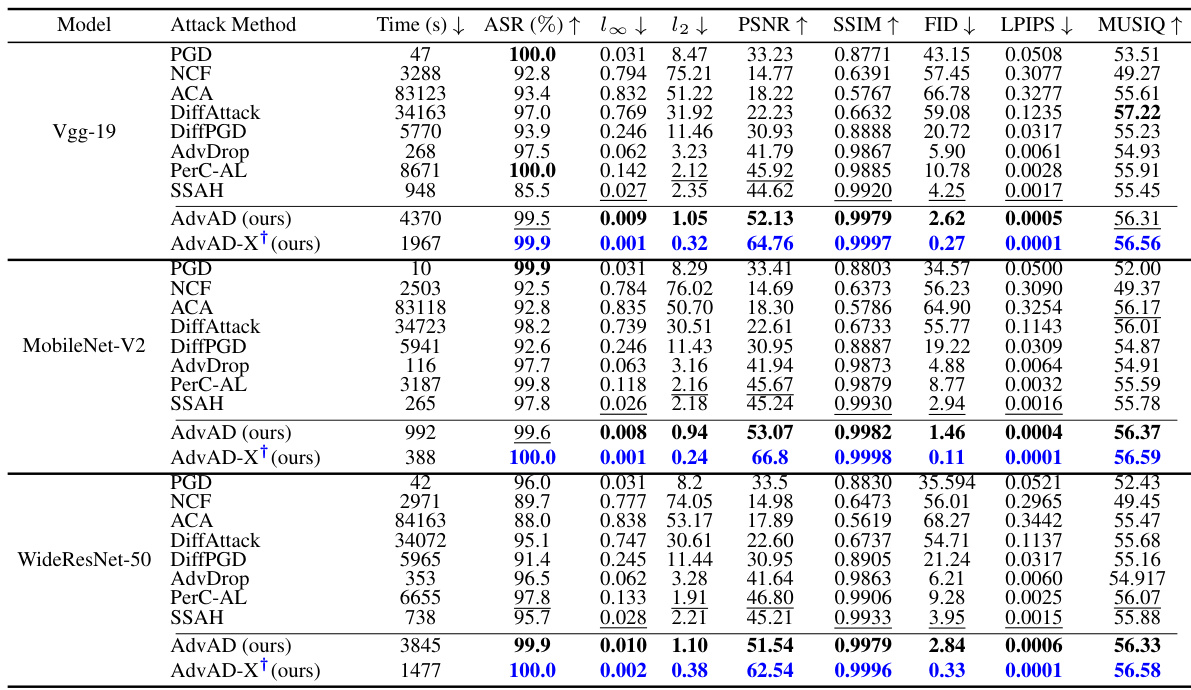

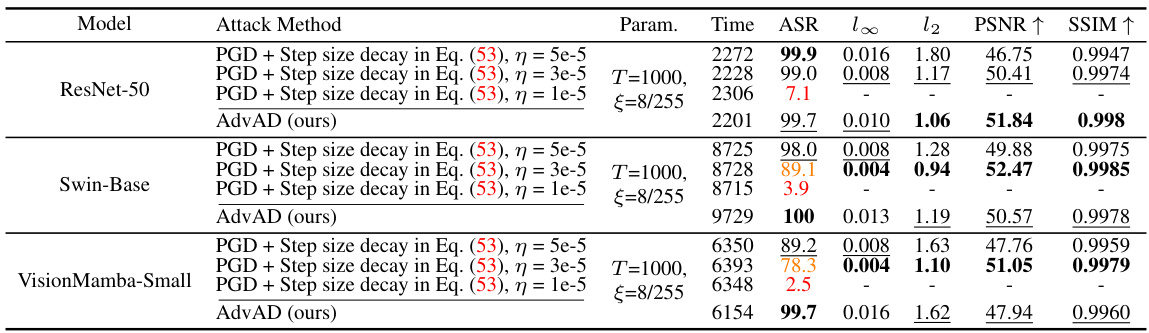

This table presents a comparison of different attack methods against four different models (ResNet-50, ConvNeXt-Base, Swin Transformer-Base, VisionMamba-Small). For each attack and model, it shows the attack success rate (ASR), L-infinity and L2 perturbation distances, PSNR, SSIM, FID, LPIPS, and MUSIQ scores, providing a comprehensive evaluation of attack efficacy and imperceptibility. Running times are also provided. Results marked with † are from AdvAD-X using floating-point data in an ideal scenario.

In-depth insights#

AdvAD: Non-parametric Diffusion#

The proposed AdvAD framework innovatively models adversarial attacks as a non-parametric diffusion process, diverging from traditional gradient-based or loss-function-driven methods. This approach leverages the theoretical underpinnings of diffusion models without relying on their neural network components. AdvAD iteratively refines an initial noise distribution, gradually steering it towards an adversarial example through subtle yet effective guidance crafted using only the target model. This inherently minimizes perturbation strength, enhancing imperceptibility. The framework’s theoretical grounding ensures efficacy while reducing computational complexity, surpassing existing imperceptible attack methods in both attack success rate and perceptual fidelity. Key advantages include reduced perturbation magnitude, improved imperceptibility, and simplified model architecture, leading to improved efficiency.

Imperceptible Attacks#

Imperceptible attacks, a crucial area in adversarial machine learning, focus on generating adversarial examples that are visually indistinguishable from benign inputs while still fooling the target model. The core challenge lies in balancing attack effectiveness with the imperceptibility constraint. Various strategies exist, such as incorporating perceptual losses to guide the perturbation process, or leveraging generative models to craft realistic-looking modifications. Non-parametric diffusion models present an intriguing novel approach, offering theoretical grounding and the potential for intrinsically lower perturbation strength. However, the trade-off between attack success rate and imperceptibility remains a key limitation, requiring careful design and parameter tuning. Future research should explore further refinements of these methods, particularly focusing on enhancing transferability and addressing the robustness of defense mechanisms against these subtle attacks.

AMG & PC Modules#

The AMG (Attacked Model Guidance) and PC (Pixel-level Constraint) modules are central to the AdvAD framework’s novel approach to adversarial attacks. AMG leverages the attacked model itself, without needing additional networks, to craft subtle yet effective adversarial guidance at each diffusion step. This is a significant departure from traditional methods relying on gradient calculations or perception-based losses. PC ensures the modified diffusion trajectory remains close to the original, maintaining imperceptibility by constraining the noise at each step. The synergy between AMG and PC allows for a theoretically-grounded, non-parametric diffusion process that guides the transformation of an image to an adversarial example with minimal perturbation. This design is computationally efficient and produces attacks with high attack success rates and superior imperceptibility compared to existing methods. The combined effect creates imperceptible adversarial examples with lower perturbation strength, a key improvement over prior art.

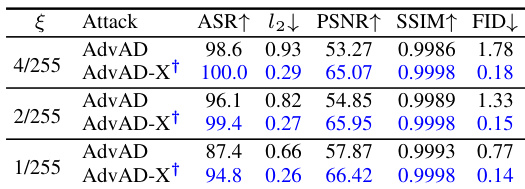

AdvAD-X Enhancements#

The AdvAD-X enhancements section would delve into the improvements made to the base AdvAD model to achieve superior performance. This would likely involve a discussion of novel strategies, perhaps focusing on dynamic guidance injection (DGI), which would adaptively reduce the computational cost by selectively skipping the injection of adversarial guidance in certain steps of the diffusion process. Another key enhancement could be CAM assistance (CA) which would incorporate Class Activation Maps (CAMs) to further suppress perturbation strength in non-critical image regions, effectively making attacks harder to detect while maintaining high attack success rates. Theoretical analysis of these strategies and their combined effect on imperceptibility and efficiency would be crucial, potentially demonstrating how AdvAD-X manages to achieve an extreme level of performance under ideal experimental conditions by reducing the adversarial perturbation to near imperceptible levels. Overall, this section would present a detailed explanation of the architectural and methodological changes that elevate AdvAD-X’s effectiveness compared to its predecessor.

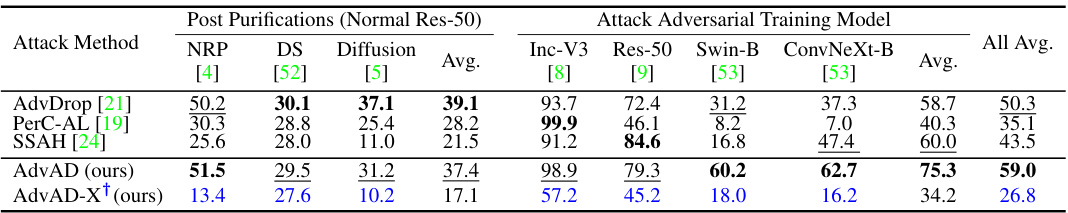

Robustness & Transferability#

The robustness and transferability of adversarial attacks are critical aspects of evaluating their effectiveness. Robustness refers to the attack’s resilience against defensive mechanisms, such as adversarial training or input transformations. A robust attack maintains its effectiveness even when the target model employs defenses. Transferability, on the other hand, measures the attack’s ability to generalize across different models. A transferable attack crafted against one model is likely to be effective against other similar models. These two properties are often intertwined; a highly transferable attack often exhibits a degree of robustness, though the converse isn’t necessarily true. A comprehensive evaluation requires analyzing both aspects, as attacks with high transferability but low robustness may be easily mitigated by defenses, whereas highly robust but non-transferable attacks might be model-specific and less broadly impactful.

More visual insights#

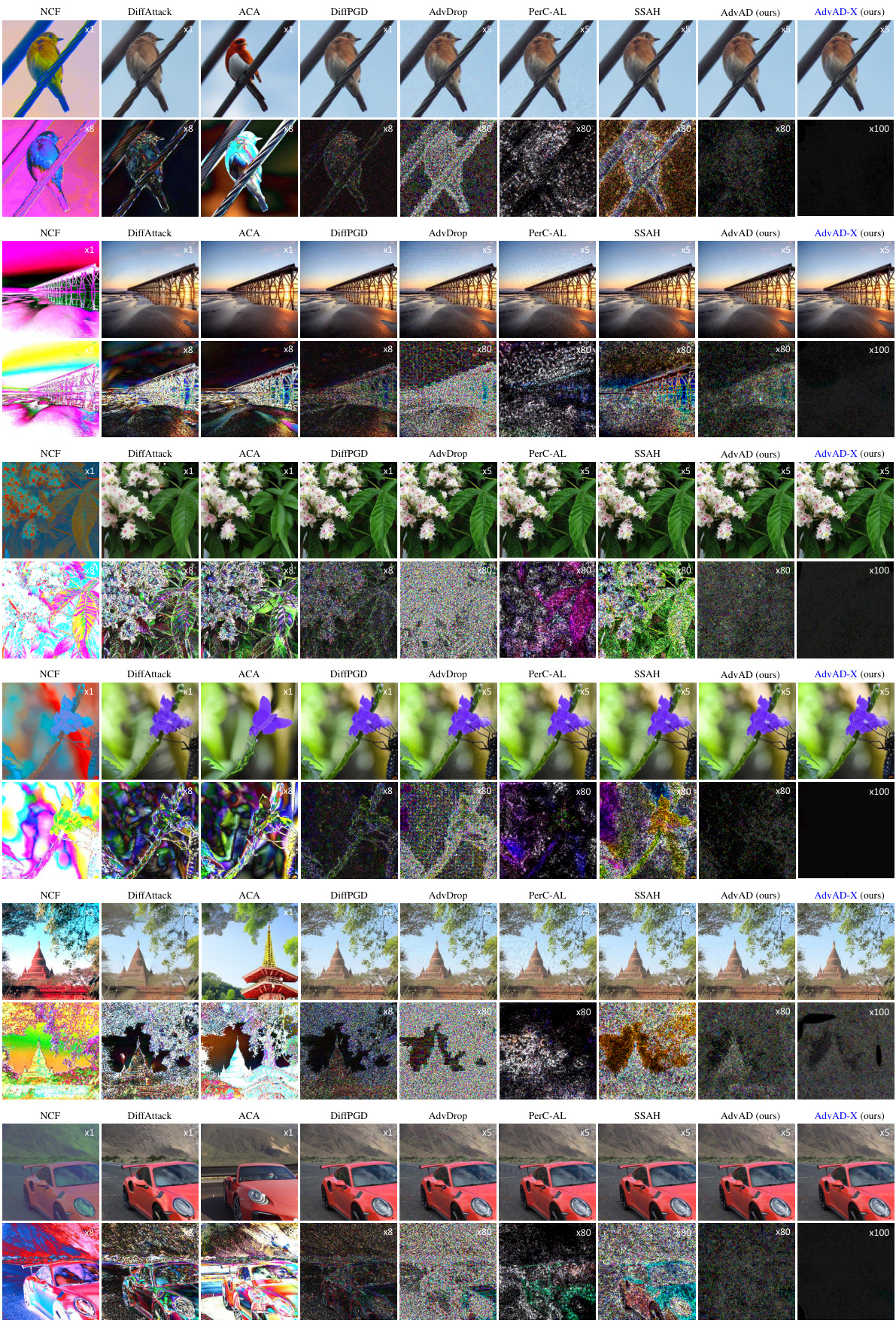

More on figures

This figure visualizes the adversarial examples and their corresponding perturbations generated by nine different imperceptible attack methods. The perturbations are amplified for better visibility. The figure aims to demonstrate the differences in the visual quality and imperceptibility of the attacks, showing how some attacks result in more noticeable changes than others. It is important to zoom in to fully appreciate the details at the original image resolution.

This figure shows the robustness of different imperceptible attacks against two common image processing defenses: JPEG compression and bit-depth reduction. The x-axis represents the level of the defense (e.g., different compression ratios or bit depths). The y-axis shows the attack success rate (ASR) achieved by each attack method. The figure demonstrates the robustness of the proposed AdvAD method, which maintains high ASR even under strong defenses compared to other attack methods.

This figure illustrates the AdvAD framework, which models adversarial attacks as a non-parametric diffusion process. It starts with an initial Gaussian noise and iteratively refines it through a series of steps. At each step, two modules, Attacked Model Guidance (AMG) and Pixel-level Constraint (PC), work together. AMG uses the attacked model to craft subtle yet effective adversarial guidance, injected into the noise. PC then constrains the noise to ensure the modified trajectory stays close to the original, maintaining imperceptibility. The process continues until the final adversarial example (xadv) is generated.

This figure visualizes the values of λt and ||δt||∞, which are key components in the mathematical formulation of the AdvAD attack process. The left panel shows the values of λt, which represents the coefficient that scales the magnitude of the adversarial guidance injected at each step of the diffusion process. The right panel displays the infinity norm of δt (||δt||∞), which signifies the maximum change in the noise at each step. Both graphs show a decrease over time (t), indicating that the strength of the adversarial perturbation reduces progressively as the attack progresses, thus contributing to the imperceptibility of the adversarial examples.

This figure provides a visual representation of the AdvAD model, illustrating its non-parametric diffusion process for generating imperceptible adversarial examples. It details the steps involved, starting from an initial noise and progressively incorporating adversarial guidance via the AMG and PC modules, to reach a final adversarially conditioned distribution. The process is achieved without any additional networks, using only the attacked model.

More on tables

This table presents a comparison of different attack methods against four different models (ResNet-50, ConvNeXt-Base, Swin Transformer-Base, VisionMamba-Small). The metrics used for comparison include attack success rate (ASR), perturbation strength (l∞ and l2), PSNR, SSIM, FID, LPIPS, and MUSIQ. The running time for each attack is also given. A special note is made about AdvAD-X results which were obtained using a floating-point data type in an ideal scenario.

This table presents a comparison of different attack methods against four different models (ResNet-50, ConvNeXt-Base, Swin Transformer-Base, VisionMamba-Small). For each attack and model, the table shows the attack success rate (ASR), perturbation strength (l∞ and l2 distances), perceptual quality (PSNR, SSIM), and image quality (FID, LPIPS, MUSIQ). The results for AdvAD-X marked with a ‘+’ are obtained under ideal conditions using floating-point data.

This table presents a comparison of different attack methods (including the proposed AdvAD and AdvAD-X) against four different models (ResNet-50, ConvNeXt-Base, Swin Transformer-Base, VisionMamba-Small). It shows the attack success rate (ASR), as well as metrics evaluating the imperceptibility of the attacks, such as l-infinity and l2 distances, PSNR, SSIM, FID, LPIPS, and MUSIQ. Running times on a RTX 3090 GPU are also provided. AdvAD-X results marked with a † are from an ideal scenario using floating-point data.

This table presents a comparison of different attack methods against four different models (ResNet-50, ConvNeXt-Base, Swin Transformer-Base, VisionMamba-Small). For each attack and model, the table shows the attack success rate (ASR), l-infinity and l2 perturbation distances, PSNR, SSIM, FID, LPIPS, and MUSIQ scores. Higher ASR indicates a more successful attack, while higher PSNR, SSIM, and MUSIQ, and lower FID and LPIPS indicate better imperceptibility (i.e., the adversarial examples are more similar to the original images and harder to distinguish). The running time for each attack is also provided.

This table presents a comparison of different attack methods against four different models (ResNet-50, ConvNeXt-Base, Swin Transformer-Base, VisionMamba-Small). For each attack and model, the table shows the attack success rate (ASR), the L2 and L-infinity perturbation norms, PSNR, SSIM, FID, LPIPS, and MUSIQ scores. Higher ASR, lower perturbation norms, and higher PSNR, SSIM, and MUSIQ scores indicate more successful and imperceptible attacks. The running time for each attack is also provided. The results for AdvAD-X marked with a † are obtained using floating-point data in an ideal scenario, which is described in the paper.

This table presents a comparison of different attack methods (including the proposed AdvAD and AdvAD-X) against four different models (ResNet-50, ConvNeXt-Base, Swin Transformer-Base, VisionMamba-Small). For each attack and model combination, the table shows the attack success rate (ASR), l∞ and l2 perturbation distances, PSNR, SSIM, FID, LPIPS, and MUSIQ scores. These metrics assess the effectiveness and imperceptibility of the attacks. The running time of each attack is also provided. Results marked with † represent AdvAD-X using floating point data in an ideal scenario (described in Section 3.4 of the paper).

This table presents a comparison of different attack methods against four different models (ResNet-50, ConvNeXt-Base, Swin Transformer-Base, VisionMamba-Small). For each attack and model, the table shows the attack success rate (ASR), the L∞ and L2 distances (measuring perturbation strength), PSNR, SSIM, FID, LPIPS, and MUSIQ (metrics evaluating imperceptibility). The running time for each attack is also provided. Results marked with † indicate that AdvAD-X was run in an ideal scenario using floating-point data, not the typical 8-bit data.

This table presents a comparison of different attack methods against four different models (ResNet-50, ConvNeXt-Base, Swin Transformer-Base, VisionMamba-Small). For each attack and model, the table shows the attack success rate (ASR), l∞ and l2 distances (measures of perturbation strength), PSNR, SSIM, FID, LPIPS, and MUSIQ (measures of imperceptibility). The running time for each attack is also provided. A special note is made about the results for AdvAD-X, which used a floating point data type under ideal conditions.

This table presents a comparison of different attack methods (including the proposed AdvAD and AdvAD-X) against four different models (ResNet-50, ConvNeXt-Base, Swin Transformer-Base, VisionMamba-Small). It evaluates the attacks based on attack success rate (ASR), and several metrics related to the imperceptibility of the adversarial examples produced, including L∞ and L2 distances, PSNR, SSIM, FID, LPIPS, and MUSIQ. Running times on an RTX 3090 GPU are also provided. AdvAD-X results marked with a † and in blue were obtained using floating point data in an ideal scenario.

Full paper#