↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Strategic classification, where agents manipulate features to achieve favorable outcomes, typically assumes agents have complete knowledge of the deployed classifier. This is unrealistic; this paper introduces a Bayesian setting where agents have a prior distribution over the classifier, and the learner can strategically reveal partial information about the classifier to agents to improve accuracy. This raises the question of how much information should be released to maximize accuracy.

The paper demonstrates that partial information release can counter-intuitively improve accuracy. The authors provide oracle-efficient algorithms for computing the best response of an agent in scenarios with low-dimensional linear classifiers or submodular cost functions. They also analyze the learner’s optimization problem, showing it to be NP-hard in the general case, but providing solutions for specific cases like continuous uniform priors.

Key Takeaways#

Why does it matter?#

This paper is crucial because it addresses a critical gap in strategic classification by considering the realistic scenario where agents have incomplete knowledge of the classifier. The findings offer novel algorithms and insights into optimal information release strategies, directly impacting the design of robust and accurate machine learning systems in various applications. The NP-hardness results highlight the theoretical challenges, and the focus on both accuracy and fairness metrics opens up exciting new avenues for research.

Visual Insights#

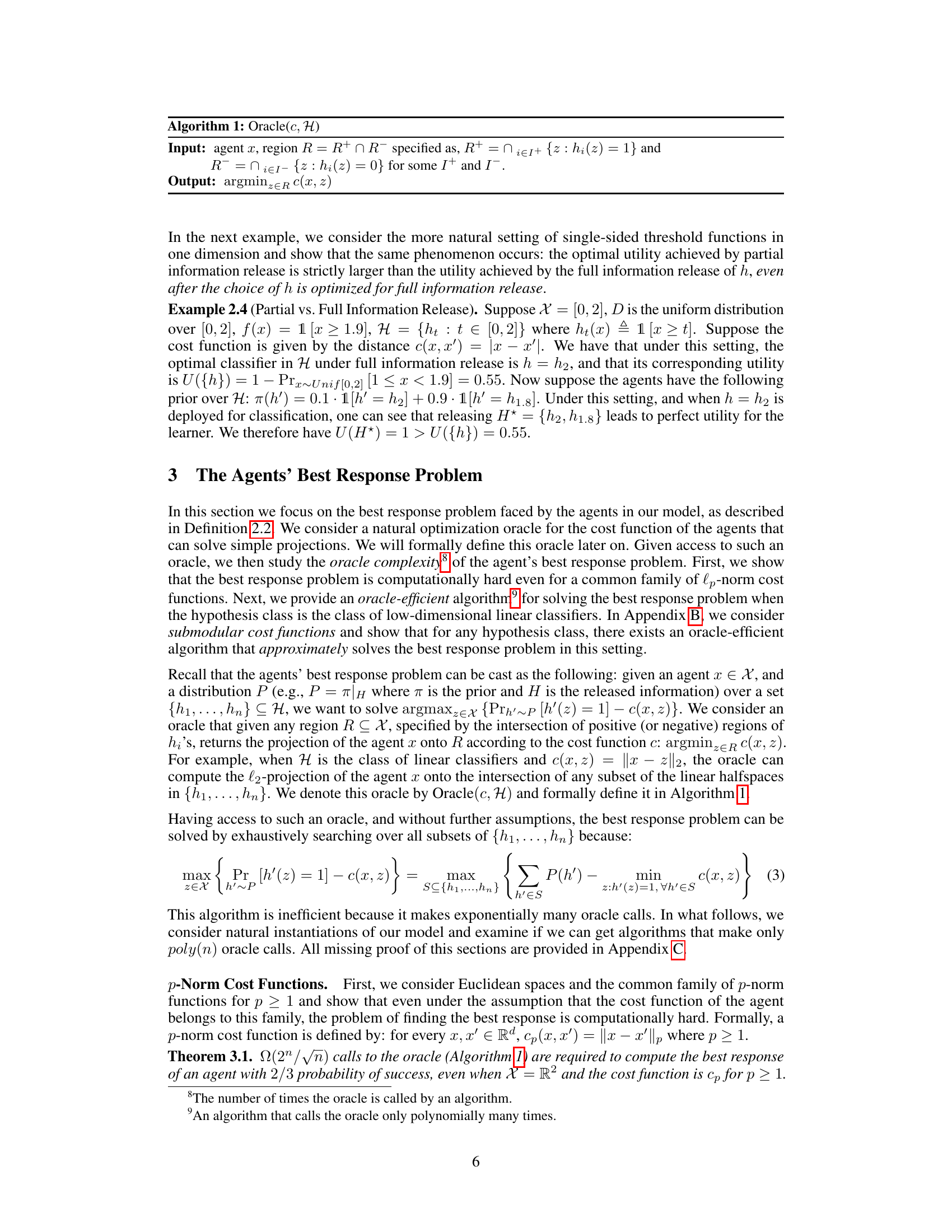

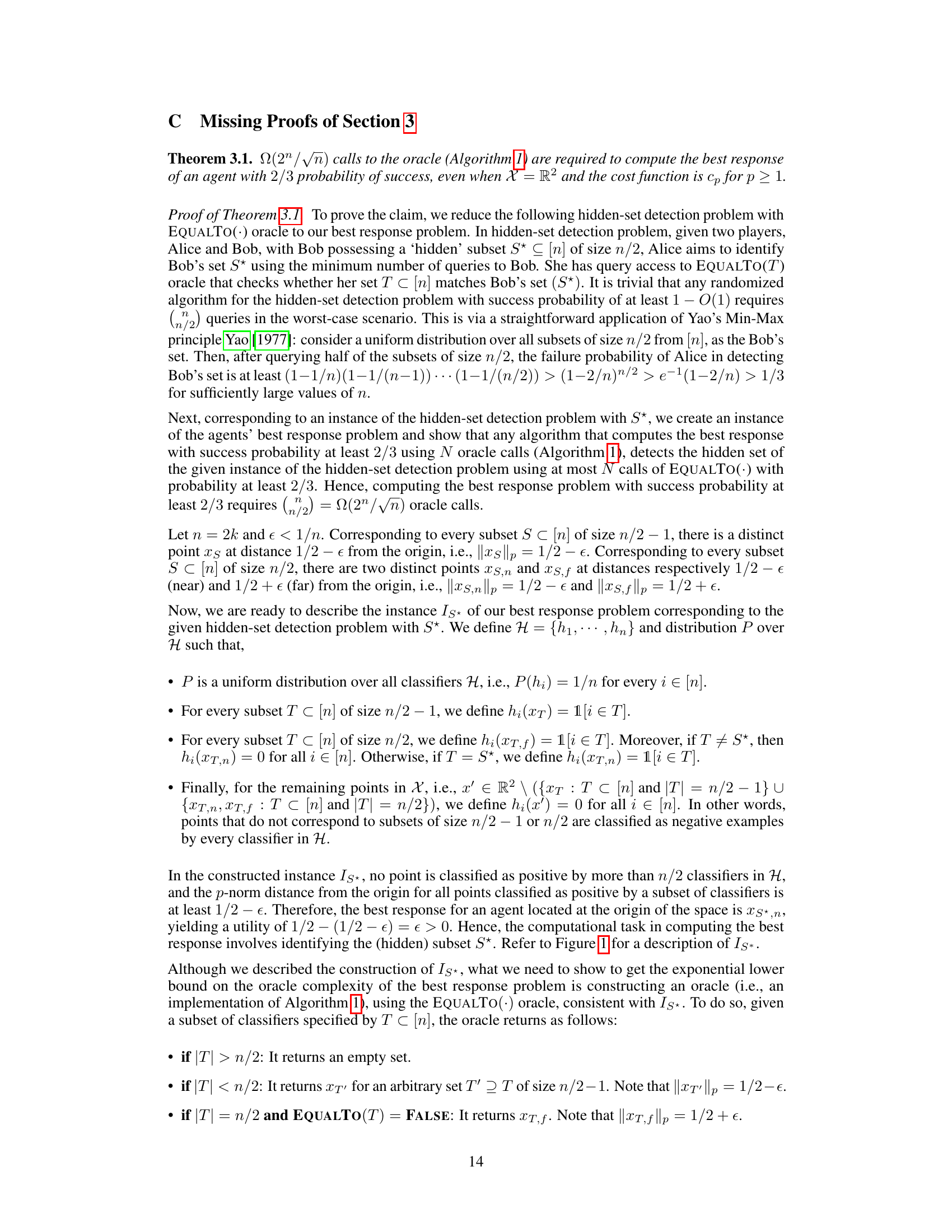

This figure illustrates the reduction from the hidden-set detection problem to the agents’ best response problem. The agent is at the origin. Blue points represent subsets of size n/2-1, and red points represent subsets of size n/2. The red point corresponding to S* is closer to the origin than the other red points, making it uniquely identifiable with sufficiently many queries.

Full paper#