↗ OpenReview ↗ NeurIPS Homepage ↗ Hugging Face ↗ Chat

TL;DR#

Current 3D Generative Adversarial Networks (GANs) rely on computationally expensive ray casting for rendering. This paper addresses this by proposing an alternative: rasterization-based 3D Gaussian splatting, which is much faster. However, simply using Gaussians in a GAN framework leads to instability and artifacts.

To overcome these issues, the authors introduce GSGAN. GSGAN employs a hierarchical multi-scale Gaussian representation, which effectively regularizes the position and scale of generated Gaussians, resulting in improved stability and reduced artifacts. The hierarchical structure allows for the generation of both coarse and fine details. The experimental results show that GSGAN achieves a significant speedup (x100) in rendering compared to the state-of-the-art 3D consistent GANs, with comparable 3D generation quality.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in 3D GANs and generative modeling. It offers a significantly faster rendering speed (100x) compared to existing methods while maintaining comparable 3D generation quality. This opens avenues for high-resolution 3D model generation previously computationally infeasible, driving advancements in various applications like virtual reality and gaming.

Visual Insights#

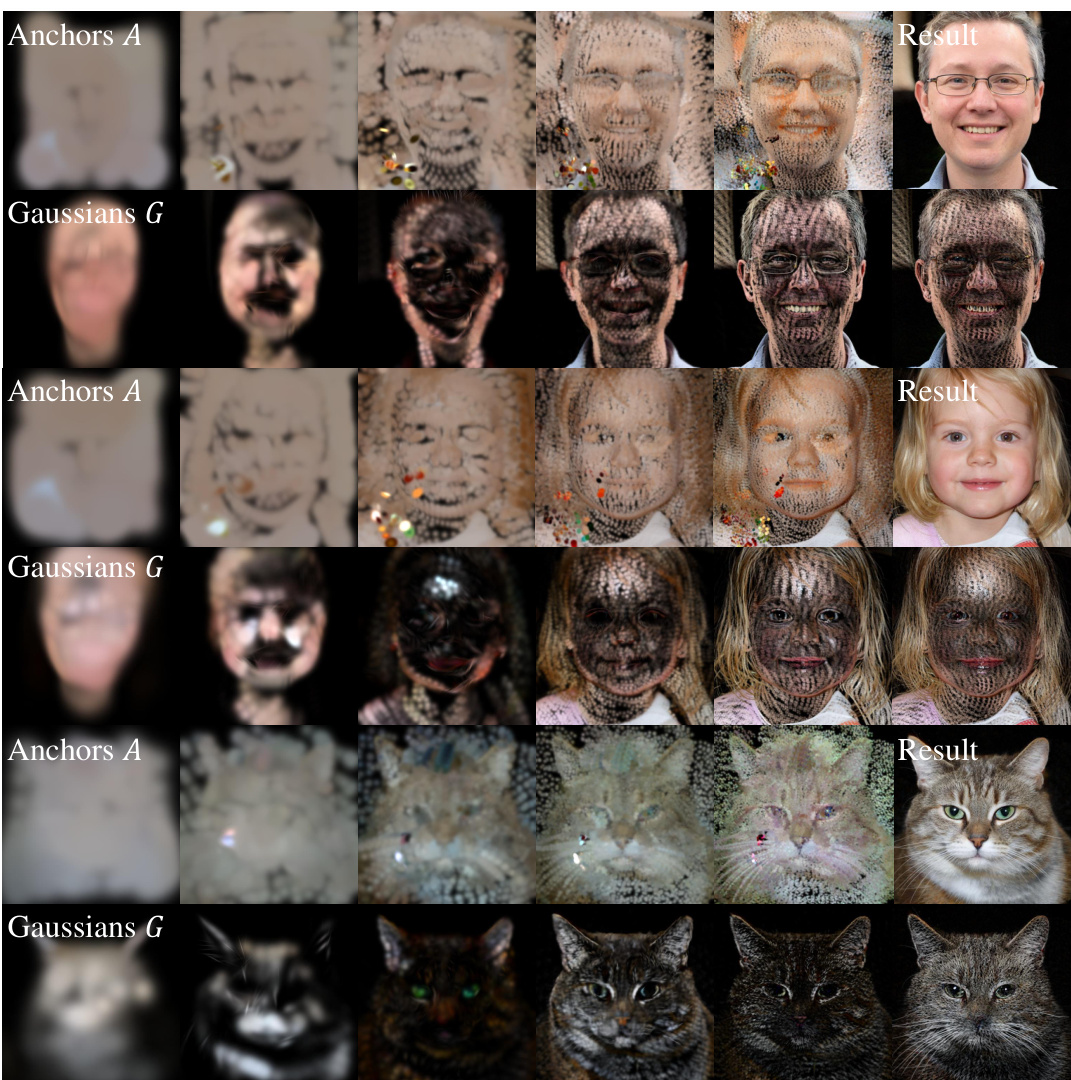

This figure compares the results of ablation studies on the proposed GSGAN model. It shows the effects of removing or adding different components of the model, such as scale clipping, position regularization, and anchor Gaussians. Each image displays the positions of the generated Gaussians, illustrating how different constraints affect their distribution and ultimately influence the quality of generated images. The visualization highlights the importance of the hierarchical structure and regularization techniques for stable training and high-quality results.

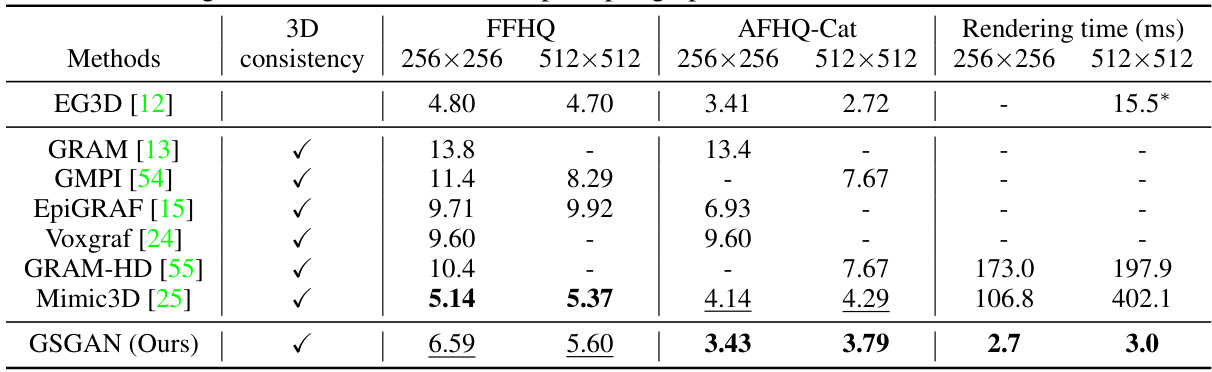

This table quantitatively compares the proposed GSGAN model with other state-of-the-art 3D-consistent GANs on the FFHQ and AFHQ-Cat datasets. The comparison uses two metrics: Fréchet Inception Distance (FID) at 50K images, and rendering time (in milliseconds) measured on a single RTX A6000 GPU. The table highlights the significantly faster rendering speed of GSGAN compared to other methods while maintaining comparable image quality.

In-depth insights#

3D Gaussian GANs#

3D Gaussian GANs represent a novel approach to 3D object generation, combining the efficiency of Gaussian splatting with the power of Generative Adversarial Networks (GANs). Instead of relying on computationally expensive ray tracing, this method leverages the faster rendering capabilities of Gaussian splatting for generating multi-view consistent images. The hierarchical nature of the Gaussian representation allows for detailed modeling of 3D scenes at multiple scales, effectively capturing both coarse and fine details. A key challenge is managing the positions and scales of the generated Gaussians to avoid training instability and visual artifacts. This is addressed through architectural innovations that regularize Gaussian parameters using a hierarchical structure, improving training stability and overall generation quality. The speed advantage over existing methods is significant, paving the way for more efficient high-resolution 3D content creation. However, limitations remain, especially concerning adaptive Gaussian densification, and further research is needed to fully explore the potential of this approach.

Hierarchical GSGAN#

A Hierarchical GSGAN leverages a multi-scale Gaussian splatting representation for 3D object generation. This approach differs from traditional 3D GANs that rely on computationally expensive volume rendering techniques. Instead, it uses efficient rasterization, significantly accelerating the rendering process. The hierarchical structure is key, organizing Gaussians into levels of detail: coarse-level Gaussians capture the overall shape, while finer levels add increasingly fine details. This hierarchical organization not only improves rendering speed but also stabilizes training, addressing challenges like model divergence and visual artifacts commonly associated with naive Gaussian-based GANs. By parameterizing finer-level Gaussians based on their coarser counterparts, the model learns to effectively regularize the position and scale of Gaussians. This constraint promotes a coarse-to-fine generation, resulting in higher-quality 3D models that are visually consistent across multiple viewpoints.

Fast 3D Rendering#

Achieving fast 3D rendering is crucial for interactive applications and real-time experiences. Traditional methods often struggle with the computational demands of rendering complex 3D scenes. This paper explores techniques to significantly accelerate 3D rendering, potentially through novel data structures, optimized rendering algorithms, or a combination of both. The focus may be on improving the efficiency of ray tracing, rasterization, or other rendering pipelines, possibly by leveraging techniques like GPU acceleration, level of detail (LOD) rendering, or image-based rendering. The goal is to enable fluid interaction with 3D models, even in high-fidelity, without compromising visual quality or frame rate. Success hinges on finding the right balance between speed and visual fidelity, adapting to the complexity of the 3D scene and the hardware capabilities. Hierarchical representations of the 3D geometry are likely explored to optimize rendering time. Ultimately, the success of a fast 3D rendering method is evaluated by its performance in terms of frames per second (FPS), memory usage, and visual quality metrics.

Training Stability#

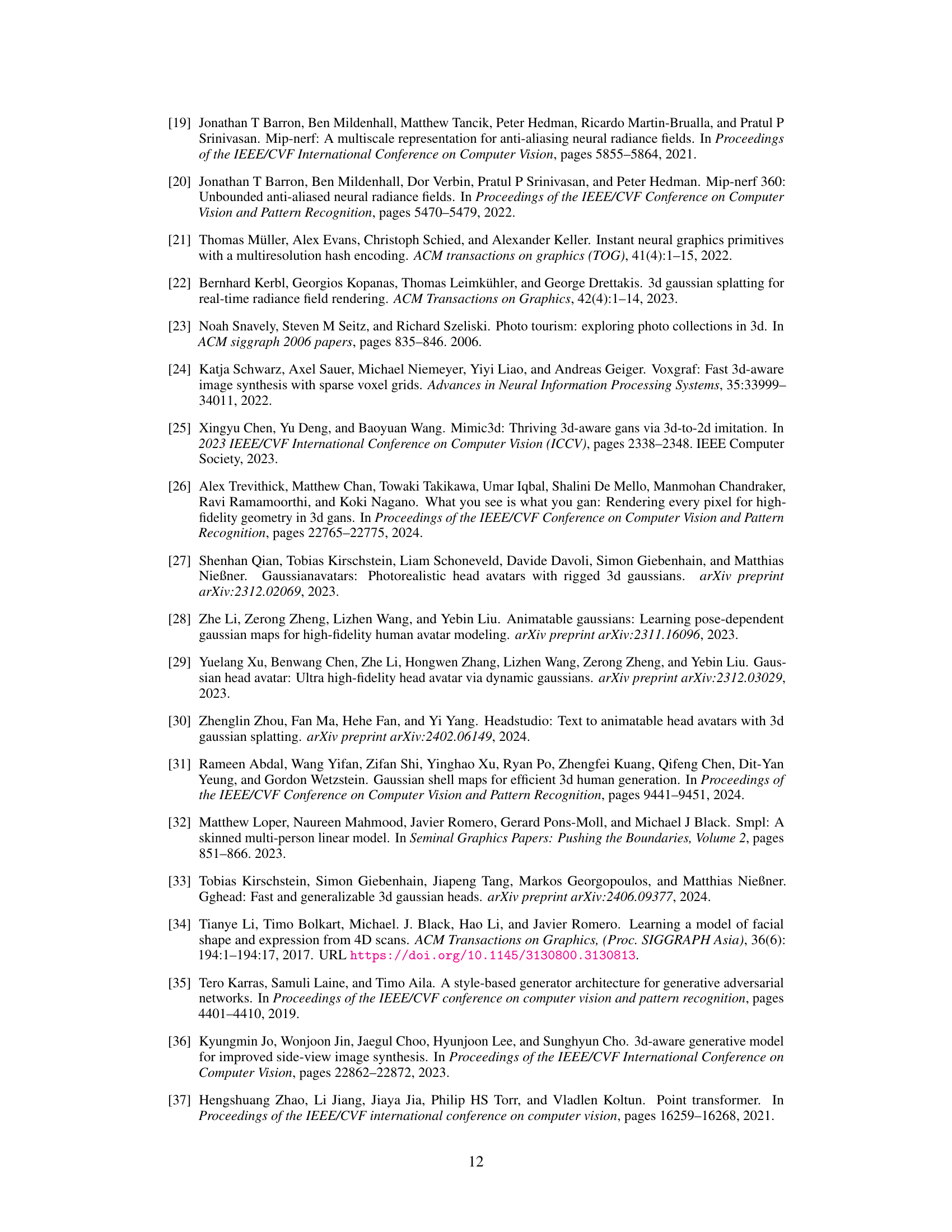

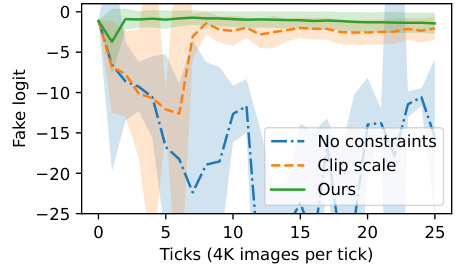

The paper investigates the training stability of generative adversarial networks (GANs) for 3D Gaussian splatting. A naive approach suffers from instability, leading to model divergence and visual artifacts due to the lack of proper guidance in initializing Gaussian positions and scales. The core of the proposed solution, GSGAN, addresses this by introducing a hierarchical multi-scale Gaussian representation. This hierarchy regularizes the positions and scales of generated Gaussians, improving stability. Finer-level Gaussians are parameterized by their coarser-level counterparts, ensuring that finer details are built upon a stable foundation of coarser structures. Experimental results demonstrate that GSGAN achieves significantly improved training stability compared to naive methods, exhibiting a more controlled and consistent generation process. This is visualized by analyzing the fake logits at early training stages, where GSGAN shows stable behavior, unlike the unstable oscillations observed in naive approaches. The hierarchical structure, coupled with additional architectural details (e.g., anchor Gaussians), effectively prevents the collapse or divergence of the generated Gaussians, resulting in a robust and stable training process that successfully synthesizes realistic 3D models.

Future Directions#

Future research directions for 3D Gaussian splatting GANs could involve exploring more sophisticated hierarchical structures for Gaussian representation, potentially incorporating learned relationships between different Gaussian levels rather than relying on fixed rules. This would allow for more adaptive modeling of complex shapes and details. Another promising area lies in improving the generator’s ability to handle highly varied scenes with diverse levels of detail. Investigating alternative training methodologies, such as using different loss functions or incorporating reinforcement learning techniques, could further enhance the model’s training stability and generation quality. Finally, extending the approach to incorporate other modalities, such as depth information or point clouds, could significantly increase the model’s expressiveness and its applications to diverse 3D content creation tasks. Exploring the potential of combining this approach with other advanced 3D representation methods, could also lead to significant advancements in both the quality and efficiency of 3D-GANs.

More visual insights#

More on figures

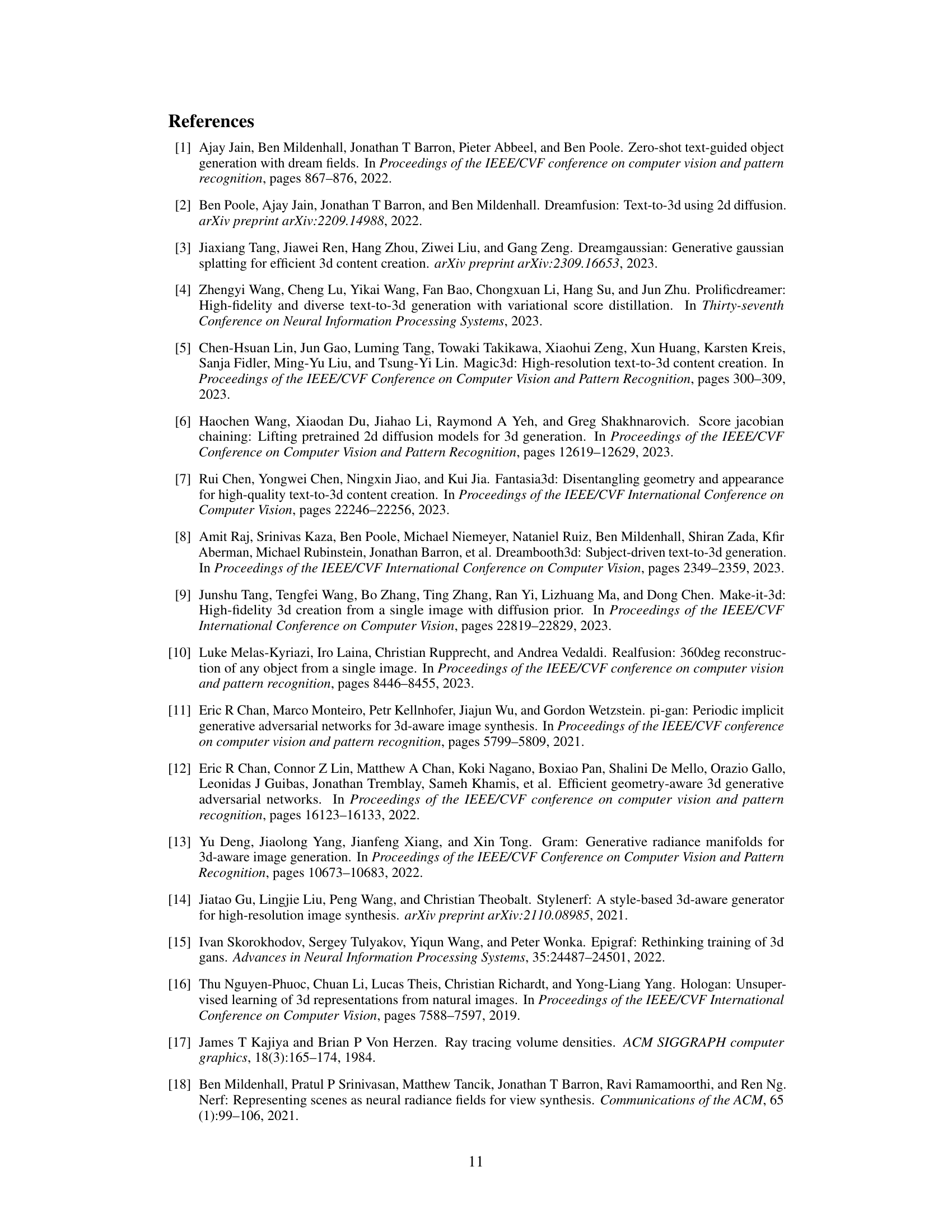

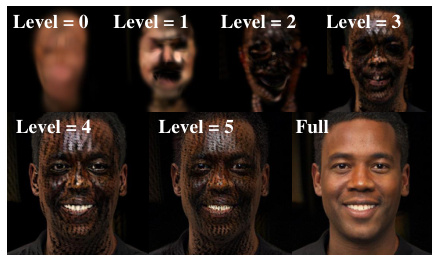

This figure visualizes the hierarchical Gaussian splatting process used in GSGAN. It shows how the generated 3D scene is built up from coarse to fine details across multiple levels. At the coarsest level (Level 0), the representation is very blurry and lacks detail. As the level increases, finer-level Gaussians provide more detail, resulting in a sharper and more realistic image at the final level (Full). This demonstrates the efficacy of GSGAN’s hierarchical approach in modelling both coarse and fine details in the 3D scene.

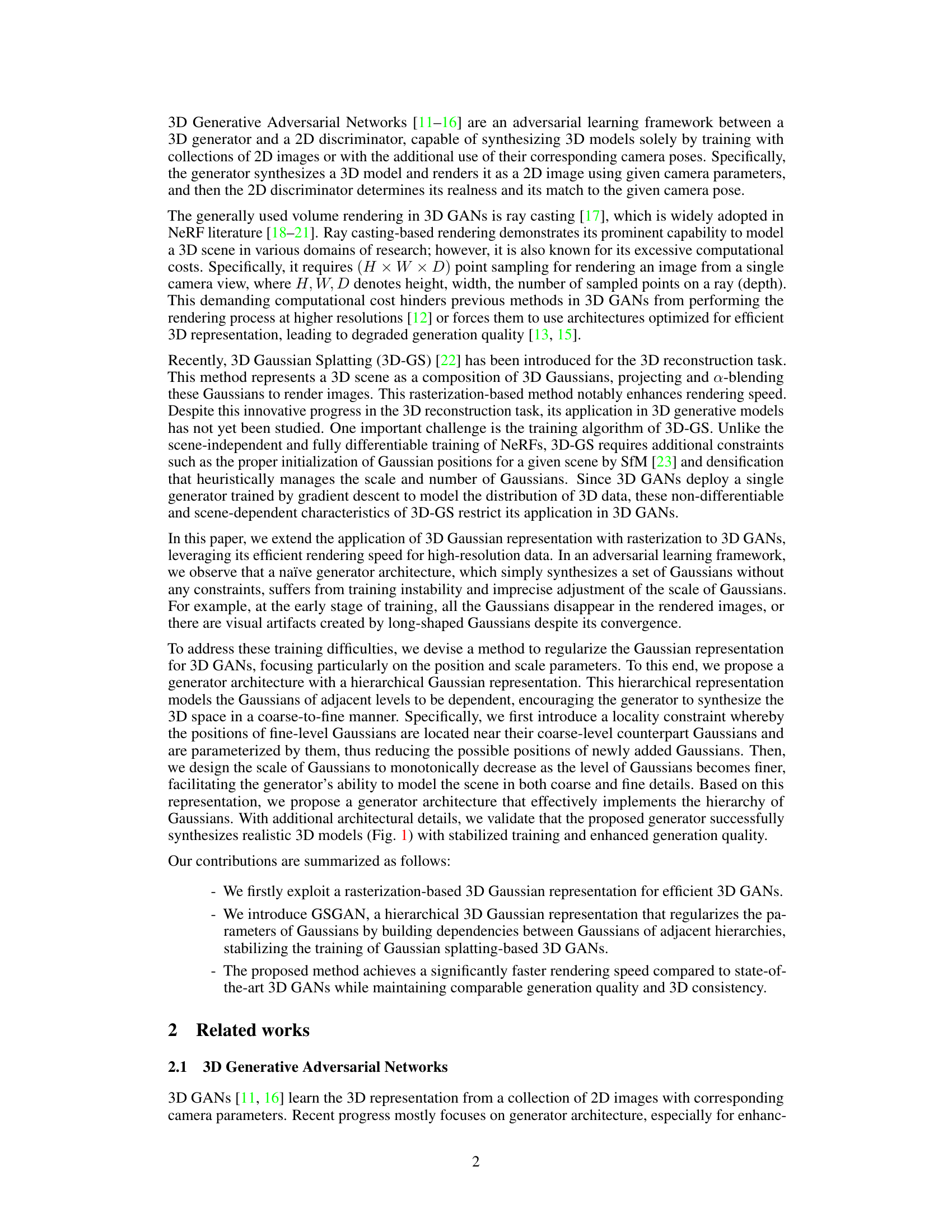

This figure illustrates the architecture of the generator used in GSGAN. The generator uses a hierarchical approach, building up a representation of the scene from coarse to fine levels of detail. Each level uses ‘anchors’ to guide the placement and scale of finer-level Gaussians which are then used for rendering the final image. The generator uses blocks consisting of attention and MLP layers and employs techniques like AdaIN and layerscaling to condition the latent code.

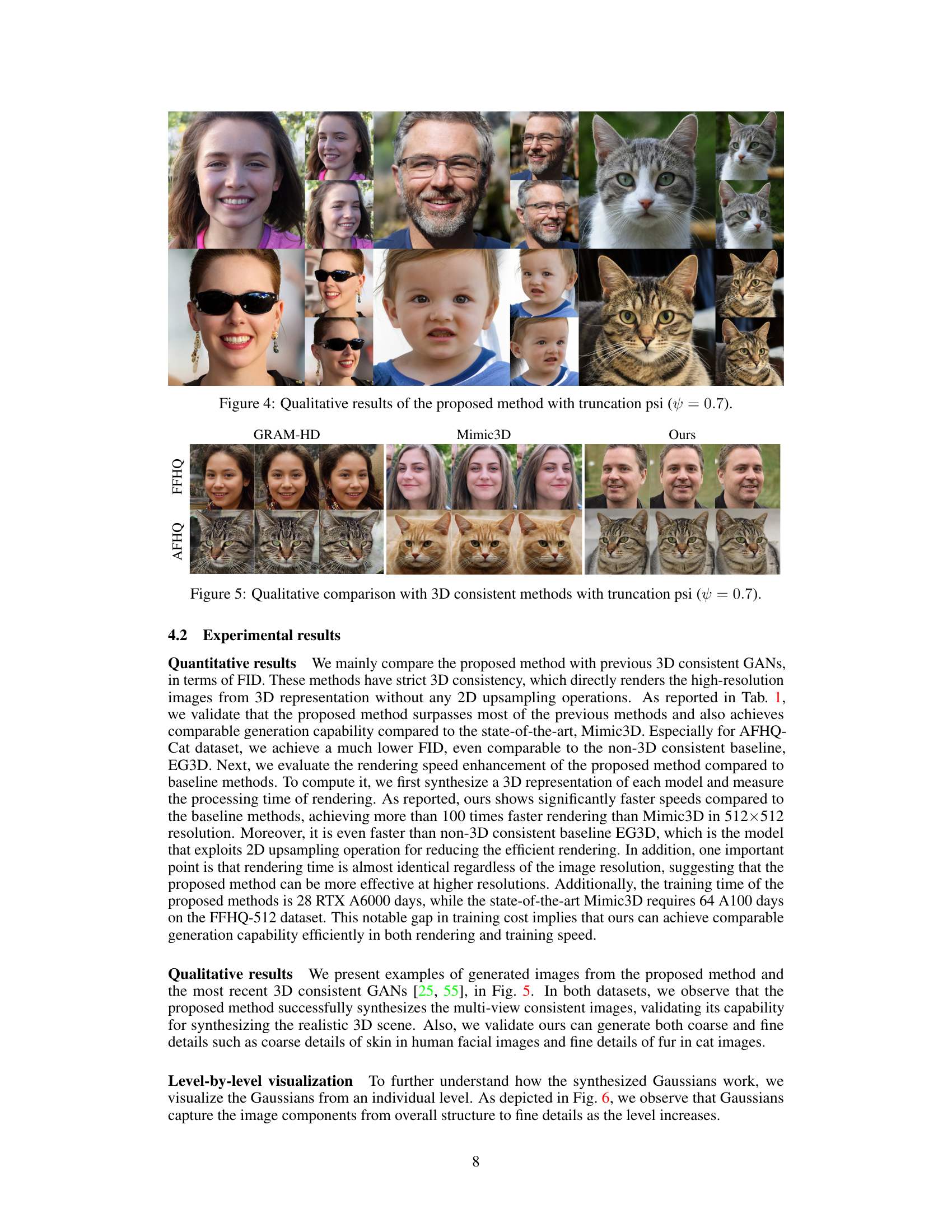

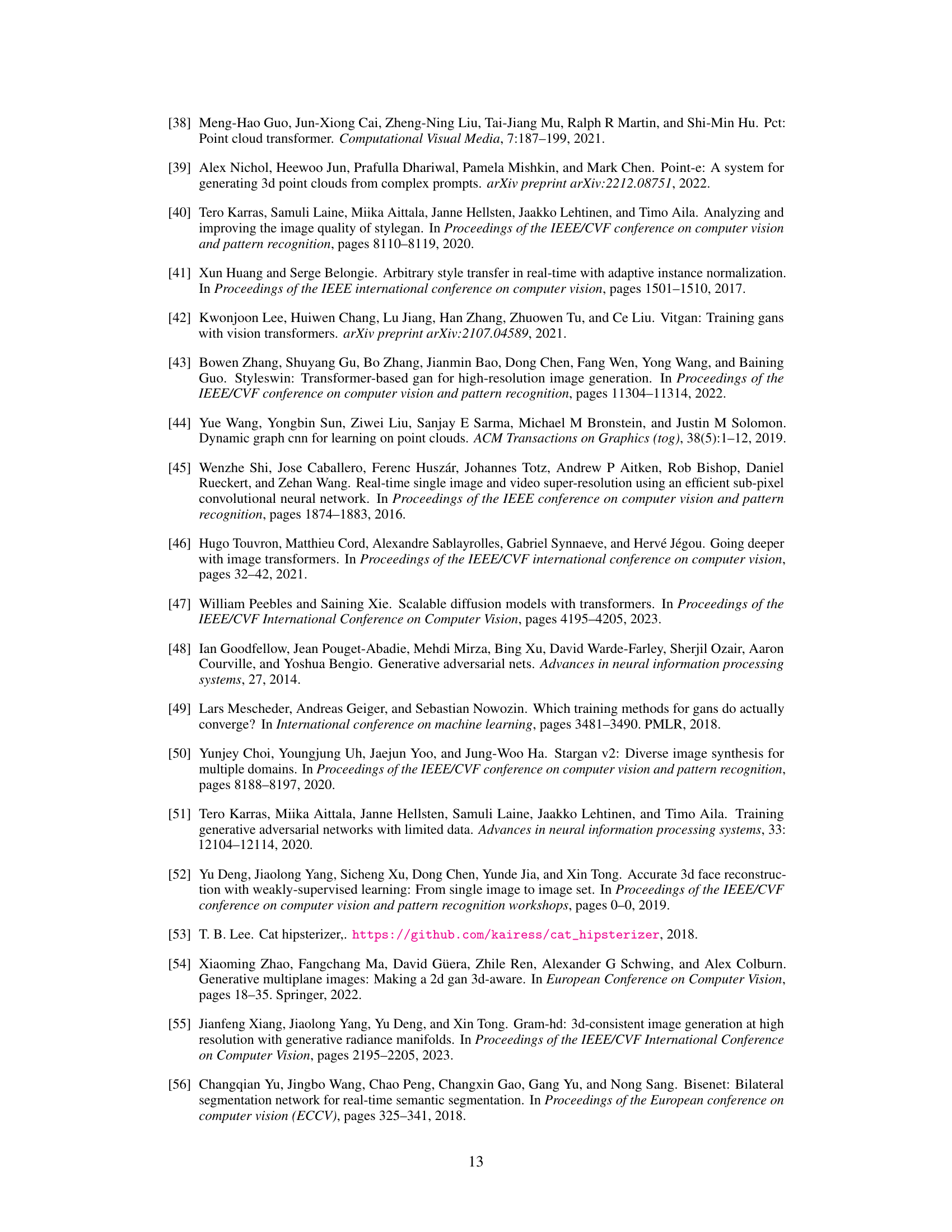

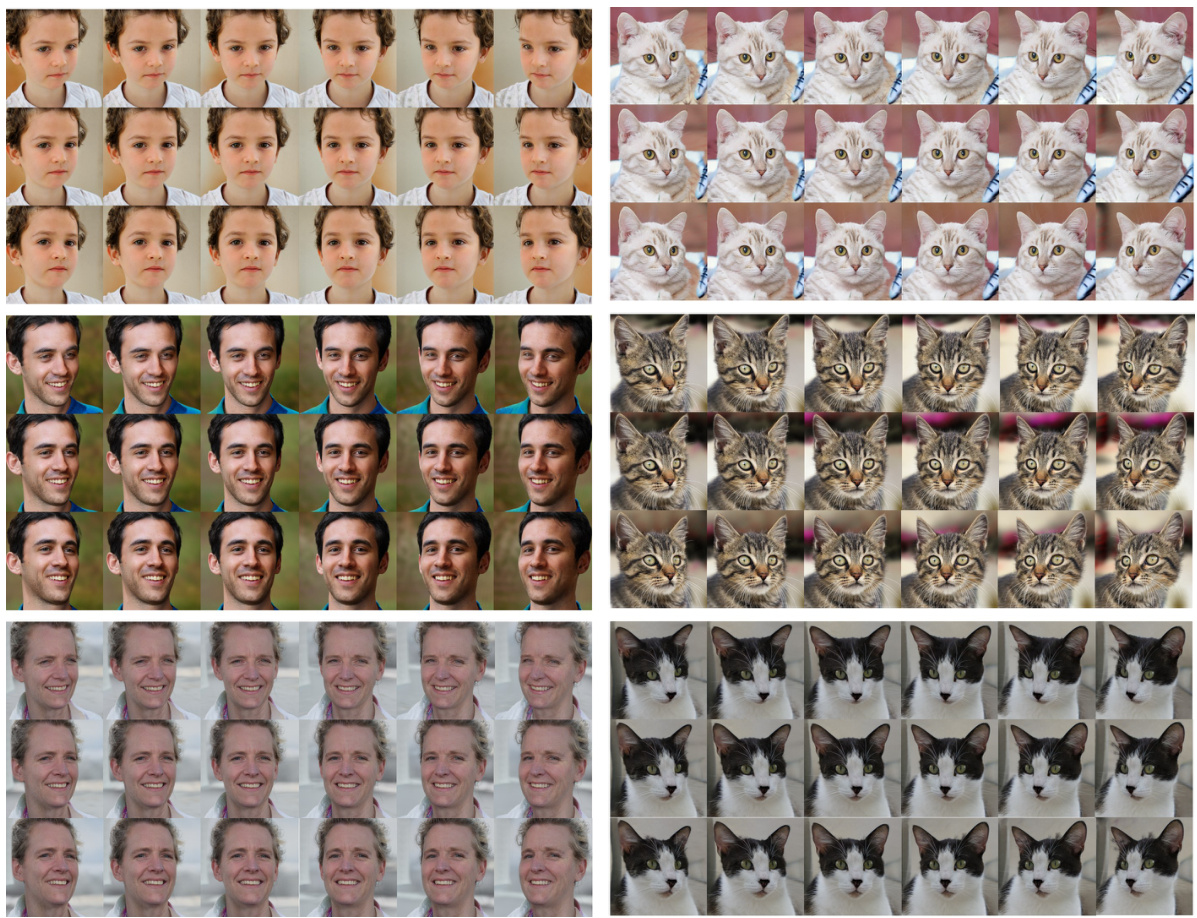

This figure showcases the qualitative results of the GSGAN model, which leverages a hierarchical Gaussian representation for generating high-resolution images. The truncation parameter ψ is set to 0.7, controlling the level of randomness in the generation process. The figure presents a diverse set of generated faces and cats, demonstrating the model’s ability to produce high-quality, realistic images with diverse attributes.

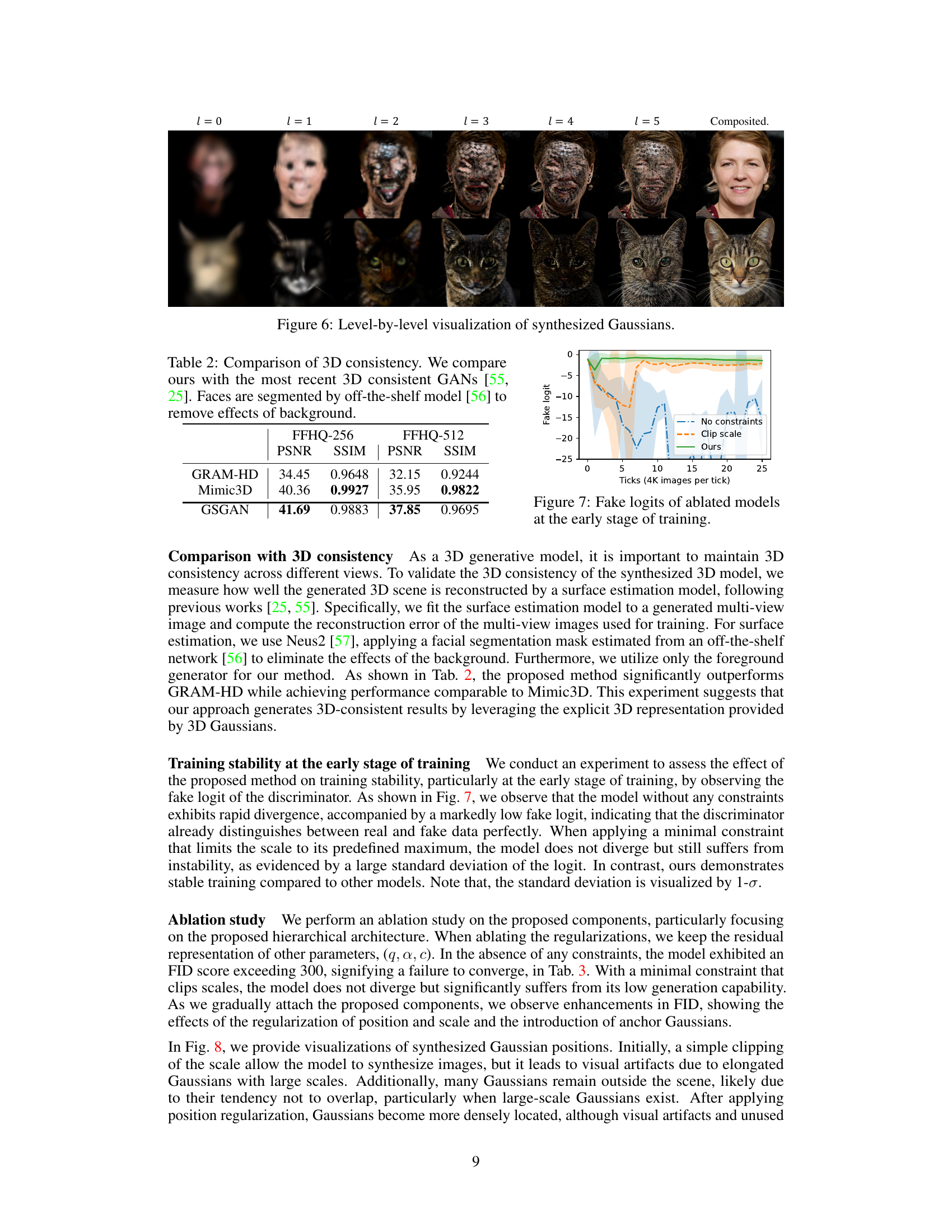

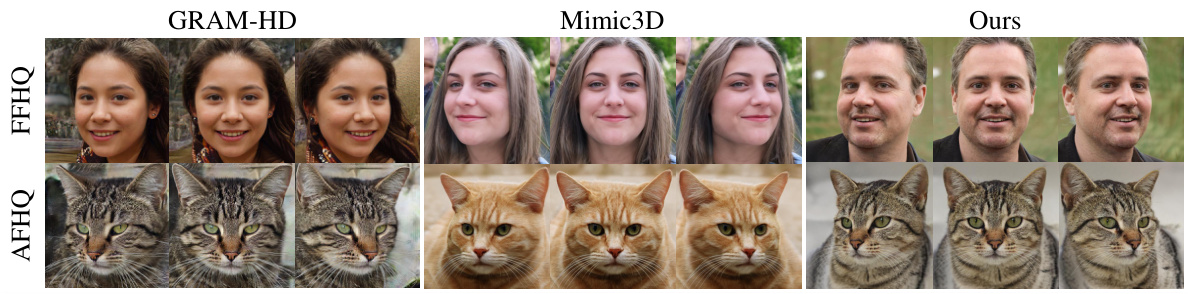

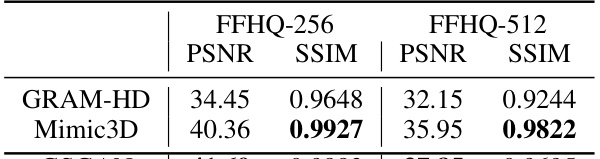

This figure shows a qualitative comparison of image generation results between GSGAN (the proposed method) and two state-of-the-art 3D-consistent GANs: GRAM-HD and Mimic3D. The comparison uses a truncation trick (ψ = 0.7) which enhances the quality of the generated images. For both the FFHQ (human faces) and AFHQ-cat (cat faces) datasets, the results demonstrate that GSGAN produces comparable image quality to the existing methods but with significantly faster rendering speed (as detailed in Table 1 of the paper). The images illustrate the 3D consistency of the generated samples across multiple views.

This figure visualizes the hierarchical Gaussian splatting process. It shows the results at each level of the hierarchy, starting with a very blurry image at level 0 (coarsest level). As the level increases, finer details appear until the final, fully rendered image is shown in the last column. This demonstrates how the hierarchical structure builds from coarse to fine detail in the generation of the 3D scene.

This figure shows the fake logits of three different models during the early stages of training. The ‘No constraints’ model shows significant instability and divergence, indicated by large fluctuations and a consistently low fake logit. The ‘Clip scale’ model, with a constraint on Gaussian scale, shows improved stability but still exhibits some volatility. The ‘Ours’ model, incorporating the hierarchical Gaussian representation proposed in the paper, demonstrates the most stable and consistent training behavior, as evidenced by a relatively flat and high fake logit.

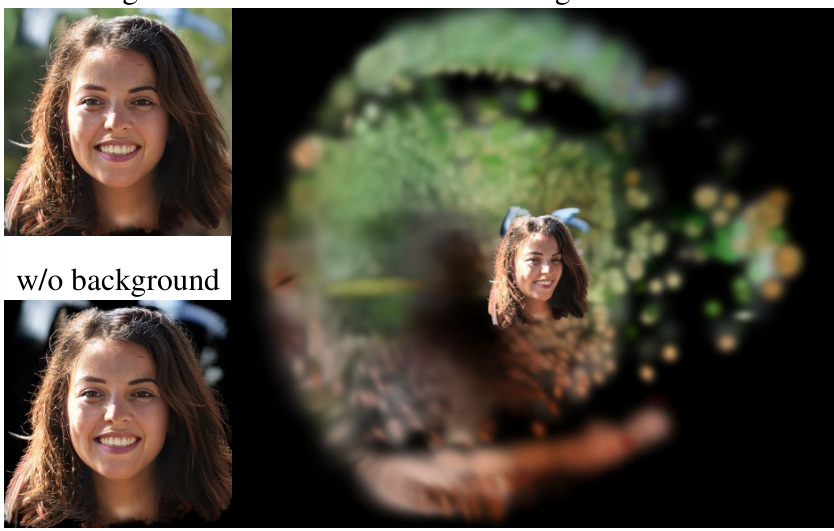

This figure visualizes the effects of ablating different components of the GSGAN model. The top-left shows results with only clipping of the scale, resulting in elongated Gaussians and visual artifacts. The top-right adds position regularization, improving Gaussian density but still showing artifacts. The bottom-left incorporates scale regularization, leading to better shape but still some artifacts. Finally, the bottom-right shows results with all components (our full model), producing the most realistic and well-formed Gaussians.

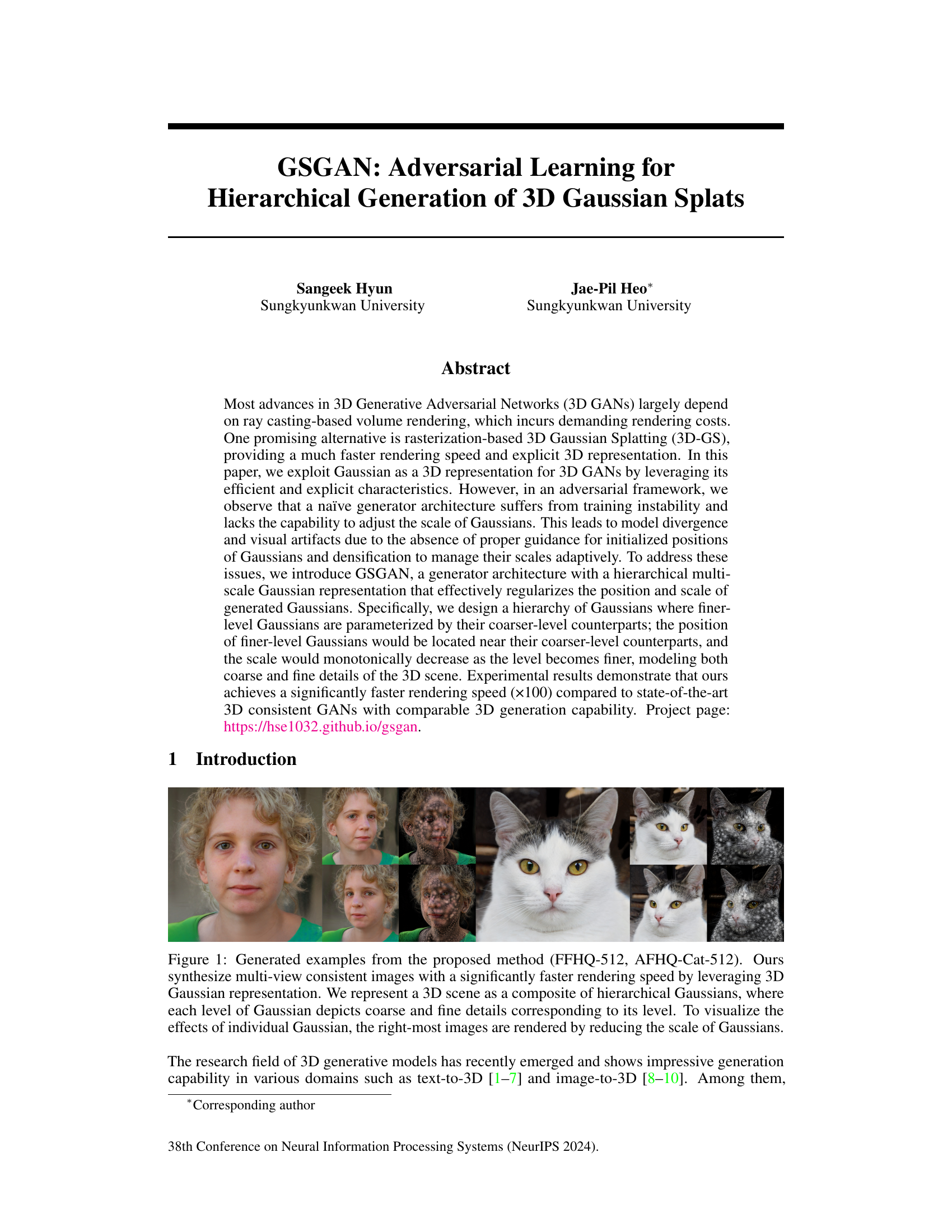

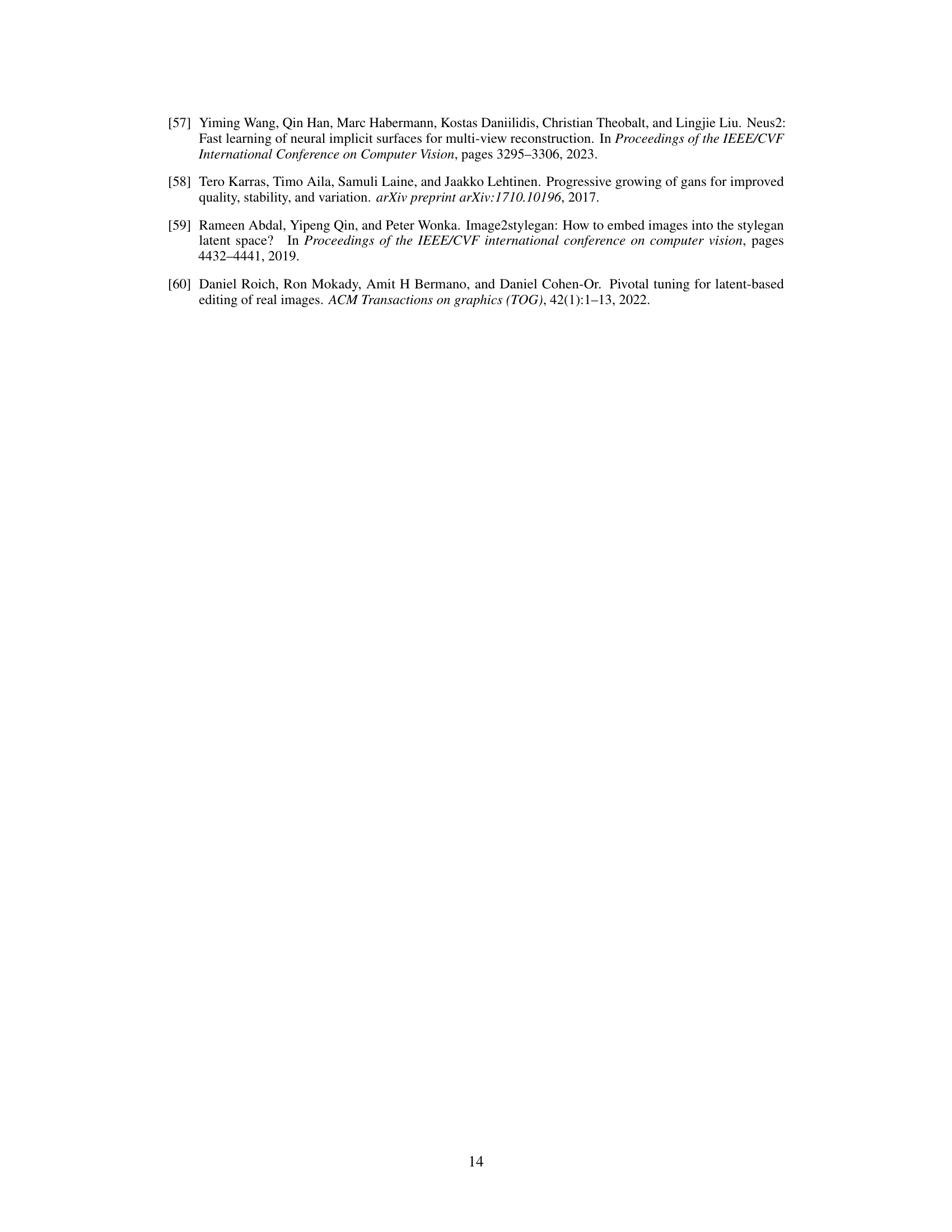

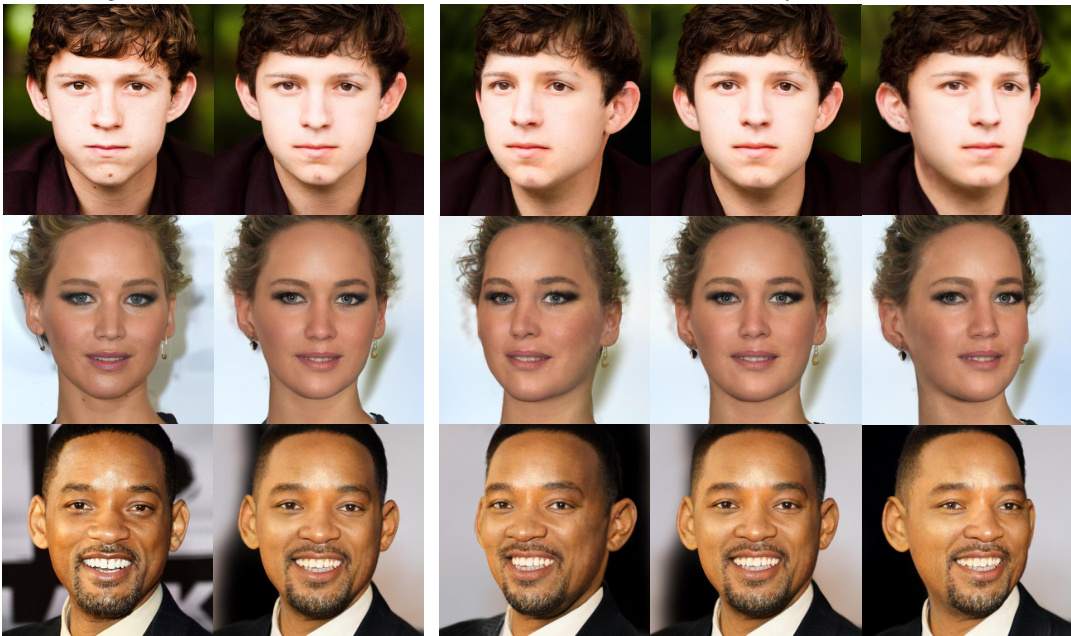

This figure shows example images generated by the proposed GSGAN model. The left side displays generated images of faces (FFHQ dataset) and cats (AFHQ-Cat dataset) that are multi-view consistent, meaning they look realistic from different viewpoints. The method achieves this speed using a 3D Gaussian splatting approach. The rightmost column shows the individual Gaussians used to generate each image, highlighting how different levels contribute to the overall image detail. The hierarchical nature of the Gaussians (coarse to fine levels) is also illustrated.

This figure showcases sample images generated by the proposed GSGAN model. It highlights the model’s ability to create multi-view consistent images (meaning the images look realistic from multiple viewpoints) using a 3D Gaussian splatting approach. The images are of faces (FFHQ-512 dataset) and cats (AFHQ-Cat-512 dataset). The hierarchical nature of the Gaussian representation is illustrated, showing how different levels represent varying levels of detail. The rightmost images in each row show the effect of reducing the scale of Gaussians, making the individual Gaussians clearly visible.

This figure shows example images generated by the proposed GSGAN model. It demonstrates the model’s ability to generate high-quality, multi-view consistent images of faces (FFHQ-512 dataset) and cats (AFHQ-Cat-512 dataset). The images showcase the hierarchical Gaussian splatting representation used, where each level represents a different level of detail (coarse to fine). The rightmost column of images shows the effects of individual Gaussians by reducing their scale for visualization.

This figure shows example images generated by the proposed GSGAN model. It demonstrates the model’s ability to create multi-view consistent images of faces and cats at high resolution (512x512). The hierarchical Gaussian splatting approach is highlighted, with the rightmost column showing individual Gaussians at varying scales to illustrate the level of detail captured at each hierarchy level.

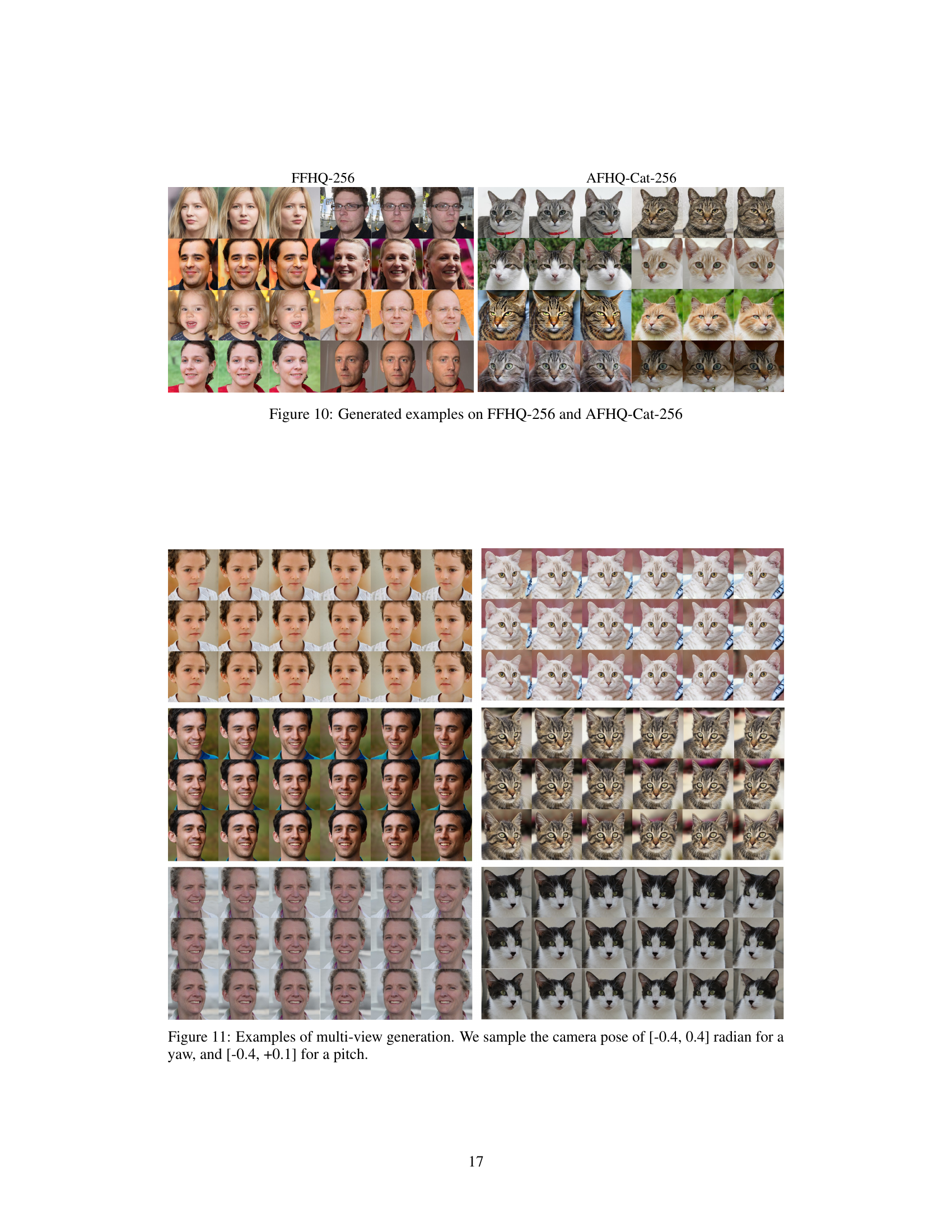

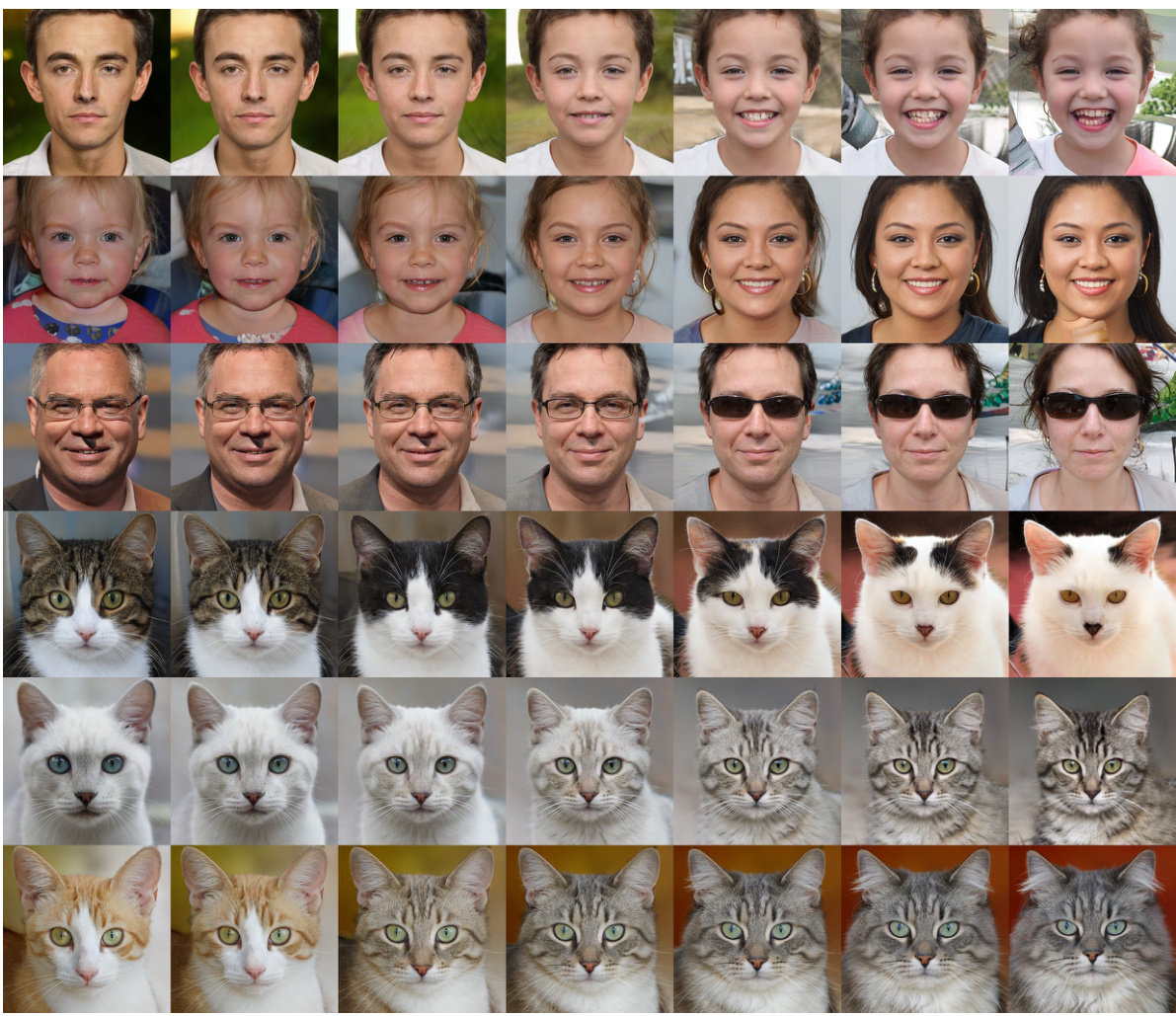

This figure shows the results of linear interpolation in the latent space (w space) of the proposed GSGAN model. It demonstrates the model’s ability to smoothly transition between different generated images by interpolating latent codes. The top row shows the interpolation between two different facial images, while the bottom row shows the interpolation between two cat images. The smooth transitions showcase the semantic richness and organization of the learned latent space, highlighting the model’s capacity to generate realistic and diverse outputs.

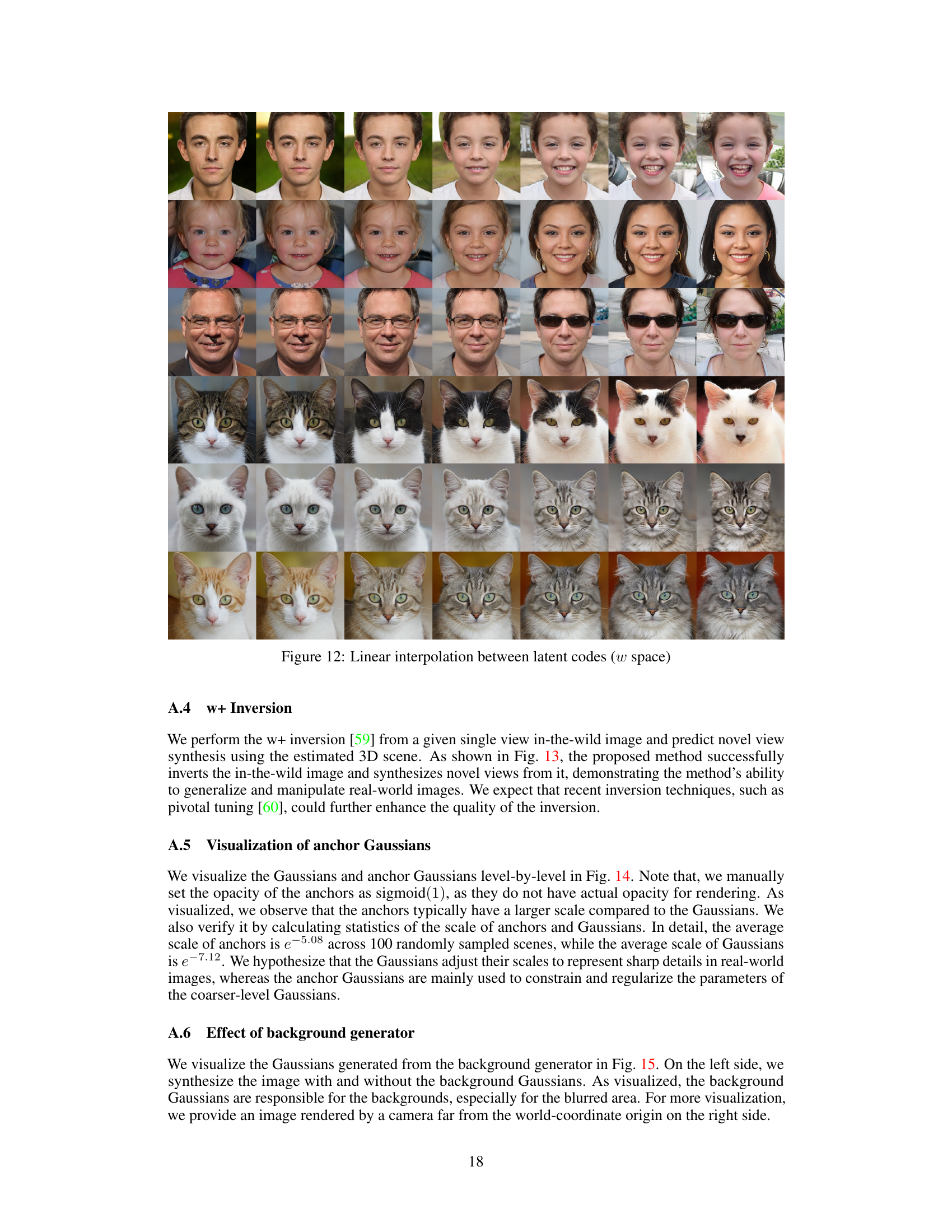

This figure visualizes the hierarchical Gaussian representation used in GSGAN. It shows the anchor Gaussians (A) and the generated Gaussians (G) at different levels of the hierarchy. The anchor Gaussians guide the generation of the finer-level Gaussians, providing regularization. The opacity of anchor Gaussians is set to sigmoid(1) for visualization purposes. The final rendered image is a composite of all Gaussian levels.

The figure shows a comparison between images generated with and without the background generator. The image on the left shows a person’s face without any background, while the image on the right displays the same person’s face with the background generator applied. The background generator adds a blurred, naturalistic backdrop to the generated images, enhancing their realism and visual appeal.

More on tables

This table compares the proposed GSGAN model with other state-of-the-art 3D-consistent GANs on FFHQ and AFHQ-Cat datasets. The comparison is based on Fréchet Inception Distance (FID) scores and rendering time. It highlights GSGAN’s significantly faster rendering speed while maintaining comparable generation quality.

This table presents the ablation study results performed on the FFHQ-256 dataset. It shows the impact of different components on FID scores, starting from a model without any constraints and progressively adding components like clipping scale, position regularization, scale regularization, background generator, and finally, anchor Gaussian. Each row represents the FID score of a model with cumulative addition of these components. Lower FID scores indicate better performance.

Full paper#