↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Many existing methods for customizing diffusion models struggle with generating images across different domains efficiently. They often rely on training multiple, separate models, which is computationally expensive and lacks flexibility in handling dynamic domains. This is particularly challenging when dealing with unsupervised domain adaptation (UDA) and domain generalization (DG), which involve adapting models to unseen data distributions.

This paper introduces Terra, a novel framework that utilizes a time-varying low-rank adapter to fine-tune diffusion models effectively across domains. Terra constructs a continuous parameter manifold, allowing for seamless transitions between domains and generation of intermediate domains. The paper provides theoretical analysis proving Terra’s ability to effectively manage multiple domains simultaneously, outperforming existing techniques in experiments on various benchmark datasets for both image interpolation and domain adaptation/generalization tasks.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working with diffusion models due to its novel approach to cross-domain fine-tuning. It offers significant improvements in image generation and adaptation across various domains, paving the way for more robust and versatile AI systems. The theoretical analysis and extensive experiments provide a solid foundation for future research in parameter-efficient fine-tuning and generation-based domain adaptation.

Visual Insights#

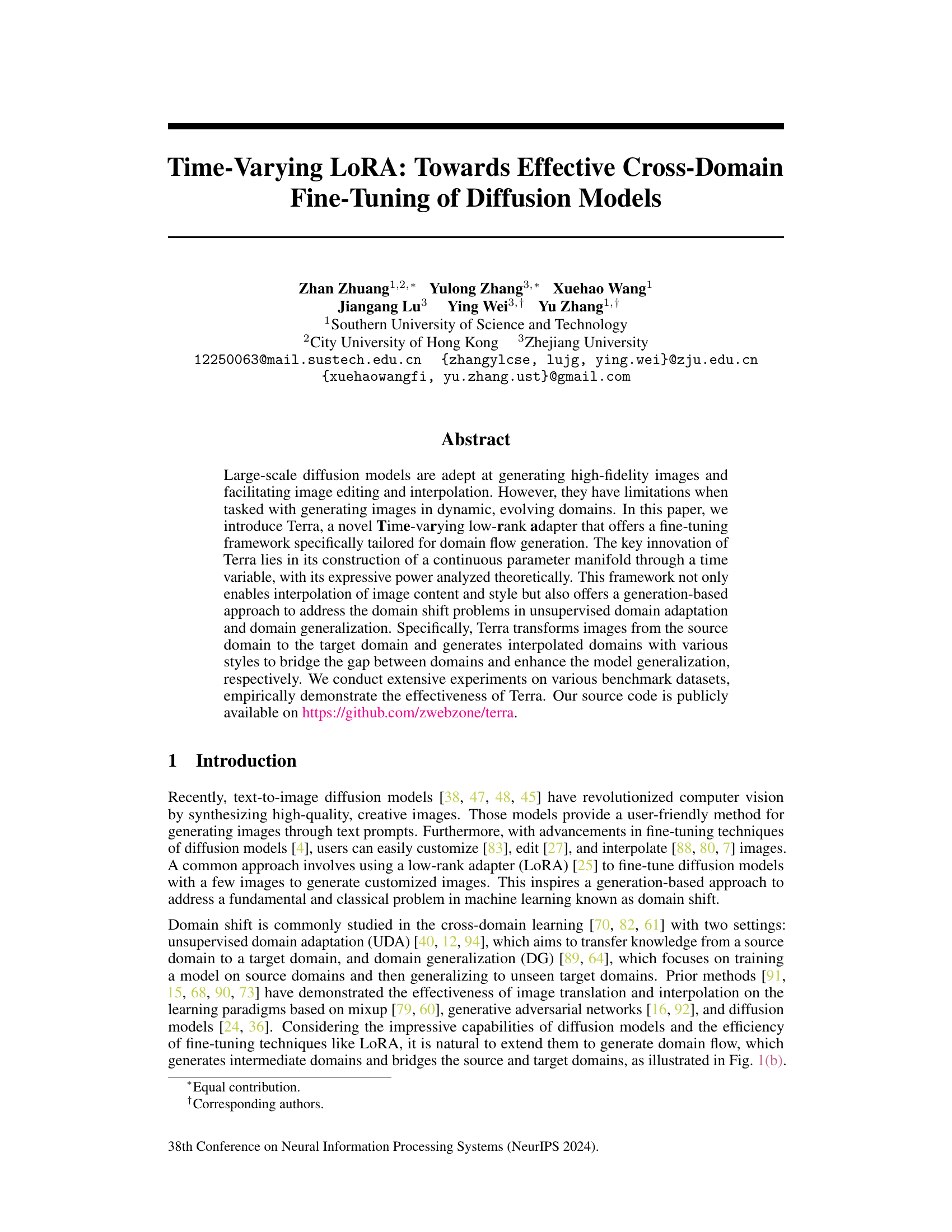

This figure illustrates the difference between the traditional LoRA and the proposed Terra method. (a) shows the LoRA method, where low-rank matrices are added to the pre-trained weights. (b) shows the Terra method, which introduces a time variable (t) to create a continuous parameter manifold. This allows for the generation of intermediate domains between the source and target domains, facilitating smoother transitions in image style and content.

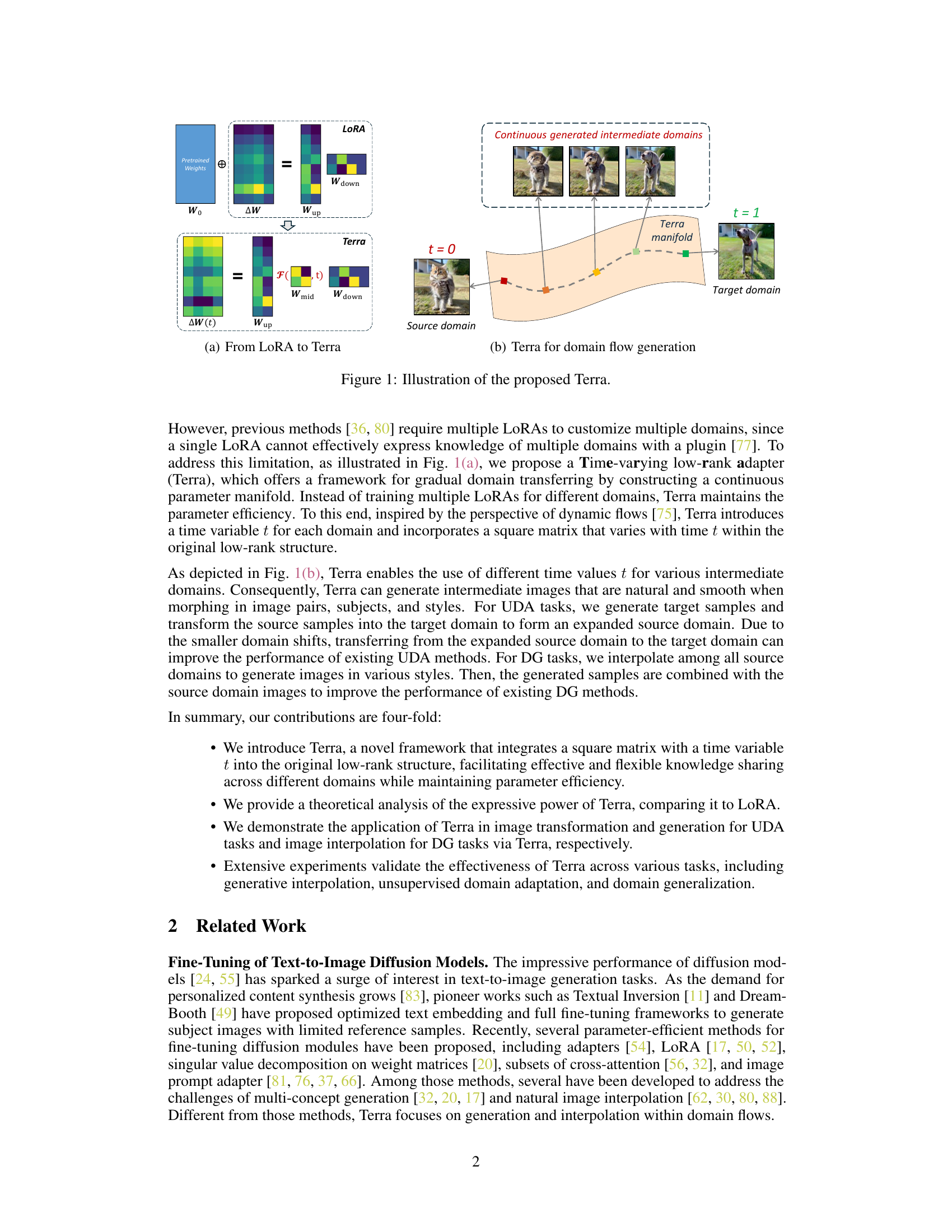

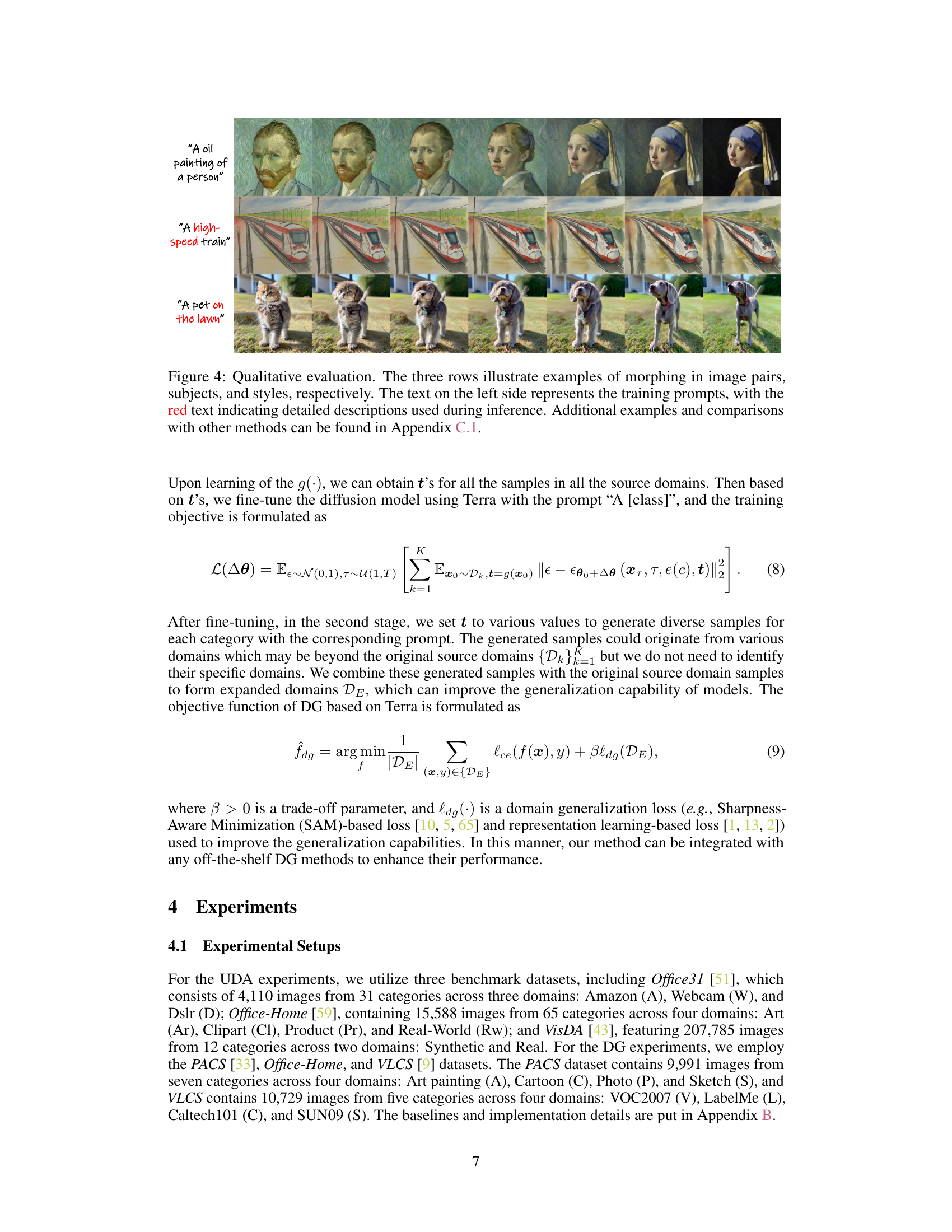

This table presents a quantitative comparison of different methods for generative image interpolation. The methods are evaluated using two metrics: the Frechet Inception Distance (FID), which measures the quality of generated images, and the Perceptual Path Length (PPL), which measures the smoothness of the transition between images. Lower scores on both FID and PPL indicate better performance. The table shows results for image pairs, style morphing, and subject morphing. The Terra method shows significantly better results compared to other methods, indicating high-fidelity and smooth image generation.

In-depth insights#

Time-Varying LoRA#

Time-Varying LoRA presents a novel approach to fine-tuning large diffusion models, addressing the limitations of traditional LoRA in handling cross-domain tasks. Instead of employing multiple, static LoRAs for different domains, it introduces a time-varying parameter within the low-rank adapter. This creates a continuous parameter manifold, enabling smooth transitions and interpolations across various domains. Theoretically, it’s shown to be more expressive than multiple LoRAs with comparable parameter counts, effectively bridging source and target domains through intermediate generated domains. This approach is particularly valuable for unsupervised domain adaptation (UDA) and domain generalization (DG), where it helps to mitigate domain shift. The time-varying nature facilitates generating smoothly morphing intermediate domains, which are useful for building bridges between source and target datasets. The flexibility of the Time-Varying LoRA framework, therefore, makes it a powerful and efficient technique for tackling the challenges of domain adaptation and generalization in generative models.

Domain Flow#

The concept of “Domain Flow” in the context of this research paper is quite innovative. It introduces a novel way to address the challenge of cross-domain adaptation and generalization in image generation models by creating a continuous parameter manifold spanning different domains. This manifold enables the generation of intermediate domains or styles, effectively bridging the gap between source and target domains. The key innovation is in using a time-varying low-rank adapter, a modification of LoRA, which allows smooth interpolation and image transformation. This approach offers parameter efficiency compared to using multiple separate adapters, making it a practical solution. By generating intermediate domains, the method tackles domain shift issues present in both unsupervised domain adaptation (UDA) and domain generalization (DG). The theoretical analysis presented further supports the expressiveness and efficiency of this method. The ‘Domain Flow’ is therefore not just a simple transition, but a powerful technique that enables seamless transitions between domains or styles, leading to improved generalization and adaptation capabilities in image generation models.

Theoretical Analysis#

A theoretical analysis section in a research paper would ideally delve into the mathematical underpinnings of the proposed model or method. It should go beyond empirical observations and provide a rigorous justification for the model’s properties. This could involve proving theorems about its convergence, analyzing its computational complexity, or demonstrating its expressive power relative to existing techniques. A strong theoretical analysis builds confidence in the reliability and generalizability of the results, suggesting why the method works and under what conditions it’s expected to perform well. For instance, a proof of convergence can offer assurance that the method will eventually find a solution, while a complexity analysis might inform its scalability to larger datasets. By examining the model’s capacity to represent various data distributions or functions, a well-structured theoretical analysis provides a deeper understanding of its capabilities and limitations compared to purely empirical evaluations. Crucially, any assumptions made should be clearly stated and their implications discussed. The analysis should also consider edge cases or scenarios where the model might fail, highlighting any potential weaknesses.

UDA & DG#

The paper delves into unsupervised domain adaptation (UDA) and domain generalization (DG), two crucial areas in machine learning tackling the challenge of applying models trained on one domain to another, unseen domain. UDA focuses on transferring knowledge from a labeled source domain to an unlabeled target domain, aiming to minimize domain discrepancy. DG, conversely, trains models robust enough to generalize across multiple source domains and perform well on completely new, unseen domains. The core of the paper appears to be a novel method bridging these two approaches by generating intermediate domains to ease the transition between source and target domains, enhancing adaptability and generalization. The effectiveness is demonstrated through extensive experiments on various benchmark datasets, highlighting the approach’s ability to significantly improve the performance of existing UDA and DG methods. The proposed framework appears to offer an innovative solution to domain adaptation and generalization challenges. Its emphasis on data generation and theoretical analysis showcases a deeper understanding of the inherent difficulties of domain transfer.

Future Works#

The paper’s “Future Works” section presents exciting avenues for extending the research. Improving the theoretical analysis of Terra’s expressive power is crucial, potentially involving exploring different function forms for the time-varying matrix and establishing tighter bounds on approximation error. Expanding the application scope of Terra to encompass other modalities (audio, video, 3D models), larger datasets, and more complex tasks like multi-modal generation is vital. The authors also rightly suggest exploring different integration strategies with existing UDA/DG methods, testing its efficacy when combined with advanced techniques. Lastly, addressing potential limitations such as computational costs and the need for a careful balance of parameter efficiency with expressiveness, warrants further investigation. These future steps would significantly enhance the impact and robustness of Terra.

More visual insights#

More on figures

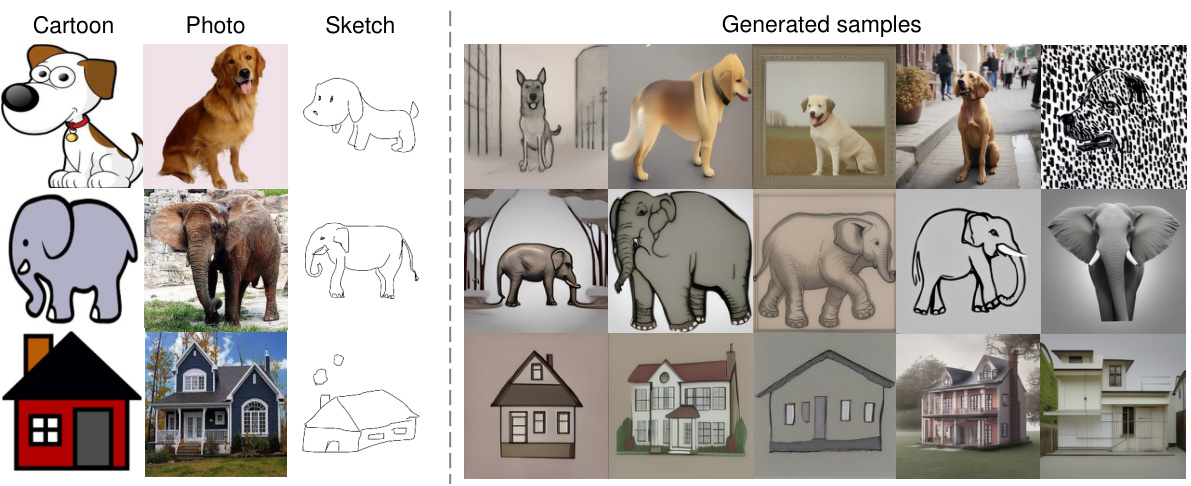

This figure illustrates the training process for creating evolving visual domains using Terra. The process involves fine-tuning a text-to-image diffusion model with Terra, a time-varying low-rank adapter. The model is trained on source images (t=0) and target images (t=1), each with corresponding text prompts. The continuous time variable ’t’ allows for the generation of intermediate domains between the source and target domains, representing smooth transitions between the two.

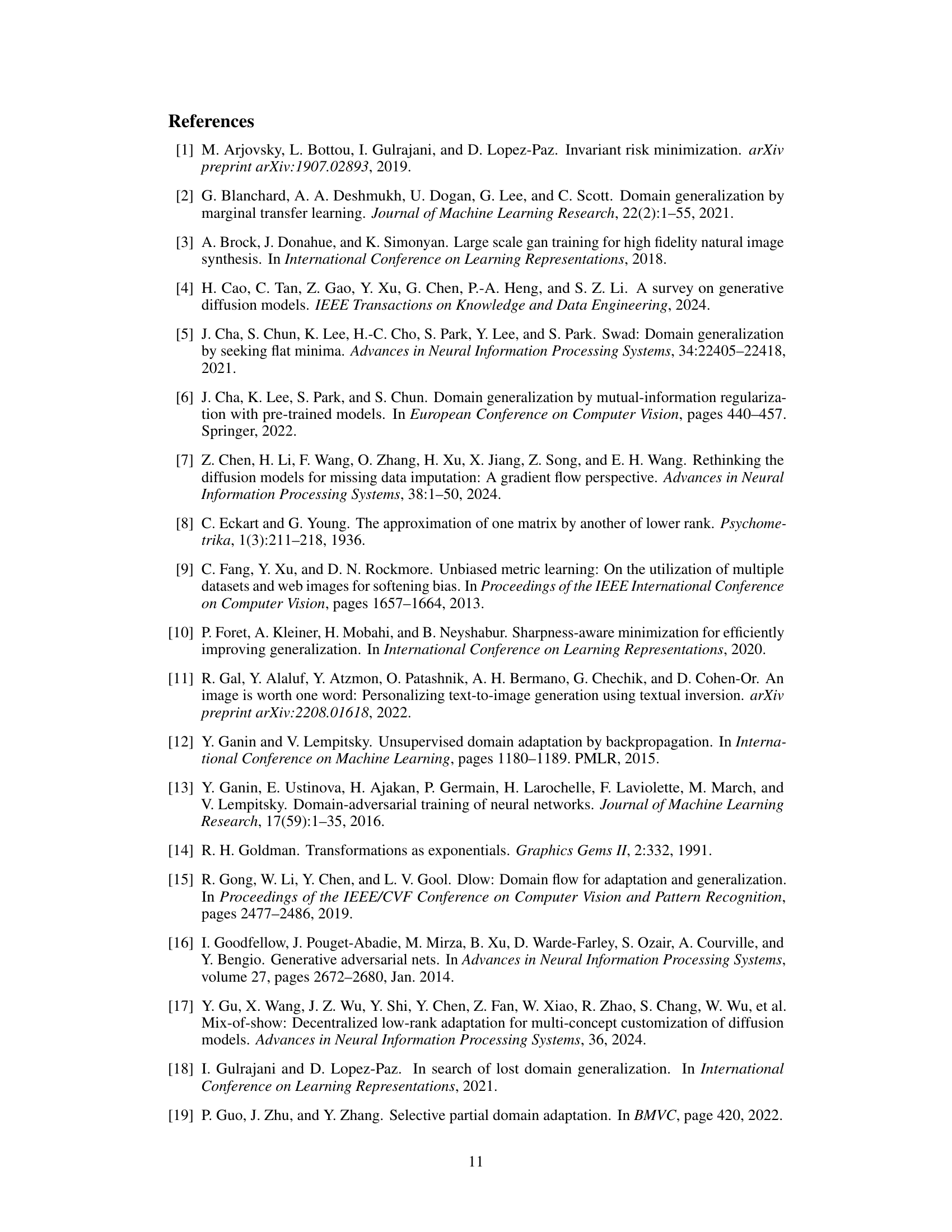

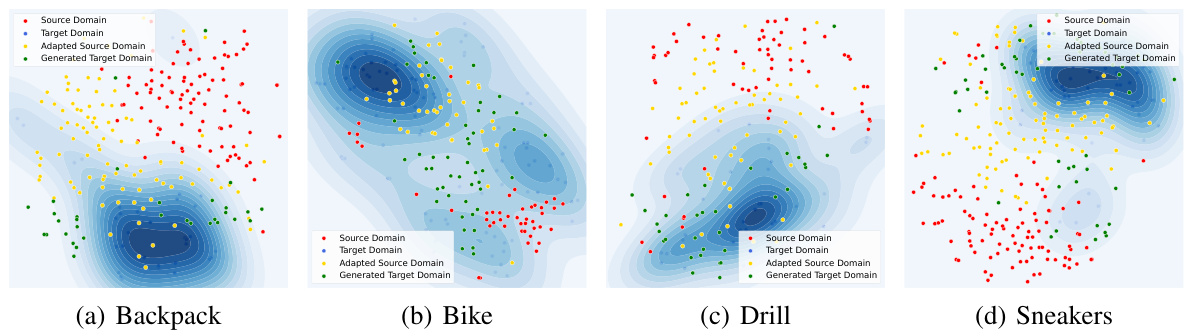

This figure illustrates the two-stage frameworks for unsupervised domain adaptation (UDA) and domain generalization (DG) using the proposed Terra model. The UDA framework (a) involves first training Terra to generate images in both the source and target domains. Then, it uses the trained Terra to transform source domain images into the style of the target domain, creating an ‘adapted’ source domain with a smaller domain gap to the target domain. This expanded source domain is then used with an existing UDA method. The DG framework (b) trains a ’t predictor’ network to assign a time variable to each image based on its style. This variable is then used by Terra to generate intermediate domains by interpolating between existing source domains. These new generated samples are combined with the original source domains to train a more generalized model.

This figure shows three examples of image morphing using the Terra model. The top row shows morphing between two portraits, one in the style of Van Gogh and the other in a more realistic style. The second row shows morphing between two images of a high-speed train, one in a photorealistic style and the other in a more painterly style. The bottom row shows morphing between two images of a pet, one a kitten and the other a dog. Each row demonstrates the ability of Terra to generate smooth transitions between different image styles and subjects.

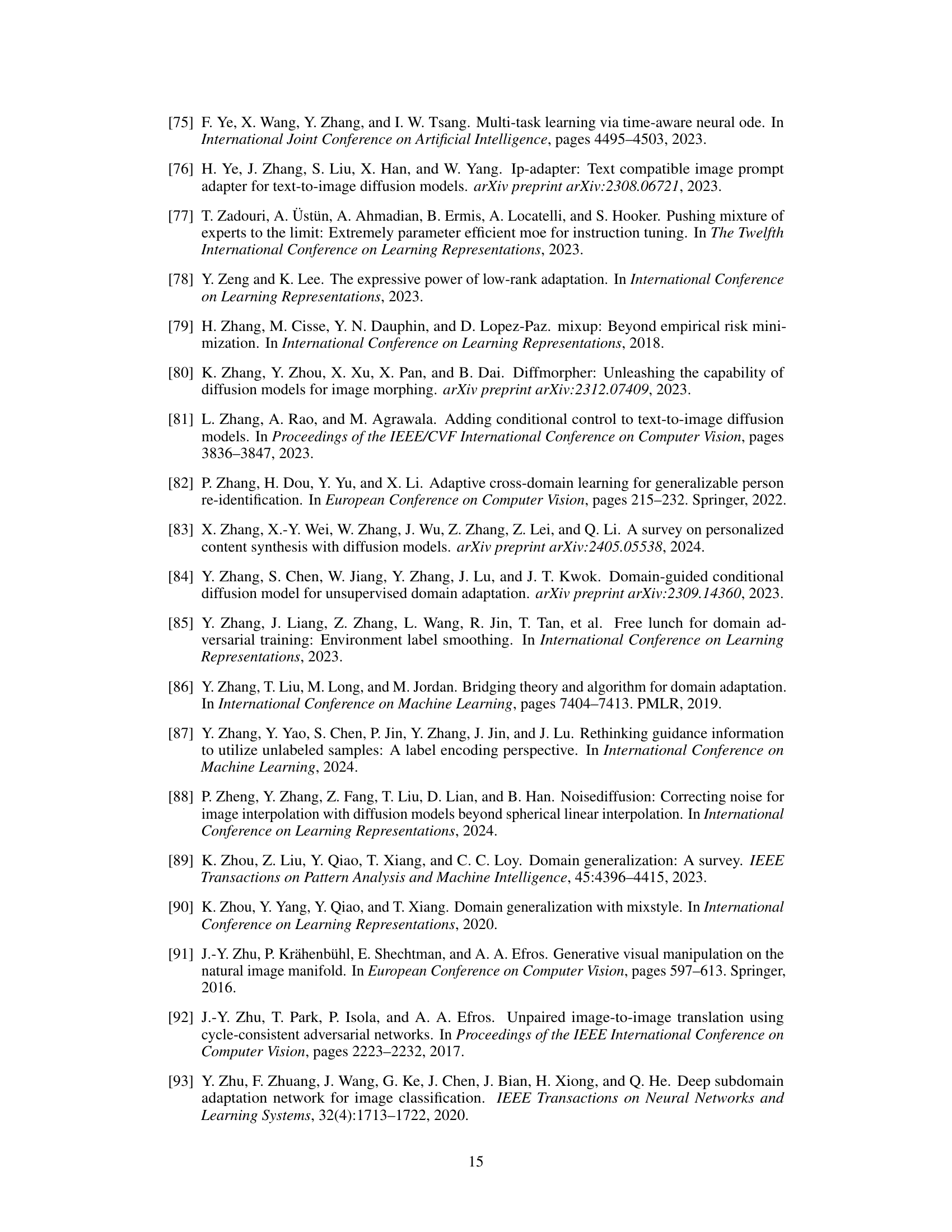

This figure uses t-SNE to visualize the effectiveness of the proposed Terra method in unsupervised domain adaptation (UDA). It shows the distribution of data points from the source domain, target domain, adapted source domain (source data transformed to resemble the target), and generated target domain (synthetic data generated to resemble the target). The visualization is done for four image classes from the Office-Home dataset (Pr→Cl task). The plots show how Terra helps bridge the gap between the source and target domains by generating intermediate samples, improving the performance of UDA.

The figure illustrates the proposed Terra, a Time-varying low-rank adapter, and its differences from the conventional LoRA adapter. Panel (a) shows the conceptual difference between LoRA and Terra: LoRA utilizes a single low-rank matrix update while Terra incorporates a time-varying component for dynamic domain transformations. Panel (b) demonstrates Terra’s application in generating domain flows. A continuous parameter manifold is formed by varying the time parameter ’t’ allowing for smooth transitions and the generation of intermediate domains between the source and target domains.

This figure shows a qualitative comparison of image morphing results between different methods: DGP, DDIM, LoRA interpolation, DiffMorpher and Terra. The top row shows the results using DGP (GAN-based). The second row displays results using DDIM, while the third row illustrates those obtained via LoRA interpolation. The fourth row shows the results using DiffMorpher, and the fifth shows results from the Terra method. Finally, the bottom row provides results of a method combining Terra with DiffMorpher. The figure demonstrates that Terra generates smoother and more natural-looking intermediate images compared to the other methods.

This figure shows three examples of the generative interpolation capabilities of Terra. The top row demonstrates morphing between two images (a portrait photo and an oil painting). The middle row shows style morphing (from photorealistic to watercolor painting). The bottom row depicts subject morphing (transitioning between images of a person and a pet). Each row illustrates a smooth transition between the source and target image, highlighting Terra’s capacity to create intermediate representations.

This figure shows three examples of image morphing using the Terra model. The top row demonstrates morphing between two images; the middle row shows style morphing; and the bottom row illustrates subject morphing. Each row displays a sequence of images that smoothly transition between the source and target images, showcasing Terra’s ability to generate intermediate images with consistent styles or subjects.

This figure illustrates the proposed Terra method, a time-varying low-rank adapter for fine-tuning diffusion models. Panel (a) shows how Terra builds upon the existing LoRA method by introducing a time-varying component, allowing for a continuous parameter manifold. Panel (b) demonstrates how Terra generates a continuous flow of intermediate domains between a source and target domain. This is achieved by varying a time parameter, ’t’, allowing for smooth transitions between different domains and styles.

More on tables

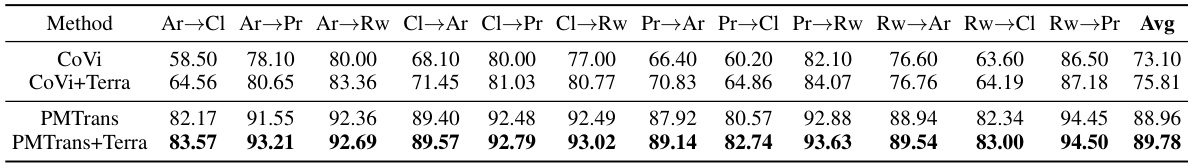

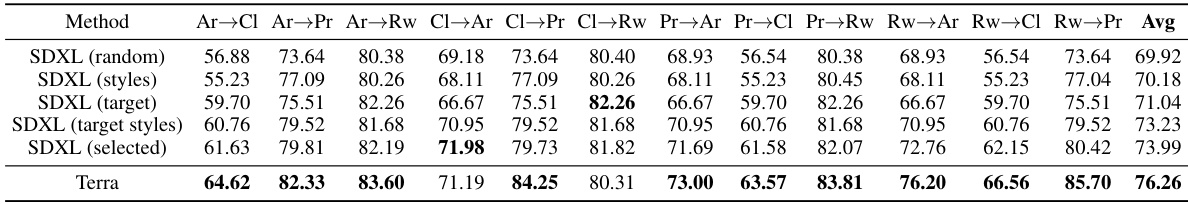

This table presents the results of unsupervised domain adaptation (UDA) experiments using different methods on the Office-Home and VisDA datasets. The table shows the transfer accuracy (percentage) achieved by each method on various sub-tasks within each dataset. The best performing method for each sub-task is highlighted in bold, providing a clear comparison across multiple state-of-the-art UDA techniques and the proposed Terra method.

This table shows the transfer accuracies achieved by different methods on the Office-Home and VisDA datasets for unsupervised domain adaptation (UDA). The results are presented as percentages and broken down by individual class and domain. The best performance for each setting is highlighted in bold, showing the effectiveness of the proposed method.

This table presents the results of unsupervised domain adaptation (UDA) experiments on the Office-Home and VisDA datasets. It compares the performance of several different methods, including the proposed Terra method, and shows the transfer accuracy for each method across different source and target domains. The best accuracy for each domain transfer task is highlighted in bold, showcasing the effectiveness of certain methods in transferring knowledge between domains.

The table presents three possible forms for the time-dependent function F(W, t) within the Terra model. These are Linear, Exponential, and Cosine. Each form offers a different way of incorporating the time variable (t) and the weight matrix (W) to create a parameter manifold for domain adaptation. The table also provides the derivatives of these functions with respect to time and the value of the function at time t=0. The ‘Diagonal’ column shows variants designed for the diagonal of the matrix, allowing for control over specific elements.

This table presents the results of an ablation study on the dimensionality of the time variable (t) used in the Terra model and a comparison of the linear form of Terra with other configurations. The experiment uses the PACS dataset for domain generalization (DG). The table shows the average accuracy across different domains (A, C, P, S) for various dimensions of t (dim1, dim2, dim3) and the Linear form, along with the baseline (ERM). The highest average accuracy for each configuration is highlighted in bold.

This table presents the performance of various unsupervised domain adaptation (UDA) methods on the Office-Home and VisDA datasets. The results are expressed as transfer accuracy percentages, which is a measure of how well a model trained on a source domain generalizes to a target domain. The table highlights the best-performing method for each task. The methods compared include ERM, DANN, AFN, CDAN, MDD, SDAT, MSGD, MCC, and the proposed Terra, both alone and integrated with existing methods (MCC+Terra and ELS+Terra).

This table presents the results of unsupervised domain adaptation (UDA) experiments using the proposed Terra method and several baseline methods on the Office-Home and VisDA datasets. The table shows the transfer accuracy (percentage of correctly classified images) for each method across different source and target domain combinations. The ‘Avg’ column represents the average accuracy across all domain combinations. The best performance for each domain combination is highlighted in bold, indicating the effectiveness of the Terra method in improving the accuracy of UDA compared to existing approaches.

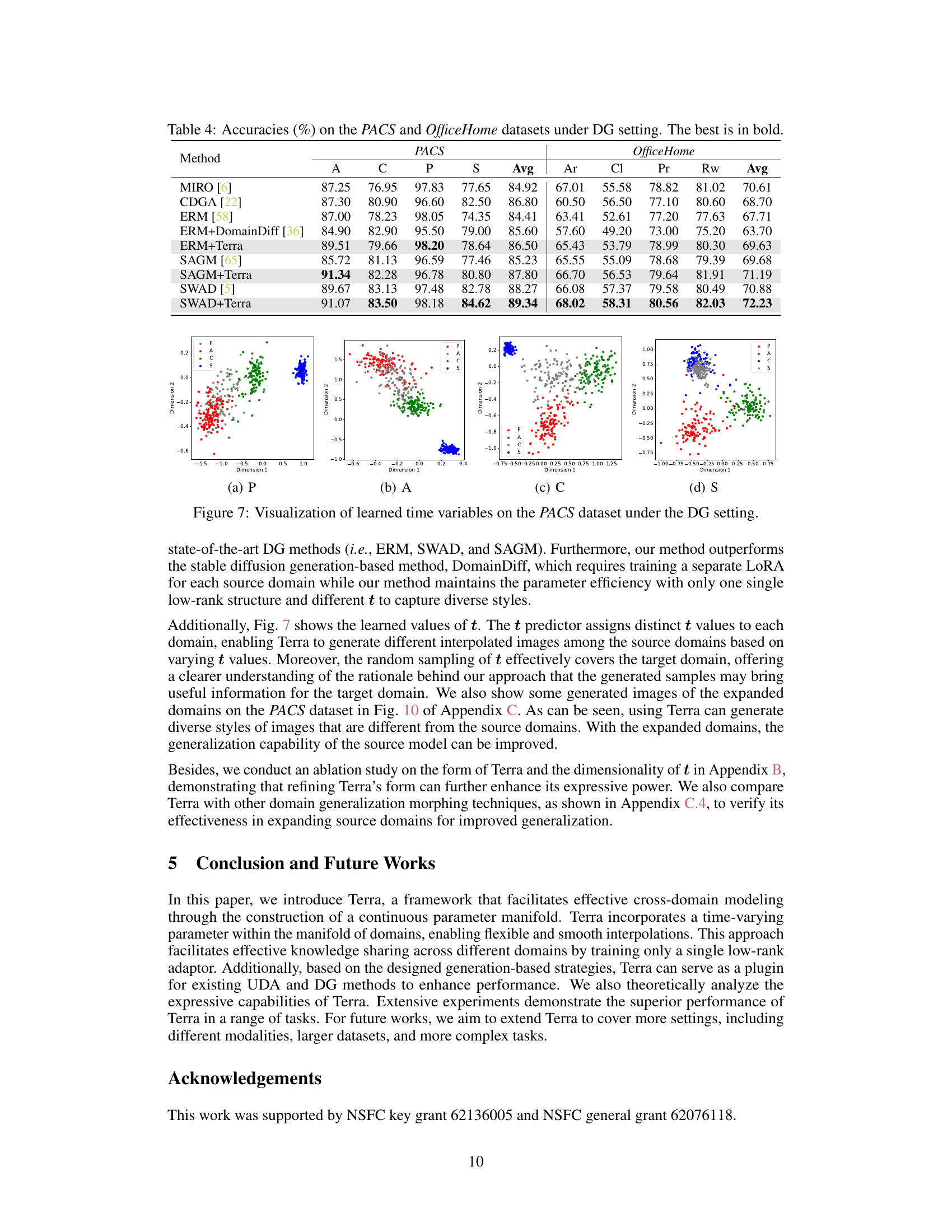

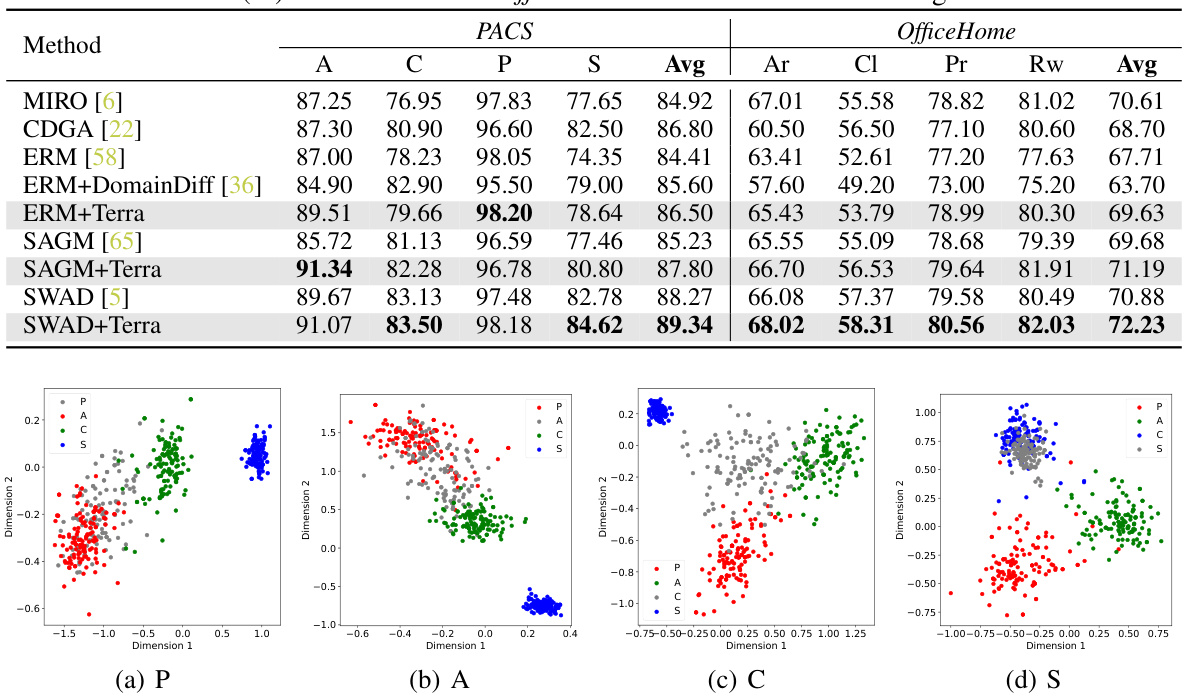

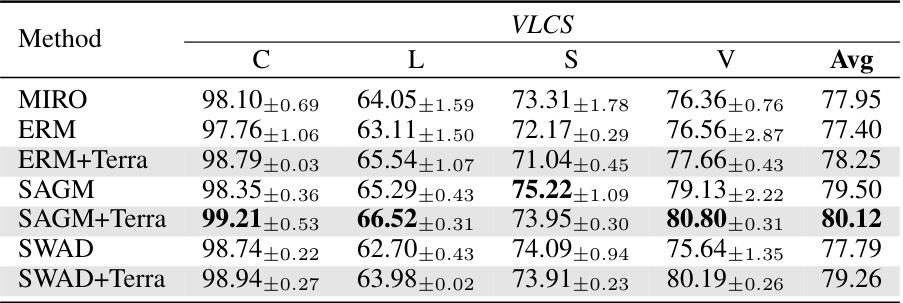

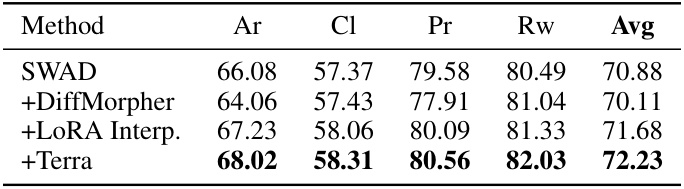

This table presents the classification accuracies achieved by different domain generalization (DG) methods on the PACS and Office-Home datasets. The methods include several baselines (ERM, MIRO, SAGM, SWAD) and the proposed Terra method, both alone and integrated with existing baselines. The table shows the average accuracy across different visual domains (A, C, P, S for PACS; Ar, Cl, Pr, Rw for Office-Home) and the overall average accuracy. The best performance for each setting is highlighted in bold. The results demonstrate the effectiveness of the Terra method in improving the generalization capabilities of the baseline models.

This table shows the transfer accuracies achieved by different unsupervised domain adaptation (UDA) methods on the Office-Home and VisDA datasets. The results are presented as percentages, indicating the success rate of transferring knowledge from a source domain to a target domain. The ‘best performance’ is highlighted in bold for each task, indicating which method performed best in each scenario.

This table compares the performance of different morphing methods (DiffMorpher and LoRA Interpolation) combined with the SWAD method for domain generalization on the Office-Home dataset. It highlights the superior performance of Terra, which integrates domain knowledge more effectively than direct interpolation between images.

This table shows the transfer accuracy achieved by different methods on the Office-Home and VisDA datasets for unsupervised domain adaptation (UDA). The results are presented as percentages and indicate the model’s ability to generalize from a source domain to a target domain. The best performing method for each sub-task is highlighted in bold, allowing for easy comparison between different approaches.

This table presents the transfer accuracies achieved by various unsupervised domain adaptation (UDA) methods on the Office-Home and VisDA datasets. The results are shown as percentages, and the best performance for each category is highlighted in bold. The table compares the performance of the proposed Terra method (combined with existing UDA methods) against several baseline UDA methods. This allows for a quantitative evaluation of Terra’s effectiveness in improving the accuracy of domain adaptation.

This table presents the transfer accuracy results for several unsupervised domain adaptation (UDA) methods on two benchmark datasets: Office-Home and VisDA. The results show the performance of each method across different source and target domain pairs. The best performing method for each pair is highlighted in bold. This allows for a comparison of the effectiveness of different UDA approaches in transferring knowledge from a source domain to a target domain.

This table presents the performance comparison of different unsupervised domain adaptation (UDA) methods on two benchmark datasets: Office-Home and VisDA. The results are presented as transfer accuracy percentages, indicating the success rate of adapting a model trained on a source domain to a target domain. The best performing method for each task and dataset is highlighted in bold, allowing for a clear comparison of UDA method effectiveness.

This table presents the results of domain generalization (DG) experiments on the PACS and OfficeHome datasets. Several methods are compared, including ERM (Empirical Risk Minimization), SWAD (Sharpness-Aware Minimization), SAGM (Sharpness-Aware Gradient Matching), DomainDiff, and the proposed Terra method, both individually and in combination with other methods. The table shows the accuracy achieved by each method on different subsets of each dataset, representing diverse visual domains. The best performance for each subset is highlighted in bold, enabling easy comparison between the different methods and their performance in various domains.

Full paper#