↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Active learning aims to improve learning efficiency by querying labels only for the most informative data points. Previous research mainly focused on uniform guarantees, overlooking distribution-specific behaviors. This led to a less precise understanding of optimal learning rates across various datasets and learning scenarios.

This work shifts to a distribution-dependent framework, offering a complete characterization of achievable learning rates in active learning. Four distinct categories of learning rates are identified: arbitrarily fast, exponential, sublinear approaching 1/n, and arbitrarily slow. These are determined by combinatorial measures like Littlestone trees, star trees, and VCL trees, providing a precise map of learning curve possibilities based on data complexity.

Key Takeaways#

Why does it matter?#

This paper is crucial because it completely characterizes optimal learning rates in active learning, a field lacking such a comprehensive understanding. It resolves a long-standing open problem, offering new algorithms and insights for researchers. This work opens new avenues for research by exploring the relationship between combinatorial complexity measures and achievable learning rates, impacting future active learning algorithm design.

Visual Insights#

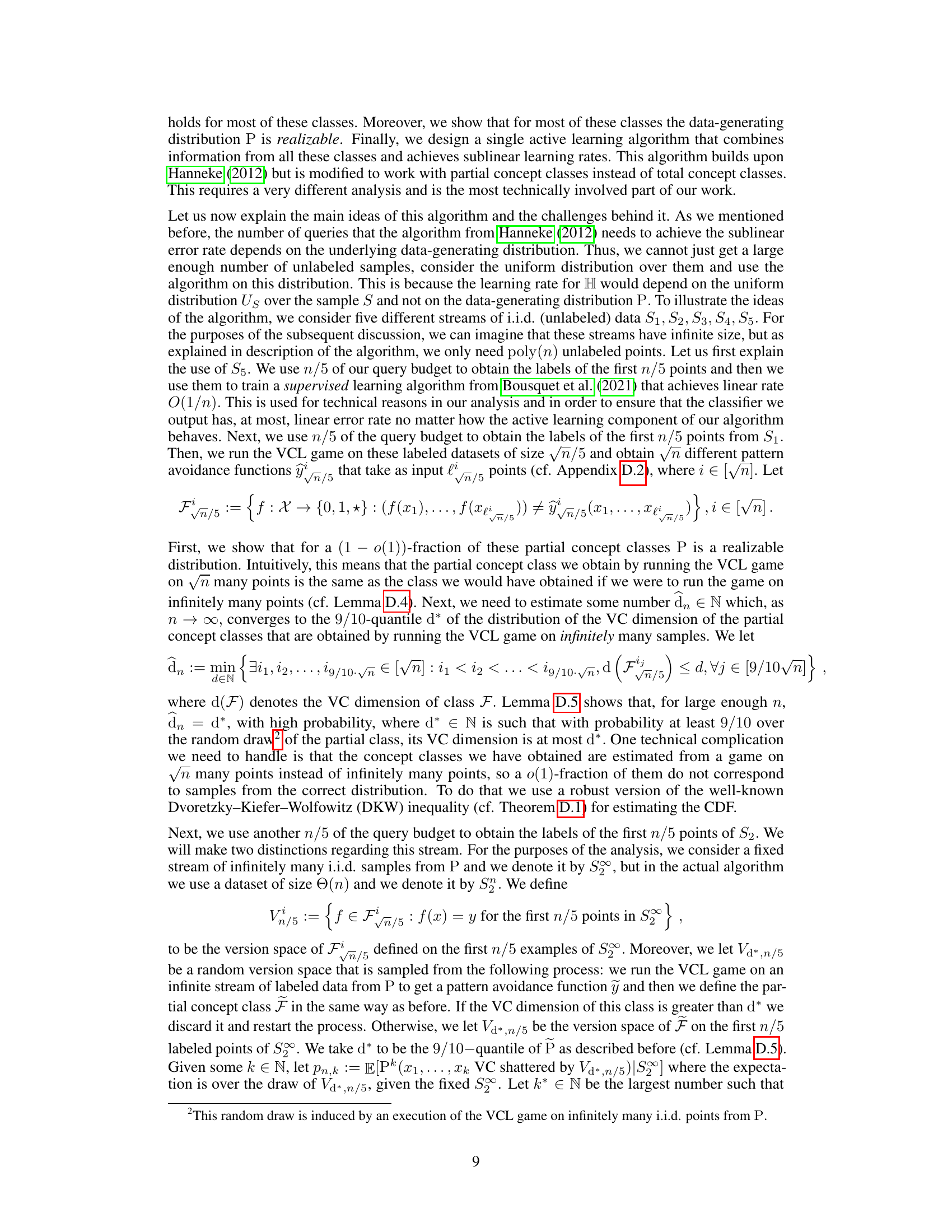

This algorithm achieves arbitrarily fast learning rates in the active learning setting. It takes as input a label budget N and a rate function R(n). The algorithm proceeds by creating sets of labeled and unlabeled data, splitting the labeled data into batches, training ordinal SOA (Sequential Optimal Algorithm) on prefixes of batches, evaluating classifiers on unlabeled data, and forming equivalence classes based on classifier behavior. The algorithm iteratively queries labels of points that cause disagreement among classifiers, aiming to efficiently identify a classifier correctly classifying all queried points. If such a classifier is found, it’s returned; otherwise, an arbitrary classifier from the equivalence classes is selected.

Full paper#