↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Collaborative inference, while enhancing resource-limited IoT device capabilities, suffers from data leakage vulnerabilities exposing input data and predictions. Existing defense mechanisms often severely compromise model accuracy.

InfoScissors, a novel defense strategy, tackles this by directly reducing the mutual information between a model’s intermediate outputs and the device’s input/predictions. This is achieved through a carefully designed training process that regularizes the model to filter private information while maintaining accuracy. InfoScissors demonstrates significant improvements, outperforming existing methods in diverse attack scenarios and offering a superior trade-off between accuracy and privacy.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working on edge computing, collaborative inference, and data privacy. It offers a novel defense mechanism against data leakage, addresses limitations of existing methods, and opens avenues for further research in secure AI systems. Its theoretical analysis and empirical evaluation provide valuable insights for advancing privacy-preserving machine learning.

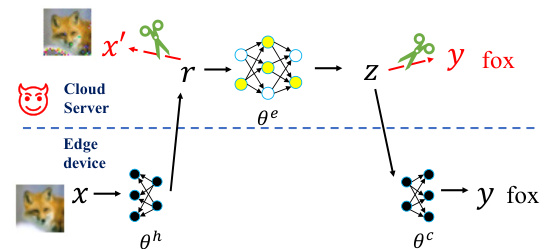

Visual Insights#

This figure illustrates a general framework for collaborative inference, where a model is split between an edge device and a cloud server. The edge device processes the input data and sends intermediate representations to the cloud server, which then performs further processing and sends back the results. A malicious server attempts to infer the input data and predictions from the exchanged information. The proposed method, InfoScissors, aims to prevent this data leakage by reducing the mutual information between the model’s intermediate outputs and the edge device’s input and predictions.

This table shows a comparison of the accuracy achieved by three different methods: the clean accuracy of a classifier on the edge device, the accuracy of a model completion (MC) attack performed by a malicious cloud server using only 40 labeled samples, and the accuracy of a model trained from scratch on the cloud server (also using 40 labels). The table highlights that the MC attack achieves surprisingly high accuracy (69.31%), close to the clean accuracy of the edge device (77.20%), demonstrating the vulnerability of collaborative inference to prediction leakage. In contrast, training a model from scratch on the server produces significantly lower accuracy (15.34%).

In-depth insights#

Mutual Info Defense#

Mutual information (MI) based defenses against data leakage in collaborative inference aim to reduce the information shared between sensitive data (input/predictions) and intermediate model outputs. The core idea is that by limiting the MI, the risk of a malicious server reconstructing private information is significantly decreased. InfoScissors, a defense strategy mentioned in the context, exemplifies this approach by regularizing the model during training to minimize the MI between intermediate representations and the original input/output. A key challenge lies in accurately estimating MI, which is often computationally expensive for high-dimensional data. Variational methods are frequently employed to approximate MI, but these approximations can introduce inaccuracies and potentially limit the effectiveness of the defense. The effectiveness of MI defenses strongly depends on the specific attack model and dataset used for evaluation, and a comprehensive theoretical analysis is crucial to understand their limitations and strengths. The trade-off between privacy and model utility is paramount, as overly strong defenses might severely hamper model performance. Therefore, careful design and rigorous evaluation are vital to ensure that an MI-based defense achieves a balance between privacy protection and acceptable utility.

InfoScissors Method#

The InfoScissors method proposes a novel defense against data leakage in collaborative inference by leveraging mutual information. It directly addresses the vulnerabilities of prior approaches based on the Variational Information Bottleneck (VIB) which often suffer from significant utility loss. InfoScissors operates by regularizing the model during training to minimize the mutual information between intermediate representations and both the input data and the final predictions. This dual regularization strategy, applied to both the edge device’s head model and the cloud server’s encoder, enhances privacy without heavily compromising model accuracy. The method is theoretically grounded, providing variational upper bounds for mutual information and incorporating an adversarial training scheme to further refine the defense. Empirical evaluations demonstrate InfoScissors’s superior robustness against multiple attacks, including model inversion and model completion attacks, showcasing a better trade-off between privacy protection and model utility compared to existing baselines.

VIB Inadequacies#

The authors critique the Variational Information Bottleneck (VIB) approach, frequently used in mutual information-based defense strategies against data leakage in collaborative inference. They argue that VIB’s focus on minimizing the mutual information between representations and inputs, while seemingly beneficial for privacy, neglects the crucial role of information relevant to the prediction task. By forcing the representations to be close to a fixed Gaussian distribution, VIB inadvertently compromises model utility, leading to substantial performance degradation. This is particularly problematic when dealing with shallow head models on resource-constrained edge devices, where strong attacks are more easily launched. InfoScissors, in contrast, directly tackles data leakage by regularizing both input and prediction representations separately, achieving a superior balance between privacy and accuracy. This is accomplished by developing a novel mutual information estimation method that circumvents VIB’s limitations, demonstrating a clear advantage in defending against strong attacks without a significant drop in model performance.

Attack Robustness#

Attack robustness is a critical aspect of any defense mechanism against data leakage in collaborative inference. A robust defense should effectively withstand various attack strategies, including model inversion (MI) and model completion (MC) attacks. The paper’s evaluation of InfoScissors across multiple datasets and diverse attacks (black-box and white-box) demonstrates its strong robustness. InfoScissors’s ability to maintain high model accuracy (less than a 3% drop) even under strong attacks, particularly when the edge device has a shallow model, is a significant achievement. The theoretical analysis further strengthens the claim, highlighting InfoScissors’ superiority over VIB-based methods. However, future research should investigate robustness against more sophisticated and adaptive attacks, and explore the impact of various hyperparameter settings on robustness, particularly concerning the trade-off between accuracy and privacy preservation. The generalizability of the defense across different model architectures and datasets also requires further exploration.

Future Research#

Future research directions stemming from this paper could explore several promising avenues. Extending InfoScissors to handle more complex collaborative inference settings, such as those involving multiple edge devices or heterogeneous models, is crucial. Improving the efficiency and scalability of the InfoScissors training algorithm is also needed for practical deployment. The theoretical analysis could be deepened by investigating the tightness of the proposed mutual information bounds and exploring alternative regularization techniques. Furthermore, a comparative study with other defense methods beyond those considered in the paper, including advanced adversarial training strategies and differential privacy approaches, would provide additional insights. Finally, it would be valuable to analyze the robustness of InfoScissors against more sophisticated attacks, such as those which adapt during training, and to investigate the potential for combining InfoScissors with other defense mechanisms for enhanced security.

More visual insights#

More on figures

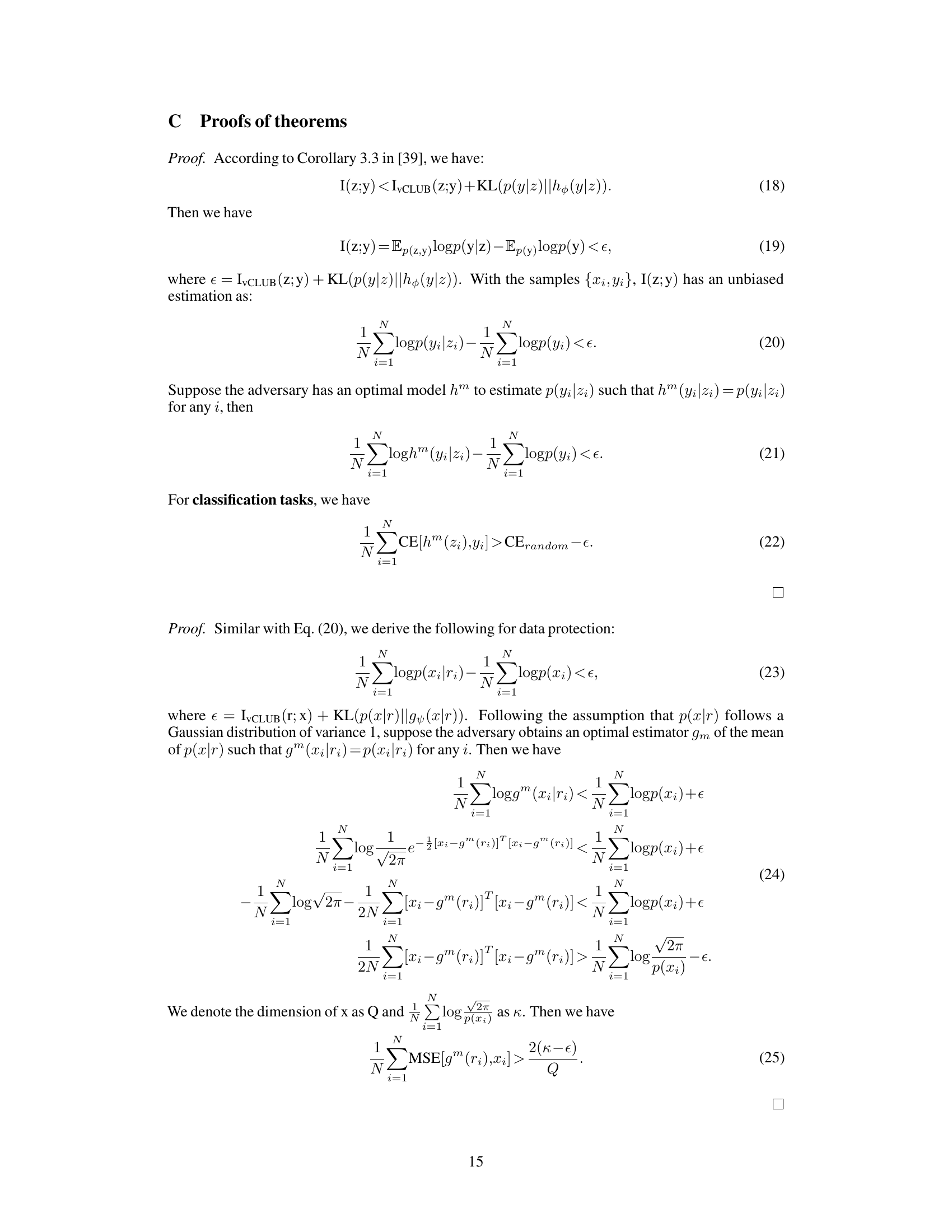

This figure shows the results of the Knowledge Alignment (KA) attack on the CIFAR10 dataset using different defense mechanisms. Each row displays the reconstructed images under a specific level of defense, starting with no defense at the top and progressively stronger defenses towards the bottom. The reconstructed image quality decreases as the defense strength increases, reflecting the trade-off between privacy protection and model accuracy. The SSIM (Structural Similarity Index) values quantitatively measure the similarity between the original and reconstructed images.

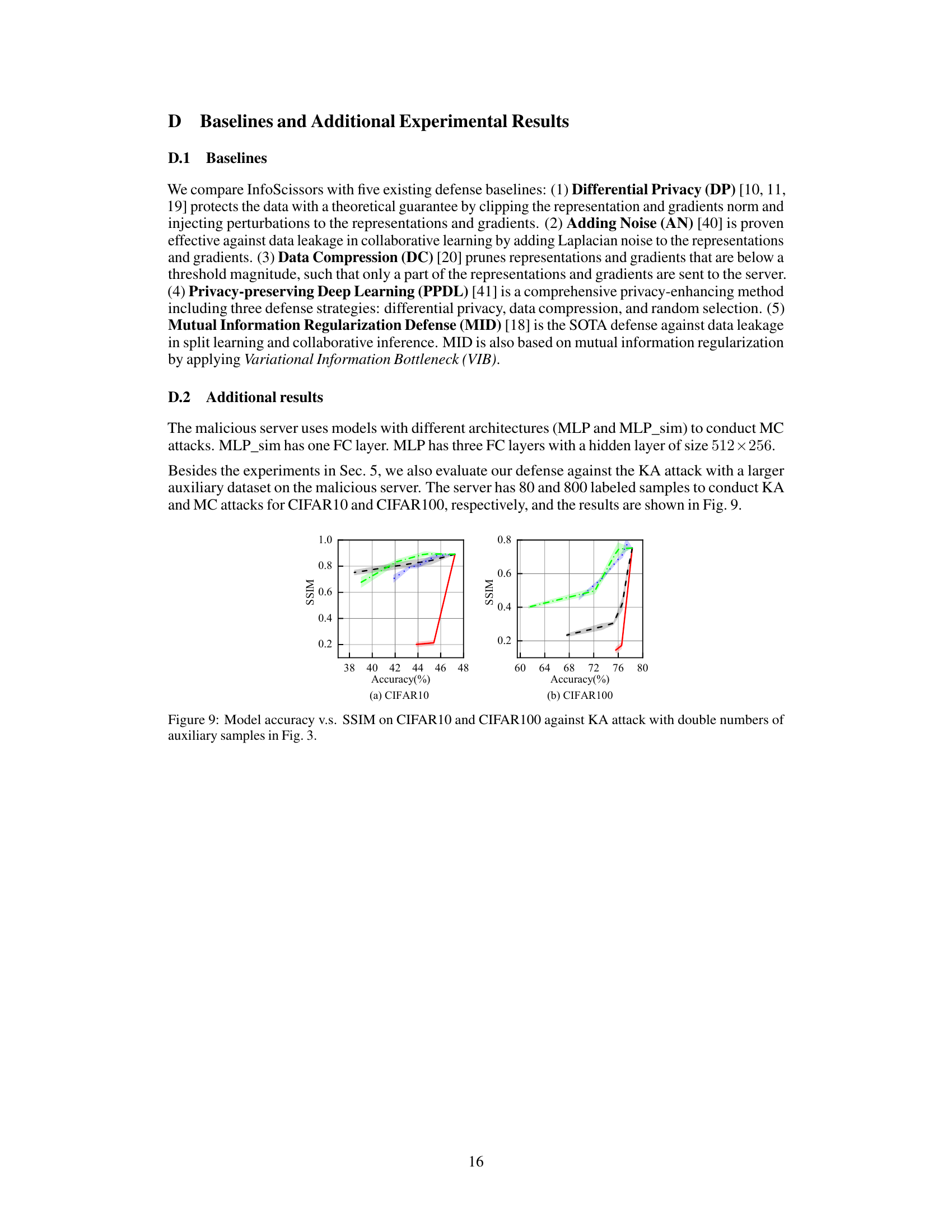

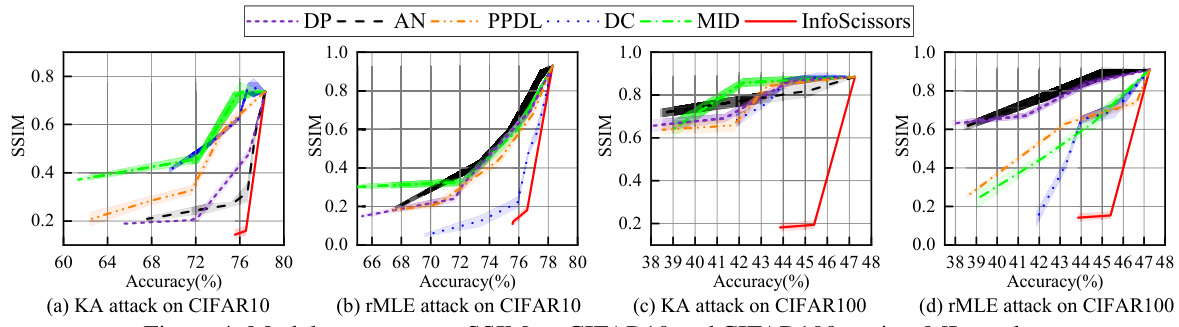

This figure shows the trade-off between model accuracy and the structural similarity index (SSIM) of reconstructed images under different defense methods against model inversion (MI) attacks. Lower SSIM values indicate better defense against input leakage, while higher accuracy represents better model utility. The figure compares InfoScissors with DP, AN, PPDL, DC, and MID on both CIFAR10 and CIFAR100 datasets, using KA and rMLE attacks. It demonstrates InfoScissors’s superior performance in achieving low SSIM with minimal accuracy loss.

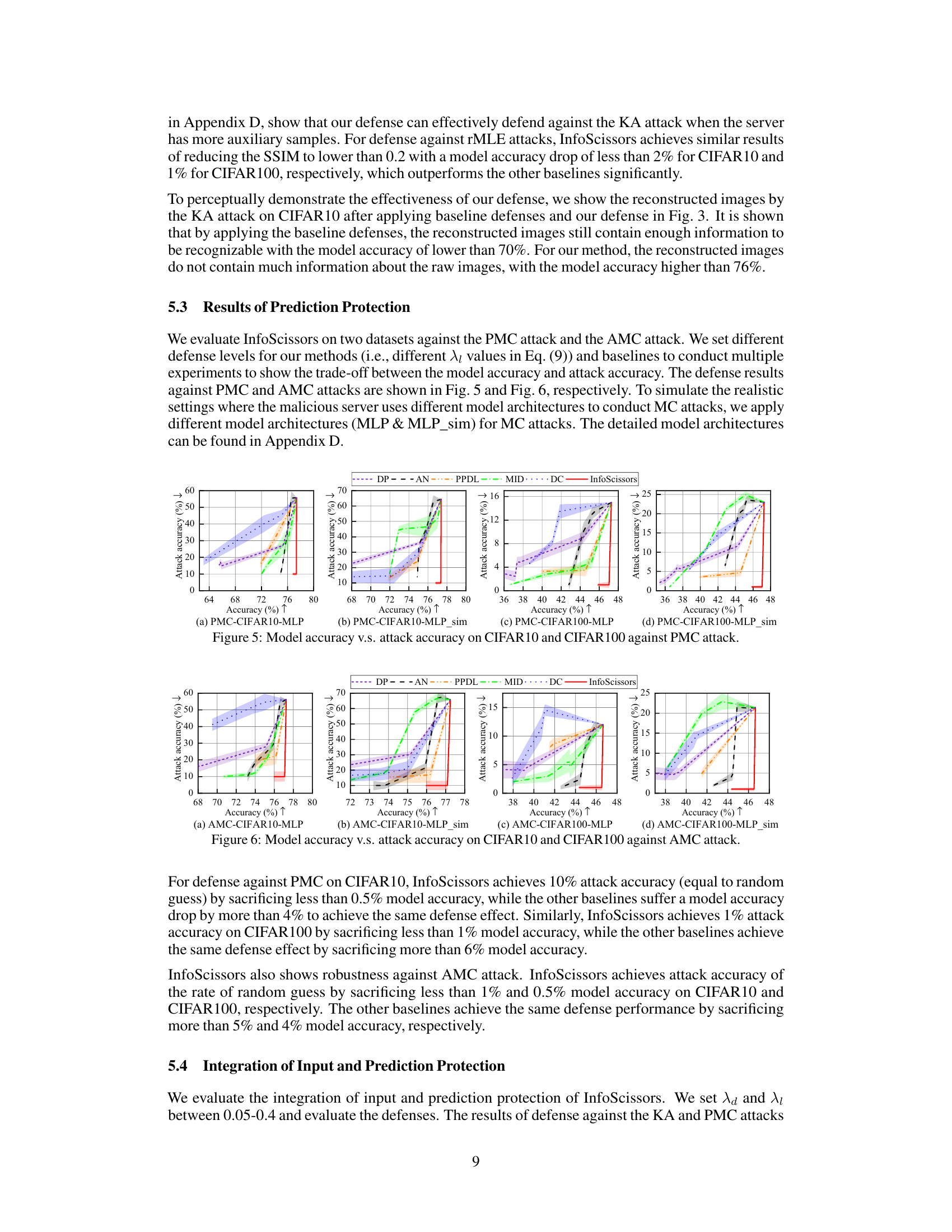

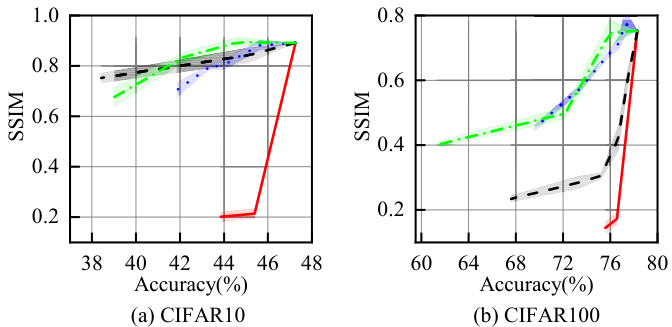

This figure compares the model accuracy against the attack accuracy on CIFAR10 and CIFAR100 datasets when using different defense methods against Passive Model Completion (PMC) attacks. It shows the trade-off between maintaining model accuracy and the effectiveness of the defense in preventing successful attacks. The results are presented for two variations of the PMC attack using different MLP architectures.

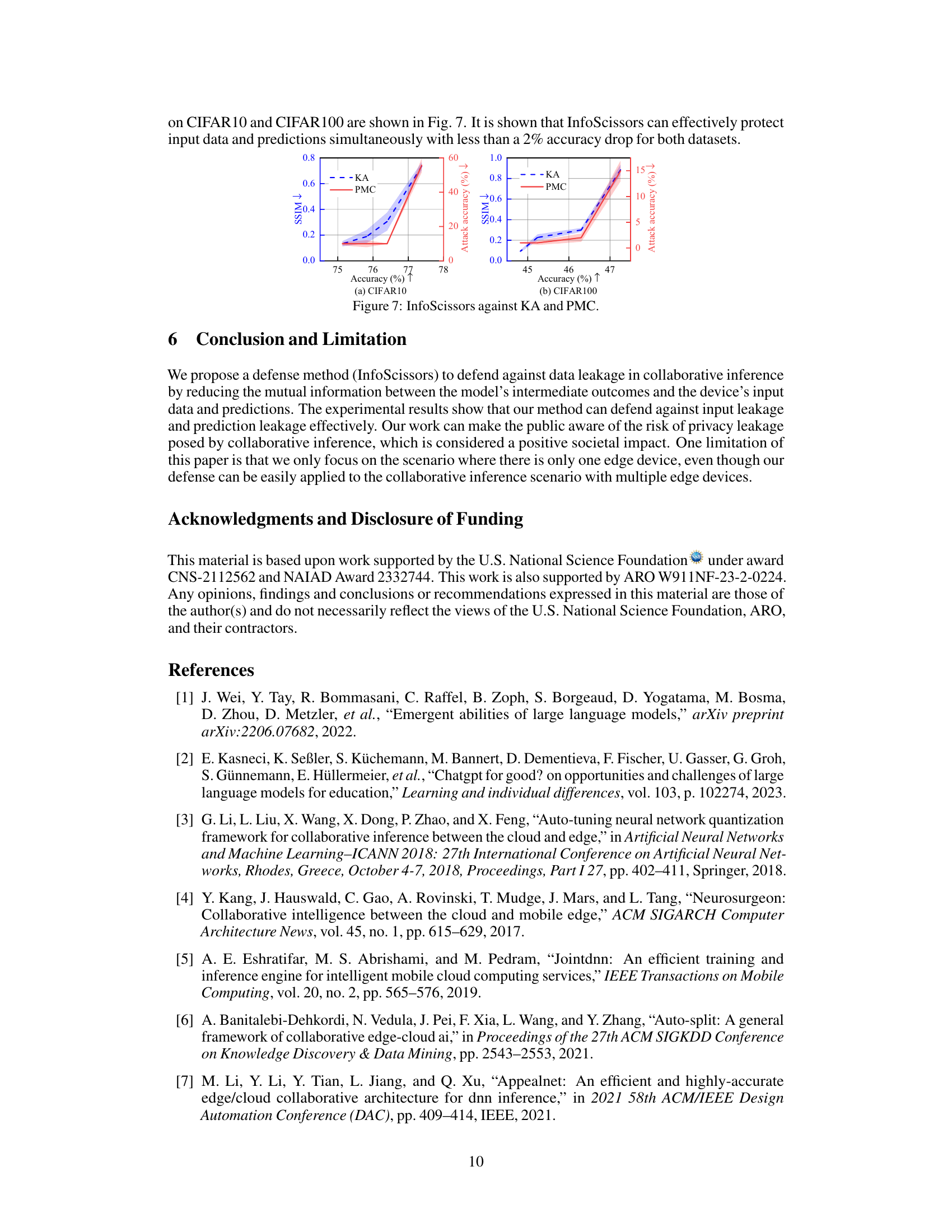

This figure shows the results of the integrated defense of InfoScissors against the KA and PMC attacks on CIFAR10 and CIFAR100 datasets. It demonstrates the trade-off between model accuracy and the effectiveness of defense against both input and prediction leakage. The shaded areas represent the range of results across multiple trials.

This figure shows the results of a Knowledge Alignment (KA) attack on CIFAR10. The left column displays the original input images from the CIFAR10 dataset. The right column shows the images reconstructed by the KA attack, demonstrating the ability of the attacker to reconstruct input images from intermediate representations. The quality of the reconstructed images varies, highlighting the effectiveness of different defense mechanisms.

This figure shows the trade-off between model accuracy and the structural similarity index (SSIM) for various defense methods against model inversion attacks on CIFAR10 and CIFAR100 datasets. Lower SSIM values indicate better defense performance against reconstructing input images, while higher accuracy reflects better model utility. The red line represents the proposed InfoScissors method, while other lines represent baseline defense methods. The plots demonstrate InfoScissors’s effectiveness in achieving low SSIM values (high defense performance) with minimal accuracy loss.

Full paper#