↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Deep learning models often struggle with out-of-distribution (OOD) data, making overconfident wrong predictions. Current methods like Evidential Deep Learning (EDL) have limitations in handling uncertainty, especially when dealing with ambiguous data points. This often leads to poor OOD detection performance.

The proposed Hyper-opinion Evidential Deep Learning (HEDL) tackles this issue by integrating both ‘sharp’ evidence (supporting a single category) and ‘vague’ evidence (accommodating multiple categories). A novel opinion projection mechanism converts the hyper-opinion into a standard multinomial opinion, allowing for precise classification and uncertainty estimation within the EDL framework. HEDL achieves superior OOD detection performance by providing a more comprehensive evidentiary foundation. It also solves the vanishing gradient problem, a common issue with fully connected layers in EDL, significantly improving classification accuracy.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working on out-of-distribution (OOD) detection in deep learning. It introduces a novel approach that significantly improves OOD detection accuracy while maintaining in-distribution classification performance, directly addressing a major challenge in the field. The proposed method, Hyper-opinion Evidential Deep Learning (HEDL), offers a new way to model uncertainty and provides a valuable contribution to the ongoing efforts to develop more robust and reliable deep learning models. The superior performance of HEDL, along with its mitigation of the vanishing gradient problem, opens up new avenues for improving model robustness and reliability.

Visual Insights#

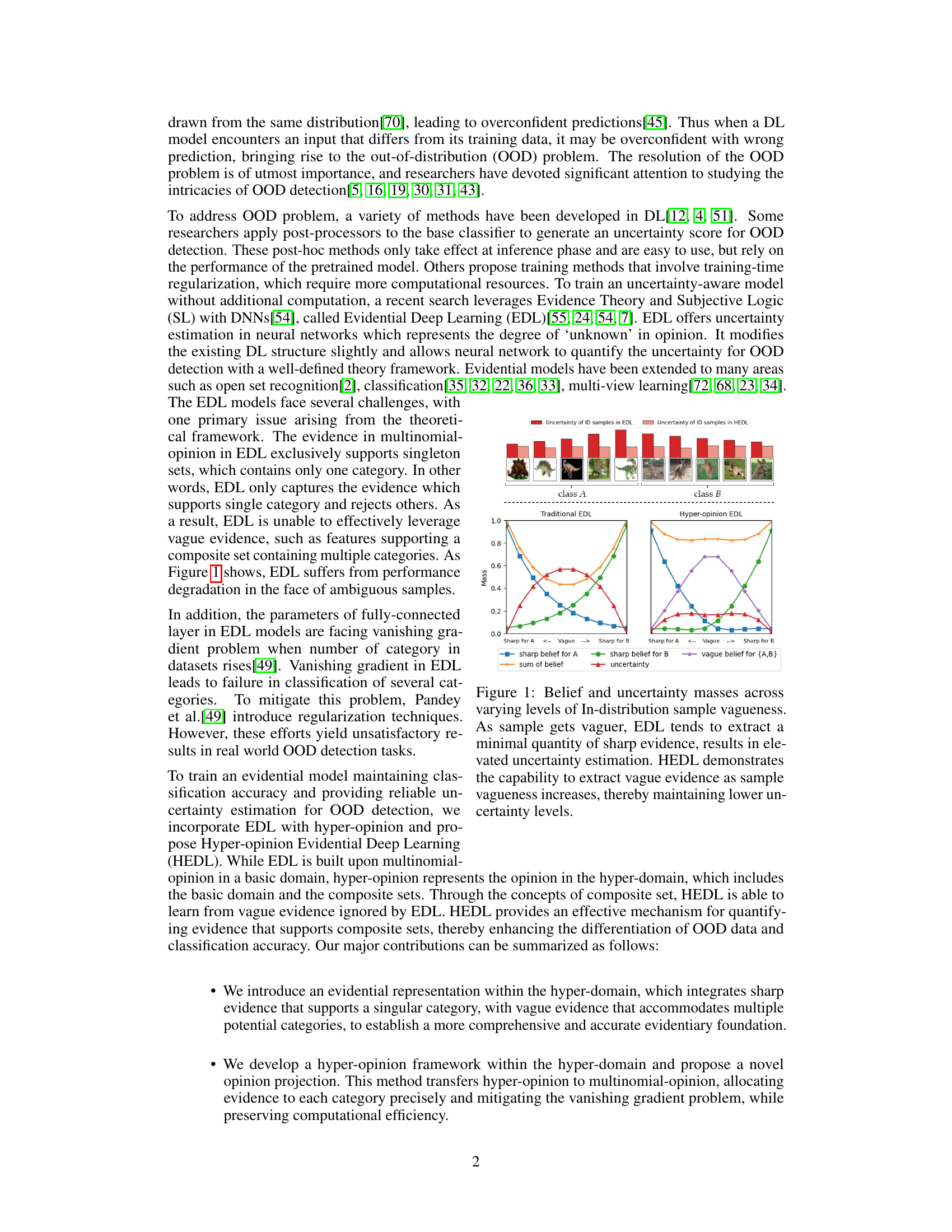

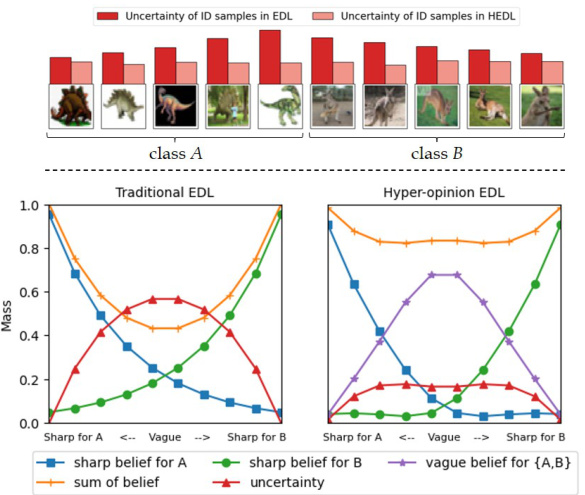

This figure compares the belief and uncertainty masses produced by traditional Evidential Deep Learning (EDL) and the proposed Hyper-opinion Evidential Deep Learning (HEDL) methods for in-distribution (ID) samples with varying degrees of vagueness. It shows that as the vagueness of the ID samples increases, EDL extracts less sharp evidence, leading to higher uncertainty. In contrast, HEDL effectively leverages vague evidence, resulting in lower uncertainty estimates even when dealing with ambiguous data points.

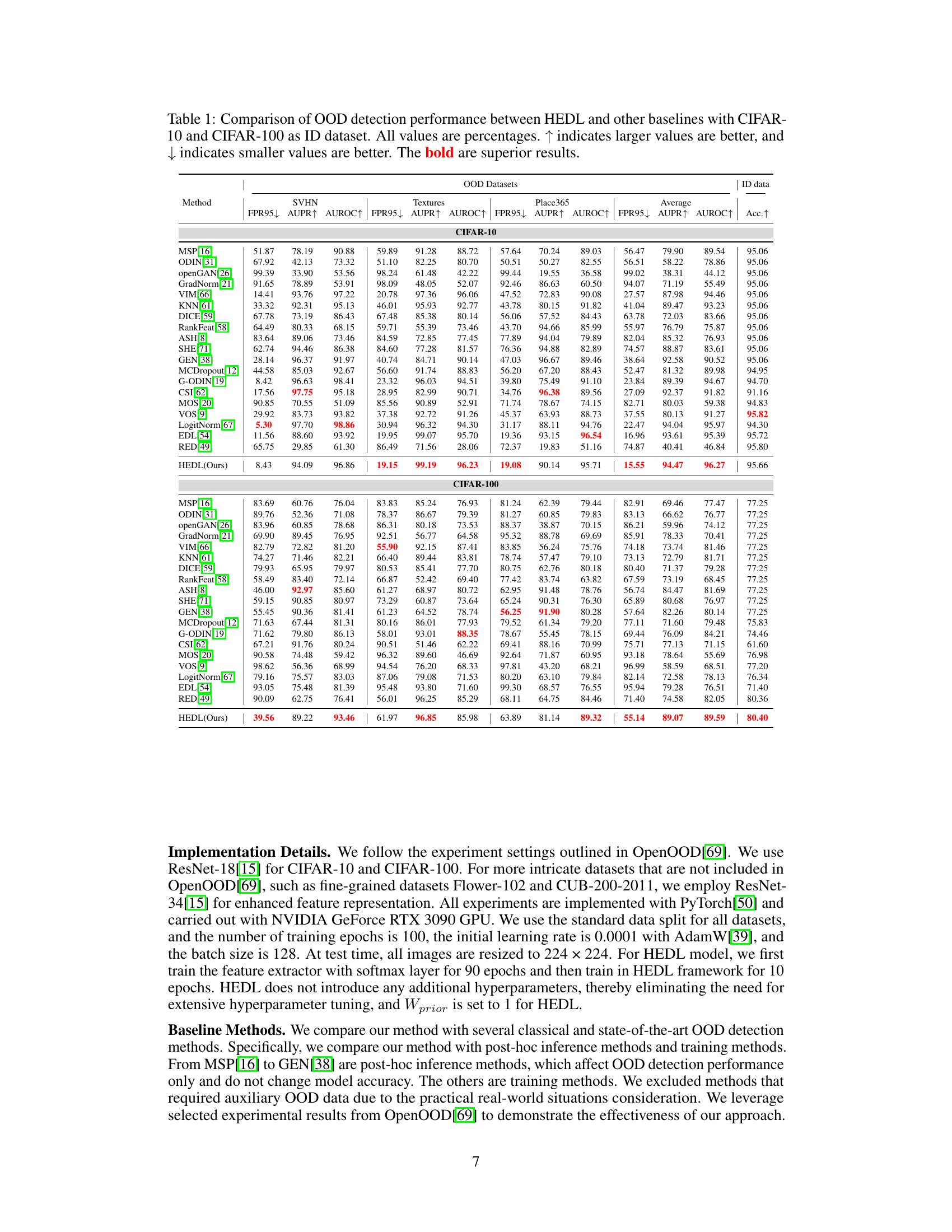

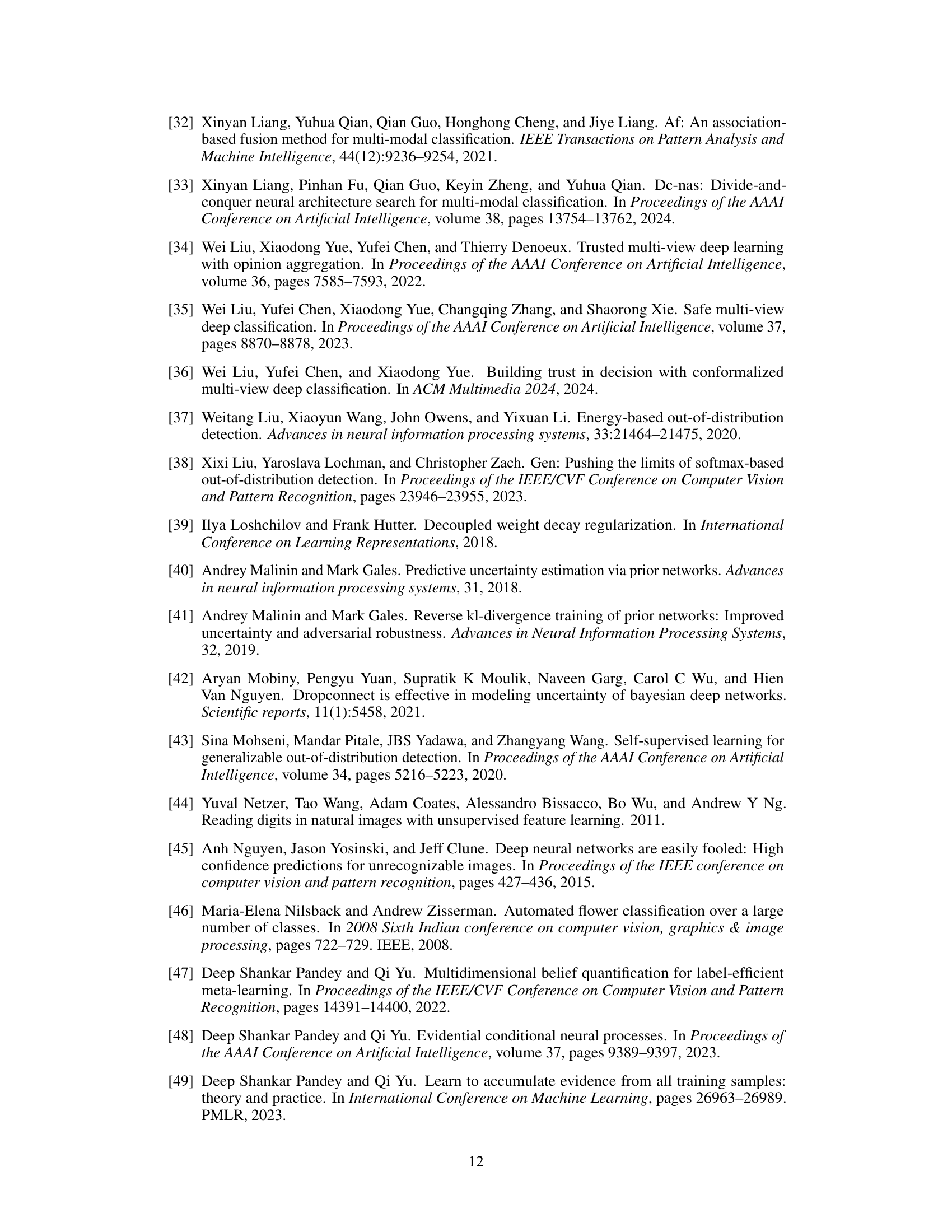

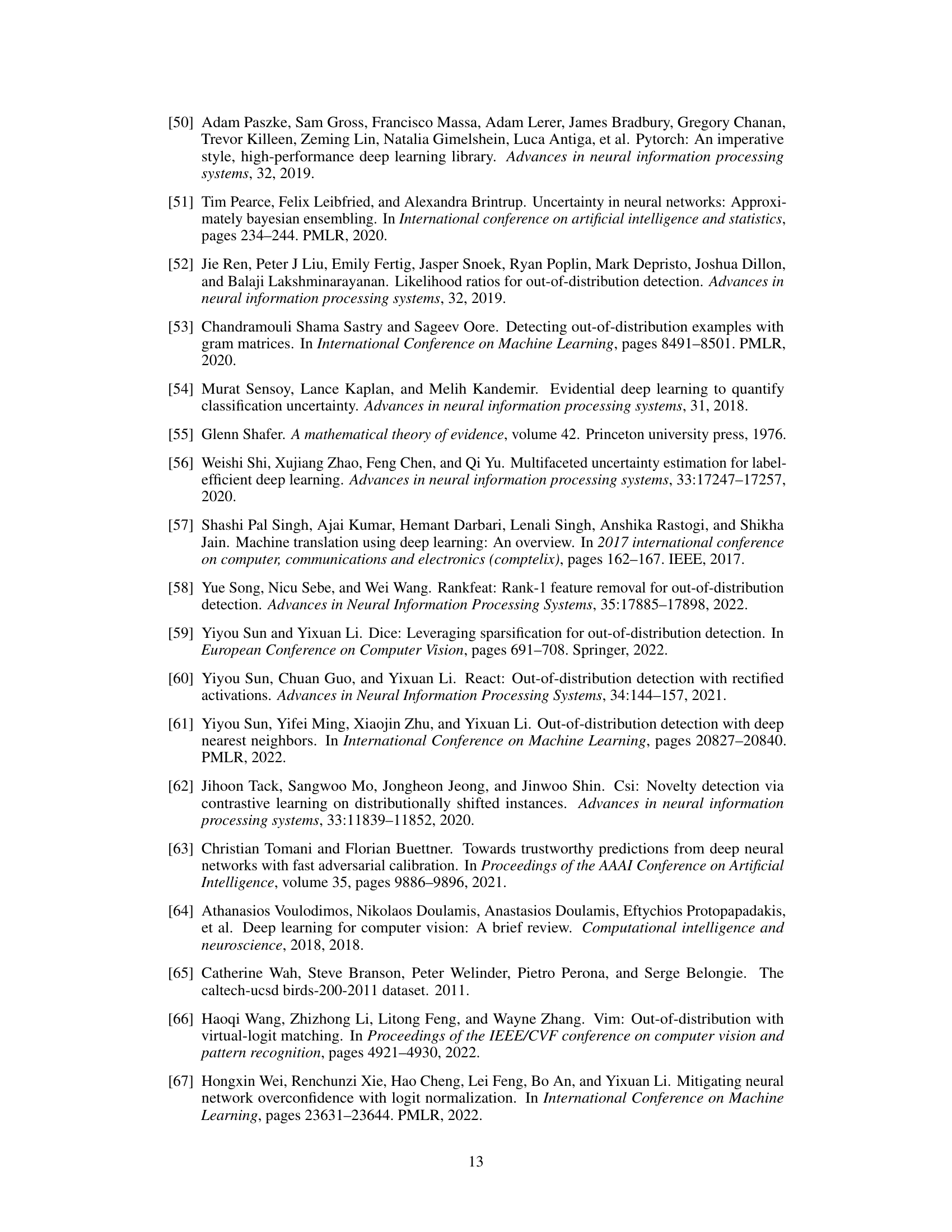

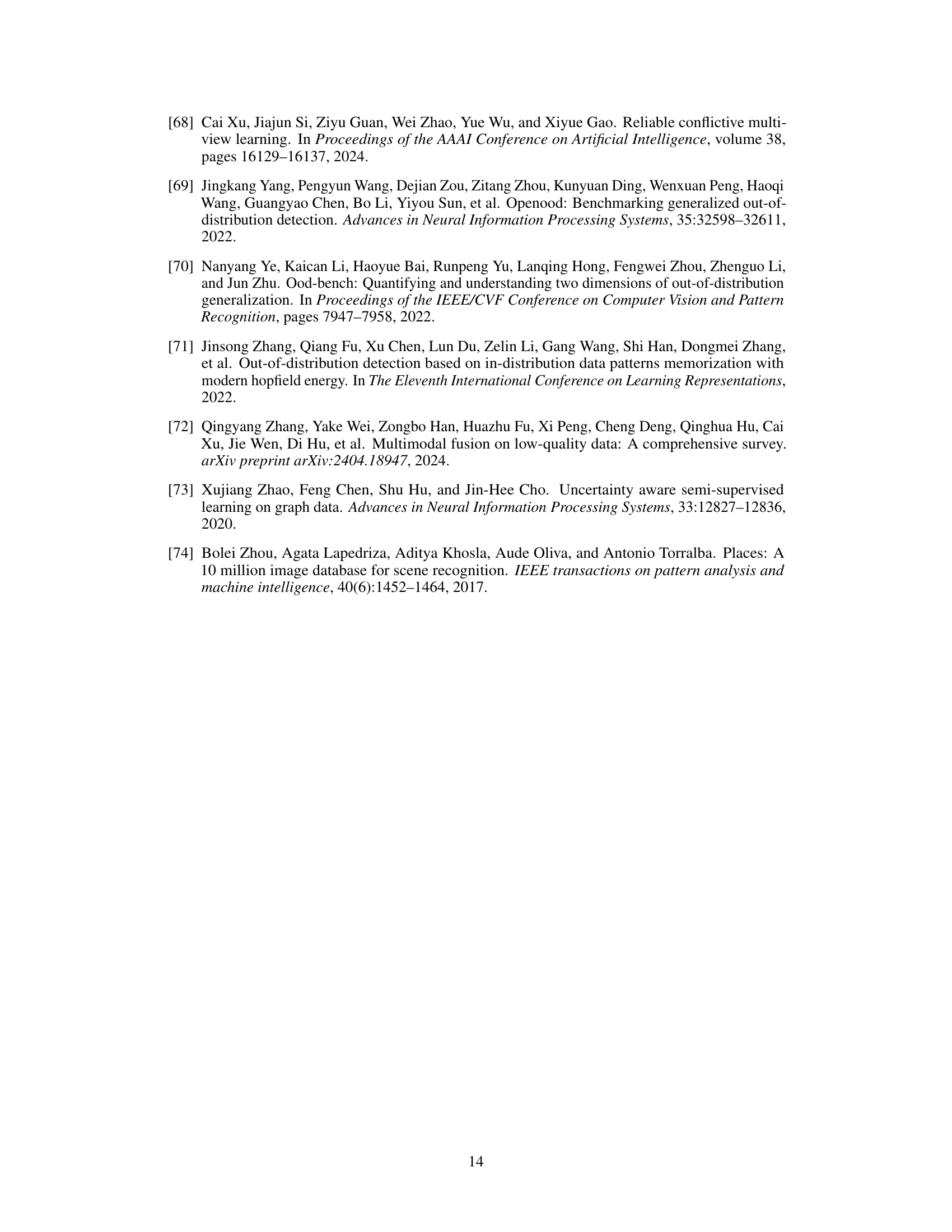

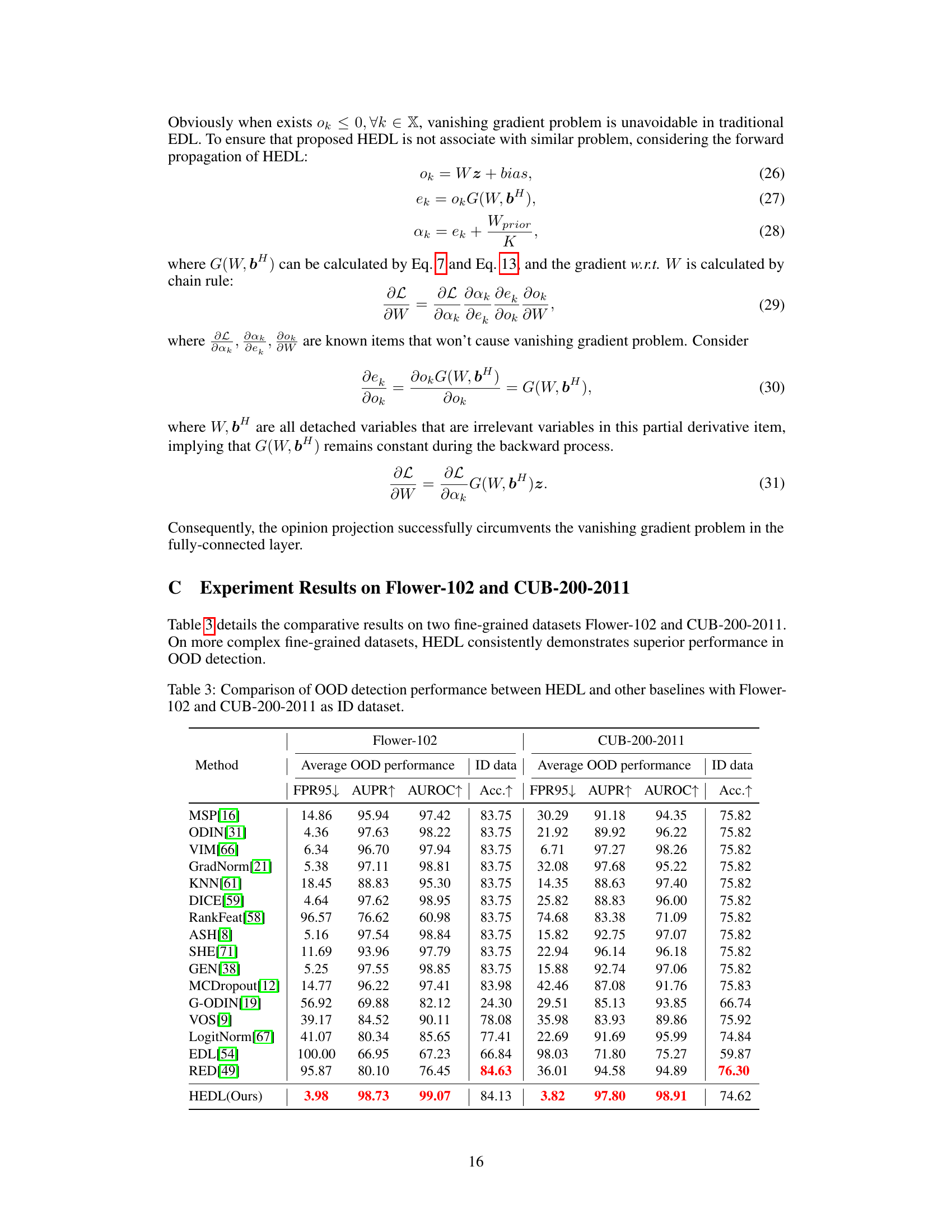

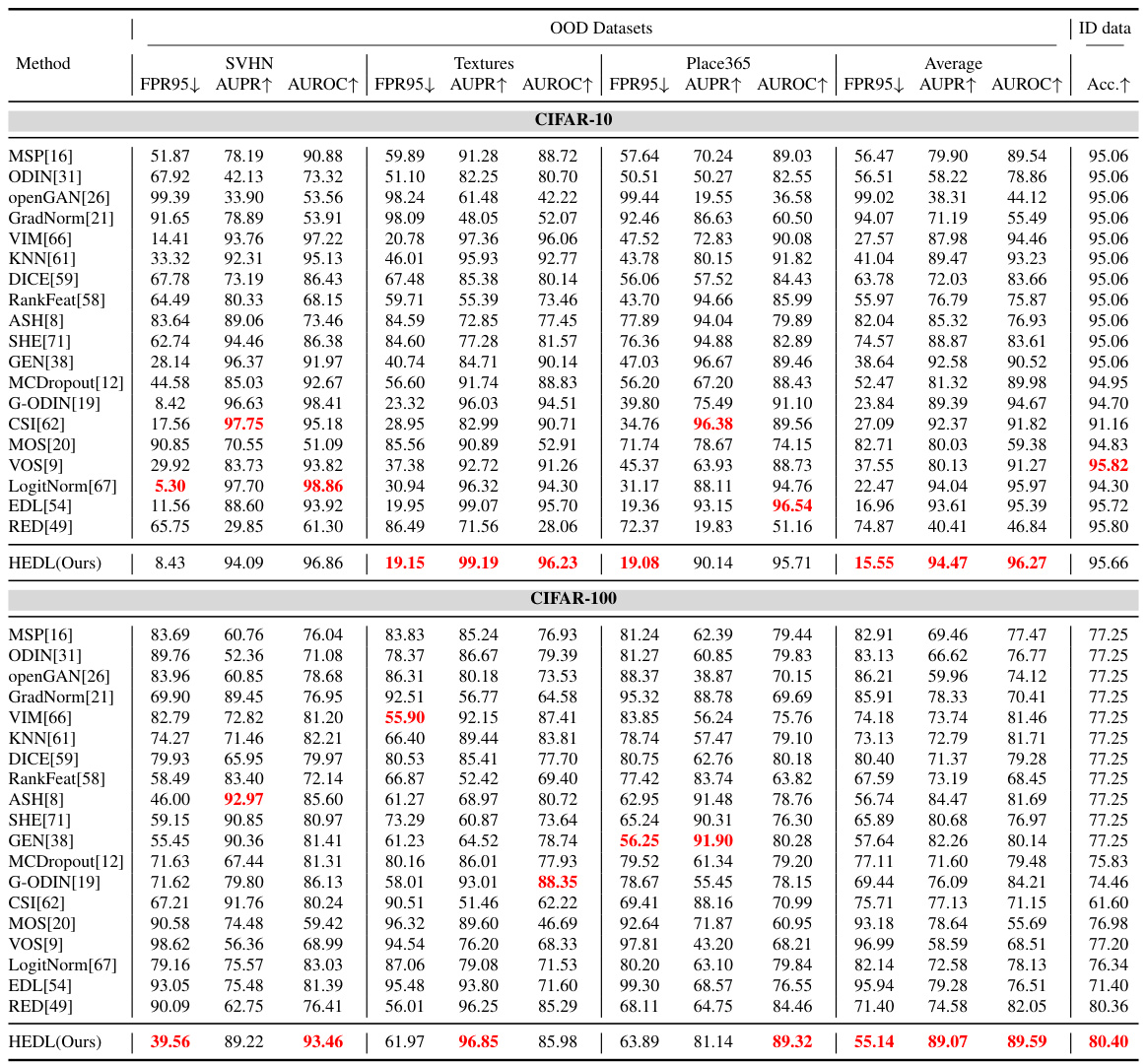

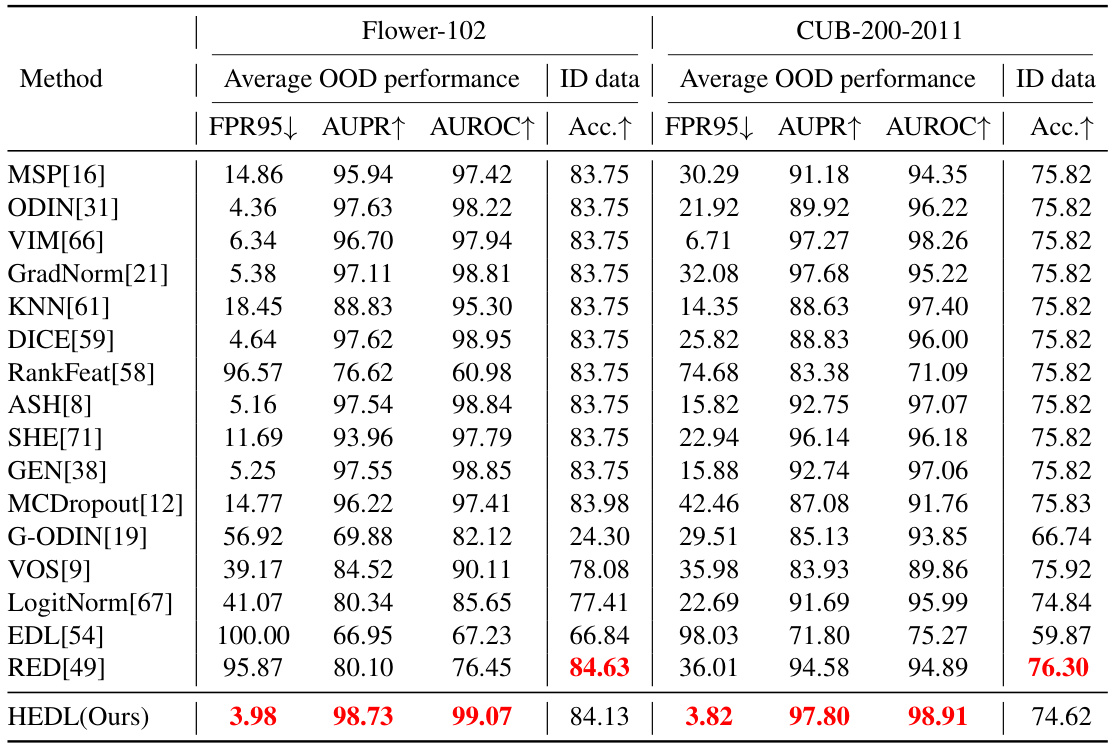

This table presents a comparison of the out-of-distribution (OOD) detection performance of the proposed Hyper-opinion Evidential Deep Learning (HEDL) method against several other baseline methods. The comparison uses CIFAR-10 and CIFAR-100 datasets as in-distribution (ID) datasets and evaluates performance using three different OOD datasets (SVHN, Textures, and Place365). The metrics used for evaluation are FPR95 (False Positive Rate at 95% True Positive Rate), AUPR (Area Under the Precision-Recall curve), AUROC (Area Under the Receiver Operating Characteristic curve), and the classification accuracy (Acc). Higher values for AUPR and AUROC indicate better performance, while a lower value for FPR95 indicates better performance. The bold numbers indicate that HEDL outperforms other methods.

In-depth insights#

Hyper-opinion EDL#

Hyper-opinion Evidential Deep Learning (HEDL) presents a novel approach to enhance out-of-distribution (OOD) detection by integrating both sharp and vague evidence within an extended evidential deep learning (EDL) framework. Traditional EDL focuses solely on sharp evidence supporting single categories, limiting its ability to capture the uncertainty inherent in ambiguous data. HEDL addresses this by incorporating hyper-opinions, which represent opinions across the powerset of categories, explicitly including evidence supporting multiple categories simultaneously (vague evidence). This holistic approach improves uncertainty estimation, leading to better OOD detection performance. The introduction of hyper-opinions is further complemented by an opinion projection mechanism that efficiently maps hyper-opinions to multinomial opinions used in EDL’s optimization process, mitigating issues like vanishing gradients often associated with traditional EDL. The combination of hyper-opinions and efficient projection yields a robust and accurate uncertainty estimation, resulting in a superior OOD detection method compared to existing techniques. The paper’s experimental results corroborate these claims, demonstrating HEDL’s enhanced performance across various datasets.

Opinion Projection#

The concept of ‘Opinion Projection’ in this context appears crucial for bridging the gap between a hyper-opinion model and a traditional multinomial opinion framework. The core idea seems to be a mechanism that translates the richer, more nuanced representation of hyper-opinions into the simpler, more readily usable format of multinomial opinions. This translation is not a mere simplification, but rather a careful transformation that preserves essential information. It is likely designed to maintain the distinction between sharp and vague evidence, which are key to the proposed HEDL approach. A successful opinion projection would allow for the effective integration of hyper-opinions into existing evidential deep learning (EDL) architectures, enabling the use of the powerful EDL framework while benefiting from the enhanced representational capabilities of hyper-opinions. The method’s effectiveness likely depends heavily on its ability to prevent information loss during the projection, especially concerning uncertainty quantification. This is essential for robust out-of-distribution detection, a key goal of the research. Therefore, this projection process is not merely a technical detail, but a central element connecting the theoretical innovation of hyper-opinions to a practical implementation within the EDL framework. The effectiveness of the overall method strongly depends on how well this crucial step is implemented.

OOD Detection#

The research paper delves into the critical area of Out-of-Distribution (OOD) detection, a significant challenge in deep learning where models trained on one distribution encounter data from a different distribution. The core problem lies in model overconfidence leading to inaccurate predictions. The paper introduces a novel approach, Hyper-opinion Evidential Deep Learning (HEDL), designed to address the limitations of existing methods, notably the inability to effectively leverage vague evidence and the vanishing gradient problem. HEDL’s key innovation involves a hyper-opinion framework that integrates sharp and vague evidence, offering a more comprehensive understanding of uncertainty. The paper demonstrates how HEDL outperforms existing OOD detection methods across various benchmark datasets, highlighting its robustness and accuracy. A crucial aspect of the study is the mitigation of the vanishing gradient problem through a novel opinion projection mechanism that improves accuracy. Furthermore, the research demonstrates how HEDL achieves superior OOD detection performance while maintaining ID classification accuracy, offering a holistic solution to this important challenge.

Gradient Analysis#

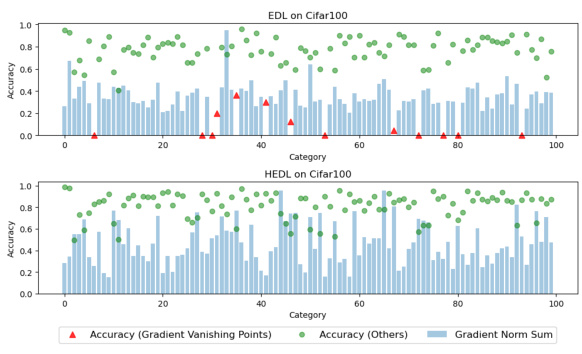

The gradient analysis section in this research paper is crucial for understanding the effectiveness and stability of the proposed Hyper-opinion Evidential Deep Learning (HEDL) model, especially in comparison to traditional Evidential Deep Learning (EDL). The key finding is that HEDL mitigates the vanishing gradient problem, a significant weakness in EDL, particularly when dealing with a large number of categories. This is achieved through a novel opinion projection mechanism. The analysis likely involves comparing the magnitude of gradients for each layer during the training process for both HEDL and EDL. Visualizations, such as plots of gradient norms over training epochs, are probably included to highlight the differences. A key aspect of the analysis would be to demonstrate how HEDL’s modified architecture and opinion projection prevent gradients from vanishing, ensuring that all parameters in the network are updated effectively, leading to improved model accuracy and reliable uncertainty estimation. The absence of vanishing gradients in HEDL correlates directly with improved classification accuracy, supporting a key claim of the research. The results likely emphasize how HEDL’s superior gradient flow leads to more effective training and ultimately better performance in out-of-distribution (OOD) detection.

Ablation Study#

An ablation study systematically removes components of a model to assess their individual contributions. In this context, it would likely involve removing or disabling parts of the proposed Hyper-opinion Evidential Deep Learning (HEDL) model to understand their effects on OOD detection performance. Key aspects investigated would likely include the hyper-opinion framework, the opinion projection mechanism, and the integration of both sharp and vague evidence. Removing the hyper-opinion module would show if the model’s improved OOD capabilities are solely dependent on this module or if other elements play a significant role. Similarly, disabling the opinion projection would illuminate its importance in translating hyper-opinions to the EDL framework effectively. Finally, the analysis of using only sharp or vague evidence would reveal the individual contribution of each type of evidence in enhancing OOD detection. The results would provide crucial insights into the strengths and weaknesses of each module of the HEDL architecture, ultimately confirming its effectiveness and justifying design choices.

More visual insights#

More on figures

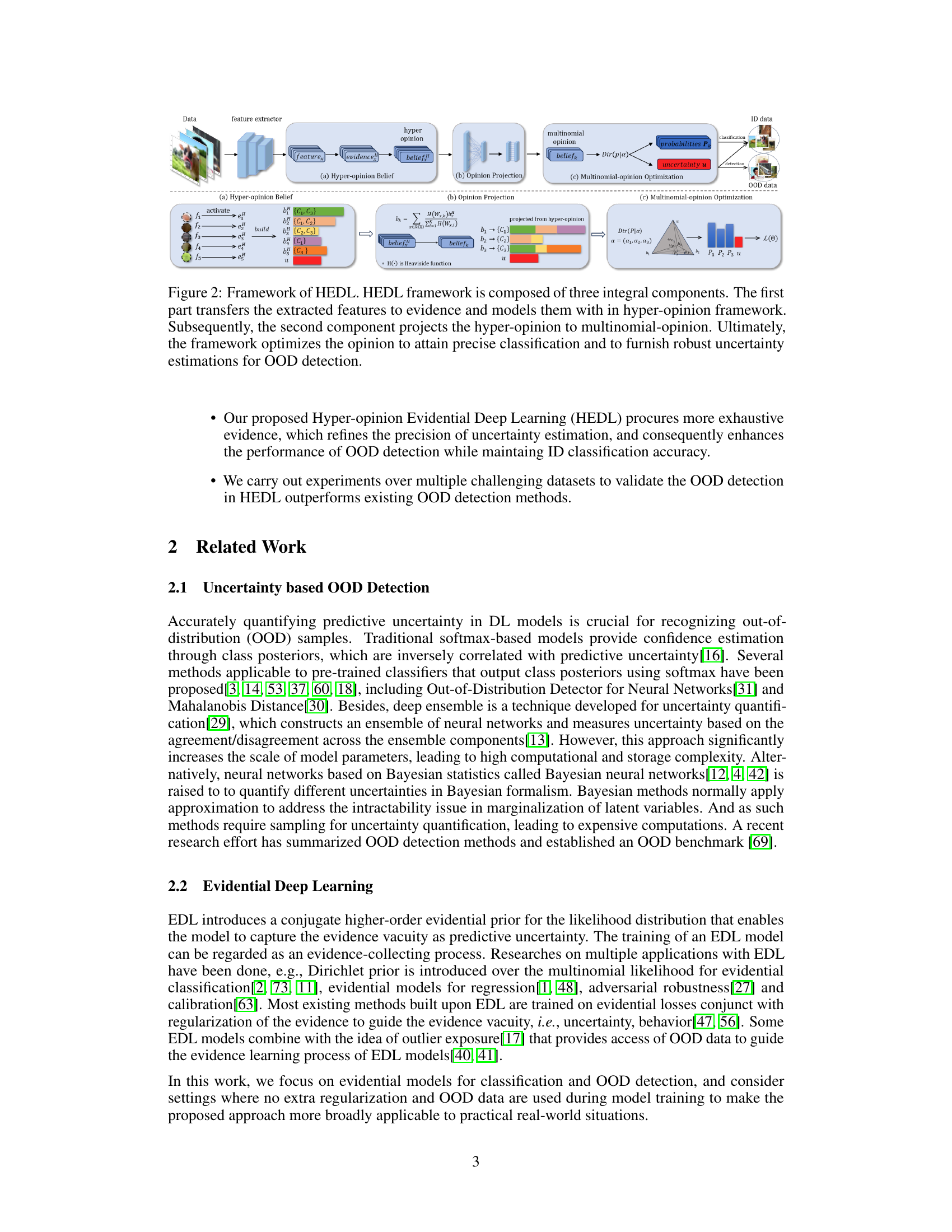

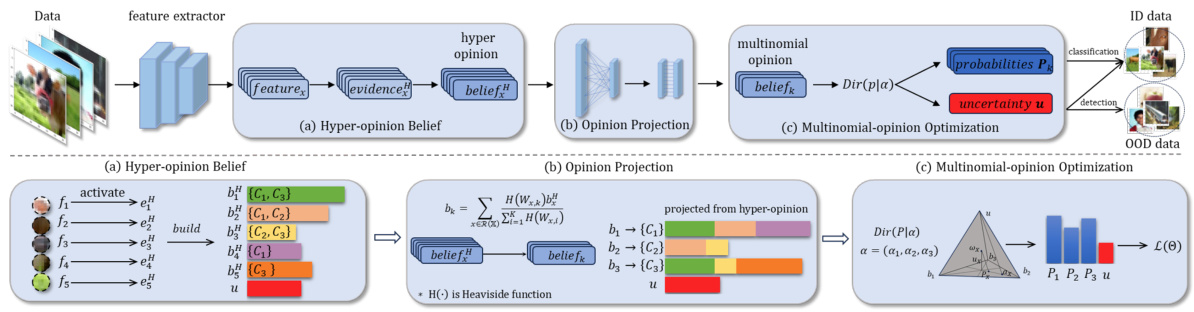

This figure illustrates the framework of the Hyper-opinion Evidential Deep Learning (HEDL) method. It consists of three main components: (a) Hyper-opinion Belief, which models evidence using a hyper-opinion framework; (b) Opinion Projection, which projects the hyper-opinion into a multinomial-opinion; and (c) Multinomial-opinion Optimization, which optimizes the multinomial-opinion to achieve precise classification and robust uncertainty estimation for out-of-distribution (OOD) detection. Each component is visually represented with diagrams and mathematical formulas to show how features are processed and evidence is integrated.

This figure compares the sum of gradient norms in the fully connected layer of EDL and HEDL models during training on the CIFAR-100 dataset. It shows that EDL suffers from the vanishing gradient problem, with some categories having zero gradient norms throughout training, which correlates with lower accuracy for those categories. In contrast, HEDL avoids the vanishing gradient problem, maintaining non-zero gradient norms and higher accuracy for all categories.

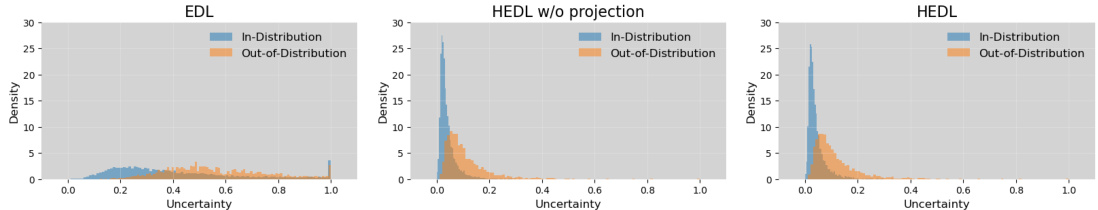

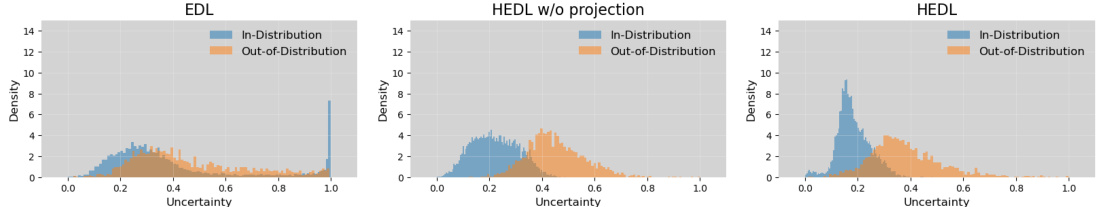

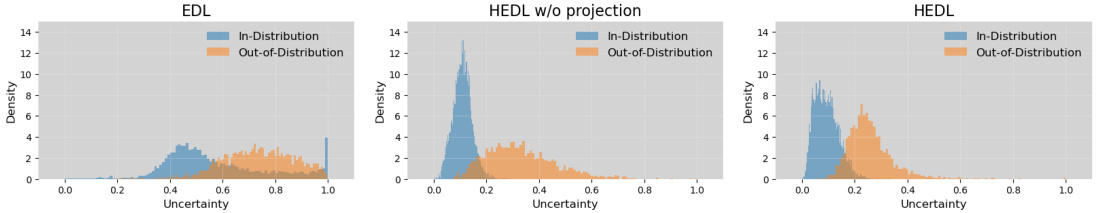

This figure visualizes the uncertainty distributions of in-distribution (ID) and out-of-distribution (OOD) samples across four different datasets (CIFAR-10, CIFAR-100, Flower-102, and CUB-200-2011). Three models are compared: EDL (Evidential Deep Learning), HEDL without projection, and HEDL (Hyper-opinion Evidential Deep Learning). The overlap between the ID and OOD uncertainty distributions illustrates the performance of each model in distinguishing between the two types of samples. HEDL shows a better separation between ID and OOD, indicating improved OOD detection capability compared to the other two.

This figure compares the uncertainty distributions of in-distribution (ID) and out-of-distribution (OOD) samples for three different methods: EDL, HEDL without projection, and HEDL. Across four datasets (CIFAR-10, CIFAR-100, Flower-102, and CUB-200-2011), the figure shows the uncertainty scores generated by each method. The overlap between ID and OOD distributions indicates the difficulty of distinguishing between them. The results show that HEDL achieves better separation between ID and OOD samples compared to the other two methods, indicating improved OOD detection performance.

This figure compares the uncertainty distribution of in-distribution (ID) and out-of-distribution (OOD) samples for three different methods: EDL (Evidential Deep Learning), HEDL without opinion projection, and HEDL (Hyper-opinion Evidential Deep Learning). Across four datasets (CIFAR-10, CIFAR-100, Flower-102, CUB-200-2011), the plots show the density of uncertainty scores. The goal is to visualize how well each method separates ID and OOD samples based on their uncertainty. The overlap between the distributions indicates the difficulty of distinguishing between ID and OOD data. The figure demonstrates that HEDL achieves the best separation, indicating improved OOD detection performance.

The figure shows the uncertainty distributions of in-distribution (ID) and out-of-distribution (OOD) samples across four datasets (CIFAR-10, CIFAR-100, Flower-102, and CUB-200-2011). Three methods are compared: EDL (Evidential Deep Learning), HEDL without opinion projection, and the full HEDL model. The distributions reveal the effectiveness of the HEDL method in separating ID and OOD samples, particularly when dealing with more complex datasets (Flower-102 and CUB-200-2011) that contain more vague evidence.

This figure shows a comparison of how EDL and HEDL classify an image. EDL, relying on sharp evidence, produces an incorrect classification with high uncertainty. HEDL, by incorporating vague evidence, provides a correct classification with lower uncertainty, demonstrating its improved accuracy and reliability in handling ambiguous data.

More on tables

This table compares the out-of-distribution (OOD) detection performance of the proposed Hyper-opinion Evidential Deep Learning (HEDL) method against several other baseline methods. The evaluation is performed using CIFAR-10 and CIFAR-100 datasets as in-distribution (ID) data, and three other common OOD benchmark datasets (SVHN, Textures, Places365) as out-of-distribution data. The metrics used for comparison include the False Positive Rate at 95% True Positive Rate (FPR95), Area Under the Precision-Recall curve (AUPR), Area Under the Receiver Operating Characteristic curve (AUROC), and the accuracy of in-distribution classification. Higher AUPR and AUROC values, and lower FPR95 values indicate better performance. The table highlights HEDL’s superior performance across all metrics.

This table compares the out-of-distribution (OOD) detection performance of the proposed Hyper-opinion Evidential Deep Learning (HEDL) method against several other baseline methods. The evaluation is performed using CIFAR-10 and CIFAR-100 as in-distribution (ID) datasets, and three common OOD benchmark datasets (SVHN, Textures, Places365). The metrics used for comparison include FPR95 (False Positive Rate at 95% True Positive Rate), AUPR (Area Under the Precision-Recall curve), AUROC (Area Under the Receiver Operating Characteristic curve), and accuracy (classification accuracy on ID samples). Higher AUPR and AUROC values are better, while lower FPR95 values indicate better performance. The bold values highlight the superior performance achieved by HEDL.

This table compares the out-of-distribution (OOD) detection performance of the proposed Hyper-opinion Evidential Deep Learning (HEDL) method with several other baseline methods on the CIFAR-10 and CIFAR-100 datasets. The performance is measured using three metrics: FPR95 (False Positive Rate at 95% True Positive Rate), AUPR (Area Under the Precision-Recall curve), and AUROC (Area Under the Receiver Operating Characteristic curve). Lower FPR95 values are better, while higher AUPR and AUROC values are better. The table also shows the accuracy of ID (In-Distribution) classification for each method. The results are presented as percentages. The best results for each metric are shown in bold.

Full paper#