TL;DR#

Class-incremental learning (CIL) faces two major challenges: catastrophic forgetting (CF) and task confusion (TC). CF refers to the model’s inability to remember previously learned classes after learning new ones, while TC describes the difficulty of distinguishing between classes from different tasks when task IDs are unavailable. Existing CIL research often conflates CF and TC, hindering a proper understanding of their individual effects. This paper is focused on tackling this issue.

This paper proposes a novel mathematical framework for CIL and introduces two groundbreaking theorems: the Infeasibility Theorem and the Feasibility Theorem. The Infeasibility Theorem demonstrates that achieving optimal CIL with discriminative models is inherently impossible due to TC, even if CF is completely avoided. Conversely, the Feasibility Theorem shows that generative models can solve TC and thus achieve optimal CIL. The paper analyzes popular CIL strategies like regularization, bias-correction, replay, and generative classifiers under this framework. Findings show that generative modeling, either for generative replay or direct classification, is crucial for optimal CIL.

Key Takeaways#

Why does it matter?#

This paper is crucial because it offers a novel mathematical framework to differentiate between task confusion and catastrophic forgetting in class-incremental learning. This directly addresses a significant limitation in current research by providing a theoretical understanding of a key challenge that has previously lacked such analysis. The findings have significant implications for the design and evaluation of future class-incremental learning models, suggesting that generative models are essential for optimal performance. The paper opens new research avenues into task-free settings and explores different class-IL strategies through this new framework.

Visual Insights#

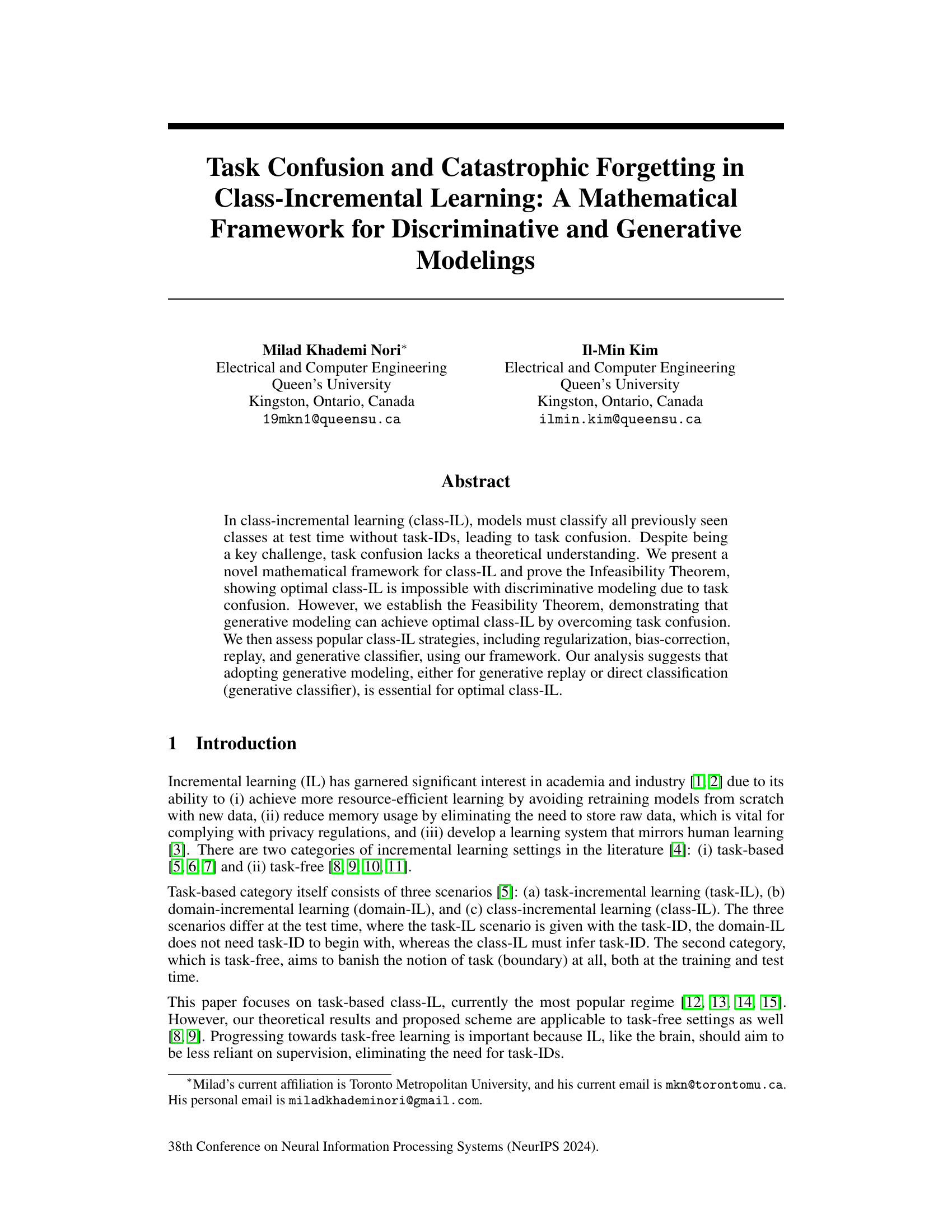

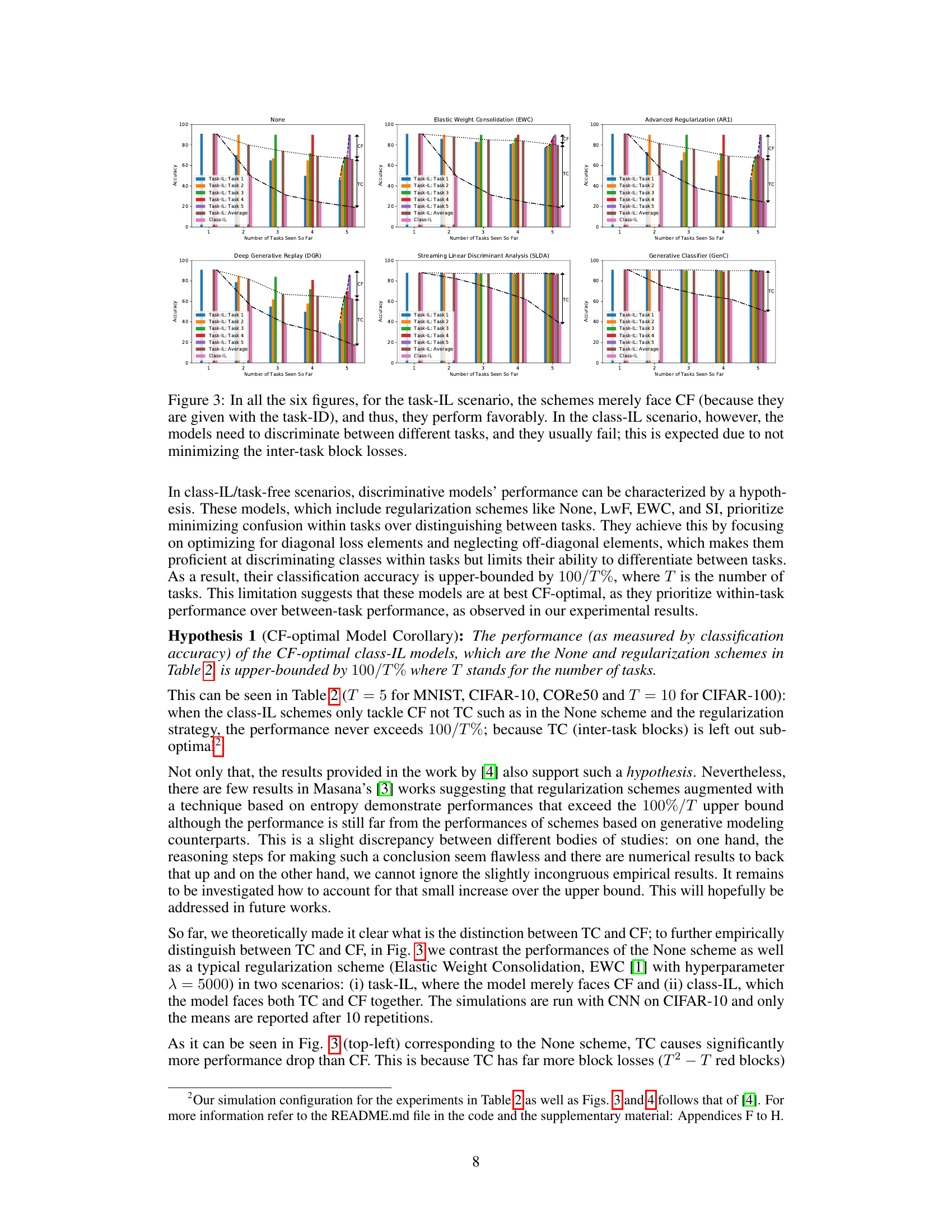

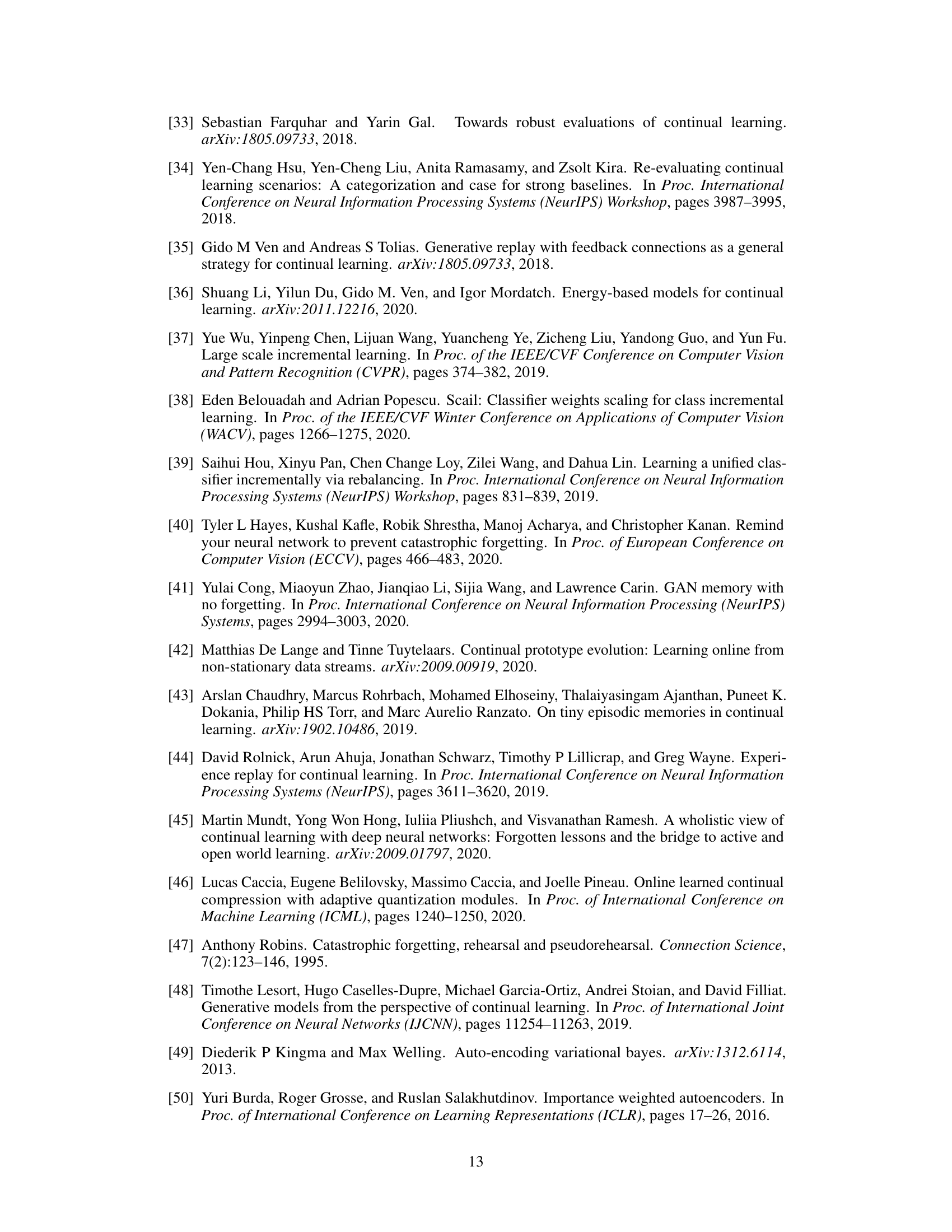

🔼 This figure illustrates the difference between discriminative and generative modeling in handling task confusion in class-incremental learning. In discriminative modeling (a-c), the model learns tasks separately and fails to discriminate between classes from different tasks at test time, even if it remembers individual classes (dotted line in (c)). Generative modeling (d-f), on the other hand, learns a joint representation that allows for successful discrimination between all classes. Thus, the figure visually demonstrates the core argument of the paper that generative models overcome task confusion, while discriminative models do not.

read the caption

Figure 1: Task Confusion in Discriminative and Generative Modeling.

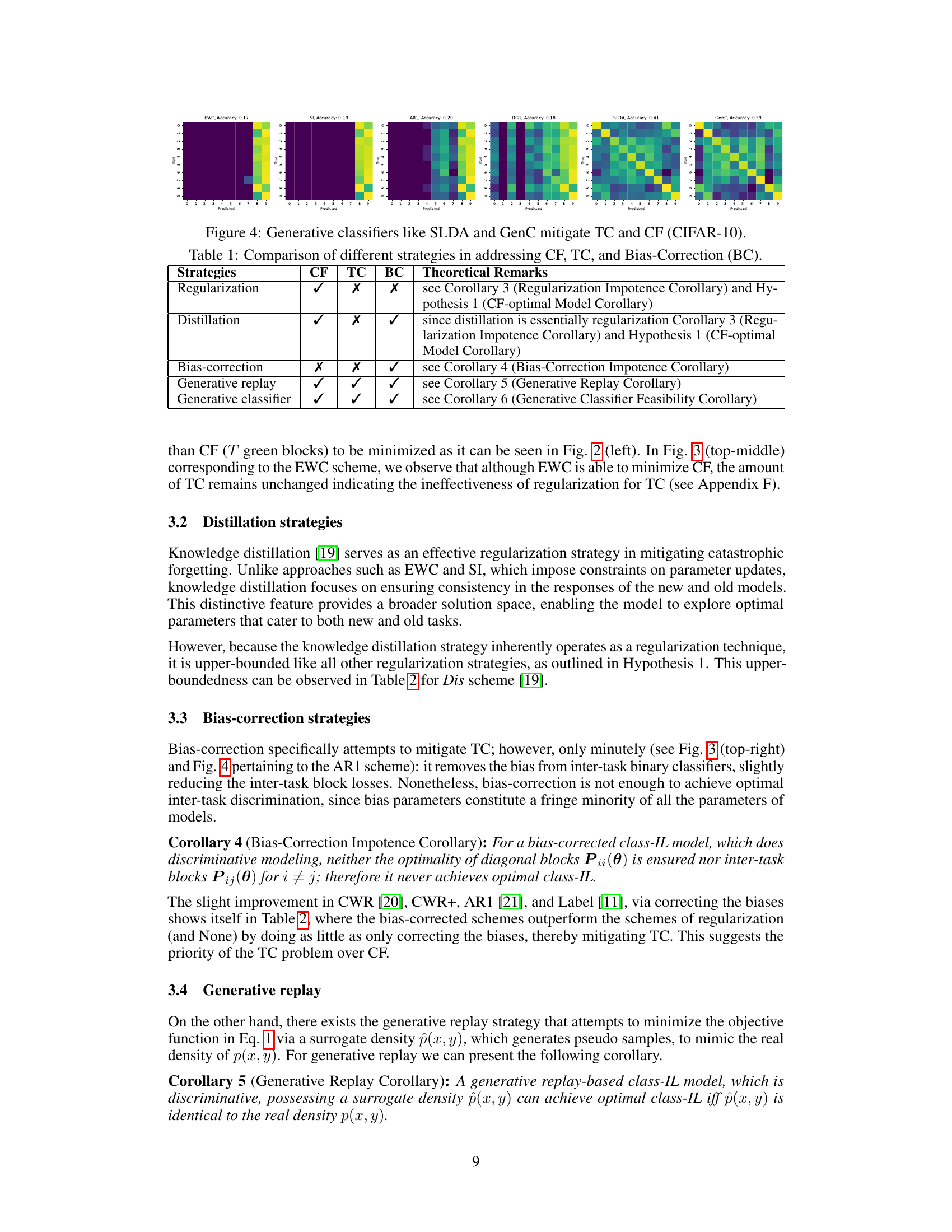

🔼 This table summarizes the theoretical analysis of various class-incremental learning strategies. It shows whether each strategy effectively mitigates catastrophic forgetting (CF), task confusion (TC), and bias correction (BC). The ‘Theoretical Remarks’ column references specific corollaries and hypotheses from the paper that support the analysis for each strategy.

read the caption

Table 1: Comparison of different strategies in addressing CF, TC, and Bias-Correction (BC).

In-depth insights#

Catastrophic Forgetting#

Catastrophic forgetting, a significant challenge in incremental learning, describes the phenomenon where a neural network trained on a new task forgets previously learned information. This paper delves into the nuances of catastrophic forgetting, particularly in the context of class-incremental learning, differentiating it from task confusion. The authors argue that in class-incremental learning, performance degradation is often primarily attributed to task confusion, where the model struggles to distinguish between classes from different tasks. This contrasts with traditional views that attribute all performance loss to forgetting. The study proposes a novel mathematical framework that helps formalize this distinction, further highlighting the limitations of discriminative models in overcoming task confusion. The framework suggests that generative modeling offers a more promising approach by overcoming task confusion and enabling optimal performance in class-incremental learning.

Task Confusion#

The concept of “Task Confusion” in class-incremental learning (CIL) centers on the model’s difficulty in distinguishing between classes from different tasks when task IDs are absent during testing. This confusion arises because the model never encounters classes from separate tasks together during training, hindering its ability to learn the necessary discriminative features. The authors highlight that this issue significantly impacts performance, often overshadowing the effects of catastrophic forgetting. A key contribution is the mathematical framework developed to formally define and analyze task confusion, demonstrating its unique challenges and providing a foundation for a deeper theoretical understanding of CIL. This framework reveals that task confusion is inherently unavoidable in discriminative models, but potentially surmountable in generative models. The authors further suggest that adopting generative modeling, whether through generative replay or direct generative classification, is a crucial step towards overcoming task confusion and achieving optimal performance in CIL.

Generative Modeling#

Generative modeling offers a compelling alternative to discriminative approaches in class-incremental learning by directly modeling the joint probability distribution of data and labels. This fundamentally addresses the task confusion problem inherent in discriminative models, where the model struggles to discriminate between classes from different tasks it has never seen together. Generative models overcome this limitation by learning the underlying data structure across all tasks simultaneously. This allows for better generalization and avoids the catastrophic forgetting often observed in discriminative methods. The feasibility theorem presented demonstrates the potential of generative models to achieve optimal class-incremental learning by preventing both catastrophic forgetting and task confusion. However, practical implementations, such as generative replay, face challenges in accurately approximating the true data distribution, potentially limiting their effectiveness. Direct classification using generative models, on the other hand, offers a more promising solution by directly leveraging the learned data representation for classification, thereby eliminating the reliance on an auxiliary discriminative step and its associated limitations.

Class-IL Strategies#

The analysis of class-incremental learning (Class-IL) strategies reveals a critical distinction between discriminative and generative approaches. Discriminative methods, encompassing regularization, distillation, and bias-correction, primarily focus on minimizing catastrophic forgetting (CF) within individual tasks. However, they often fail to address task confusion (TC), a significant Class-IL challenge stemming from the inability to discriminate between classes from different tasks seen in isolation during training. In contrast, generative strategies, including generative replay and generative classifiers, offer a more comprehensive solution. By directly modeling the joint probability of data and labels, generative approaches inherently address both TC and CF. Generative classifiers, in particular, demonstrate superior performance by overcoming the limitations of discriminative models, highlighting the importance of generative modeling for effective Class-IL.

Future Directions#

Future research could explore several avenues. Firstly, extending the mathematical framework to encompass more complex scenarios like task-free incremental learning or handling concept drift would provide deeper theoretical insights. Secondly, the framework’s applicability needs testing on a wider range of datasets and network architectures to assess its generalizability and robustness. Thirdly, developing new class-IL strategies directly inspired by the theoretical findings, particularly those focused on generative modeling and efficient inter-task discrimination, could be valuable. Finally, investigating the interplay between task confusion and other phenomena like catastrophic forgetting, and how different class-IL techniques address these issues, warrants further study. These future directions hold promise for advancing the understanding and performance of class-incremental learning significantly.

More visual insights#

More on figures

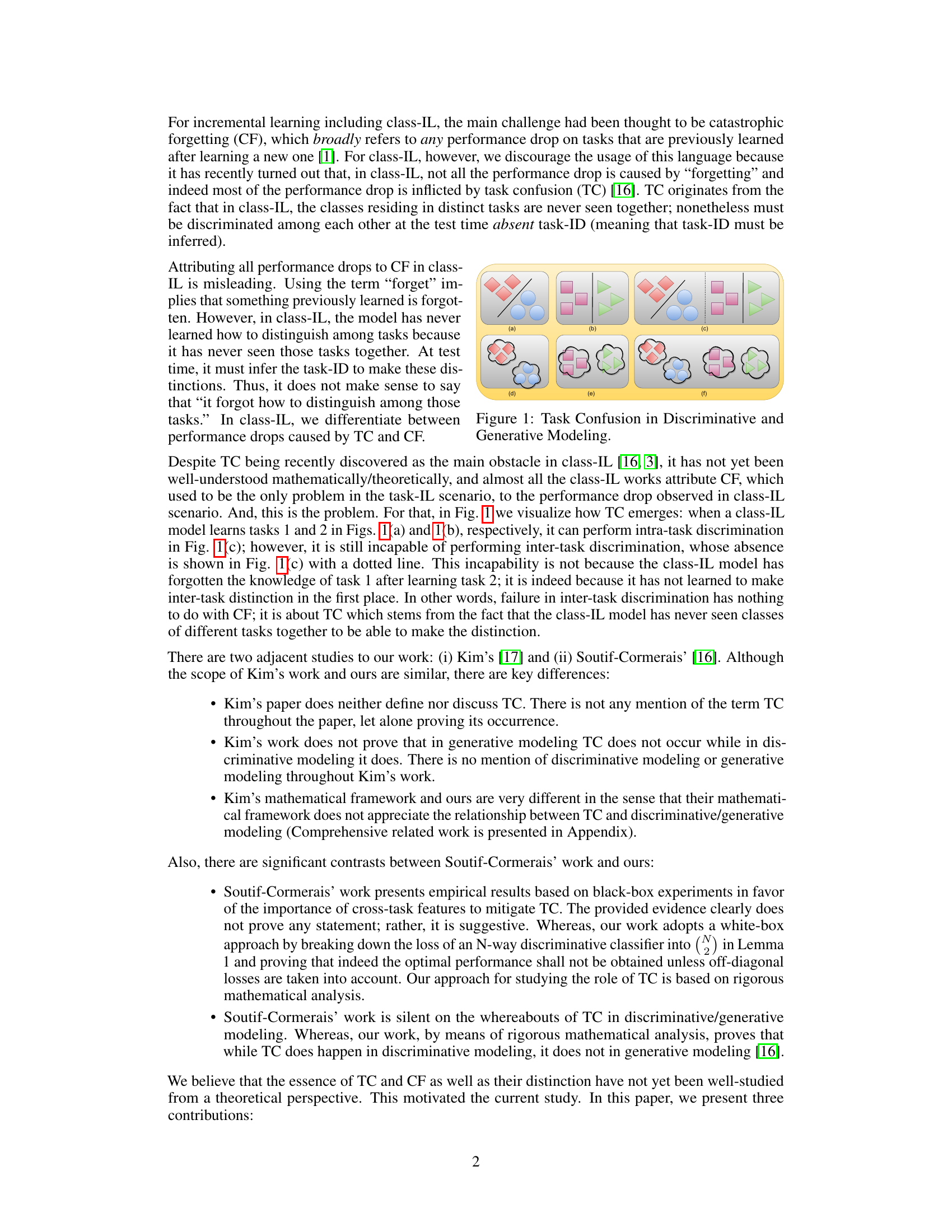

🔼 This figure visually represents task confusion (TC) in both discriminative and generative modeling within the context of class-incremental learning. In the discriminative model (left), the diagonal blocks represent intra-task discrimination (within the same task), while the off-diagonal blocks represent inter-task discrimination (between different tasks). The discriminative model shows a high degree of task confusion (red blocks) because it cannot discriminate between tasks easily, while also suffering from catastrophic forgetting (lighter green). The generative model (right), on the other hand, demonstrates significantly less task confusion, as evidenced by the largely empty inter-task blocks. This is because generative modeling naturally avoids TC.

read the caption

Figure 2: Task Confusion in Discriminative and Generative Modeling.

🔼 This figure illustrates the difference between task confusion (TC) in discriminative and generative modeling in class incremental learning. The left side shows discriminative modeling, where sequentially training on tasks leads to forgetting (lighter green) and an inability to discriminate between tasks (red). This is due to the focus on minimizing intra-task losses only. Generative modeling, on the right side, avoids this issue because it minimizes both intra and inter-task losses simultaneously.

read the caption

Figure 2: Task Confusion in Discriminative and Generative Modeling.

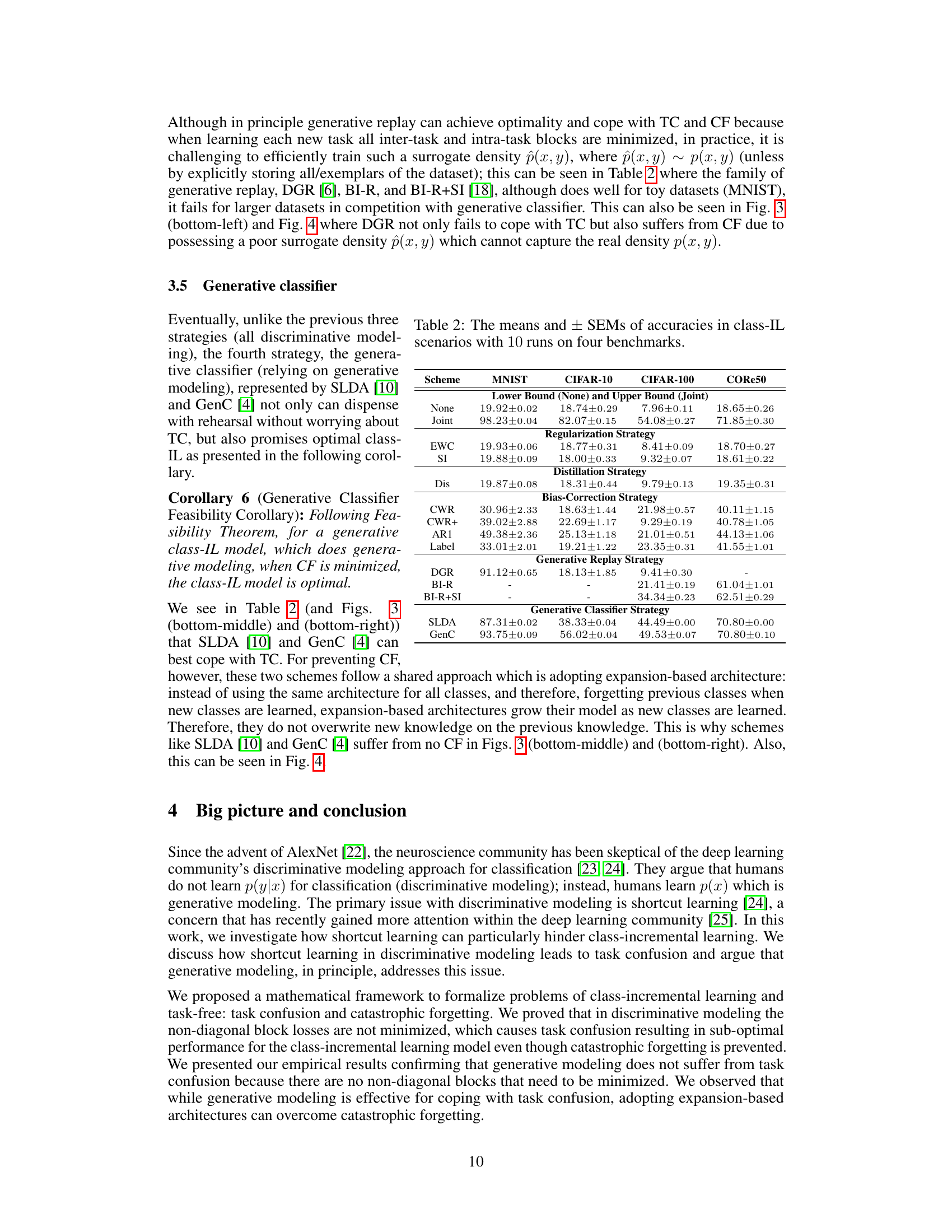

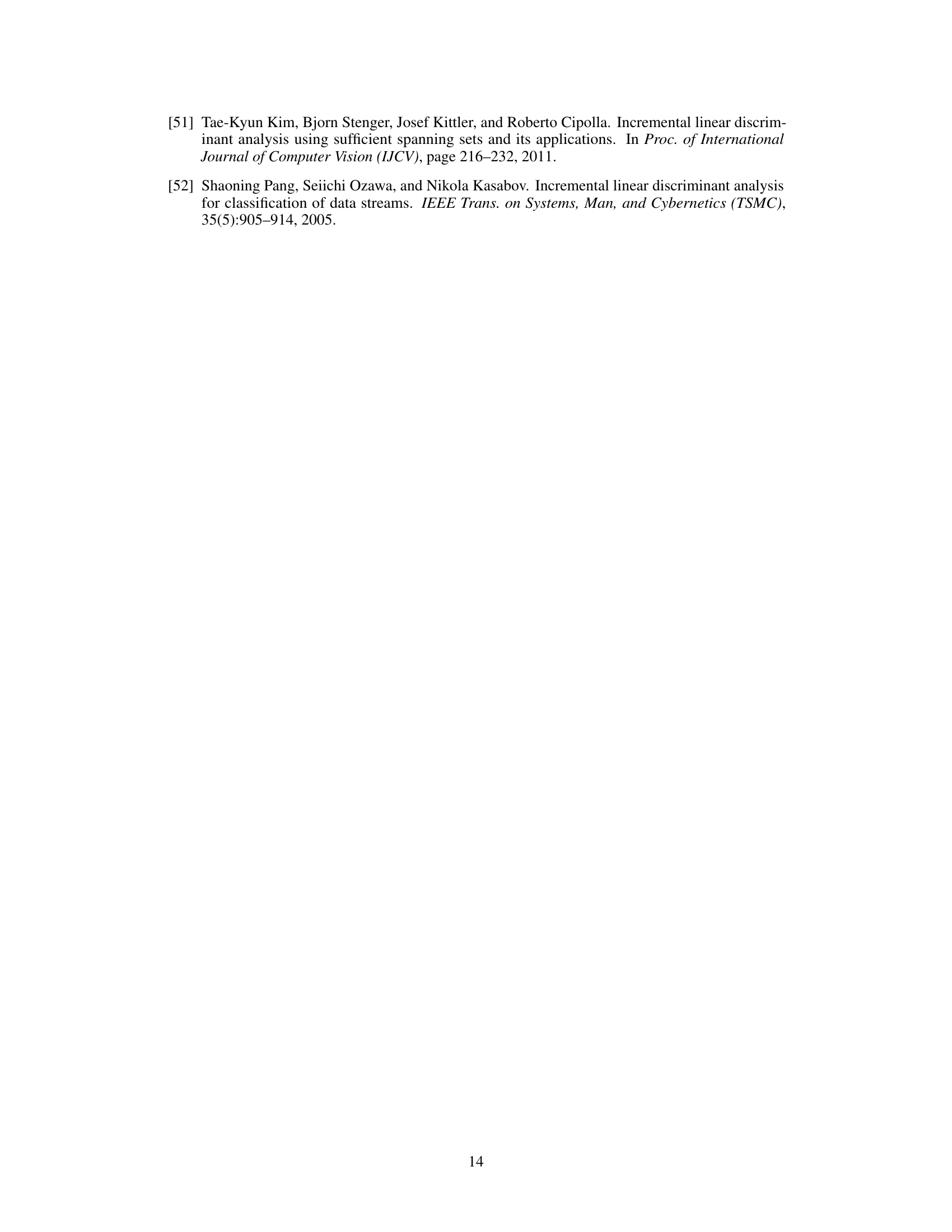

🔼 This figure displays confusion matrices for six different class-incremental learning strategies on the CIFAR-10 dataset. Each matrix visualizes the performance of a classifier, showing the relationship between true and predicted classes. The strategies are EWC, SI, AR1 (all discriminative methods), DGR (generative replay), SLDA, and GenC (both generative classifiers). The color intensity represents the number of correctly classified samples for each class pair, with darker colors indicating more correct classifications. The figure demonstrates the superior performance of the generative classifiers (SLDA, GenC) compared to discriminative methods in mitigating task confusion (TC) and catastrophic forgetting (CF). GenC achieves the highest accuracy (0.59), significantly outperforming the other methods.

read the caption

Figure 4: Generative classifiers like SLDA and GenC mitigate TC and CF (CIFAR-10).

🔼 The figure displays the performance of the Synaptic Intelligence (SI) model for both task-IL and class-IL scenarios. In task-IL, where task IDs are provided, SI demonstrates a considerable mitigation of catastrophic forgetting (CF), as indicated by a smaller performance drop on previously learned tasks. However, in the class-IL setting (no task IDs), SI is largely ineffective at mitigating task confusion (TC), shown by a substantial decline in accuracy as new tasks are introduced. This highlights that while SI reduces forgetting in the setting where task boundaries are known, it fails to address the more significant problem of task confusion when tasks are presented incrementally without task IDs.

read the caption

Figure F.1: In this figure, Synaptic Intelligence (SI) [2] with λ = 1 is adopted. It is clear that SI is almost effective at mitigating CF; however, ineffective for TC.

Full paper#