↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Current methods for enhancing certified robustness using diffusion models struggle to balance efficiency and effectiveness. While some methods are efficient, they don’t always generate purified images that retain the semantic meaning of the originals. Others are effective but computationally expensive. This creates a trade-off that limits the practical application of these techniques.

This work introduces Consistency Purification, a novel framework that addresses this limitation. It leverages a consistency model—a one-step generative model—for efficient purification, combined with fine-tuning using the Learned Perceptual Image Patch Similarity (LPIPS) loss to ensure semantic meaning is preserved. Empirical results show that this approach achieves state-of-the-art performance, offering a significant improvement in both efficiency and effectiveness compared to existing methods.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in certified robustness due to its novel Consistency Purification framework. It offers a Pareto superior solution, balancing efficiency and effectiveness, which significantly advances the state-of-the-art. The findings on semantic alignment via LPIPS loss and the theoretical analysis provide valuable insights for future work in this area, opening up new research avenues in diffusion model applications for security.

Visual Insights#

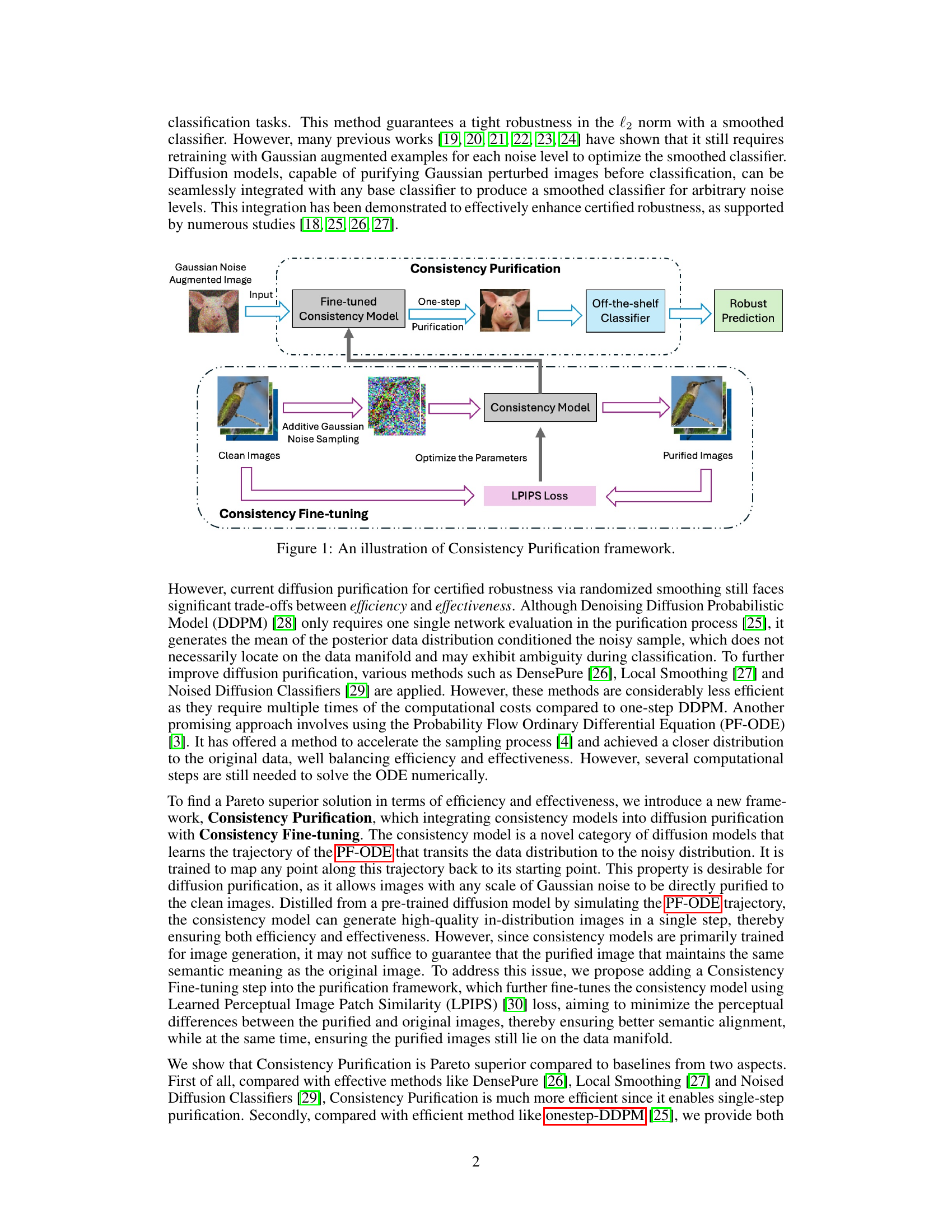

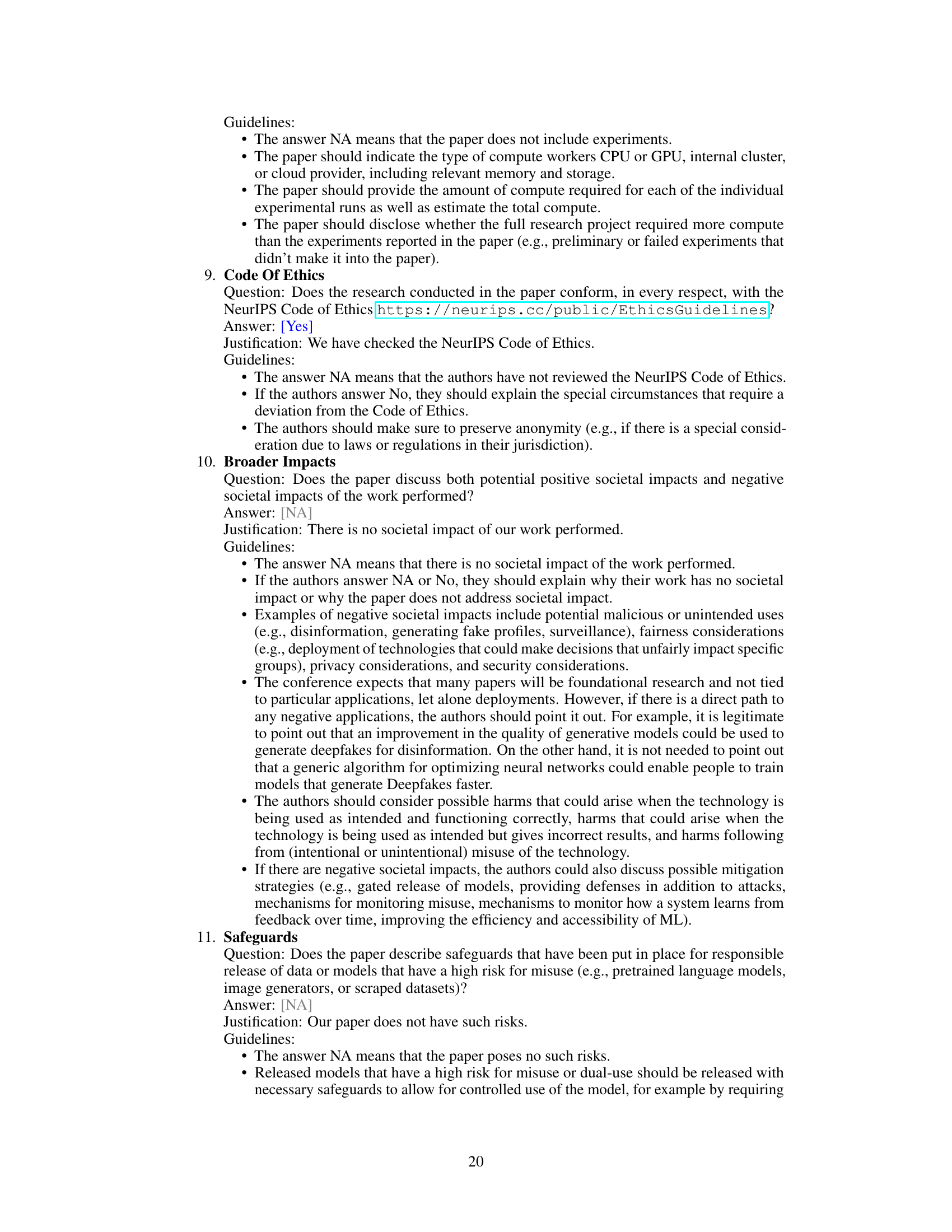

The figure illustrates the Consistency Purification framework. It shows how a Gaussian noise augmented image is purified using a fine-tuned consistency model. This purified image is then passed to an off-the-shelf classifier for robust prediction. The framework also includes a Consistency Fine-tuning step using LPIPS loss to optimize the parameters of the consistency model for better semantic alignment between purified and original images. The process is depicted with both a pig and a hummingbird as example images.

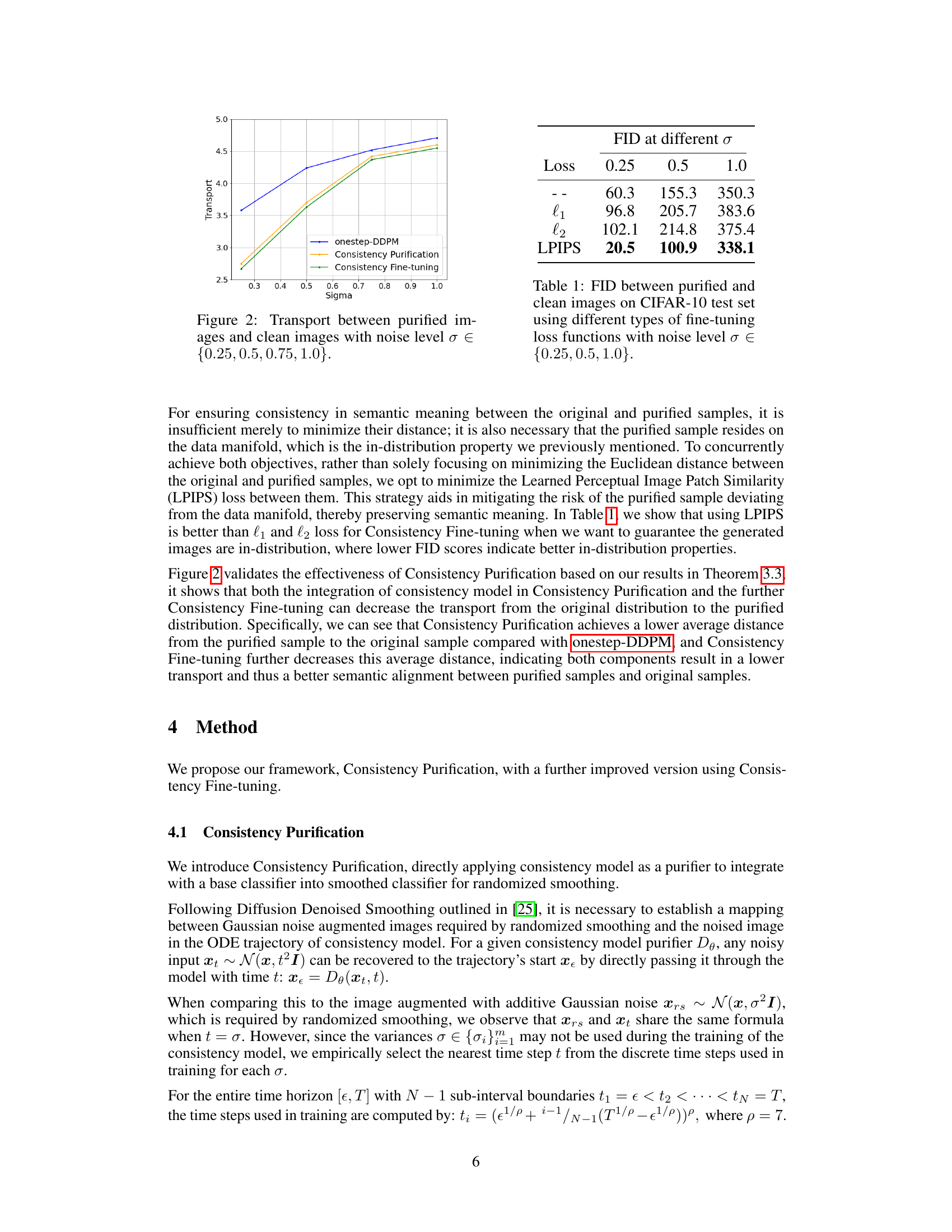

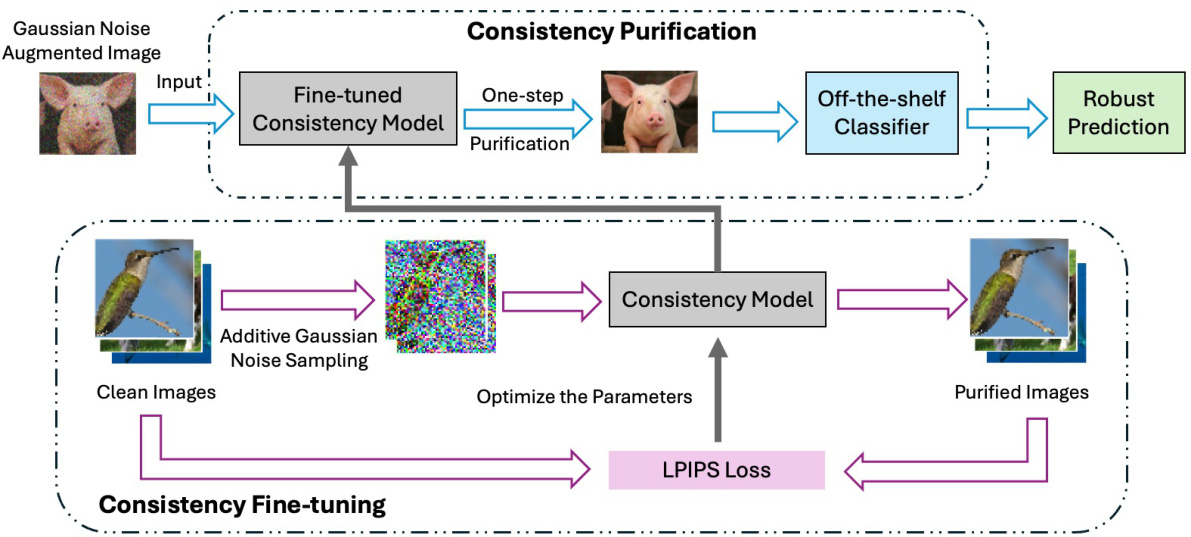

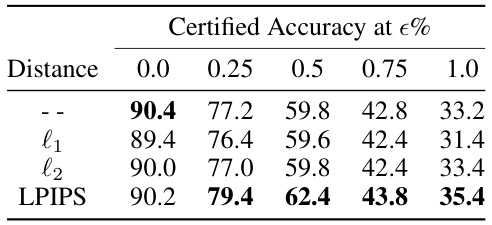

This table shows the Fréchet Inception Distance (FID) scores between purified images and clean images on the CIFAR-10 test set. The FID, a metric evaluating image quality and similarity, is calculated for different noise levels (σ) and using three types of fine-tuning loss functions (l1, l2, and LPIPS) during the training of the Consistency model. Lower FID scores indicate better image quality and a higher degree of similarity between purified and original images. The table helps demonstrate the effectiveness of the LPIPS loss function in preserving semantic information and generating better purified images than l1 or l2 loss.

In-depth insights#

Consistency Purification#

The concept of “Consistency Purification” in the context of enhancing certified robustness of AI models is quite intriguing. It addresses the limitations of existing diffusion-based purification methods by aiming for both efficiency and effectiveness. The key innovation lies in leveraging “consistency models,” which are one-step generative models trained to map noisy images back to their clean counterparts. This contrasts with multi-step approaches that solve stochastic differential equations, significantly improving efficiency. However, the inherent limitation of consistency models, not being specifically designed for purification, is addressed through Consistency Fine-tuning with LPIPS loss. This ensures the purified images not only reside on the data manifold but also maintain semantic alignment with the originals, leading to improved robustness. The proposed framework achieves state-of-the-art results by striking a balance between effectiveness and efficiency, demonstrating the efficacy of its approach in certified robustness.

Certified Robustness#

The concept of “Certified Robustness” in the context of this research paper centers on developing methods to enhance the reliability and resilience of machine learning models, specifically against adversarial attacks or noisy inputs. The core aim is to mathematically guarantee a level of robustness, unlike traditional methods that rely on empirical evaluations. This certification is achieved by providing a rigorous proof that the model’s performance will remain within acceptable bounds, even when faced with perturbations. The paper likely explores techniques like randomized smoothing, where carefully introduced noise during classification helps certify robustness. Efficiency and effectiveness are crucial, as methods must not only offer strong guarantees but also be computationally feasible for practical applications. The research likely proposes novel algorithms or architectural changes to improve this balance, possibly exploring the use of diffusion models to purify noisy inputs and bring them closer to the data manifold before classification, thereby enhancing certified robustness. The ultimate goal is to develop a more practical approach to certified robustness, surpassing the limitations of existing methods in terms of speed and accuracy.

One-Step Purification#

The concept of ‘One-Step Purification’ in the context of diffusion models for enhancing certified robustness is intriguing. It addresses the efficiency-effectiveness trade-off inherent in existing methods. Multi-step methods like PF-ODE achieve high-quality purification but suffer from computational cost. Single-step methods such as DDPM are fast but may not guarantee purified images reside on the data manifold, potentially leading to misclassifications. One-step purification aims to offer the best of both worlds: fast processing with the assurance of generating semantically meaningful, in-distribution purified images. This is a significant challenge and achieving this depends heavily on the design and training of the underlying generative model and any subsequent fine-tuning steps. The success hinges on creating a model that efficiently maps noisy input to a cleaned version without requiring iterative refinement while maintaining semantic consistency. This requires a deeper analysis of what constitutes a ‘good’ purification model and how to measure this beyond simple distance metrics; perceptual similarity measures may play a crucial role.

LPIPS Fine-tuning#

The proposed LPIPS fine-tuning method is a crucial enhancement to the Consistency Purification framework. It directly addresses the limitation of the consistency model, which, while efficient at generating on-manifold purified images, may not preserve the semantic meaning of the original image. By incorporating the LPIPS loss during fine-tuning, the model learns to minimize the perceptual distance between purified and original images. This ensures a closer semantic alignment while retaining the benefit of one-step purification and on-manifold generation. The effectiveness of this approach is demonstrated empirically through improvements in certified robustness, outperforming baselines in experiments. This targeted fine-tuning proves a powerful technique to improve the quality of diffusion purification, offering a Pareto-superior solution that balances effectiveness and efficiency.

Future Directions#

Future research could explore several promising avenues. Improving the efficiency of consistency models is crucial, potentially through architectural innovations or more efficient training methods. Investigating alternative loss functions beyond LPIPS for consistency fine-tuning could enhance semantic alignment. Extending Consistency Purification to other modalities like audio or video would broaden its applicability. The development of theoretical bounds on the certified robustness achievable with Consistency Purification would provide further insights into its effectiveness. Finally, a deeper investigation into the generalizability of Consistency Purification across different architectures and datasets is needed to assess its wider applicability and robustness.

More visual insights#

More on figures

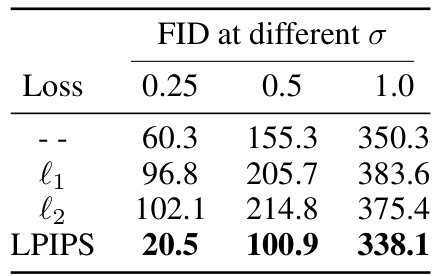

This figure shows the transport (a measure of distance between probability distributions) between purified images generated by different methods (onestep-DDPM, Consistency Purification, and Consistency Fine-tuning) and clean images at various noise levels (sigma). Lower transport indicates better purification, as it implies the purified samples are closer to the original clean images in terms of semantic meaning and are less likely to deviate from the data manifold.

This figure shows the certified accuracy of the Consistency Purification method and the onestep-DDPM baseline at different noise levels (σ) and certified radii (ε) on CIFAR-10 and ImageNet-64 datasets. The lines represent the certified accuracy for various radii under each noise level. The figure demonstrates the superior performance of Consistency Purification over the baseline, achieving higher certified accuracy across various radii and noise levels.

This figure compares the results of purification using onestep-DDPM and Consistency Purification on CIFAR-10 dataset with a noise level of σ = 0.5. The images show that Consistency Purification produces higher-quality purified images, leading to better classification accuracy than onestep-DDPM. Green borders indicate correctly classified images, while red borders indicate misclassified images after purification.

This figure compares the results of image purification using onestep-DDPM and Consistency Purification methods on the CIFAR-10 dataset with a noise level of σ = 0.5. It visually demonstrates the superior quality of images produced by Consistency Purification, which also leads to improved classification accuracy. Each pair of images shows the same noised input image purified by each method, with a green border indicating correct classification and a red border indicating misclassification by the classifier.

This figure compares the results of image purification using onestep-DDPM and Consistency Purification methods on the CIFAR-10 dataset with a noise level of σ = 0.5. Identical noise patterns were applied to the same locations in each image pair. The green border indicates that the classifier correctly identified the purified image, while a red border indicates misclassification.

This figure compares the results of purifying images using onestep-DDPM and Consistency Purification methods on CIFAR-10 dataset with a noise level of σ = 0.5. Identical noise patterns were added to the same locations in each original image. The purified images are shown, with green borders indicating correct classification by a classifier after purification and red borders indicating misclassification. This visual comparison highlights the difference in the quality of purified images produced by each method and their impact on classification accuracy.

More on tables

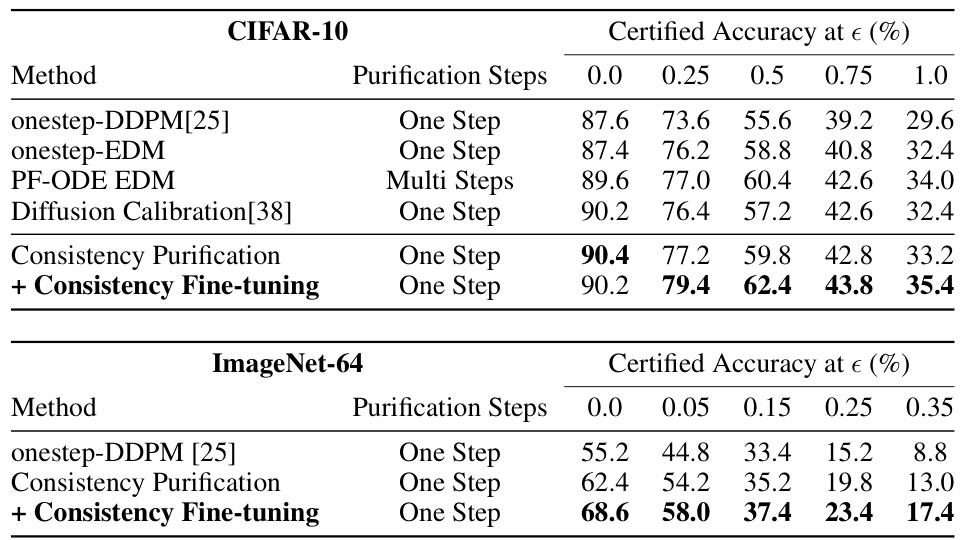

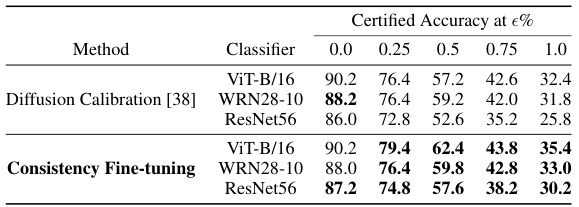

This table presents the certified accuracy results of the Consistency Purification method and several baseline methods on CIFAR-10 and ImageNet-64 datasets. It shows the certified accuracy at different epsilon (є) values (representing the robustness level) and different noise levels (σ). The number of purification steps required by each method is also specified. The results demonstrate the superior performance of the proposed Consistency Purification approach, particularly when combined with Consistency Fine-tuning, compared to existing methods.

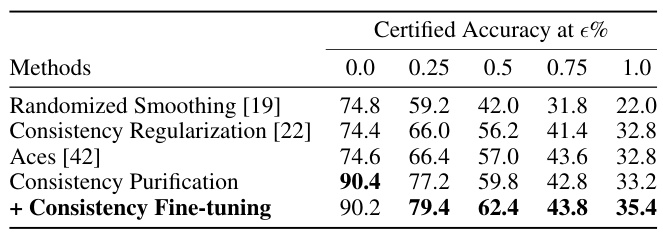

This table compares the certified accuracy of Consistency Purification against three other non-diffusion-based methods for certified robustness: Randomized Smoothing, Consistency Regularization, and Aces. The comparison is made across different certified accuracy thresholds (epsilon). The results show that Consistency Purification significantly outperforms the other methods.

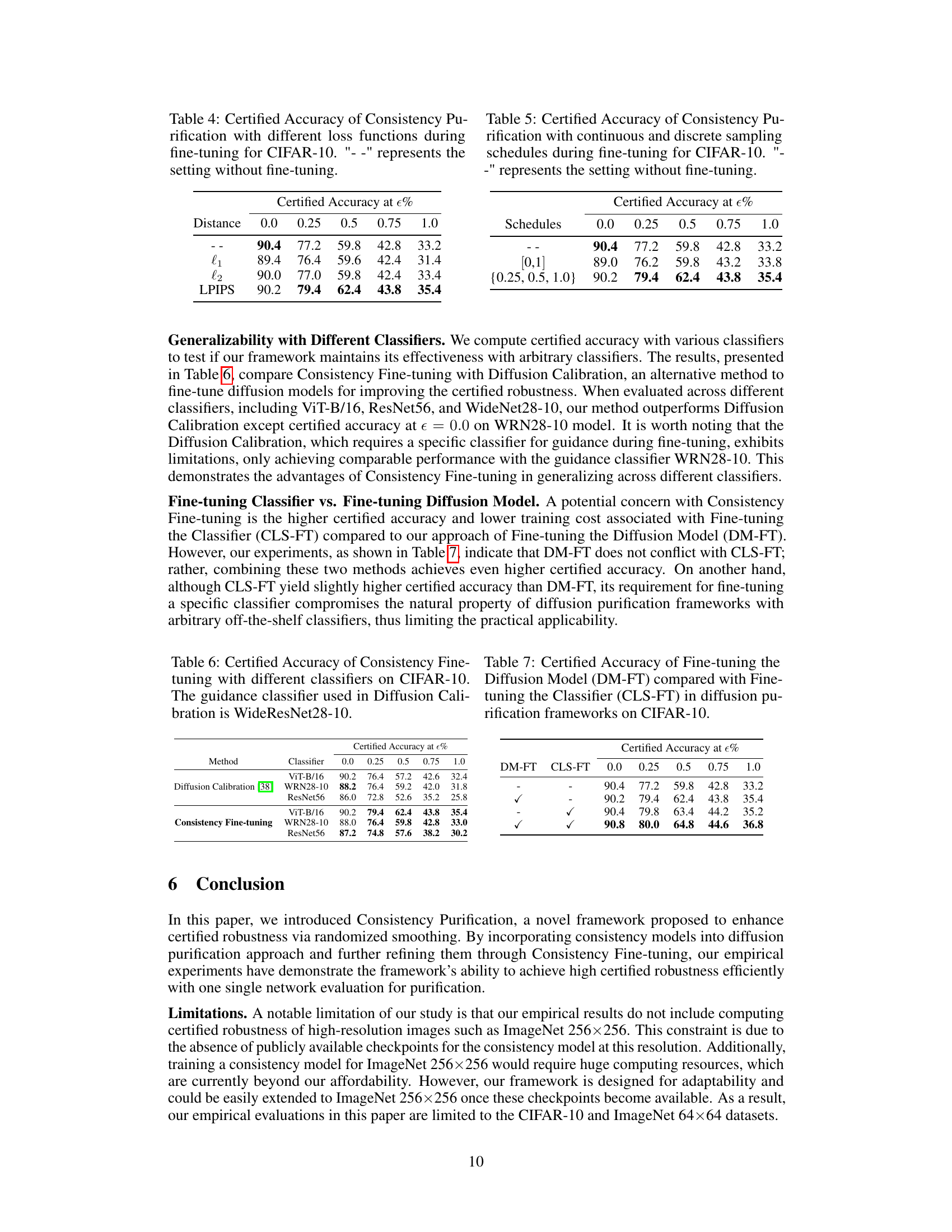

This table presents the certified accuracy results of the Consistency Purification method on the CIFAR-10 dataset, comparing different loss functions used during the fine-tuning stage. The ‘–’ row shows the results without fine-tuning. It demonstrates how different loss functions (l1, l2, and LPIPS) impact the model’s performance in terms of certified accuracy at various noise levels (0.0, 0.25, 0.5, 0.75, and 1.0). The LPIPS loss consistently shows superior performance, highlighting its effectiveness in maintaining semantic alignment between purified and original images.

This table presents the certified accuracy of the Consistency Purification method and several baselines on CIFAR-10 and ImageNet-64 datasets. The certified accuracy is evaluated at various noise levels (epsilon). It shows the purification steps (One Step vs. Multi Steps) and highlights the significant improvement achieved by Consistency Purification, particularly when combined with Consistency Fine-tuning, over the onestep-DDPM baseline and other methods like onestep-EDM, PF-ODE EDM, and Diffusion Calibration.

This table presents the certified accuracy results of the Consistency Purification method and its comparison with several baselines on CIFAR-10 and ImageNet-64 datasets. The certified accuracy is evaluated at different noise levels (ϵ) using various classifiers. The table shows the purification steps (One Step or Multi Steps) required for each method and highlights the performance gains achieved with Consistency Purification and its fine-tuning variant.

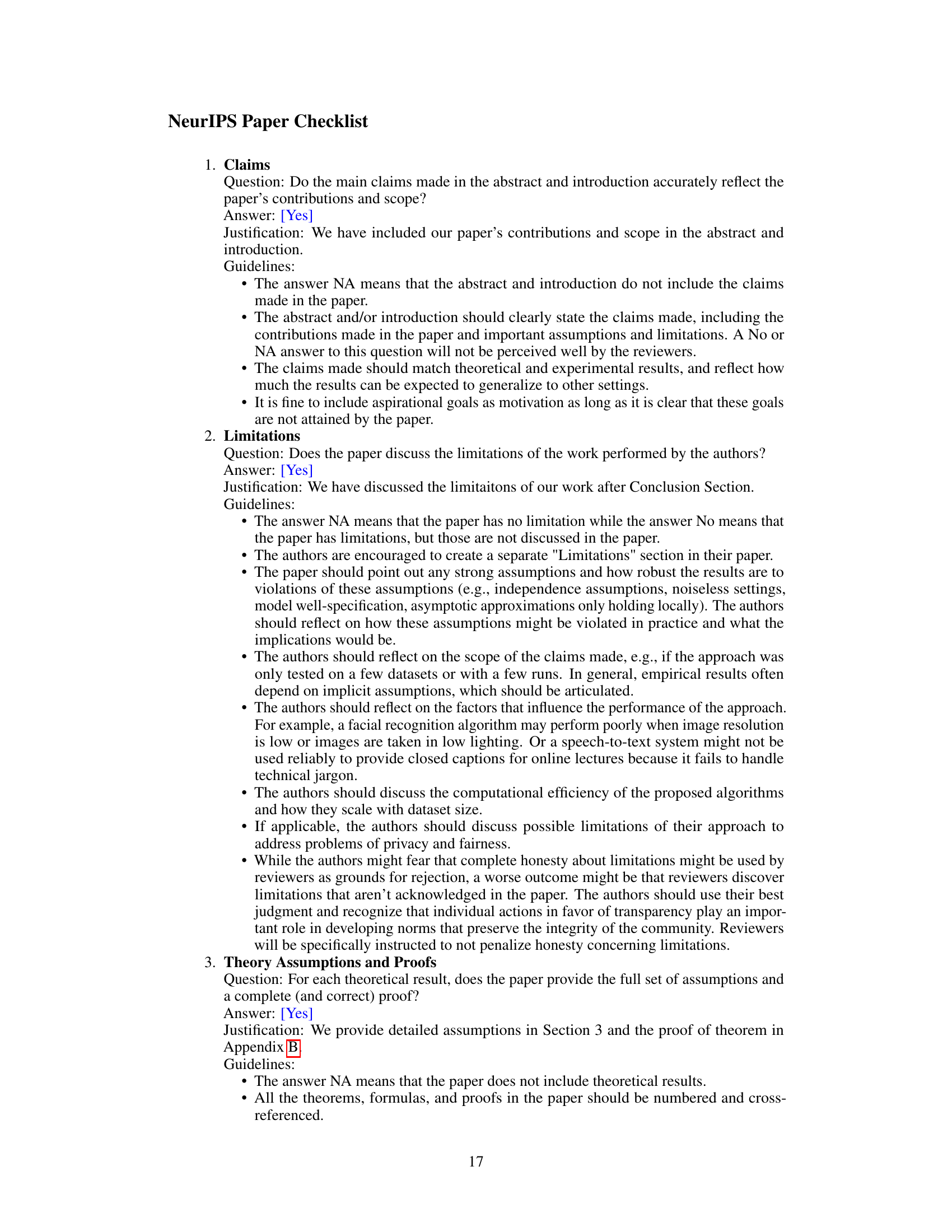

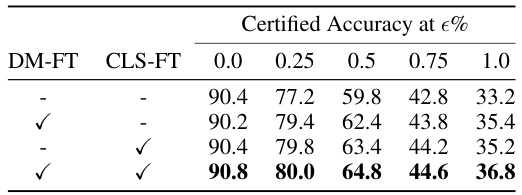

This table presents the certified accuracy results on CIFAR-10 for different fine-tuning strategies. It compares the performance of fine-tuning only the diffusion model (DM-FT), fine-tuning only the classifier (CLS-FT), and fine-tuning both the diffusion model and the classifier. The results are shown for various certified accuracy thresholds (epsilon).

Full paper#