↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Traditional Look-Up Table (LUT)-based methods for image restoration face a storage explosion problem, hindering their application on resource-limited edge devices. This is because the LUT size increases exponentially with the convolution kernel size, making it impractical to deploy complex models on such devices. Existing solutions, while improving accuracy, fail to mitigate this storage issue sufficiently.

TinyLUT introduces an innovative solution by employing a separable mapping strategy, decoupling the kernel and activation to transform the storage from exponential to linear dependence on kernel size. Furthermore, it incorporates a dynamic discretization mechanism to further reduce storage. This combined approach leads to a dramatic reduction in LUT storage—over 7x from separable mapping and an additional 4.48x from dynamic discretization—while maintaining accuracy comparable to or exceeding existing methods and significantly reducing inference latency. The result is efficient image restoration even on low-power edge devices.

Key Takeaways#

Why does it matter?#

This paper is important because it addresses the critical challenge of storage explosion in LUT-based image restoration methods, a significant bottleneck for edge device applications. By introducing innovative separable mapping and dynamic discretization mechanisms, it achieves significant storage reduction without compromising accuracy, making high-performance image restoration feasible on resource-constrained devices. This opens new avenues for deploying computationally intensive CNN models on edge devices and has implications for various computer vision tasks. This work also introduces a new state-of-the-art accuracy for image super-resolution and denoising on edge devices.

Visual Insights#

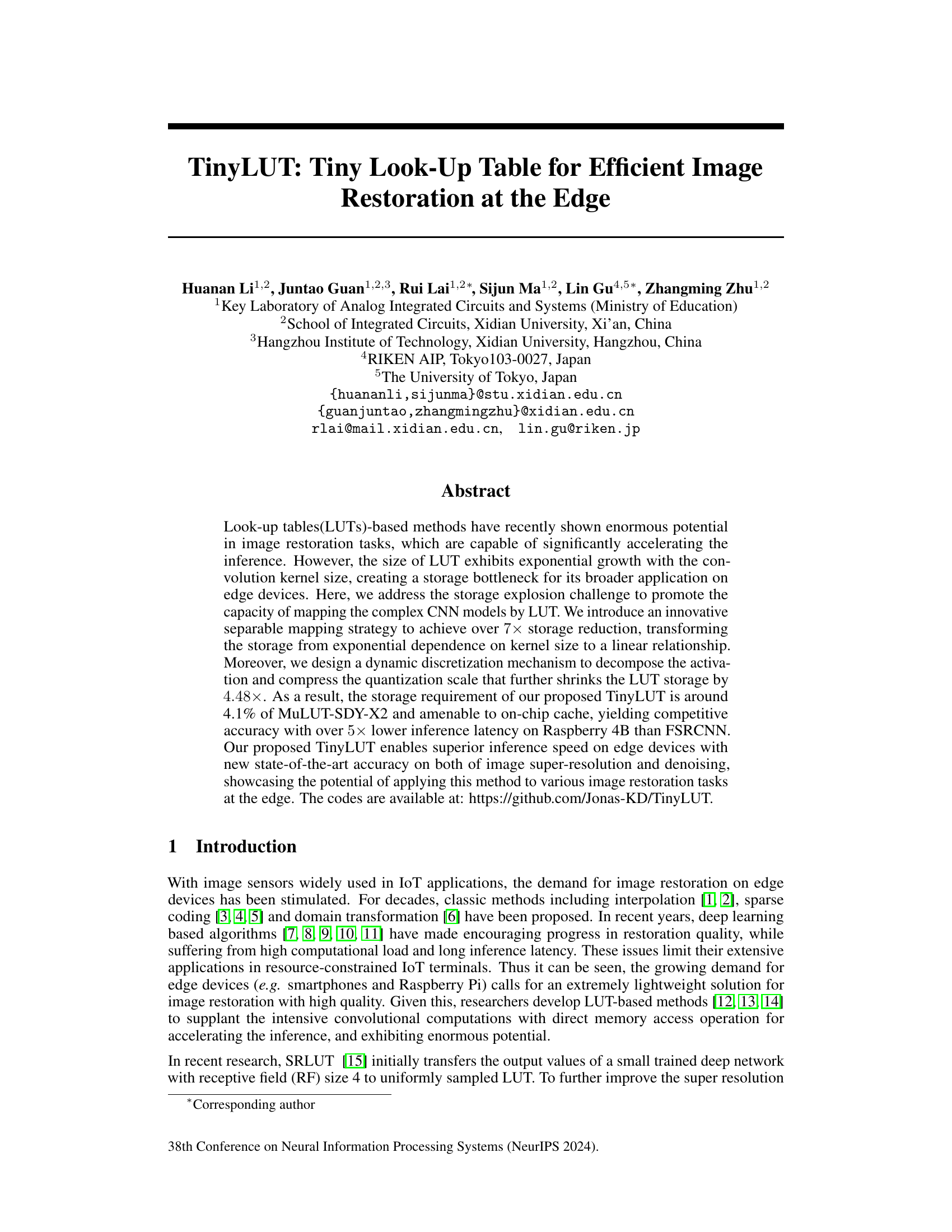

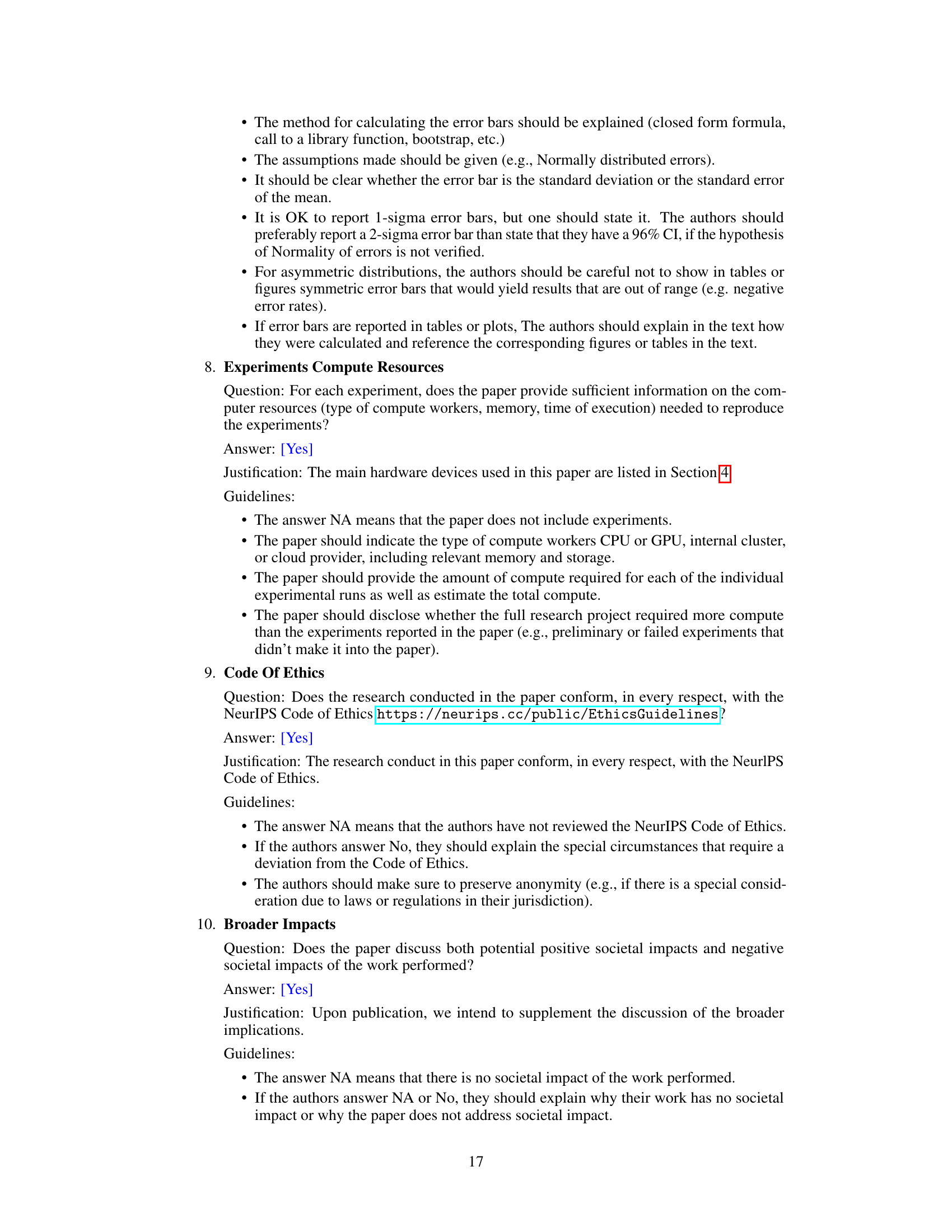

This figure compares the performance of TinyLUT against other state-of-the-art LUT-based image super-resolution algorithms on the Set5 benchmark dataset. The x-axis represents the LUT volume (in KB), and the y-axis represents the Peak Signal-to-Noise Ratio (PSNR) achieved at a 4x upscaling factor. TinyLUT demonstrates superior accuracy (higher PSNR) while requiring significantly less memory (smaller LUT volume) than competing methods.

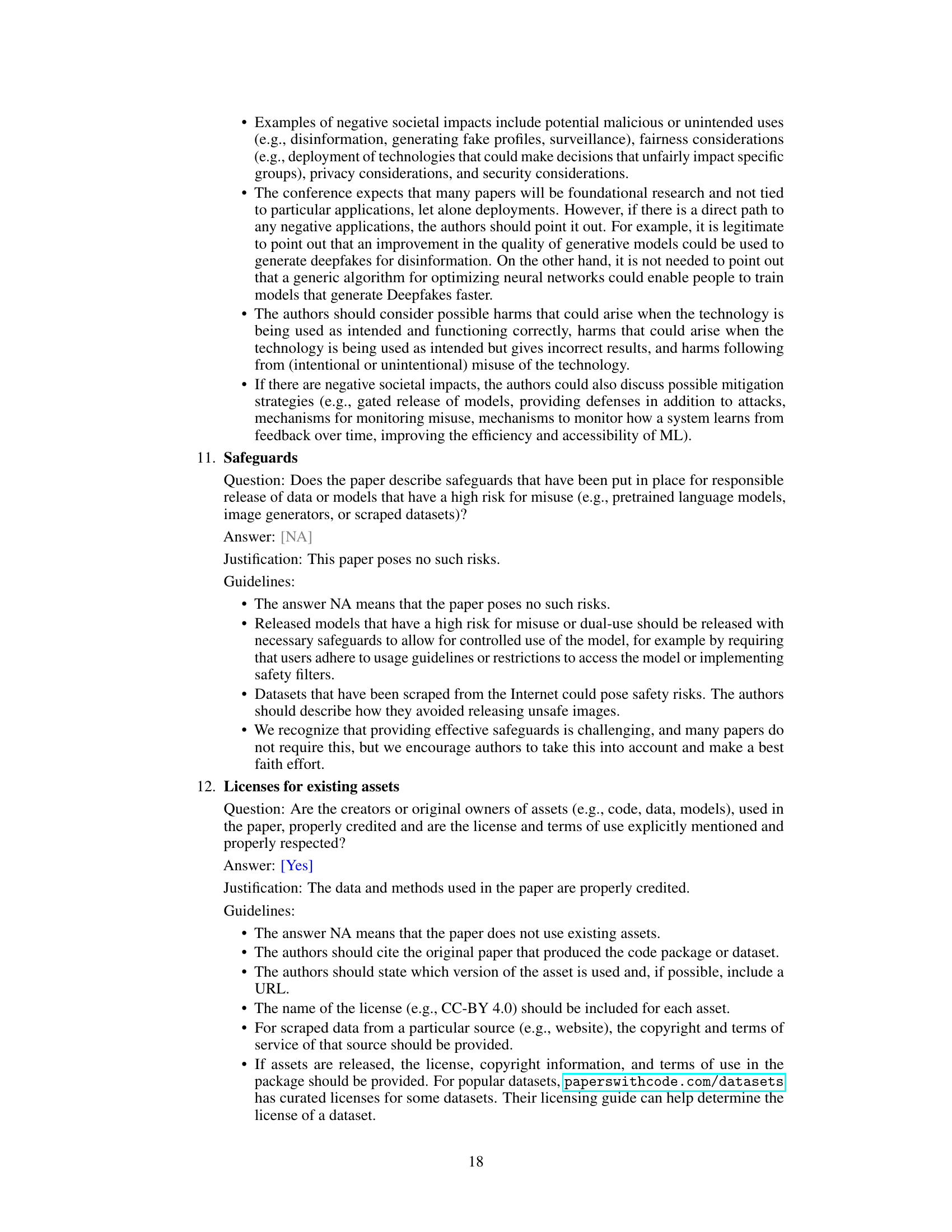

This table compares the size of Lookup Tables (LUTs) for different receptive field (RF) sizes using various optimization techniques. It shows the full size of LUT, the size after applying the SRLUT method, and the further reductions achieved with the Separable Mapping Strategy (SMS) and the combined SMS and Dynamic Discretization Mechanism (DDM). The table highlights the significant storage reduction achieved by these methods, making LUT-based approaches more feasible for edge devices.

In-depth insights#

TinyLUT: Storage Crunch#

The heading “TinyLUT: Storage Crunch” aptly captures a critical challenge in applying Look-Up Table (LUT)-based methods to image restoration tasks, especially on resource-constrained edge devices. LUTs offer significant speed advantages over traditional Convolutional Neural Networks (CNNs) for inference, but their storage requirements grow exponentially with kernel size. This exponential growth creates a storage bottleneck, severely limiting the practicality of LUTs for complex image restoration operations. The core of TinyLUT’s innovation lies in its elegant approach to alleviate this storage crunch. By employing a separable mapping strategy, TinyLUT dramatically reduces storage needs, transforming the relationship from exponential to linear. Further storage compression is achieved via a dynamic discretization mechanism, optimizing activation quantization. This two-pronged approach results in a significantly smaller LUT, making TinyLUT feasible for edge deployment while maintaining competitive accuracy and inference speed. Addressing this “storage crunch” is thus pivotal to the wider adoption of LUTs in resource-limited settings, opening doors to efficient real-time image restoration on a variety of devices.

Separable Mapping#

Separable mapping, in the context of optimizing look-up table (LUT) based image restoration methods, is a crucial technique to mitigate the storage explosion problem. The core idea is to decompose a high-dimensional convolution operation into multiple lower-dimensional operations, significantly reducing the number of entries required in the LUT. This is achieved by cleverly separating the spatial and channel dimensions of the convolution kernel, allowing for parallel processing and dramatically shrinking the LUT’s size from exponential to linear growth concerning kernel size. The effectiveness of separable mapping hinges on the trade-off between accuracy and memory efficiency. While it offers a substantial reduction in storage requirements, potentially enabling on-device deployment, some loss of accuracy is inevitable due to the inherent approximation introduced by the decomposition. Therefore, clever design considerations are critical to minimize this accuracy loss while still maximizing the benefits of reduced storage. The success of this approach depends heavily on the specific application and the choice of decomposition strategy, highlighting the need for further exploration and optimization techniques tailored to different image restoration tasks.

Dynamic Discretization#

Dynamic discretization, in the context of optimizing look-up tables (LUTs) for efficient image restoration, is a crucial technique to reduce storage needs. Instead of using a fixed, uniform quantization of activation values, dynamic discretization adapts the quantization levels based on the data’s characteristics. This approach cleverly leverages a learnable clipping mechanism to fine-tune the precision of the quantization dynamically. By doing this, it achieves a significant reduction in the size of the LUTs without compromising accuracy excessively. The method’s key advantage lies in its data-driven nature, allowing it to optimally compress the activation data without excessive information loss. This adaptable quantization strategy, in contrast to fixed-point quantization, leads to more efficient use of the limited storage capacity available for edge devices, making the system more suitable for real-time applications.

Edge Device Speedup#

The concept of ‘Edge Device Speedup’ in the context of image restoration using look-up tables (LUTs) centers on reducing computational latency and memory footprint for improved performance on resource-constrained edge devices. The core idea is to replace computationally expensive convolutional neural network (CNN) operations with fast LUT lookups. This involves pre-computing and storing the results of CNN operations in the LUT, allowing for near-instantaneous retrieval during inference. However, a major challenge is the exponential growth of LUT size with increasing kernel size, making it impractical for larger kernels used in high-performing CNNs. The proposed TinyLUT method tackles this problem using a separable mapping strategy to reduce storage and dynamic discretization to further compress the data. The result is a significant decrease in inference latency and storage requirements, demonstrating a substantial speedup on edge devices like Raspberry Pi, while maintaining comparable accuracy to more complex CNN-based approaches. Achieving a balance between speed, memory efficiency, and accuracy on edge devices is crucial, and TinyLUT provides a compelling solution for efficient image restoration in resource-limited environments.

Future Directions#

The ‘Future Directions’ section of this research paper on TinyLUT, a tiny look-up table for efficient image restoration, could fruitfully explore several avenues. Extending TinyLUT’s applicability beyond image super-resolution and denoising to other image restoration tasks such as deblurring, inpainting, and colorization would significantly broaden its impact. Investigating the unified mapping approach for diverse model architectures like transformers and CNNs would enhance its versatility. Additionally, researching optimizations for specific hardware platforms to maximize TinyLUT’s performance on edge devices is crucial. Furthermore, a detailed analysis of the trade-offs between accuracy, storage, and computational efficiency across various scenarios would refine the algorithm. Exploring techniques to handle higher-resolution images effectively and expanding the support for various color depths are necessary for wider adoption. Finally, the development of robust methods for handling noisy or incomplete data would strengthen TinyLUT’s real-world applicability.

More visual insights#

More on figures

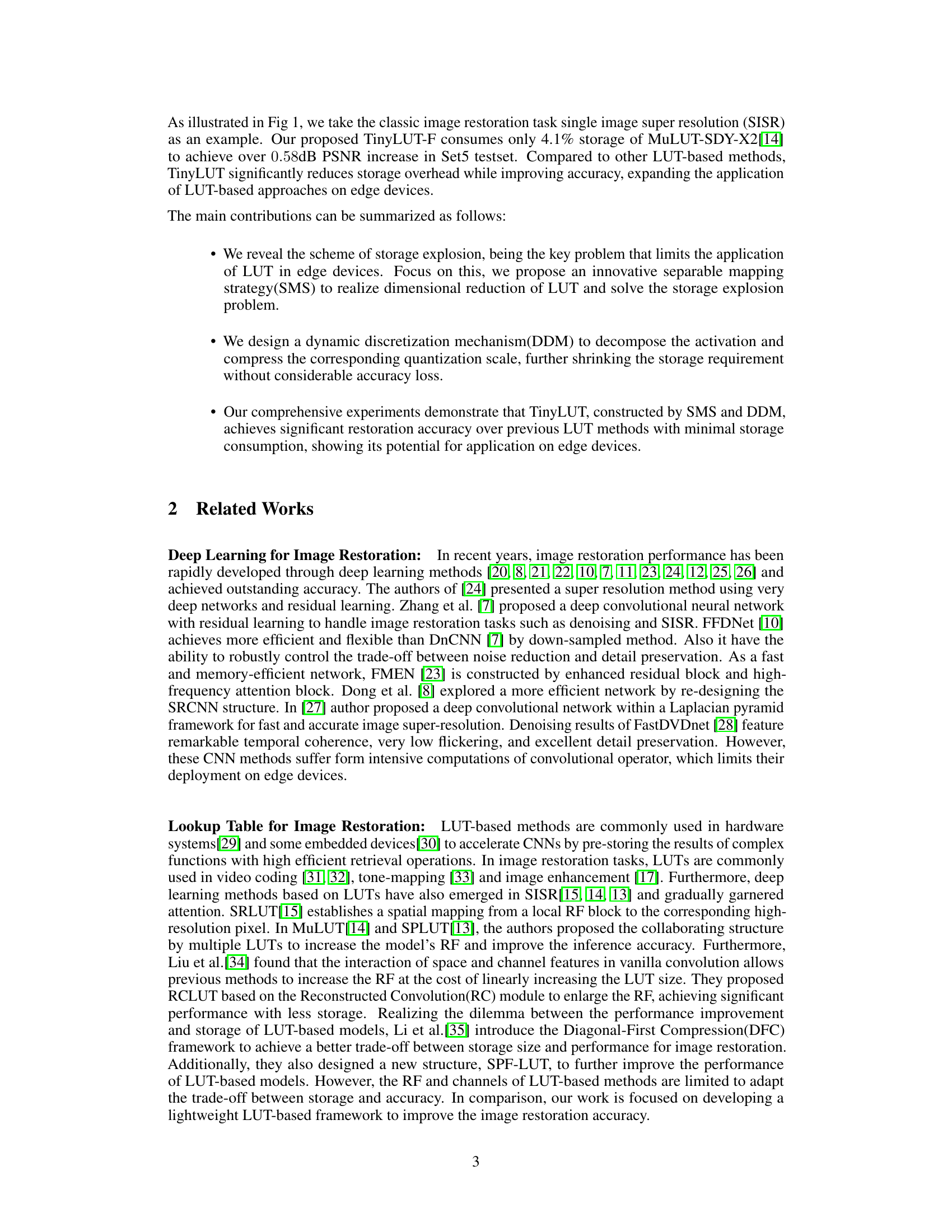

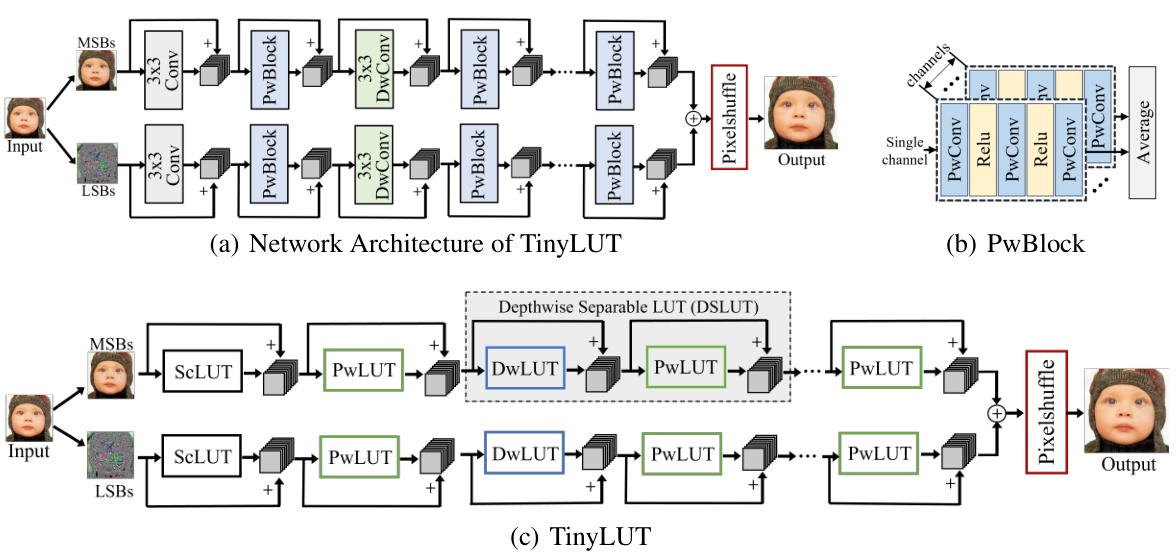

This figure provides a detailed illustration of the TinyLUT architecture. (a) shows the overall network structure, highlighting the two parallel branches processing MSBs and LSBs data, respectively, and the use of cascaded LUTs. (b) focuses on the PwBlock, detailing its structure and function in cross-channel feature integration. Finally, (c) provides a closer look at the LUT framework in TinyLUT, illustrating the organization of depthwise separable LUTs (DSLUTs) and pointwise LUTs (PwLUTs).

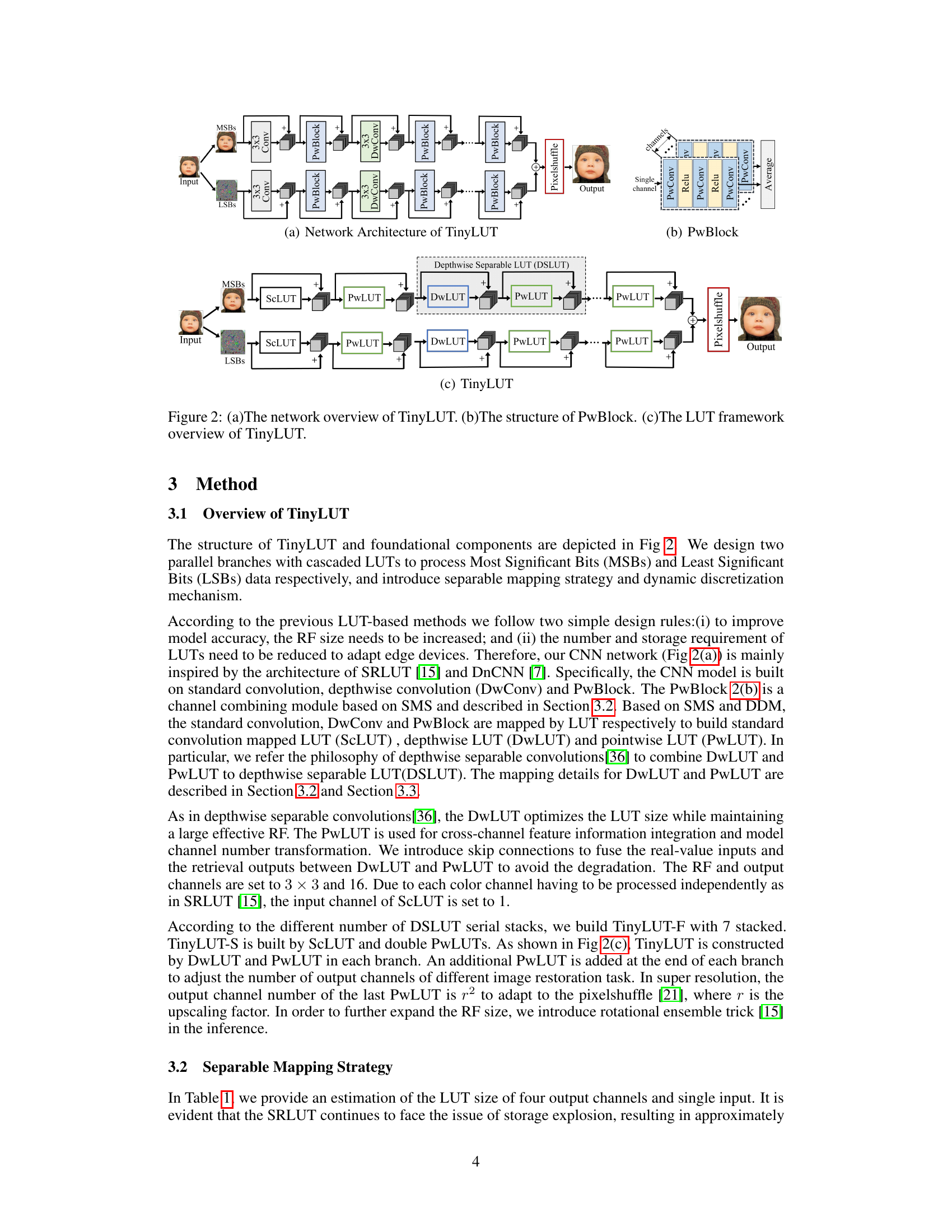

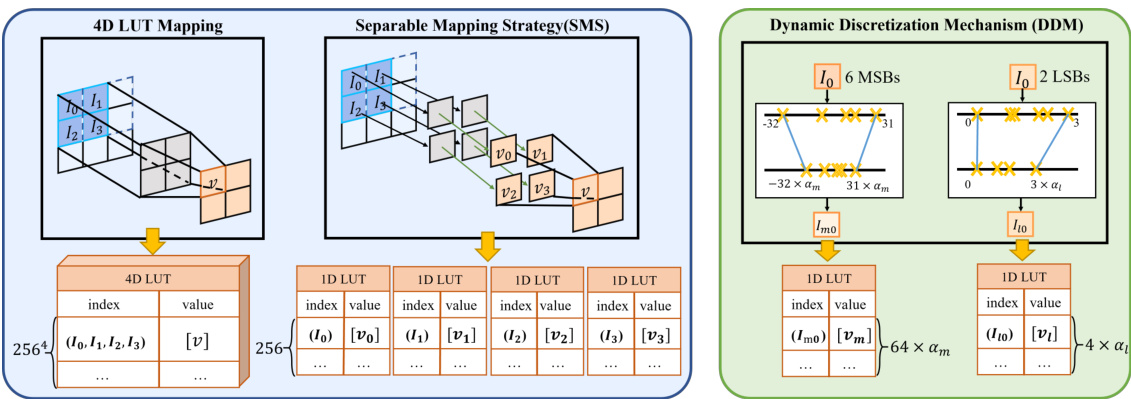

This figure illustrates the comparison of the traditional 4D LUT mapping method and the proposed TinyLUT method. The left side shows how a 2x2 input convolution is mapped to a 4-dimensional LUT, resulting in a large storage requirement. The right side demonstrates the proposed separable mapping strategy (SMS) and dynamic discretization mechanism (DDM). SMS decomposes the convolution kernel into smaller parts, reducing the storage complexity. DDM further compresses the activation data, resulting in an even smaller LUT size. The figure demonstrates how TinyLUT effectively reduces the storage size compared to traditional methods while maintaining competitive accuracy.

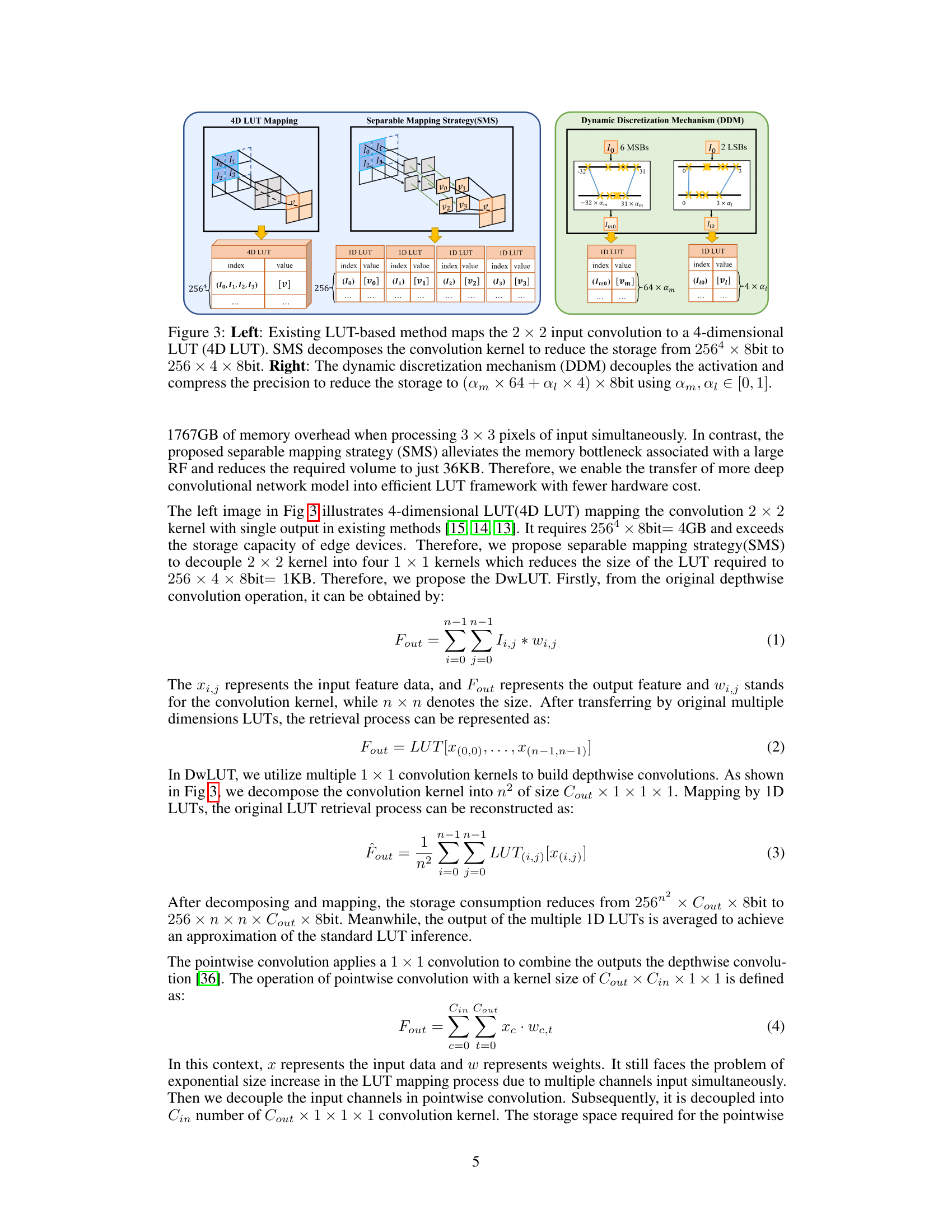

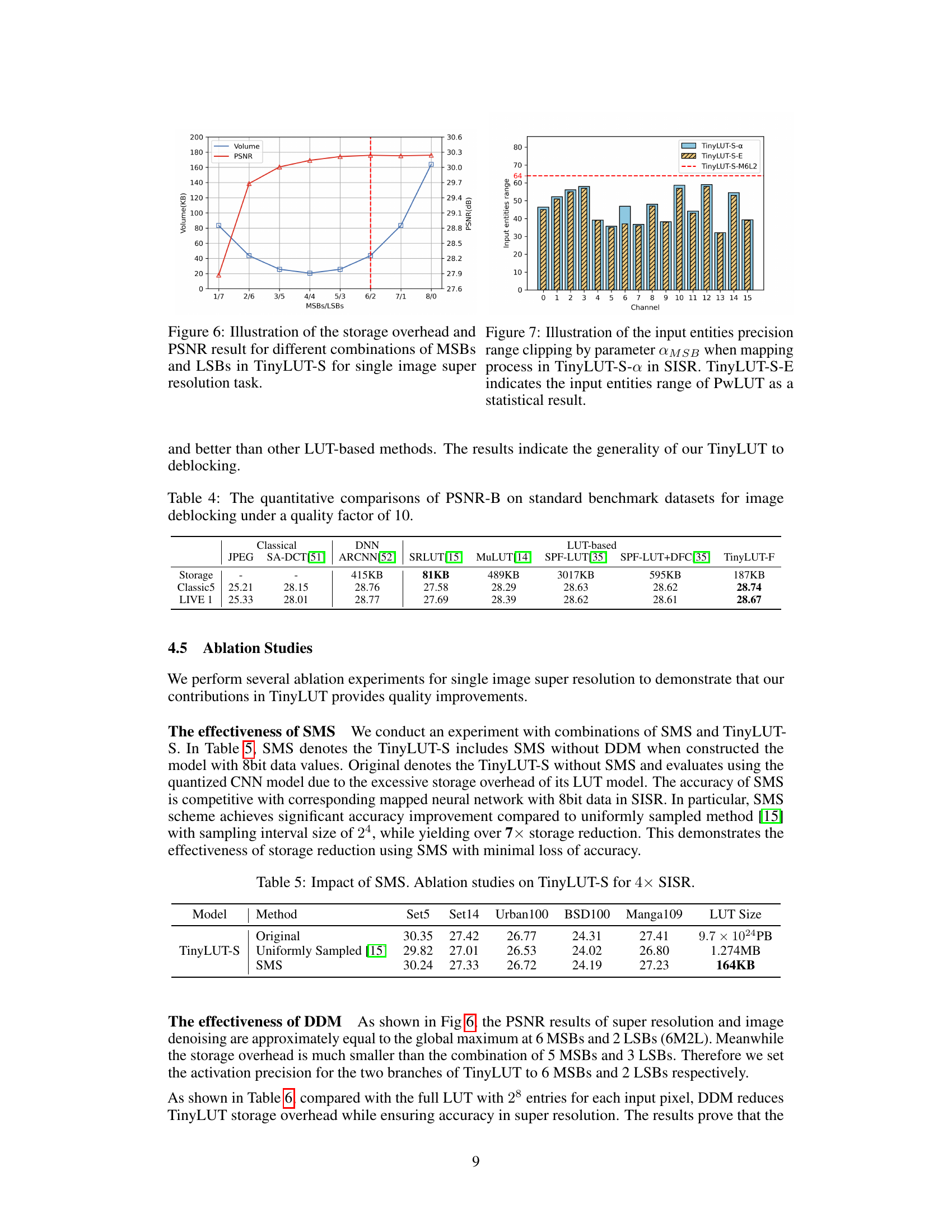

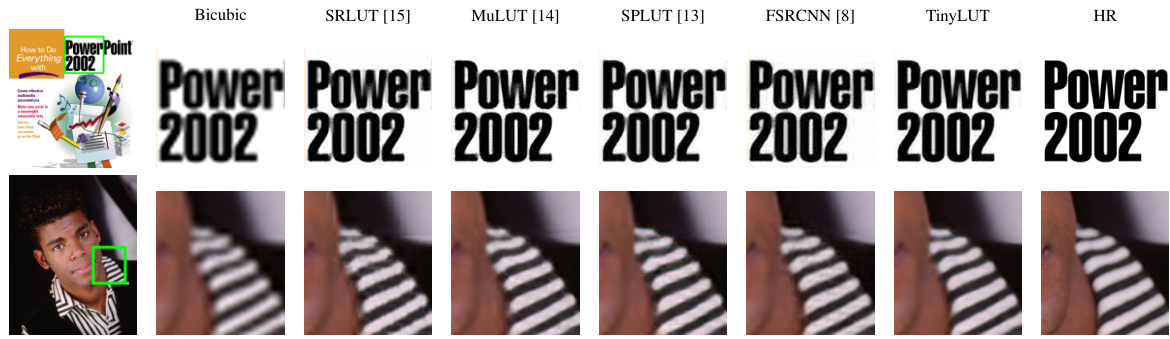

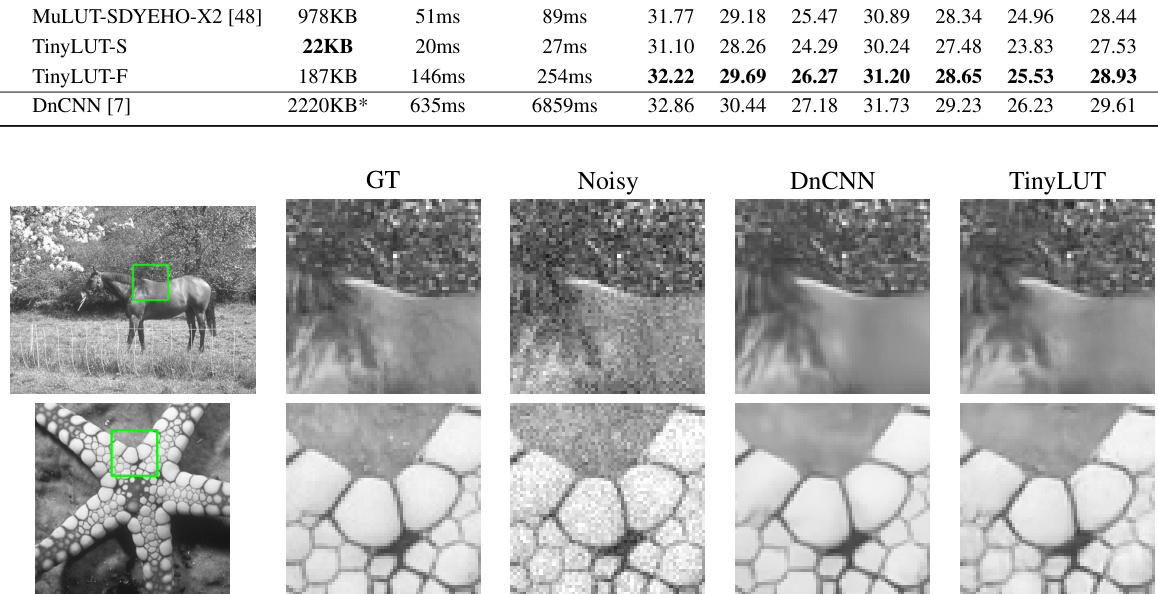

This figure displays a qualitative comparison of image super-resolution results from different methods. Two example images are shown: one of text and another of a person. Each column represents a different super-resolution technique: Bicubic interpolation, SRLUT, MuLUT, SPLUT, FSRCNN, the authors’ TinyLUT method, and finally the ground truth high-resolution (HR) image. The visual differences highlight the strengths and weaknesses of each method in terms of sharpness, artifacts (e.g., ringing), and overall visual quality.

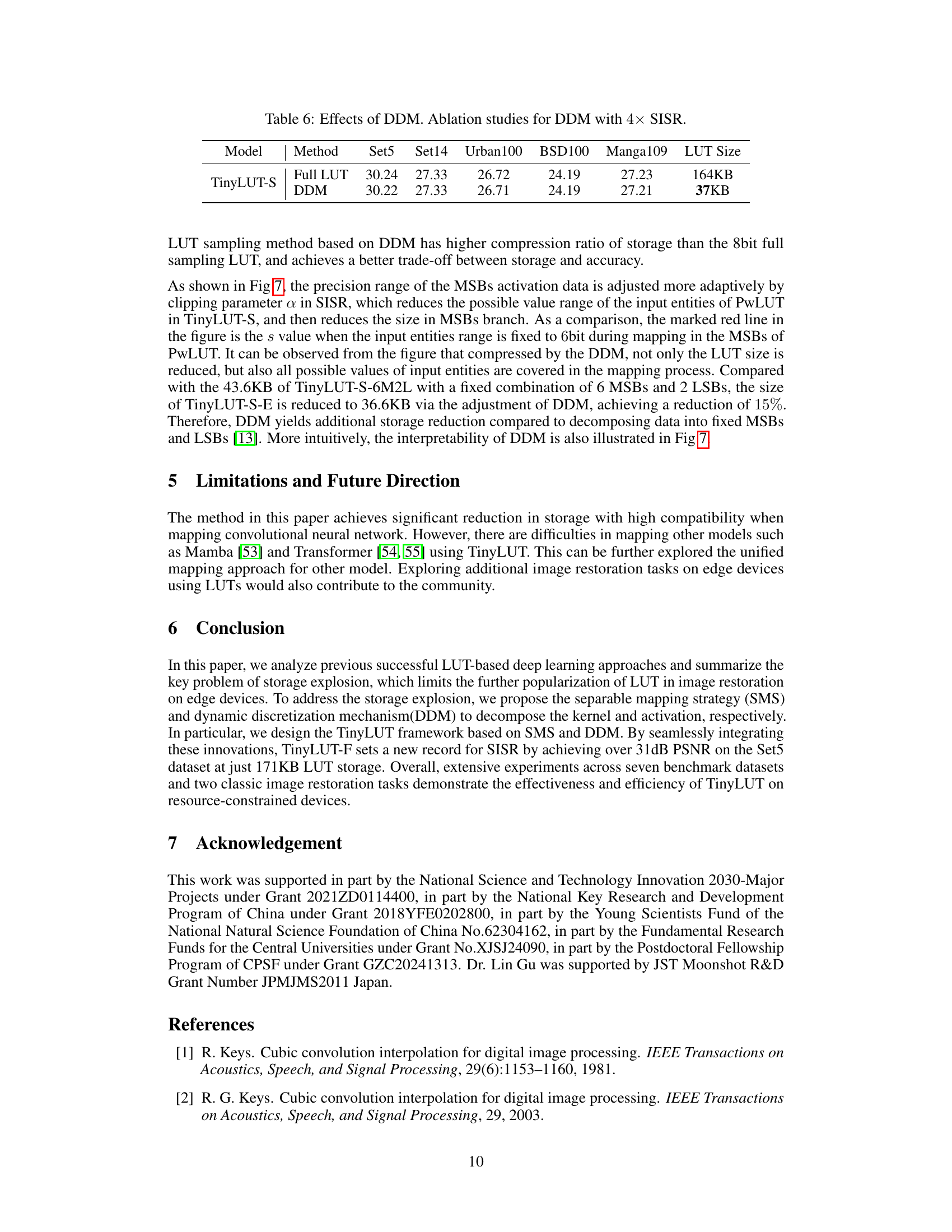

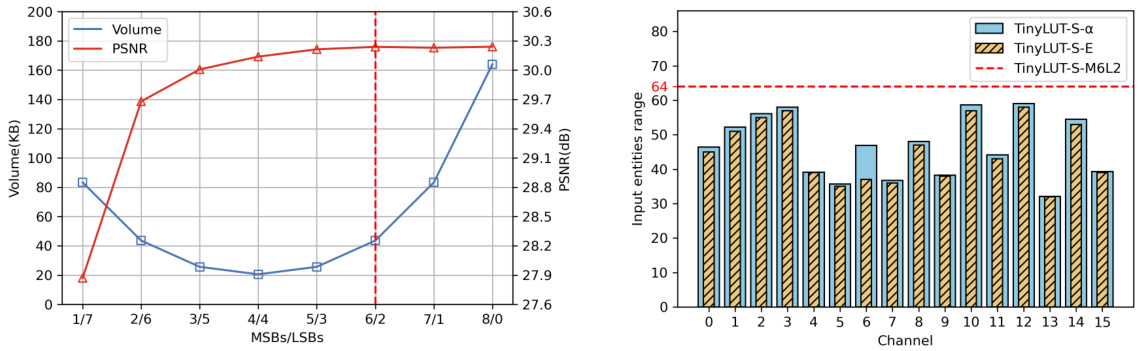

This figure shows two plots. The left plot shows the relationship between the number of MSBs and LSBs bits used and the storage volume and PSNR value obtained for single image super-resolution using TinyLUT-S. The right plot displays the input entities precision range for each channel using the parameter QMSB for mapping process in TinyLUT-S-a. The results show an optimal balance between storage and accuracy around 6 MSBs and 2 LSBs.

More on tables

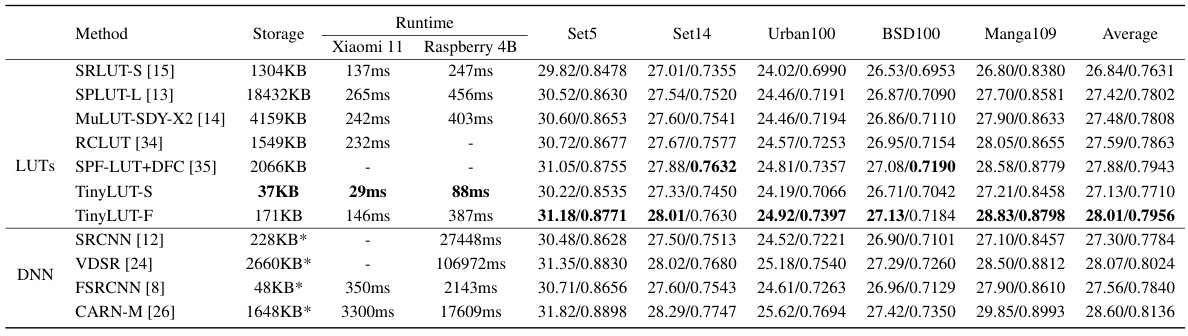

This table presents a quantitative comparison of TinyLUT and other state-of-the-art methods on five standard image super-resolution (SISR) datasets with an upscaling factor of 4. The metrics used for comparison are Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM). The table also shows the storage requirements of each method and inference latency on two different hardware platforms (Xiaomi 11 and Raspberry Pi 4B). The results demonstrate that TinyLUT achieves better accuracy with significantly lower memory consumption.

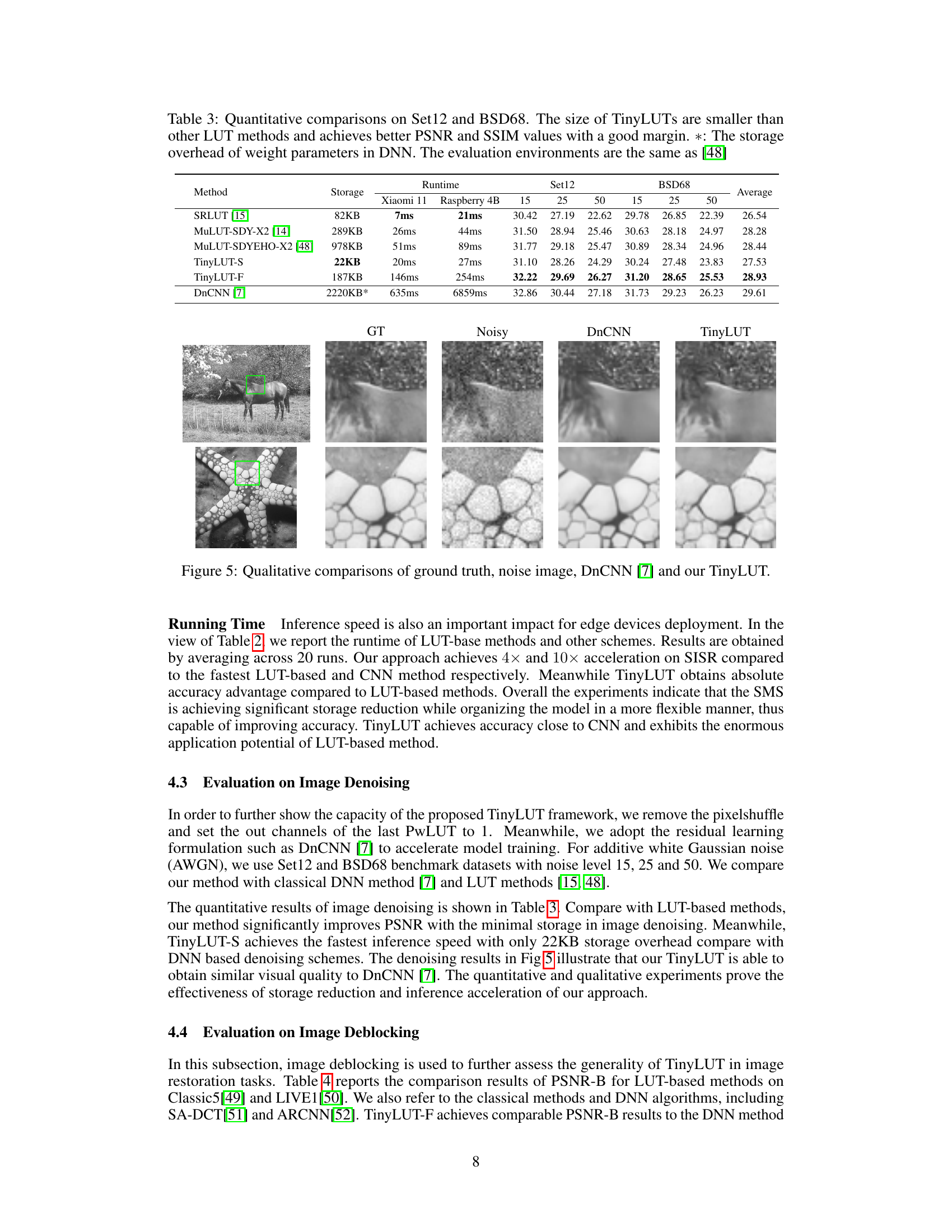

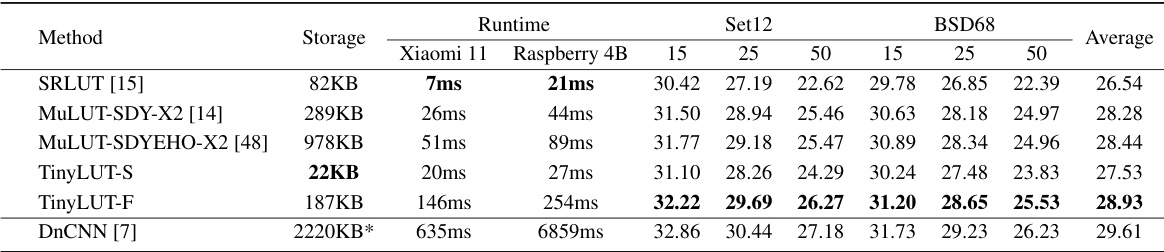

This table presents a quantitative comparison of different super-resolution methods on the Set12 and BSD68 datasets. The metrics used are PSNR and SSIM. The table highlights that TinyLUT achieves better results with significantly lower storage requirements compared to other Look-Up Table (LUT) based methods and even a Deep Convolutional Neural Network (DnCNN). The inference latency is also compared on two different devices.

This table presents a quantitative comparison of TinyLUT with other state-of-the-art LUT-based and DNN-based methods for single image super-resolution (SISR) tasks on five standard benchmark datasets (Set5, Set14, Urban100, BSD100, Manga109). The comparison includes PSNR and SSIM values, storage consumption (in KB), and inference latency (runtime in ms) on two different edge devices (Xiaomi 11 and Raspberry 4B). The results demonstrate that TinyLUT achieves superior performance (higher PSNR/SSIM) with significantly lower storage and latency compared to the alternatives.

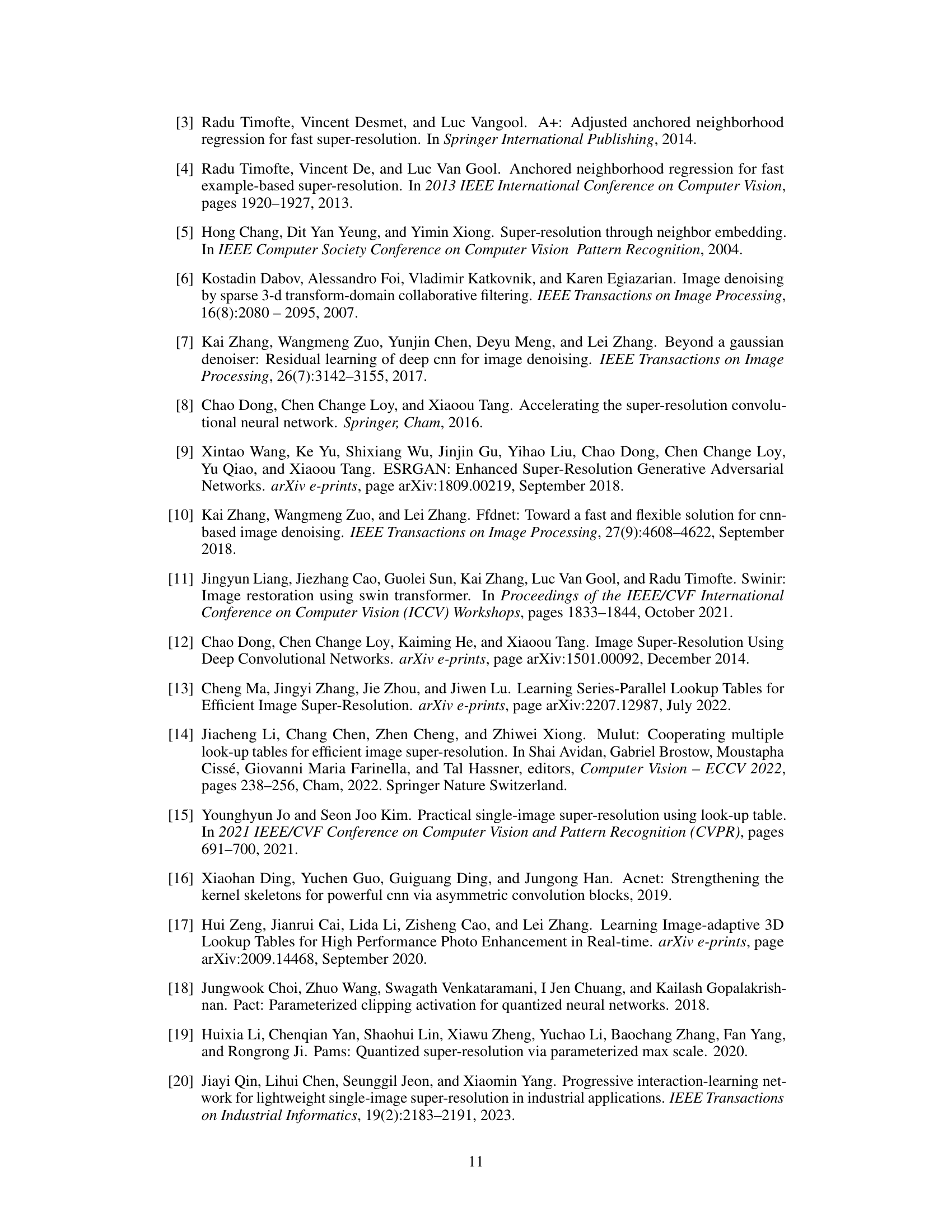

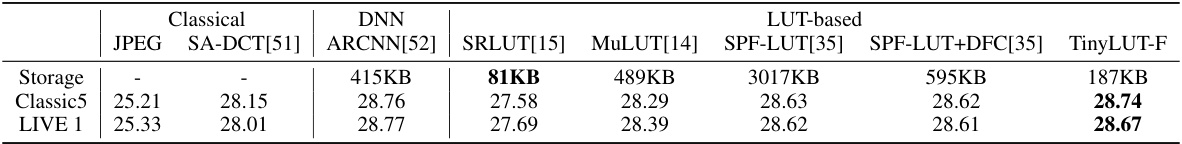

This table presents a comparison of PSNR-B (Peak Signal-to-Noise Ratio for deblocking) scores achieved by various methods on two standard benchmark datasets for image deblocking (Classic5 and LIVE1). The methods compared include classical JPEG compression, two deep learning-based methods (SA-DCT and ARCNN), and several lookup table (LUT)-based approaches (SRLUT, MuLUT, SPF-LUT, SPF-LUT+DFC), and the authors’ proposed TinyLUT-F method. The table shows that the TinyLUT-F method achieves comparable PSNR-B scores to the DNN (deep neural network) methods while having a significantly smaller model size.

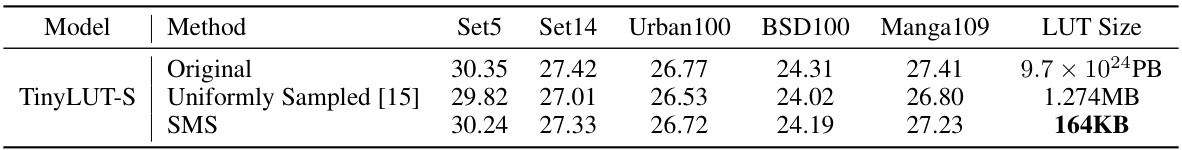

This table presents the ablation study results on the impact of the Separable Mapping Strategy (SMS) in TinyLUT-S model for image super-resolution (SISR) with an upscaling factor of 4. It compares the performance (PSNR in dB) on different benchmark datasets (Set5, Set14, Urban100, BSD100, Manga109) using three methods: Original (without SMS), Uniformly Sampled [15] (a previous method), and SMS (the proposed method). It also shows the LUT size for each method. The results demonstrate SMS’s effectiveness in achieving significant storage reduction with minimal performance loss.

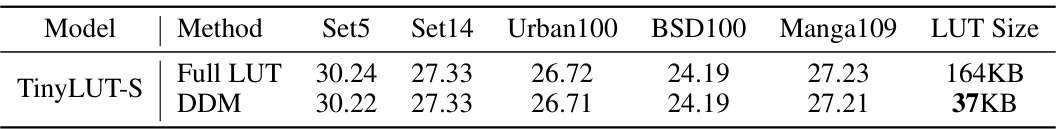

This table presents the ablation study results on the impact of the Dynamic Discretization Mechanism (DDM) on the TinyLUT-S model for image super-resolution with a 4x upscaling factor. It compares the performance (PSNR values on Set5, Set14, Urban100, BSD100, and Manga109 datasets) and LUT size of the TinyLUT-S model using the full LUT and DDM. The results demonstrate the effectiveness of DDM in reducing LUT size while maintaining comparable accuracy.

Full paper#