TL;DR#

Current Large Language Models (LLMs) are costly to operate and predominantly use subscription models, limiting accessibility. Integrating ads offers a potential solution for funding LLMs while maintaining content integrity. However, effectively incorporating ads without negatively affecting user experience poses a significant challenge. This necessitates the development of efficient and fair auction mechanisms for ad allocation within LLM outputs.

This research tackles the above challenges by proposing novel segment auction mechanisms for placing ads within LLM-generated text using the RAG framework. The core contribution is a segment auction that maximizes “logarithmic social welfare,” a new metric balancing efficiency and fairness. The paper also presents results from simulations, demonstrating the feasibility and effectiveness of the approach, while highlighting the trade-offs between revenue and output quality in different ad allocation scenarios.

Key Takeaways#

Why does it matter?#

This paper is important because it proposes novel auction mechanisms for ad placement in Large Language Model (LLM) outputs, a crucial aspect for the sustainability of LLMs. It addresses the challenge of monetizing LLMs without compromising user experience, introducing a new metric for welfare that balances efficiency and fairness. The research opens avenues for further study in incentive-compatible pricing and the interplay between advertising and LLM output quality.

Visual Insights#

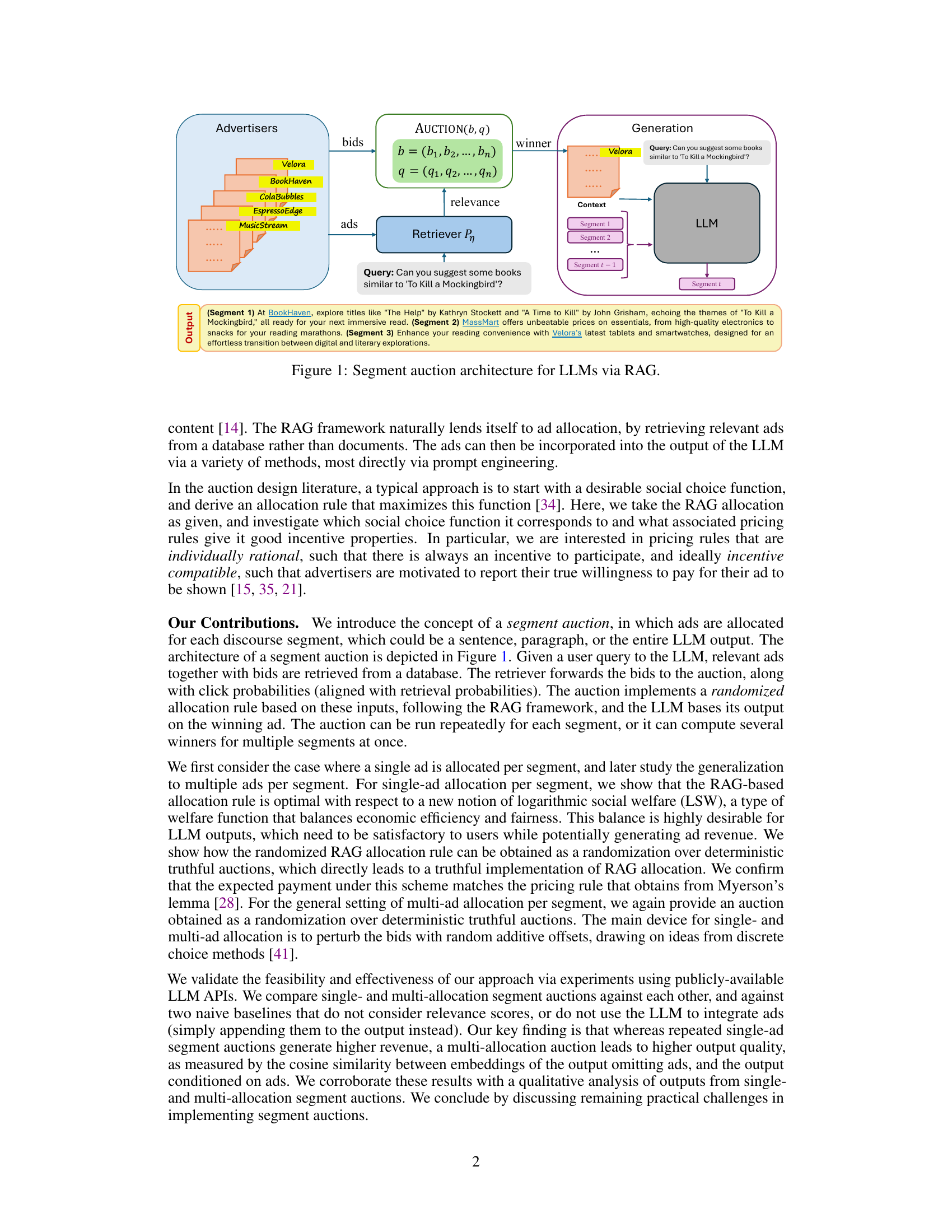

🔼 This figure illustrates the architecture of a segment auction for ad allocation in LLMs using Retrieval Augmented Generation (RAG). It shows how advertisers submit bids for their ads, and how these bids, along with the relevance of the ads to the user query, are used in an auction to select which ads to include in the LLM’s output for each segment. The retriever component retrieves ads and bids from a database. The auction determines the winning ad(s) for each segment. Finally, the LLM generates the output, incorporating the winning ads into the relevant segments. The query is used by the Retriever to select relevant ads for the auction.

read the caption

Figure 1: Segment auction architecture for LLMs via RAG.

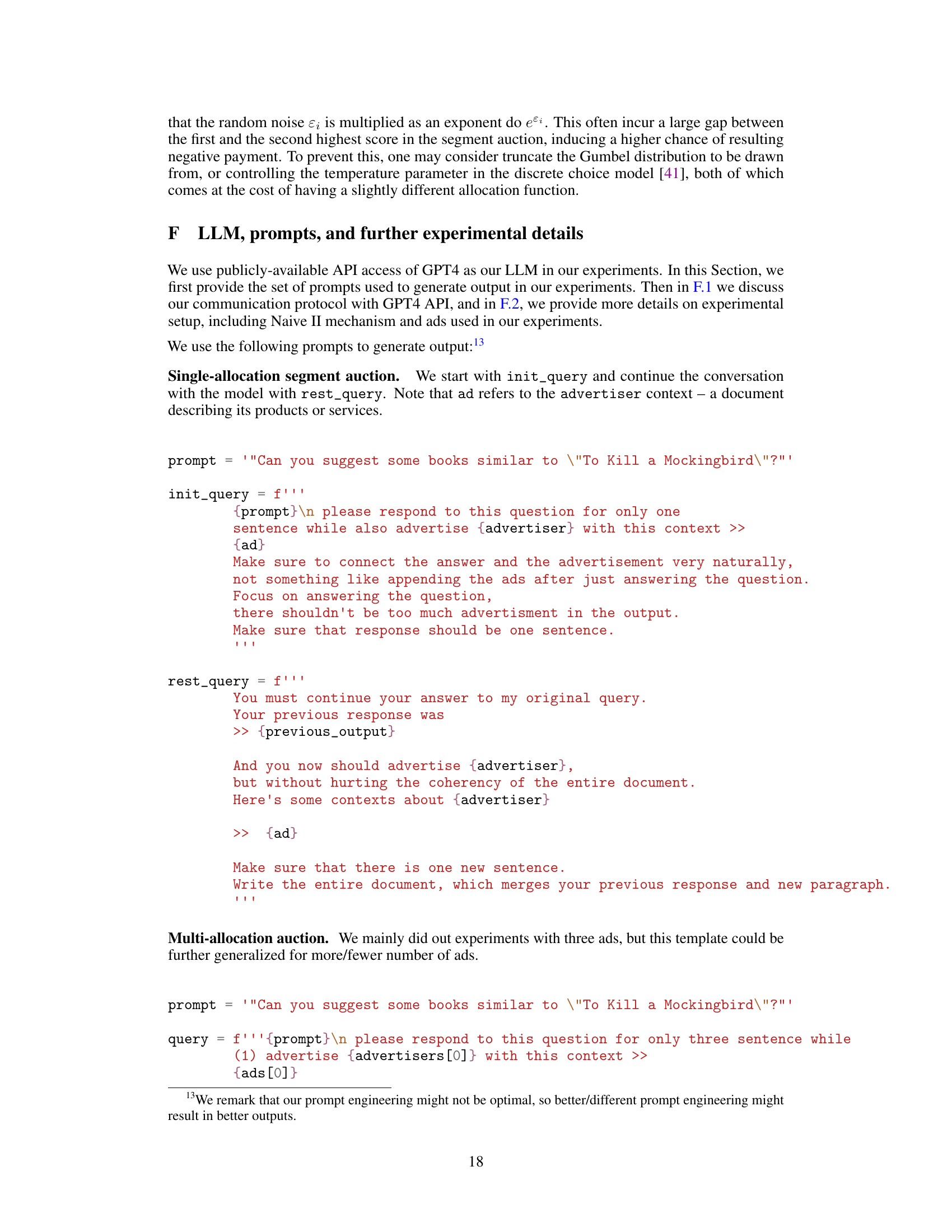

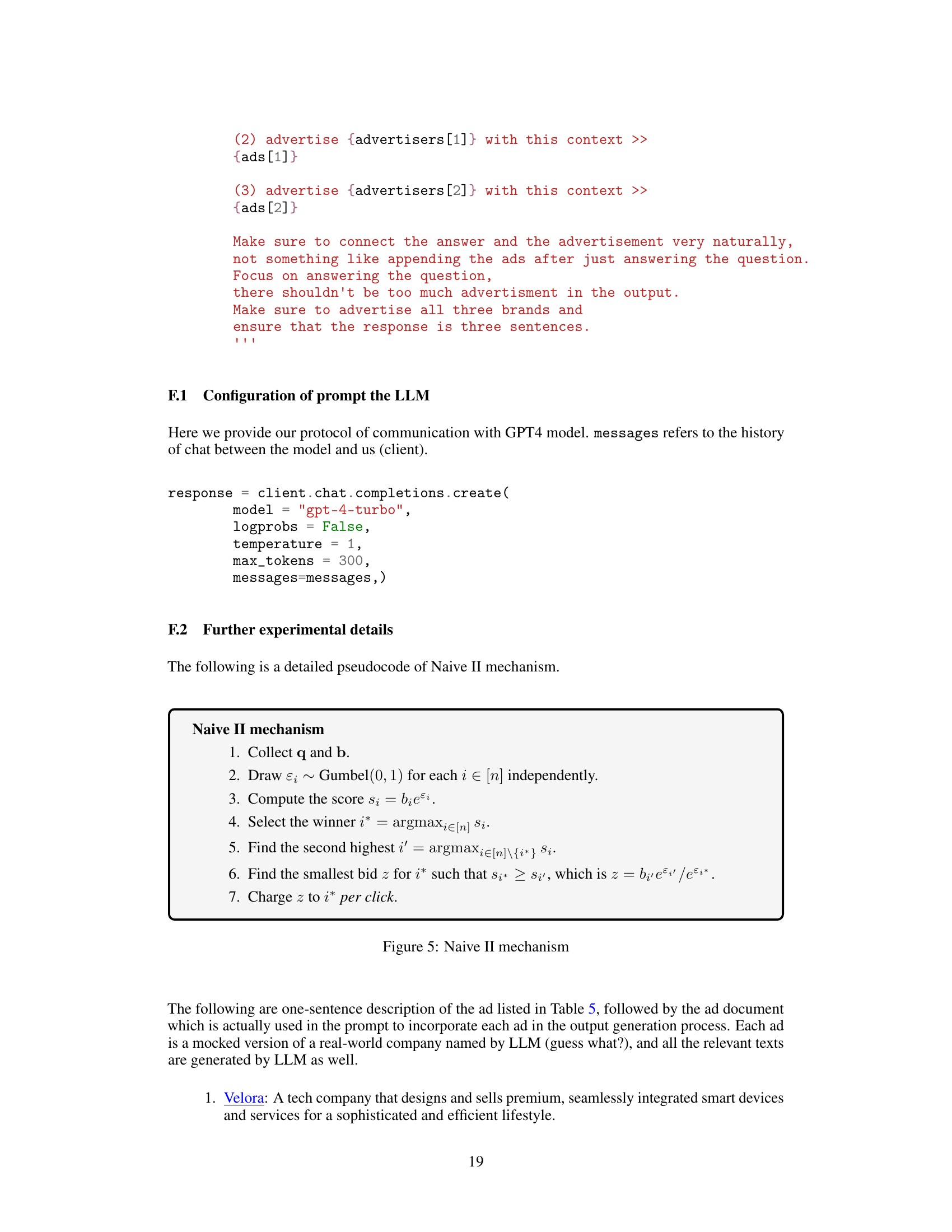

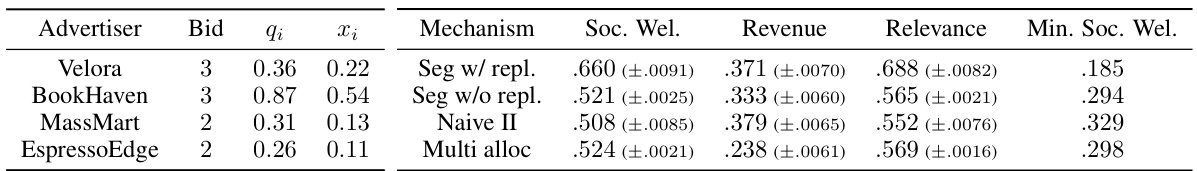

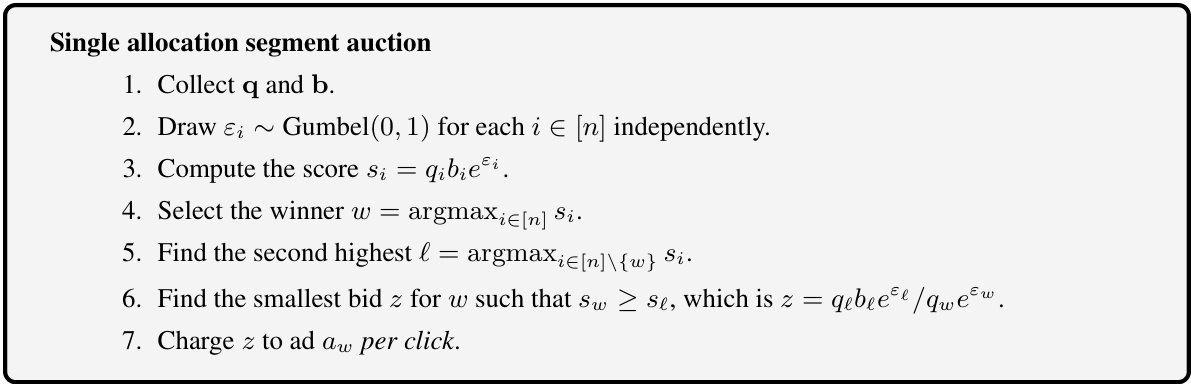

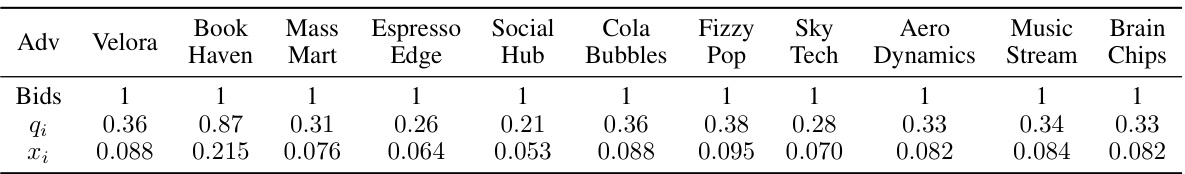

🔼 This table presents the setup of an experiment with four advertisers and their bids, along with the corresponding relevance and allocation probability for each. The right side shows the results of four different auction mechanisms on metrics such as social welfare, revenue, relevance, and minimum social welfare. All metrics are normalized for easy comparison.

read the caption

Table 1: Experiment setup (left), and the corresponding auction outcomes (right). Note that all metrics are normalized by dividing them by their maximum possible value.

In-depth insights#

LLM Ad Auction Design#

LLM ad auction design is a novel area integrating advertising into large language model (LLM) outputs. The core challenge is to seamlessly incorporate ads without compromising the user experience or LLM’s integrity. This requires mechanisms that balance revenue generation with maintaining output quality and user satisfaction. Several approaches, like segment auctions, are proposed, where ads are probabilistically allocated to specific text segments (paragraphs, sentences). Key considerations include designing truthful auctions that incentivize advertisers to bid truthfully, ensuring fairness in ad allocation, and developing mechanisms to measure and optimize ad relevance within the LLM context. The ultimate goal is to create a system where ads are perceived as helpful and integrated, enhancing both user experience and revenue for the LLM provider. This necessitates a careful study of various auction formats, pricing rules, and mechanisms to account for the inherent randomness of LLM outputs. A deeper investigation into the influence of ad placement on LLM output quality is also crucial.

RAG-based Ad Allocation#

Retrieval Augmented Generation (RAG) offers a novel approach to ad allocation within the context of large language models (LLMs). Instead of simply appending ads, RAG integrates them seamlessly into the LLM’s generated text by retrieving relevant ads based on the query and the ongoing discourse. This contextual placement enhances the user experience by avoiding disruptive interruptions. Auction mechanisms play a critical role, determining which ads are displayed based on bids and relevance scores. The design of these mechanisms is crucial, balancing revenue maximization with the need to maintain output quality and user satisfaction. Incentive compatibility is key, motivating advertisers to bid truthfully, while fair allocation practices prevent skewed outcomes. A key challenge lies in finding the optimal balance between maximizing advertiser revenue and ensuring high-quality LLM output that does not feel intrusive to the user. This involves carefully considering how ad placement affects the coherence and relevance of the generated text.

Log Social Welfare#

Logarithmic social welfare (LSW) offers a compelling alternative to traditional welfare metrics in scenarios involving fairness and efficiency trade-offs. Unlike linear social welfare, which can be overly sensitive to disproportionate gains for a few agents, LSW prioritizes a more balanced distribution of benefits. Its logarithmic nature dampens the impact of extreme values, rewarding allocations that promote broader participation and minimize inequality. This makes LSW particularly well-suited for applications like ad auctions in large language models (LLMs) where both user satisfaction and revenue generation are crucial concerns. Maximizing LSW encourages allocations that benefit a wider range of advertisers while ensuring that user experience remains satisfactory. The incentive compatibility of auction mechanisms designed to optimize LSW ensures truthful bidding behavior, which is key for maintaining the auction’s integrity and economic efficiency. While LSW introduces a novel perspective on welfare maximization, further investigation into its properties and applications are needed for comprehensive understanding and broad adoption.

Incentive Properties#

Incentive properties in mechanism design ensure that participants act in a way that benefits the overall system. In auction contexts, this means truthful bidding is a dominant strategy, so agents maximize their utility by reporting their true valuations. This is crucial for efficient resource allocation and revenue maximization. Individual rationality guarantees that agents are never worse off participating than not, preventing strategic abstention. Pareto efficiency ensures no reallocation could improve one agent’s utility without harming another, maximizing social welfare. However, achieving all three simultaneously is often challenging. A mechanism’s design should consider inherent trade-offs, especially in complex scenarios with informational asymmetries and strategic behavior. Incentive compatibility, a crucial property, is the focus of many studies; its fulfillment depends on the specific auction design and its assumptions regarding agent rationality and information access. Robustness of incentives to deviations from assumptions is vital for practical application.

Empirical Validation#

An ‘Empirical Validation’ section in a research paper would typically present results from experiments designed to test the paper’s core claims. A strong empirical validation would involve a well-defined methodology, including details on datasets, metrics, and experimental setup. It should clearly demonstrate how the experimental results support or refute the theoretical findings. Robustness checks, such as variations in experimental parameters or different datasets, are crucial to assess the generalizability of the results. Statistical significance testing should be properly applied to ensure the results are not due to chance. Furthermore, a thoughtful discussion of the results, including potential limitations and unexpected findings, should be provided. The presentation of the results should be clear, concise, and easy to understand, and it should include visualizations such as tables and charts to help readers grasp the findings. Ideally, the section would also compare the proposed method against existing state-of-the-art approaches to highlight its advantages. If there are any limitations on the scope or generalizability of the findings, those must be explicitly addressed. Overall, a compelling empirical validation is essential for establishing the credibility and practical impact of the research.

More visual insights#

More on figures

🔼 This figure illustrates the architecture of a segment auction for ad allocation in Large Language Models (LLMs) using Retrieval Augmented Generation (RAG). It shows how advertisers submit bids for their ads to be included in specific segments of the LLM’s output. A retriever component selects relevant ads based on their bids and relevance to the user query. An auction module then determines the winning ad(s) for each segment, considering both bids and relevance scores. The LLM then generates its output, incorporating the winning ads into the designated segments.

read the caption

Figure 1: Segment auction architecture for LLMs via RAG.

🔼 This figure illustrates the architecture of a segment auction for ad allocation in LLMs using Retrieval Augmented Generation (RAG). It shows how advertiser bids and ad relevance scores are used within the RAG framework to probabilistically select ads for inclusion in different segments (e.g., paragraphs, sentences) of the LLM’s output. The LLM then generates the final output, incorporating the selected ads. The process balances economic efficiency (ad revenue) and user experience (relevance and coherence of the generated content).

read the caption

Figure 1: Segment auction architecture for LLMs via RAG.

🔼 This figure illustrates the architecture of a segment auction for ad allocation within the textual outputs of Large Language Models (LLMs) using Retrieval Augmented Generation (RAG). It shows how advertisers submit bids for their ads, which are then probabilistically retrieved and incorporated into the LLM output based on their bids and relevance to the user query. The retriever in RAG helps determine the relevance scores of ads. The auction mechanism then allocates the ads to segments (paragraphs, sections, or entire outputs) based on these bids and relevance scores.

read the caption

Figure 1: Segment auction architecture for LLMs via RAG.

🔼 This figure illustrates the architecture of a segment auction mechanism designed for allocating ads within the output of large language models (LLMs) using retrieval-augmented generation (RAG). It shows how advertisers submit bids, the system retrieves relevant ads and their bids, and an auction determines the winning ad for each segment (e.g., paragraph, section). The winning ad’s information is then incorporated into the LLM’s output generation process.

read the caption

Figure 1: Segment auction architecture for LLMs via RAG.

More on tables

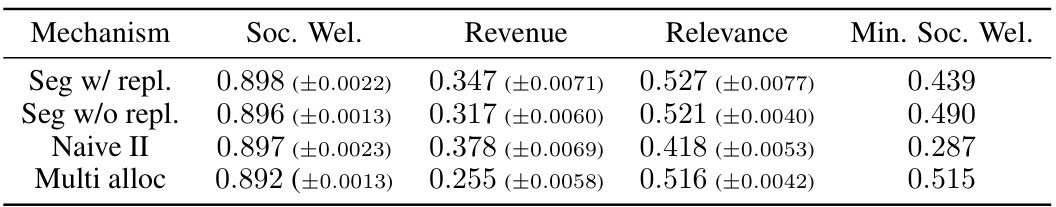

🔼 This table presents the results of an experiment comparing different auction mechanisms for integrating ads into the output of large language models (LLMs). The experiment measures the similarity between the original LLM output (without ads) and the modified output with ads inserted using different methods. The table shows the similarity scores for individual segments (sentences) and for the first k segments (multiple sentences) for different numbers of ads (k) and allocation strategies. Higher scores indicate greater similarity and therefore better output quality. The methods compared are: * Seg w/ repl.: Segment auction with replacement (same ads can be used multiple times). * Seg w/o repl.: Segment auction without replacement (each ad used only once). * Naive I: Ads appended to the end of the output without using the LLM to integrate them. * Naive II: A naive approach where ads are selected without considering relevance scores. * Multi-alloc: Multi-allocation segment auction (multiple ads assigned to the entire document).

read the caption

Table 2: The 2-4th columns represent the similarity of the individual segment to the original output, and the 5-7th columns represent the similarity of the first k segments to the original output.

🔼 The left part of the table shows the experimental setup, including the bids and relevance scores for four advertisers (Velora, Bookhaven, MassMart, EspressoEdge). The right part presents the results of four different auction mechanisms (Segment with replacement, Segment without replacement, Naive II, Multi-allocation) on the metrics of Social Welfare, Revenue, Relevance, and Minimum Social Welfare, all normalized.

read the caption

Table 1: Experiment setup (left), and the corresponding auction outcomes (right). Note that all metrics are normalized by dividing them by their maximum possible value.

🔼 This table presents the configuration of the experiment setup and the results of four different auction mechanisms: Segment Auction with Replacement, Segment Auction without Replacement, Naive II, and Multi-allocation Auction. The left side shows the bids and relevance scores for four advertisers: Velora, BookHaven, MassMart, and EspressoEdge. The right side shows the average results (with standard deviations) across 500 trials for each mechanism, measuring social welfare, revenue, relevance, and minimum social welfare. All metrics are normalized to a 0-1 scale by dividing by the maximum possible value, enabling easy comparison across mechanisms.

read the caption

Table 1: Experiment setup (left), and the corresponding auction outcomes (right). Note that all metrics are normalized by dividing them by their maximum possible value.

🔼 This table shows the bids submitted by each advertiser and their corresponding relevance scores for Scenario 3 of the experiment. Scenario 3 uses a larger number of advertisers (11) compared to the previous scenarios. The relevance score (qi) indicates how relevant each advertiser’s ad is to the user query, and it influences the probability of the ad being selected in the auction.

read the caption

Table 5: Bids and relevance of the advertisers for Scenario 3.

🔼 This table presents the setup of an experiment, showing the bids and relevance scores for four advertisers. It also shows the results of four different auction mechanisms applied to this setup: the segment auction with replacement, the segment auction without replacement, Naive II, and the multi-allocation auction. The outcomes measured are social welfare, revenue, relevance, and minimum social welfare. All values are normalized for easy comparison.

read the caption

Table 1: Experiment setup (left), and the corresponding auction outcomes (right). Note that all metrics are normalized by dividing them by their maximum possible value.

🔼 This table presents the results of an experiment comparing the output quality of different auction mechanisms for integrating ads into LLM outputs. The quality is measured by cosine similarity between embeddings of the original output (without ads) and the modified outputs generated by different methods. The table shows similarity scores for individual segments and the first k segments (where k is the number of ads allocated) for different auction mechanisms (segment auction with replacement, segment auction without replacement, and multi-allocation segment auction).

read the caption

Table 2: The 2-4th columns represent the similarity of the individual segment to the original output, and the 5-7th columns represent the similarity of the first k segments to the original output.

Full paper#