↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

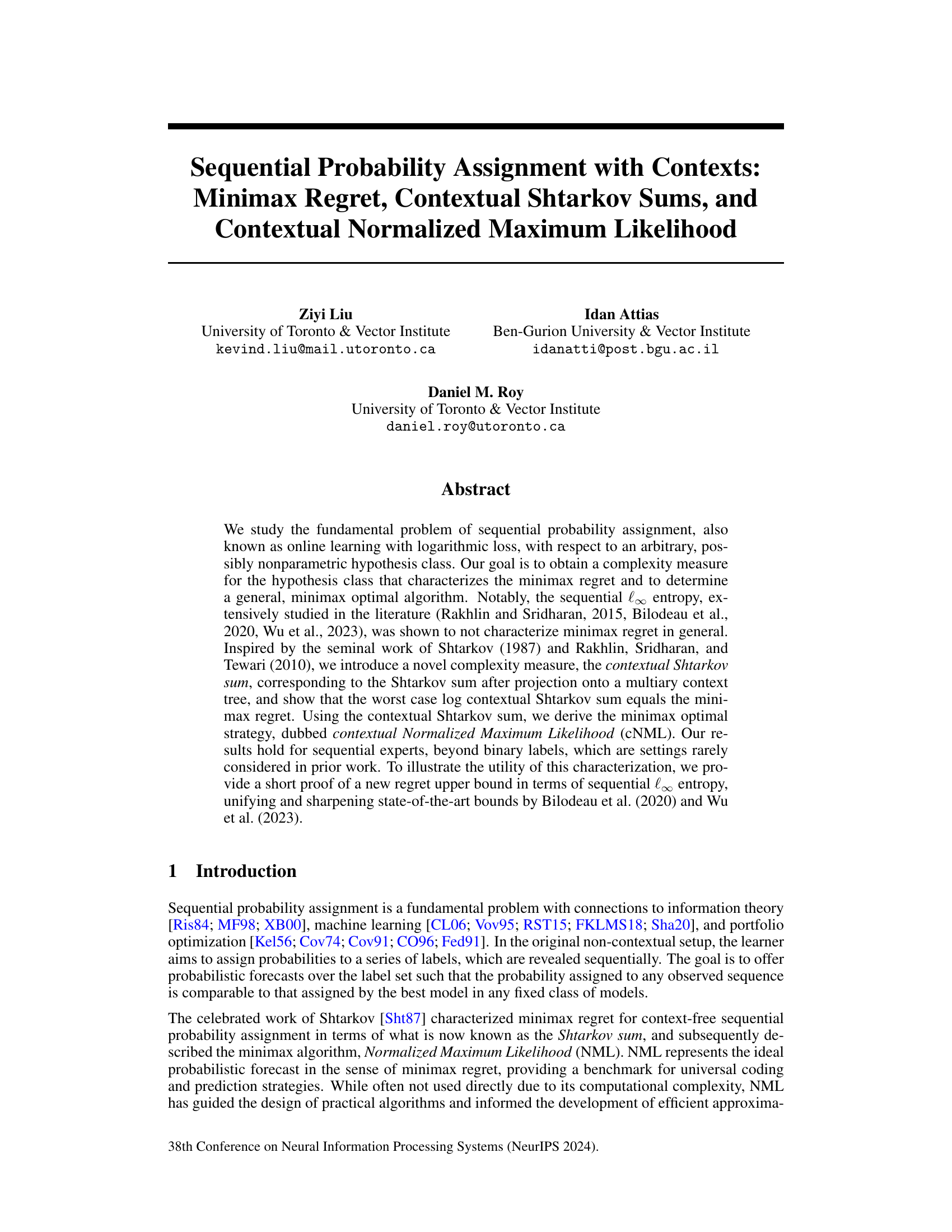

Sequential probability assignment, a fundamental problem in online learning and information theory, seeks algorithms minimizing regret against the best expert from a hypothesis class. Existing complexity measures like sequential entropy fail to fully characterize minimax regret, particularly with contexts (side information). The minimax optimal strategy remains elusive.

This research introduces contextual Shtarkov sums, a new complexity measure based on projecting Shtarkov sums onto context trees. It proves that the minimax regret equals the worst-case log contextual Shtarkov sum. Using this, it derives the minimax optimal algorithm, contextual NML, extending Normalized Maximum Likelihood to contextual settings. This work also offers a simplified proof for a tighter regret upper bound based on sequential entropy.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in online learning and information theory. It provides novel theoretical insights into sequential probability assignment, offers a new minimax optimal algorithm, and sharpens existing regret bounds. This work opens up exciting new avenues for research and has significant implications for developing more efficient and effective online learning algorithms.

Visual Insights#

Full paper#