
TL;DR

Many modern machine learning models are trained with Adam and an Exponential Moving Average (EMA) of the model weights, yet a comprehensive theoretical understanding of why these techniques work has remained elusive. Existing analyses often produce results that are inconsistent with practical observations and do not fully explain the techniques' success. This paper tackles that gap.

The paper uses the online-to-nonconvex conversion framework to analyze Adam with EMA. By focusing on the core elements of Adam (momentum and discounting factors) combined with EMA, the authors show that a clipped version of Adam with EMA achieves optimal convergence rates in various nonconvex settings, both smooth and nonsmooth. The analysis also shows the advantage of coordinate-wise adaptivity when scales vary across coordinates, which helps explain why Adam and EMA work well in practice.
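To make the ingredients named above concrete (momentum, a discounted second-moment estimate, clipping, and EMA of the iterates), here is a minimal NumPy sketch. It is not the paper's exact algorithm: the textbook Adam update with bias correction, the elementwise clipping rule, the hyperparameter values, and the name `clipped_adam_ema` are all assumptions chosen for illustration.

```python
import numpy as np


def clipped_adam_ema(grad_fn, x0, steps=500, lr=0.05, beta1=0.9, beta2=0.999,
                     clip=0.1, ema_decay=0.99, eps=1e-8):
    """Illustrative sketch only (hypothetical helper, not the paper's algorithm).

    grad_fn:   callable returning the gradient at x
    clip:      assumed elementwise bound on each coordinate's update
    ema_decay: assumed decay of the exponential moving average of iterates
    Returns the last iterate and the EMA of the iterates.
    """
    x = np.asarray(x0, dtype=float).copy()
    m = np.zeros_like(x)   # momentum: discounted sum of gradients
    v = np.zeros_like(x)   # discounted sum of squared gradients
    x_ema = x.copy()       # EMA of the iterates

    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)   # standard Adam bias correction
        v_hat = v / (1 - beta2 ** t)
        # Coordinate-wise adaptive step: each coordinate is normalized
        # by its own gradient scale.
        step = lr * m_hat / (np.sqrt(v_hat) + eps)
        # Clip each coordinate of the update (assumed clipping rule).
        step = np.clip(step, -clip, clip)
        x = x - step
        x_ema = ema_decay * x_ema + (1 - ema_decay) * x

    return x, x_ema


# Toy objective whose coordinates live on very different scales.
scales = np.array([100.0, 0.01])


def loss(x):
    return 0.5 * np.sum(scales * x ** 2)


def grad(x):
    return scales * x


x_last, x_avg = clipped_adam_ema(grad, np.ones(2))
```

In this toy problem the two coordinates have gradients that differ by four orders of magnitude, yet the normalized update moves both by nearly the same amount on the first step. That is a small-scale picture of the coordinate-wise adaptivity the summary refers to; the paper's guarantees concern its own clipped variant, not this sketch.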

Key Takeaways

Why does it matter?

This paper matters because it gives new theoretical insight into why Adam and EMA are effective in nonconvex optimization. It addresses a critical gap in the understanding of these widely used techniques by providing optimal convergence guarantees. This could lead to improved algorithm design and a better understanding of deep learning training dynamics, and may influence future research in optimization and machine learning.

Visual Insights

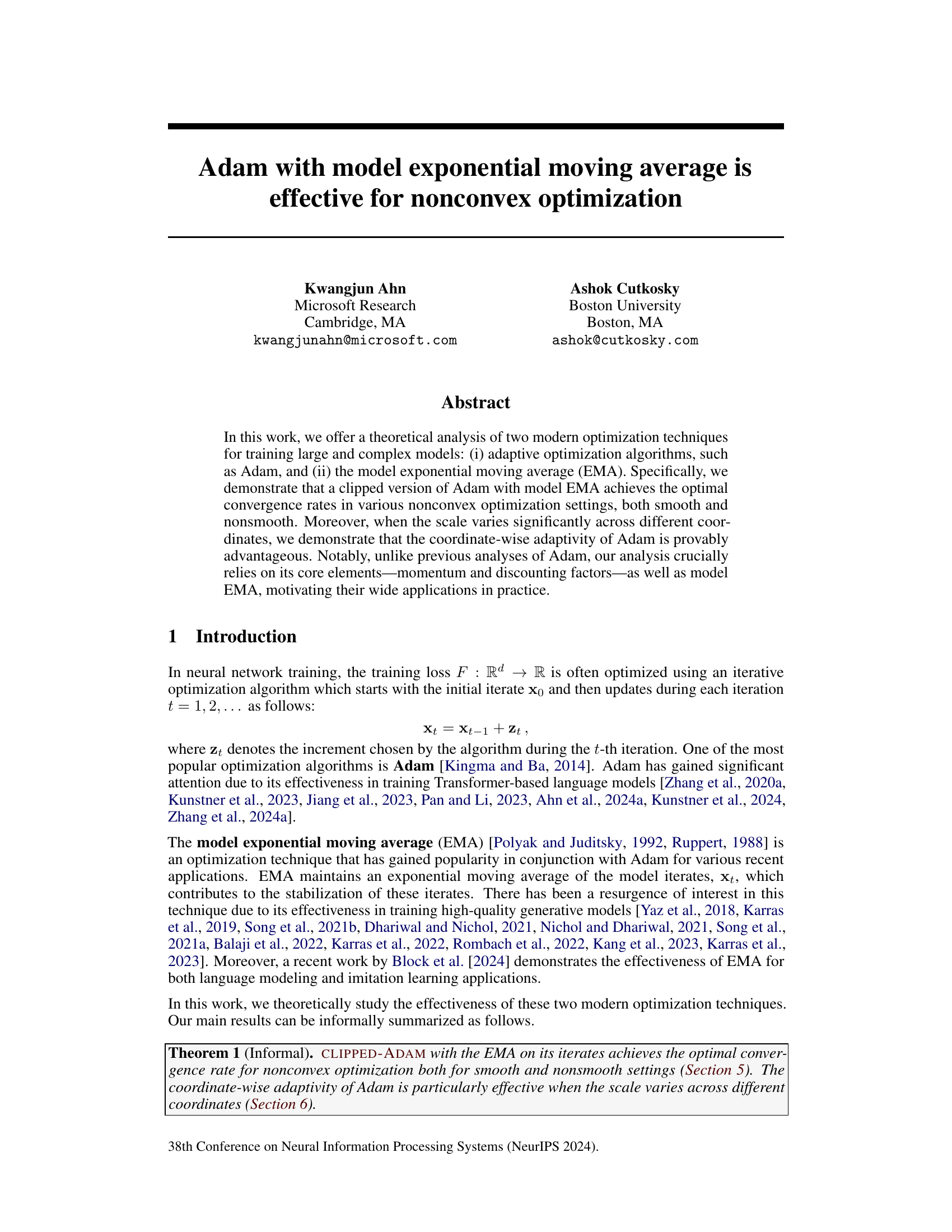

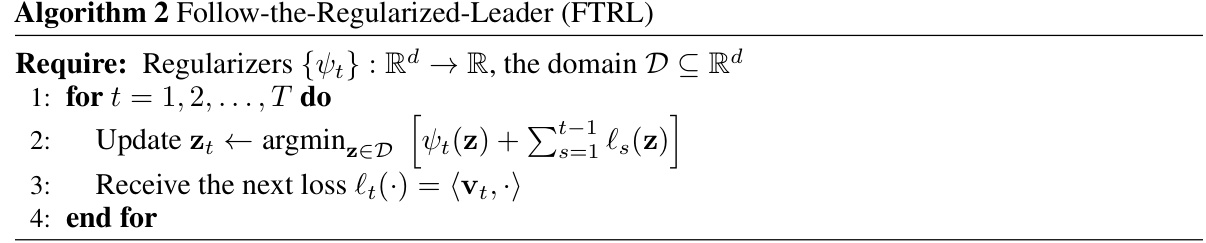

This table summarizes the convergence rates achieved by various optimization algorithms, including Adam, clipped Adam, and SGD, under different assumptions on the objective function (smooth, nonsmooth, and strongly convex). It lists the optimal convergence rate for each setting and indicates which algorithms attain it.

Full paper