↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Many real-world problems involve selecting the best subset from a large pool of items to maximize a certain objective, often under noisy feedback. This is particularly challenging when the objective function is complex but exhibits a desirable mathematical property called ‘submodularity’, indicating diminishing returns. Existing algorithms struggle with this problem in high-dimensional spaces with stochastic, noisy observations.

This paper tackles this challenge by presenting a novel algorithm that offers a much-improved guarantee in terms of regret, a metric representing the algorithm’s performance compared to the best possible outcome. It accomplishes this by carefully balancing exploration and exploitation of different subsets and achieving a theoretical optimal performance guarantee that matches a newly proven lower bound on regret, highlighting its efficiency and optimality.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in submodular optimization and bandit algorithms. It provides the first minimax optimal algorithm for maximizing submodular functions under bandit feedback, closing a long-standing gap in the literature. This opens avenues for research in more complex settings and related problems like combinatorial bandits with more sophisticated feedback models.

Visual Insights#

This figure compares the performance of three different algorithms for the weighted set cover problem: SUB-UCB (the authors’ proposed algorithm), UCB on all sets of size k, and ETCG (an existing explore-then-commit algorithm). The x-axis represents the time horizon T, and the y-axis represents the total regret. The plot shows that SUB-UCB consistently outperforms the other two algorithms across various time horizons, demonstrating its efficiency in minimizing regret for submodular maximization with bandit feedback.

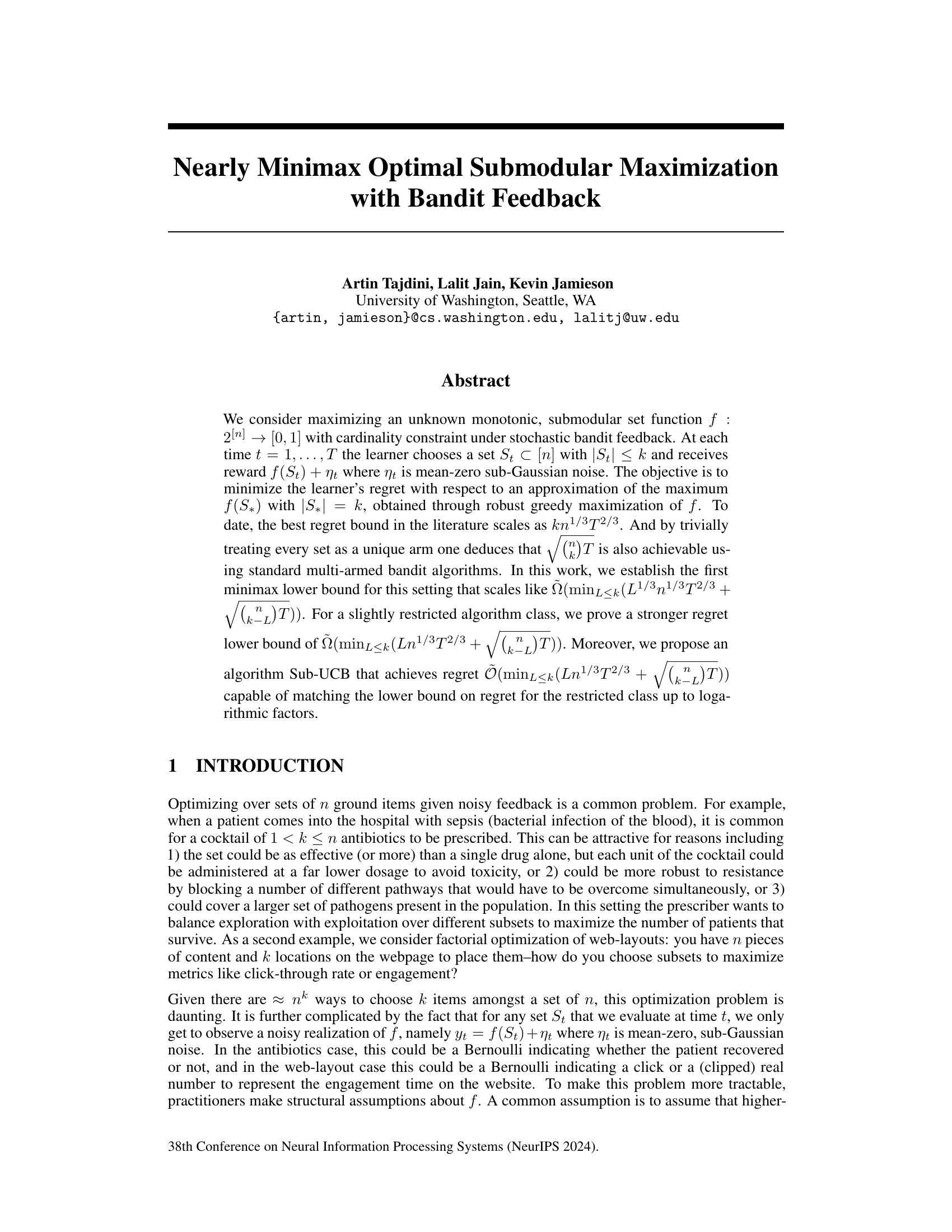

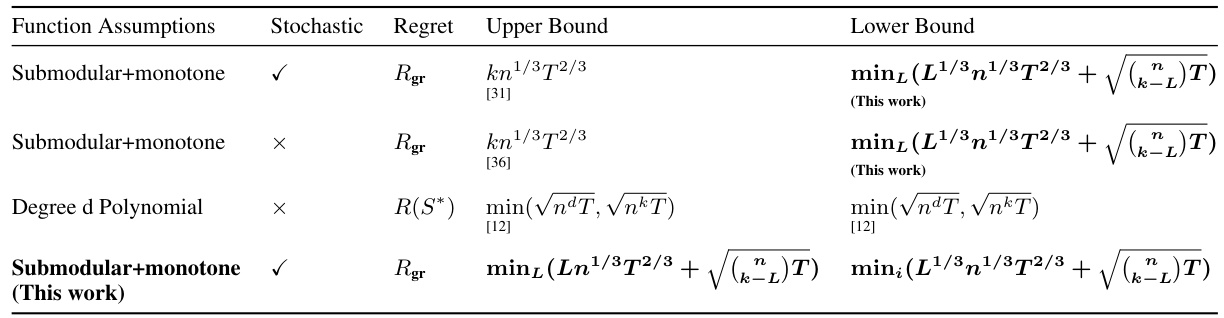

This table summarizes the state-of-the-art regret bounds for combinatorial multi-armed bandits, focusing on submodular maximization problems. It compares upper and lower bounds under various assumptions about the reward function (submodular and monotone, submodular without monotonicity, and degree-d polynomial), feedback type (stochastic and adversarial), and the measure of regret used (Rgr and R(S*)). The table highlights the contributions of the current work by presenting novel upper and lower bounds for submodular and monotone functions under stochastic feedback, which closes the gap between previous best known results.

Full paper#