↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Reinforcement learning (RL) has shown great empirical success but lacks theoretical understanding, especially in complex scenarios like infinite horizon average reward settings. Existing algorithms for this setting often rely on simplified assumptions like tabular structures or linear models, limiting their applicability to real-world problems. Kernel-based methods offer a powerful alternative with high representational capacity, but their theoretical analysis in this setting remains largely unexplored.

This paper introduces KUCB-RL, a novel optimistic algorithm using kernel-based function approximation. It leverages a novel confidence interval to construct an optimistic proxy of the value function. The authors prove that KUCB-RL achieves no-regret performance guarantees, a significant contribution to the field. The algorithm’s effectiveness extends beyond the typical assumptions of linear or tabular models, making it suitable for a wider range of applications. The theoretical results are backed by a rigorous mathematical analysis, enhancing the reliability and applicability of the approach.

Key Takeaways#

Why does it matter?#

This paper is crucial because it presents the first reinforcement learning algorithm with theoretical no-regret guarantees for the complex infinite horizon average reward setting, using non-linear function approximation. This significantly advances our understanding of RL in complex environments and paves the way for more efficient and theoretically sound algorithms in various real-world applications.

Visual Insights#

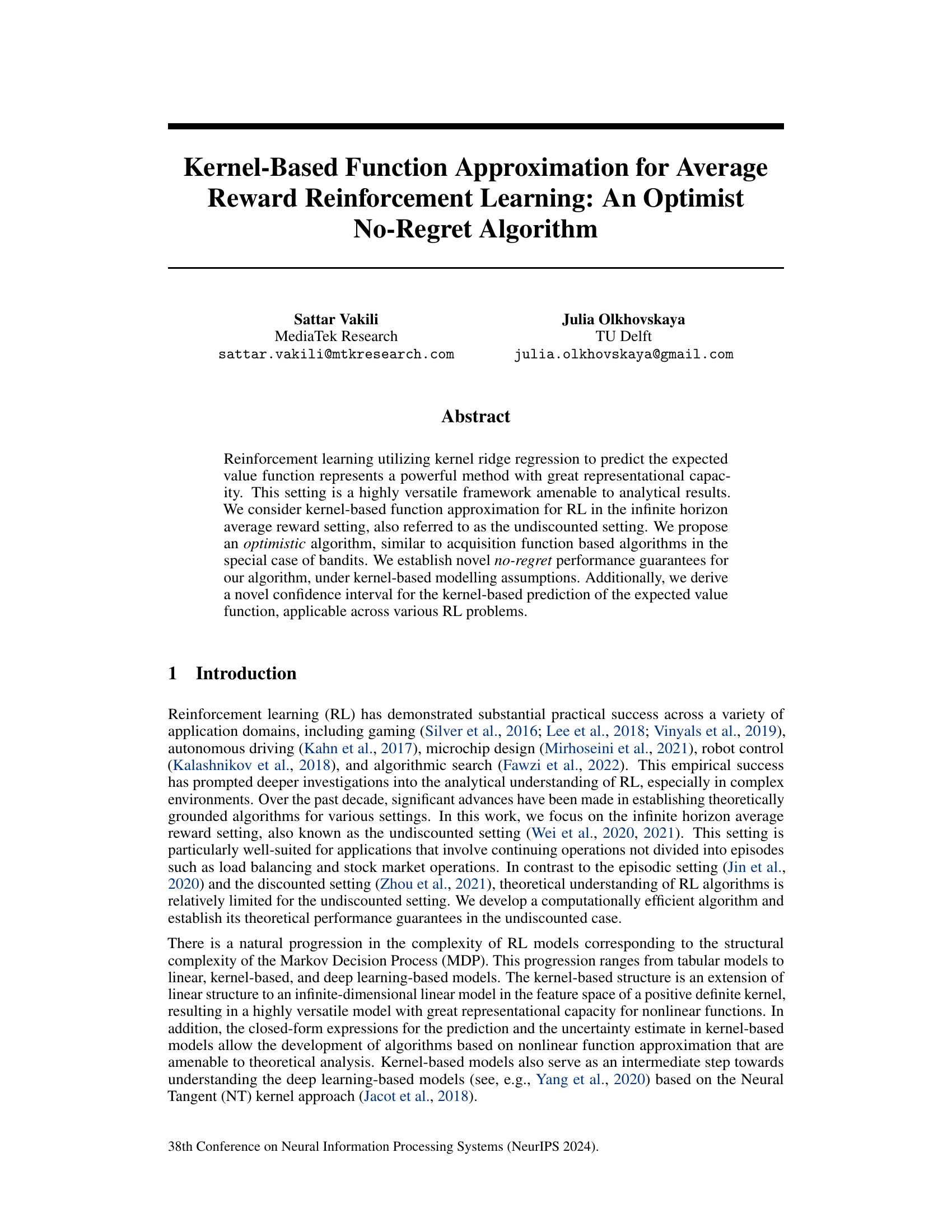

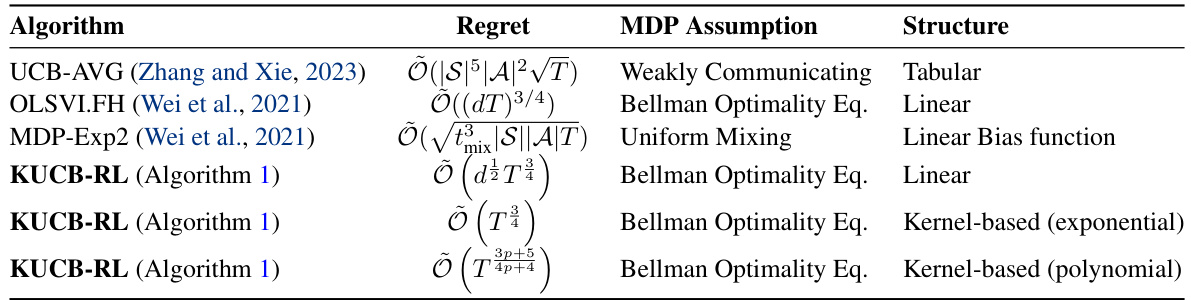

This table summarizes the regret bounds of existing algorithms for infinite horizon average reward reinforcement learning under various assumptions about the Markov Decision Process (MDP) and its structure. The algorithms are categorized by MDP structure (tabular, linear, kernel-based) and assumptions (weakly communicating MDP, Bellman optimality equation, uniform mixing). For each algorithm, the table shows its regret bound, MDP assumption, and structure.

Full paper#