
TL;DR

Transfer learning helps train models on limited target data by leveraging data from related source tasks. However, the resulting models can be vulnerable to adversarial attacks, in which small input perturbations cause large prediction errors. This vulnerability matters in high-stakes applications such as healthcare and finance. This research focuses on improving the robustness of multi-task representation learning (MTRL) methods against such attacks.

The researchers present theoretical guarantees that learning a shared representation using adversarial training across diverse source tasks helps protect the model against adversarial attacks on a target task. They introduce novel theoretical bounds on adversarial transfer risk for both Lipschitz and smooth loss functions. These bounds show that the diversity of source tasks plays a crucial role in improving robustness, especially in data-scarce scenarios. The findings provide important guidelines for designing more robust MTRL systems.
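To make the setup concrete, the two-stage procedure described above can be written out as a rough sketch. The notation here is assumed for illustration and is not taken from the paper: $h$ is the shared representation, $f_t$ the head for source task $t$, $T$ the number of source tasks with $n$ samples each, $m$ the number of target samples, and $\epsilon$ the attack budget in some norm $\|\cdot\|_p$. The paper's exact definitions and assumptions may differ.

```latex
% Assumed notation, for illustration only (not the paper's own symbols).

% Adversarial (worst-case) loss of a predictor f \circ h on one example (x, y):
\tilde{\ell}(f \circ h;\, x, y)
  = \max_{\|\delta\|_p \le \epsilon} \ell\big(f(h(x + \delta)),\, y\big)

% Stage 1: adversarial training of a shared representation on T source tasks:
(\hat{h}, \hat{f}_1, \dots, \hat{f}_T)
  \in \arg\min_{h,\, f_1, \dots, f_T}
  \frac{1}{Tn} \sum_{t=1}^{T} \sum_{i=1}^{n}
  \tilde{\ell}(f_t \circ h;\, x_{t,i}, y_{t,i})

% Stage 2: keep \hat{h} fixed and fit a target head on m target samples:
\hat{f}_0
  \in \arg\min_{f}
  \frac{1}{m} \sum_{j=1}^{m}
  \tilde{\ell}(f \circ \hat{h};\, x_{0,j}, y_{0,j})

% Population adversarial risk on the target task (index 0):
R_0^{\mathrm{adv}}(f \circ h)
  = \mathbb{E}_{(x, y) \sim \mu_0}\, \tilde{\ell}(f \circ h;\, x, y)

% Adversarial transfer risk: excess adversarial risk of the learned predictor
% relative to the best head on top of a reference representation h^*:
R_0^{\mathrm{adv}}(\hat{f}_0 \circ \hat{h})
  - \min_{f} R_0^{\mathrm{adv}}(f \circ h^{*})
```

Under this reading, a bound on the last quantity would typically depend on $n$, $T$, $m$, and a measure of how well the source tasks cover the target task, which is where task diversity enters.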

Key Takeaways

Why does it matter?

This paper addresses adversarial robustness in transfer learning, a significant challenge in real-world applications. Its theoretical framework offers insight and practical guidance for researchers building robust AI systems in data-scarce environments. The optimistic rates it obtains also offer a new perspective on multi-task learning, which is of broad interest.

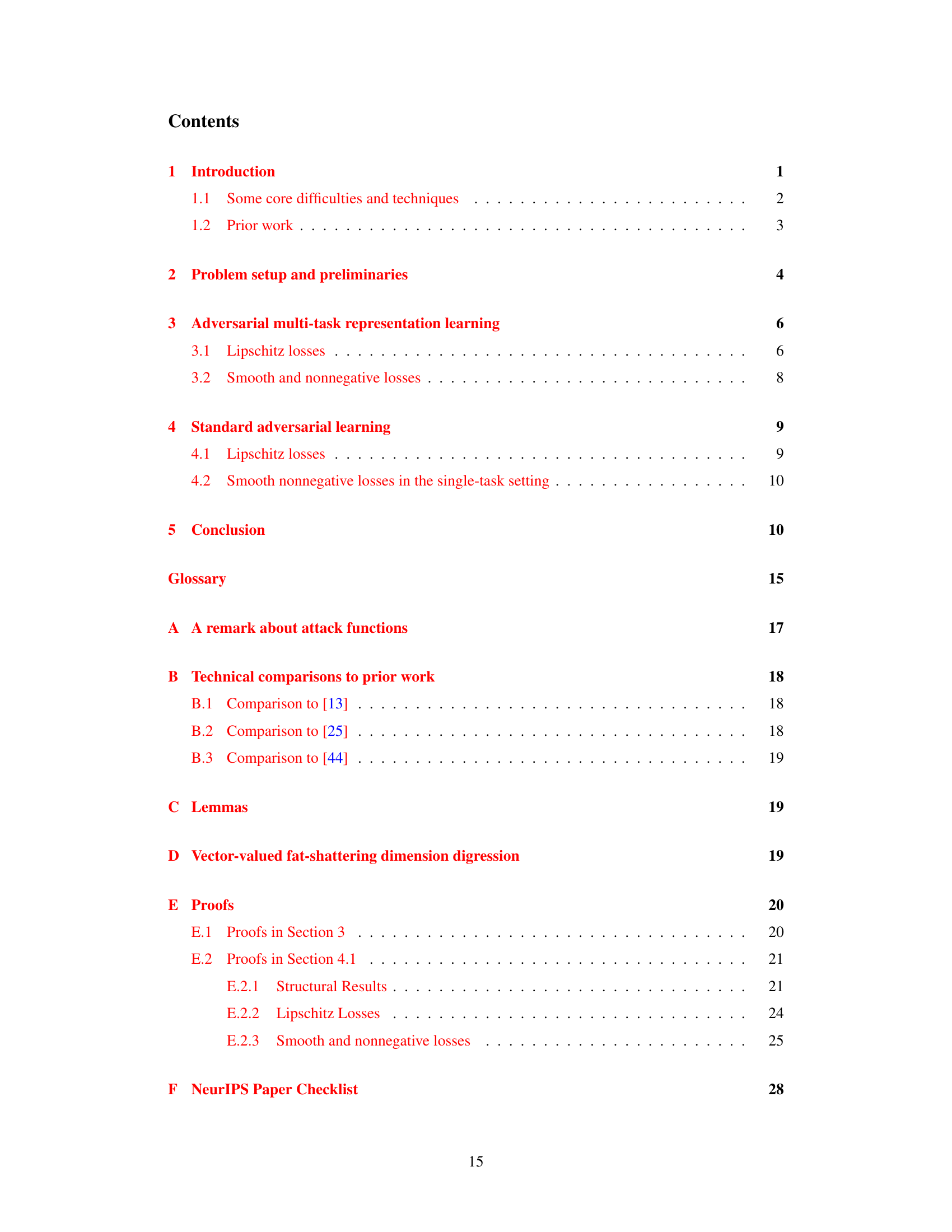

Visual Insights

Full paper