↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

This research delves into the training dynamics of transformers, focusing on how these models learn to recognize word co-occurrences, a critical ability for many natural language processing tasks. Existing research often uses simplifying assumptions, limiting their applicability to real-world scenarios. This work aims to provide a more comprehensive and accurate understanding of this process, devoid of such assumptions.

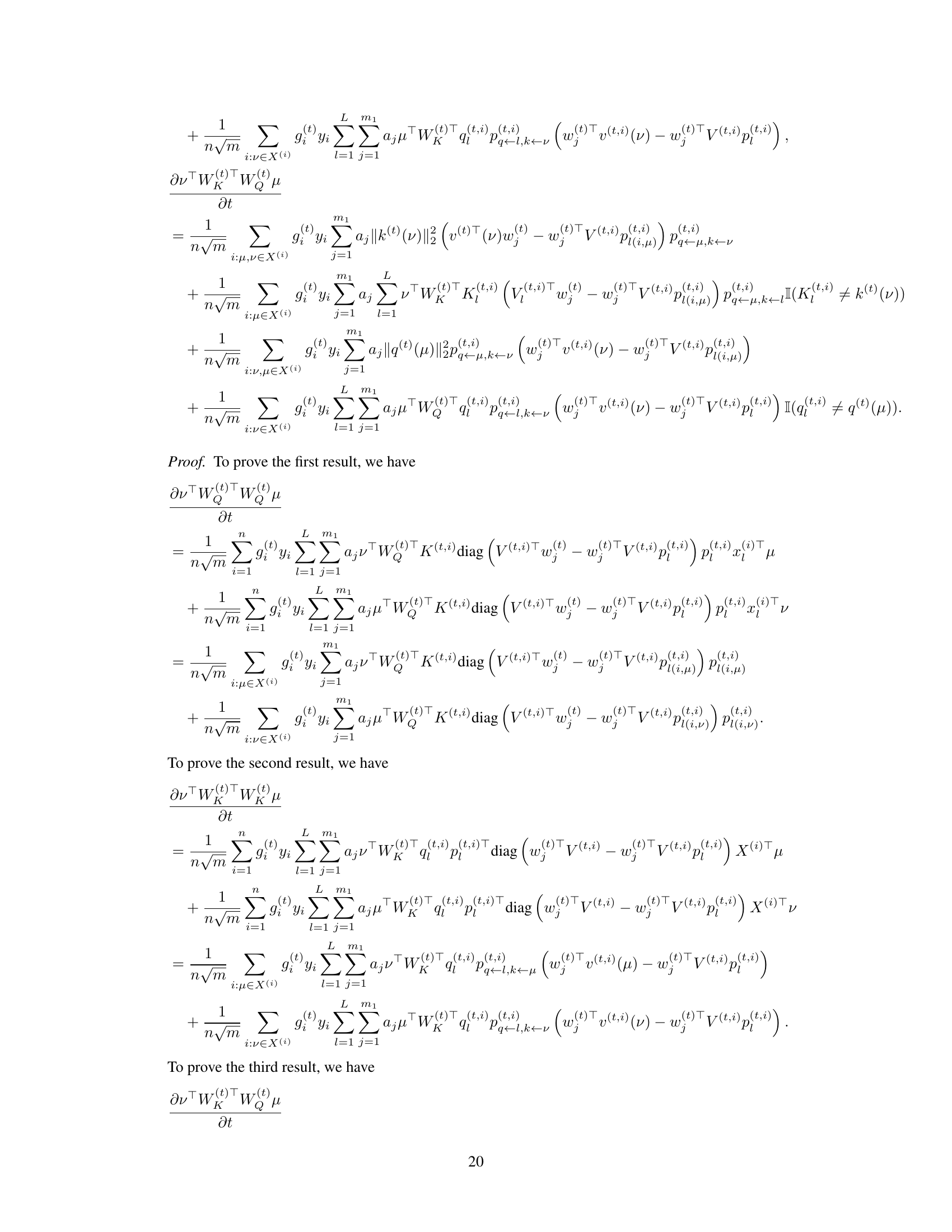

The researchers use gradient flow analysis of a simplified transformer model consisting of three attention matrices and a linear MLP layer. They demonstrate that the training process naturally divides into two phases. Phase 1 involves the MLP quickly aligning with target signals for accurate classification, while Phase 2 sees the attention matrices and MLP jointly refining to enhance classification margin and achieve near-minimum loss. The study also introduces a novel concept of ‘automatic balancing of gradients’, showcasing how different samples’ loss decreases at nearly the same rate, ultimately contributing to the proof of the near-minimum training loss. Experimental results support the theoretical findings.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in the field of transformer networks and large language models. It provides a novel theoretical framework for understanding training dynamics, moving beyond common simplifications. This opens doors for more robust and efficient training methods for transformers, enhancing their capabilities and addressing limitations of existing approaches. The work’s rigorous analysis and clear explanation of complex dynamics are particularly valuable.

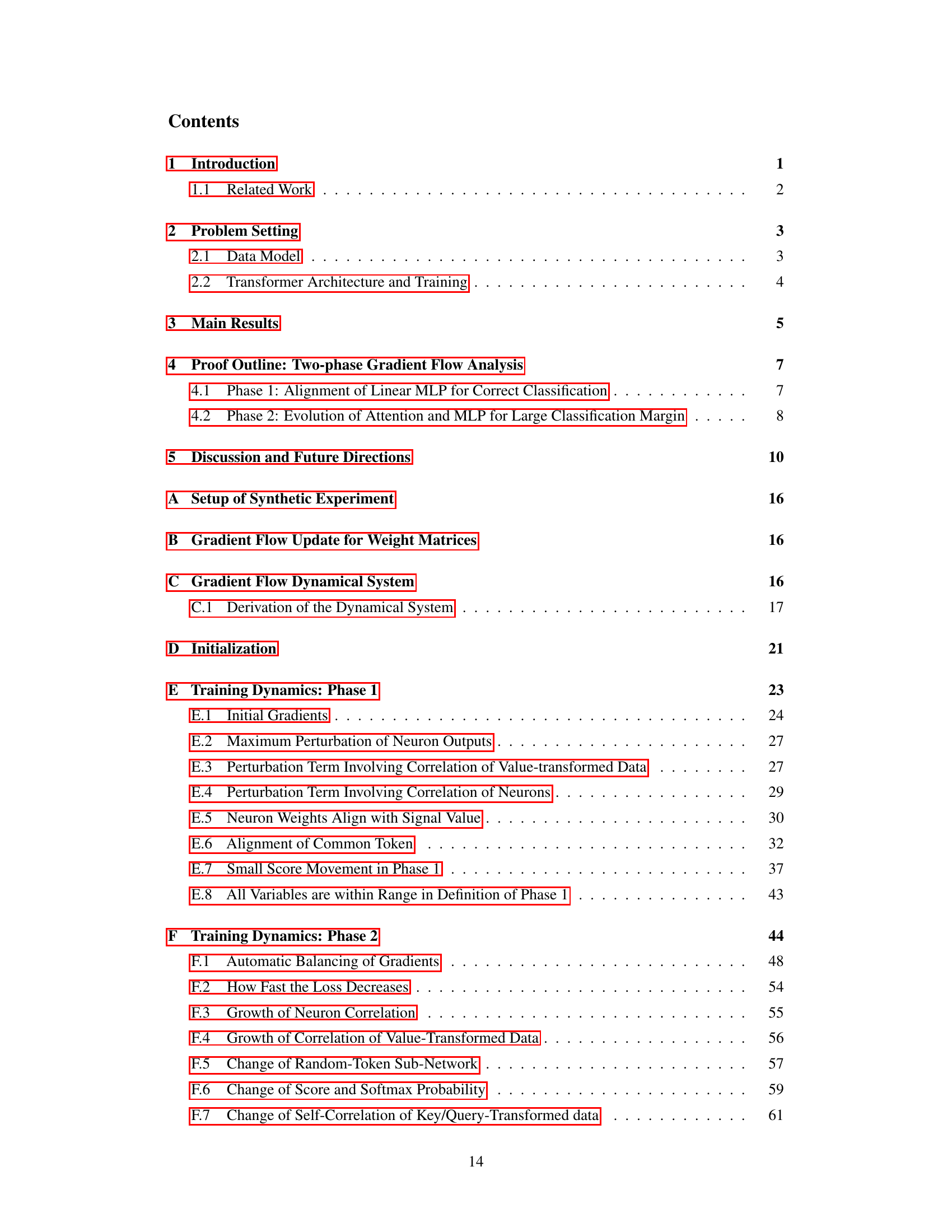

Visual Insights#

This figure visualizes the results of synthetic experiments designed to validate the paper’s theoretical findings on the two-phase training dynamics of transformers. Panel (a) shows the attention score correlation (inner product of query and key vectors) between different word embeddings (μ1, μ2, μ3) over the training process. The vertical red line separates the two phases. Phase 1 shows little change in attention scores, whereas Phase 2 shows a clear divergence, emphasizing the alignment of the linear MLP and the evolution of attention matrices to increase classification margin. Panel (b) illustrates the training loss over time, with different colored lines representing various data sample types (combinations of μ1, μ2, μ3). The training loss decreases rapidly in Phase 1 as the linear MLP classifies samples correctly, then continues dropping more gradually in Phase 2 as the model jointly refines attention matrices and the linear MLP to reduce the loss to near zero.

Full paper#