↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

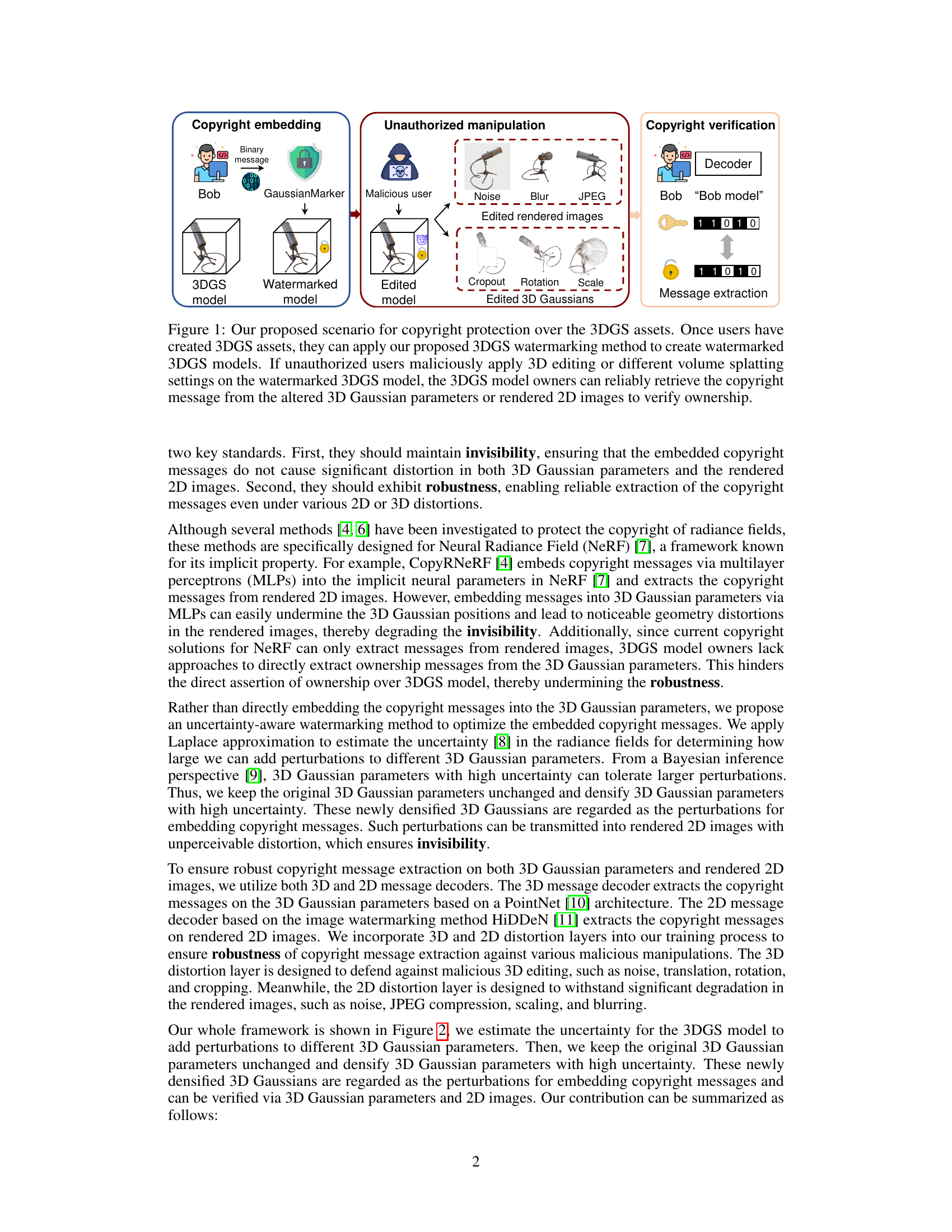

The rise of 3D Gaussian Splatting (3DGS) for creating 3D assets necessitates robust copyright protection. Existing watermarking methods for other 3D representations are unsuitable for 3DGS due to its unique structure. Naive watermarking on pre-trained 3DGS models causes noticeable distortions.

GaussianMarker introduces an uncertainty-aware method that cleverly addresses these issues. It leverages Laplace approximation to estimate uncertainty in radiance fields. Only 3D Gaussian parameters with high uncertainty receive perturbations for embedding copyright messages. This maintains invisibility and ensures robust extraction from both 3D Gaussians and 2D rendered images via specialized decoders, demonstrating state-of-the-art results on various datasets.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in 3D graphics and digital watermarking. It directly addresses the challenge of copyright protection for 3D Gaussian Splatting (3DGS) models, a rapidly growing area with significant commercial value. The proposed uncertainty-aware method provides a robust and effective technique that is highly relevant to current research trends in digital asset protection and deep learning. Further research can explore its applications in various other 3D model formats and improvements in robustness against more sophisticated attacks.

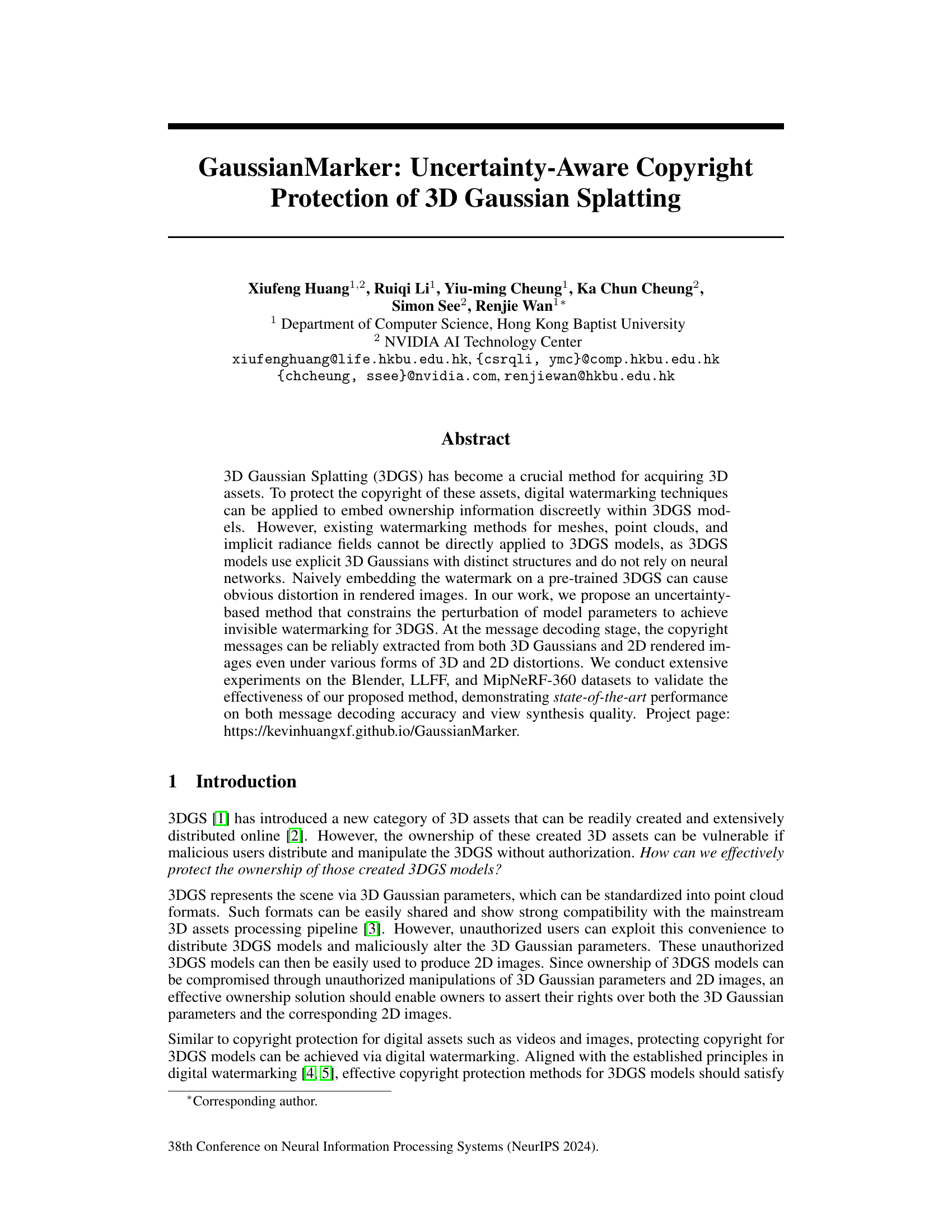

Visual Insights#

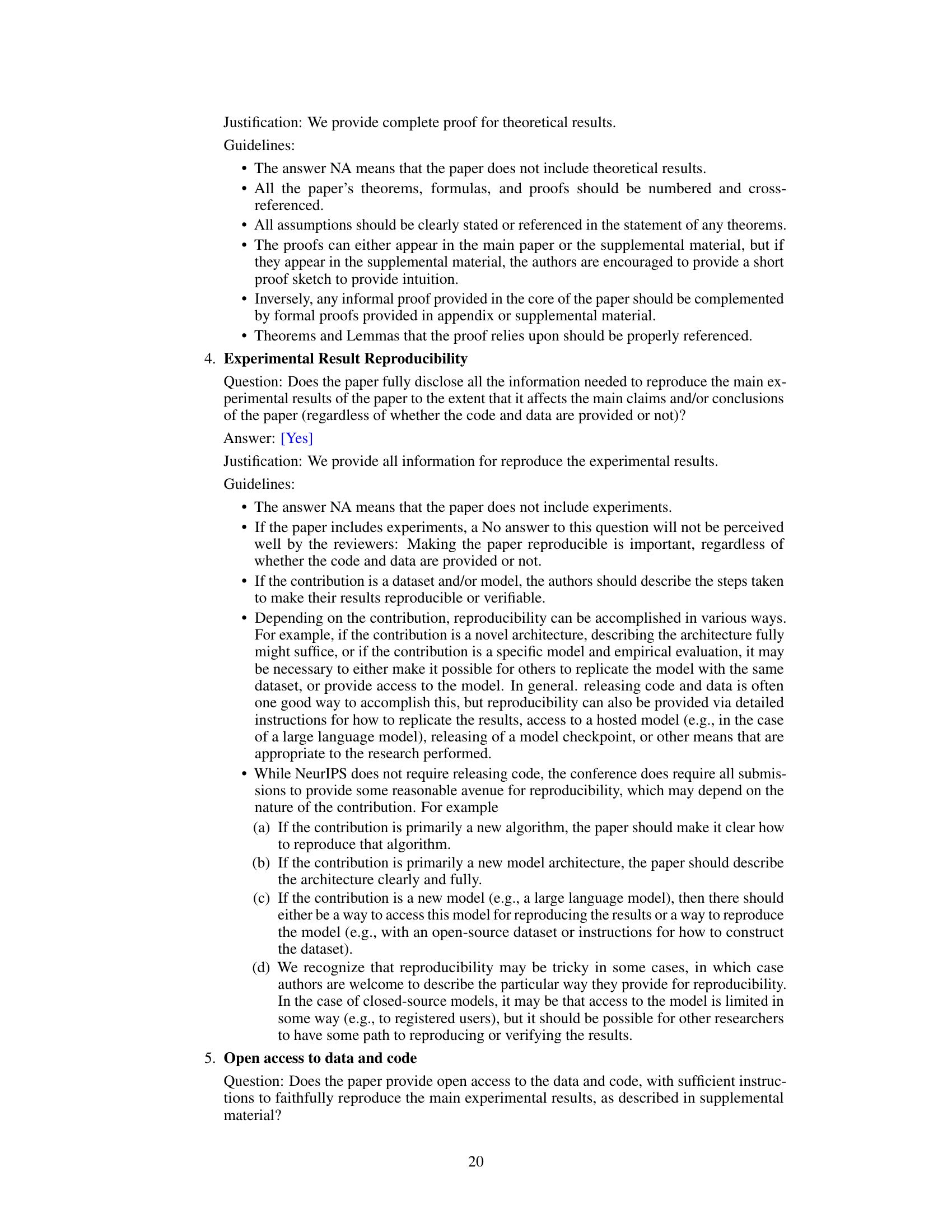

This figure illustrates the workflow of the proposed copyright protection system for 3D Gaussian Splatting (3DGS) models. It shows how a watermark is embedded into the 3DGS model, how unauthorized manipulation affects the model, and how the copyright owner can verify ownership by extracting the watermark from either the altered 3D Gaussian parameters or the rendered 2D images.

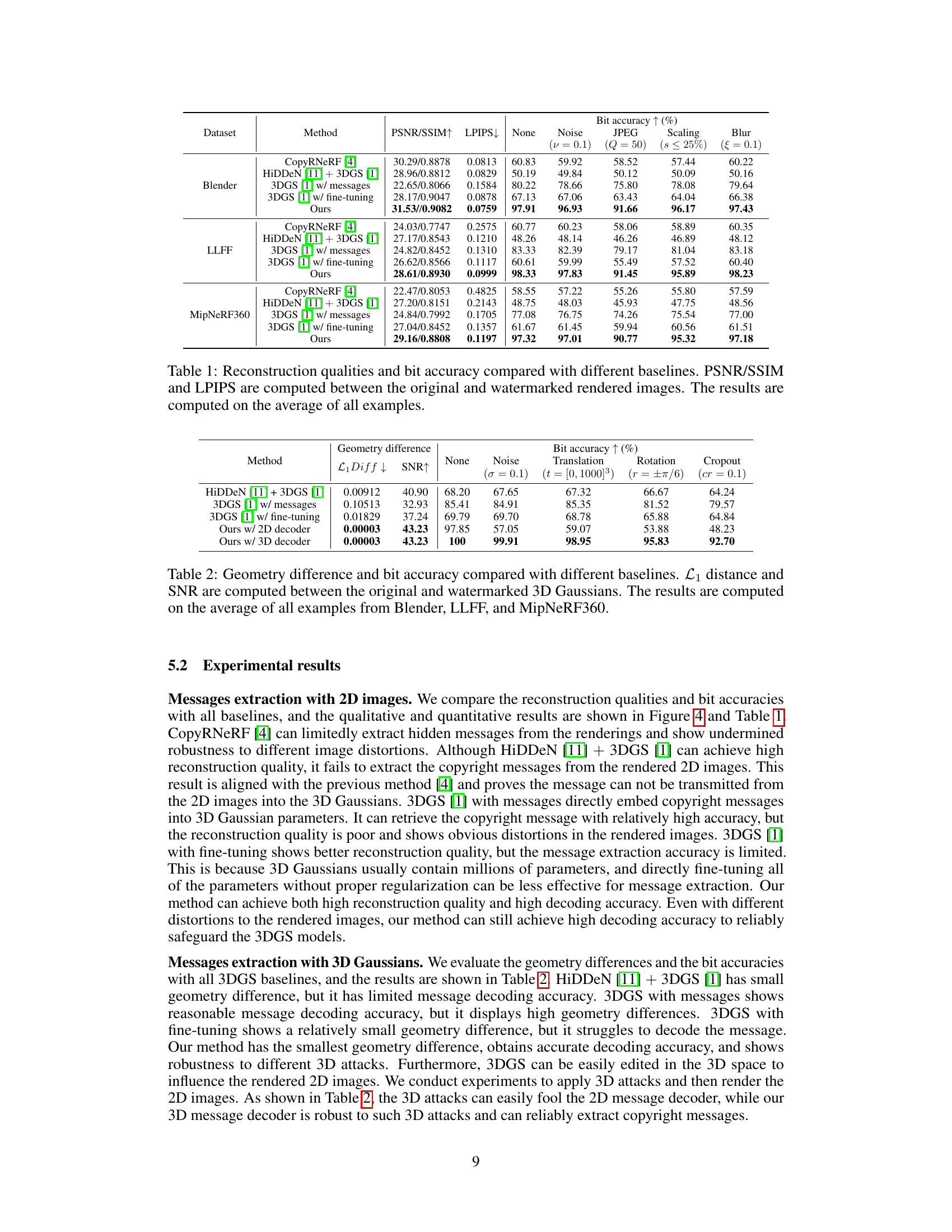

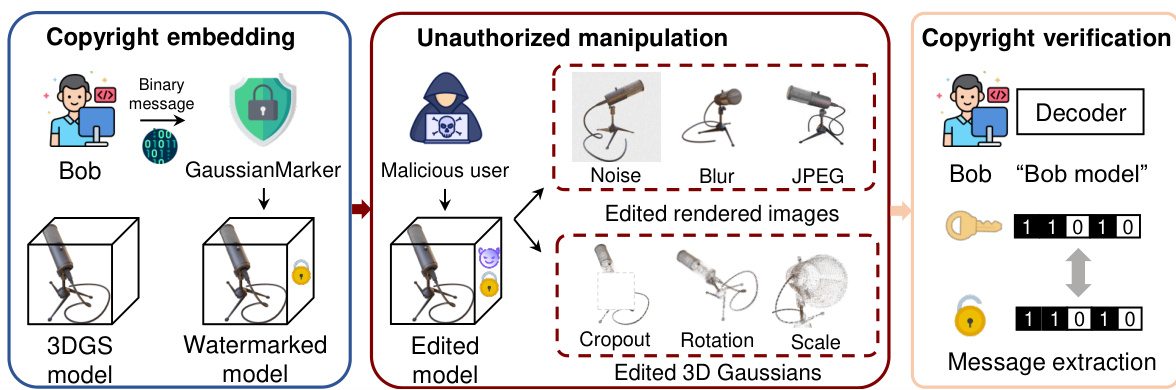

This table presents a comparison of the proposed method’s performance against various baselines in terms of reconstruction quality (PSNR, SSIM, LPIPS) and bit accuracy. The comparison is done across three datasets (Blender, LLFF, MipNeRF360) and various image distortions (noise, JPEG compression, scaling, and blur). Higher PSNR/SSIM and lower LPIPS scores indicate better reconstruction quality, while higher bit accuracy indicates better performance in preserving the embedded message.

In-depth insights#

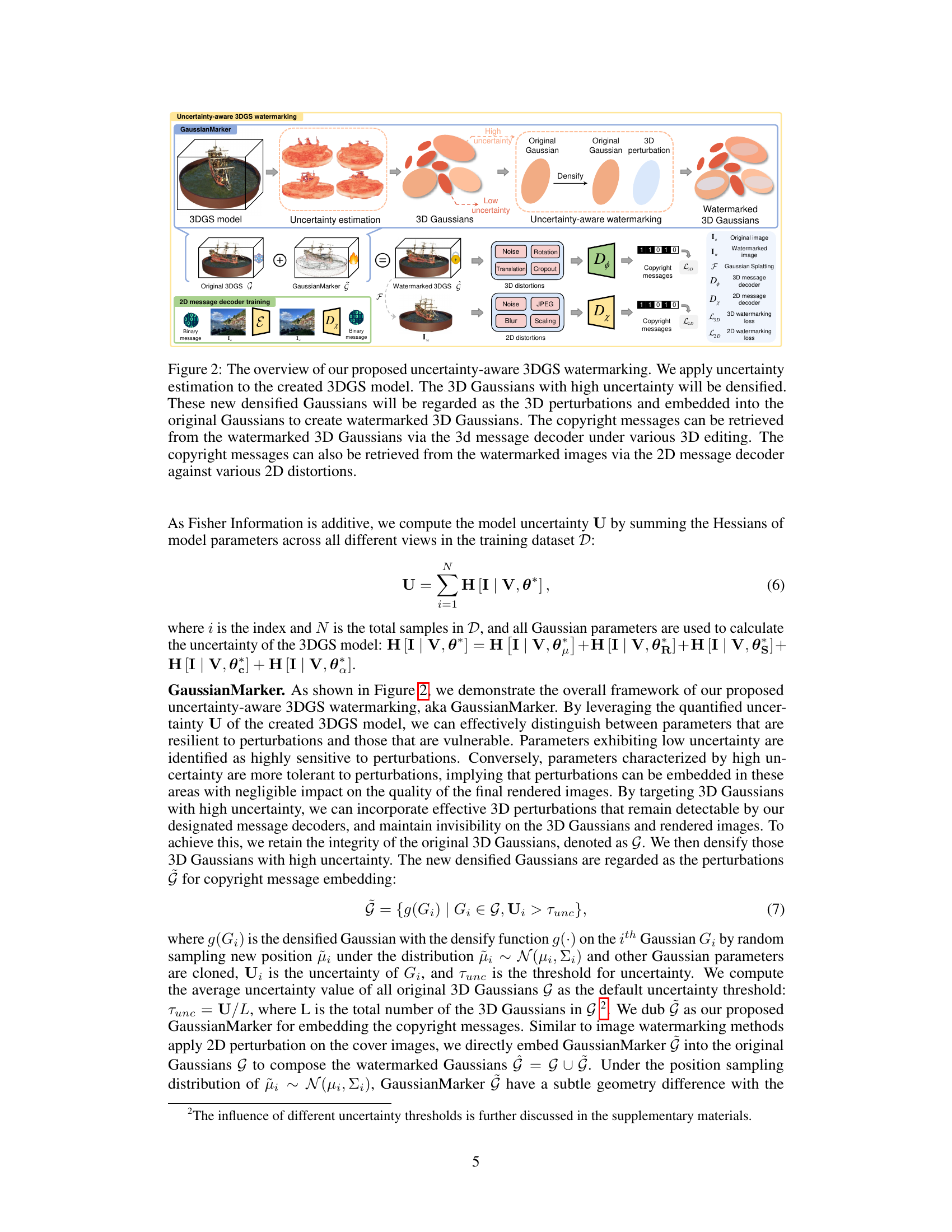

3DGS watermarking#

The core of this research lies in developing a novel and robust 3DGS watermarking technique. The approach cleverly leverages uncertainty estimation to embed copyright messages within the 3D Gaussian parameters of the model, focusing on areas with high uncertainty to minimize visible distortion. This method’s strength is its dual-decoding capability, extracting messages reliably from both the 3D Gaussian parameters and 2D rendered images, significantly enhancing robustness against various distortions and attacks. By using a Bayesian inference perspective, they identify parameters tolerant to larger perturbations, ensuring invisibility and preventing any perceivable alteration in the rendered scenes. The work introduces innovative decoders - one for 3D Gaussians (PointNet based) and another for 2D images (HiDDeN) - to handle different distortion types, further increasing robustness. Uncertainty-aware perturbation makes it highly resistant to manipulation while maintaining invisibility, leading to a truly effective solution for copyright protection of 3D Gaussian Splatting assets.

Uncertainty-aware#

The concept of ‘uncertainty-aware’ in the context of copyright protection for 3D Gaussian splatting models is crucial. It highlights the intelligent embedding of copyright information by leveraging the inherent uncertainty present within the model’s parameters. Instead of imposing potentially disruptive changes across all parameters, this approach strategically focuses on areas where modifications would be less noticeable. High-uncertainty parameters are identified, enabling the integration of watermarking signals without causing significant visual distortions in rendered images. This approach balances the need for robustness against various attacks and manipulations while ensuring invisibility of the watermark. It’s a significant advancement over previous methods that blindly perturb parameters, potentially leading to perceivable artifacts. This methodology represents a sophisticated balance between robust copyright protection and maintaining the quality of the 3D model and its associated renderings.

Robustness analysis#

A robust watermarking scheme needs to withstand various attacks. A thorough robustness analysis would evaluate performance under different distortions, including common image manipulations (e.g., JPEG compression, scaling, blurring, noise addition) and geometric transformations (rotation, cropping, translation) for 2D robustness. For 3D robustness, attacks might include noise injection to 3D Gaussian parameters, alterations to Gaussian positions and orientations, or even mesh-based attacks if the 3D representation allows for mesh extraction. The analysis should quantify the impact of each attack on watermark extraction accuracy, ideally providing metrics such as bit error rate and demonstrating resilience to adversarial attacks. Quantitative results, including precision and recall, should be presented across a wide range of attack strengths to fully characterize robustness. Visual examples of attacked watermarked images and 3D models alongside their successfully extracted watermarks further strengthen the analysis. The robustness analysis is critical to ascertain the practical applicability and reliability of the copyright protection method.

Message decoding#

The effectiveness of any watermarking scheme hinges on robust message decoding. In this research, the authors cleverly address this by employing a dual-decoder approach: one for 3D Gaussian parameters and another for 2D rendered images. This redundancy is crucial because it provides multiple avenues for recovering the embedded copyright message, even when one method may be compromised by distortions or manipulations. The 3D decoder leverages a PointNet architecture to extract the message directly from the 3D Gaussian parameters, while the 2D decoder utilizes a pre-trained HiDDeN model, trained to handle various image distortions. The use of a pre-trained HiDDeN model shows the authors’ understanding of existing robust techniques in the 2D image watermarking space and indicates a strategic choice, rather than reinventing the wheel. However, future work could explore developing a more unified or synergistic decoder architecture that leverages information from both domains concurrently for even more robust decoding, possibly using a joint optimization strategy. Furthermore, analysis of the decoders’ performance under various attack types and intensity levels would enhance the comprehensiveness of the evaluation, allowing for a deeper understanding of the system’s resilience and limitations.

Future directions#

Future research could explore enhancing the robustness of GaussianMarker in dynamic 3DGS scenarios. Motion transfer-based data augmentation could be particularly valuable here, aiming to maintain high bit accuracies while improving robustness. Investigating alternative methods for embedding copyright messages, potentially exploring techniques less reliant on uncertainty estimations, would be valuable. Exploring different deep learning architectures for the 3D and 2D message decoders, beyond PointNet and HiDDeN, might lead to improved performance. A thorough investigation into the computational efficiency of the watermarking process is needed. Optimizing for real-time performance is crucial for practical applications. Finally, further research should explore the potential vulnerabilities of this approach to sophisticated attacks and develop countermeasures, enhancing the overall security and robustness of the copyright protection method.

More visual insights#

More on figures

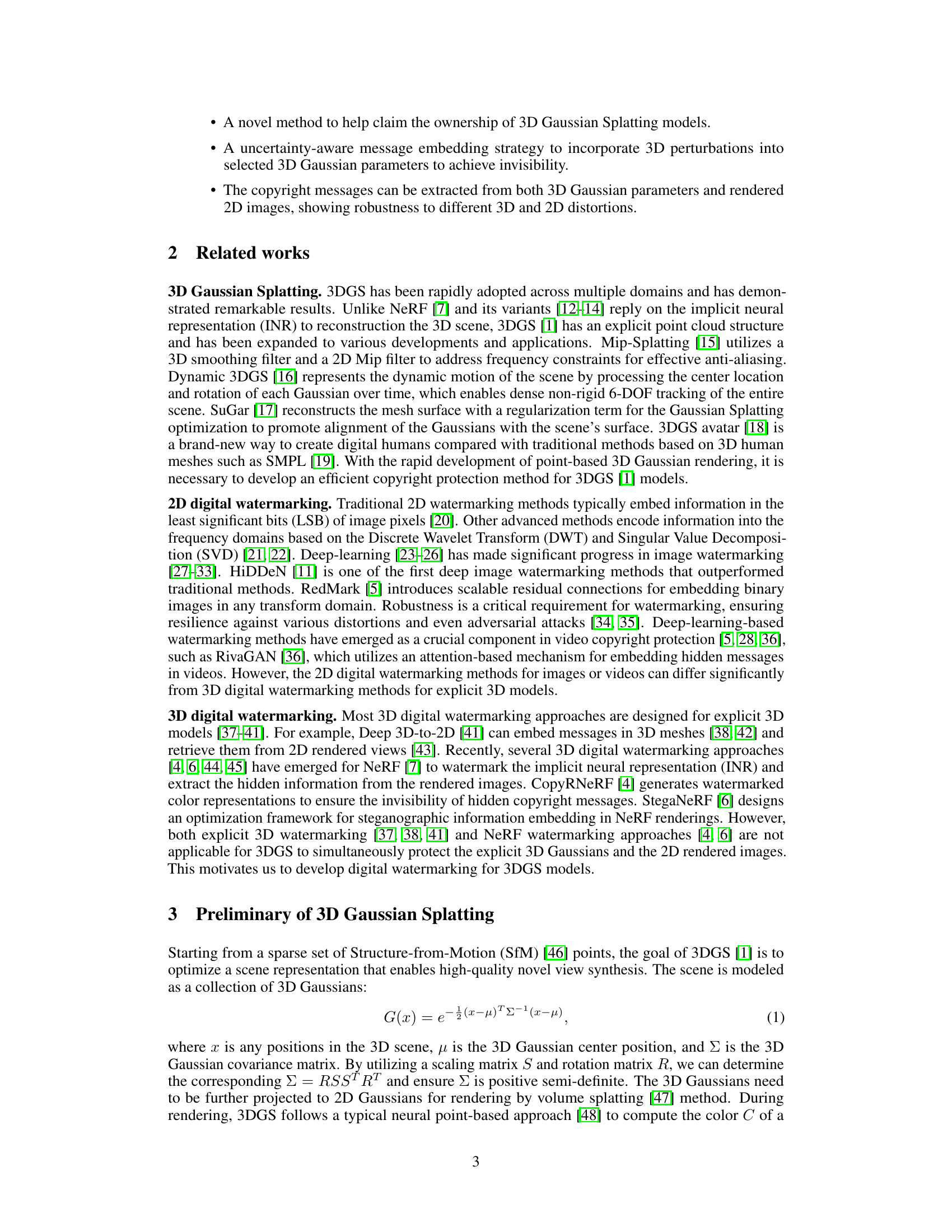

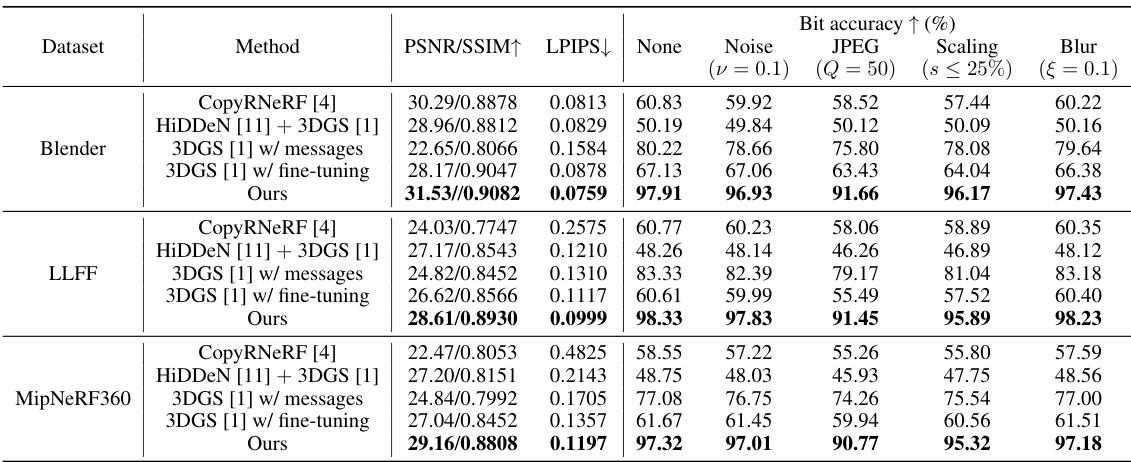

This figure provides a complete overview of the proposed uncertainty-aware 3DGS watermarking method. It illustrates the process, starting from uncertainty estimation of the 3D Gaussians in a 3DGS model, to the densification of high-uncertainty Gaussians, their embedding as perturbations into the original model to create watermarked 3D Gaussians, and finally, the extraction of copyright messages from both the watermarked 3D Gaussians and the resulting 2D images using 3D and 2D message decoders respectively, even under various 3D and 2D distortions.

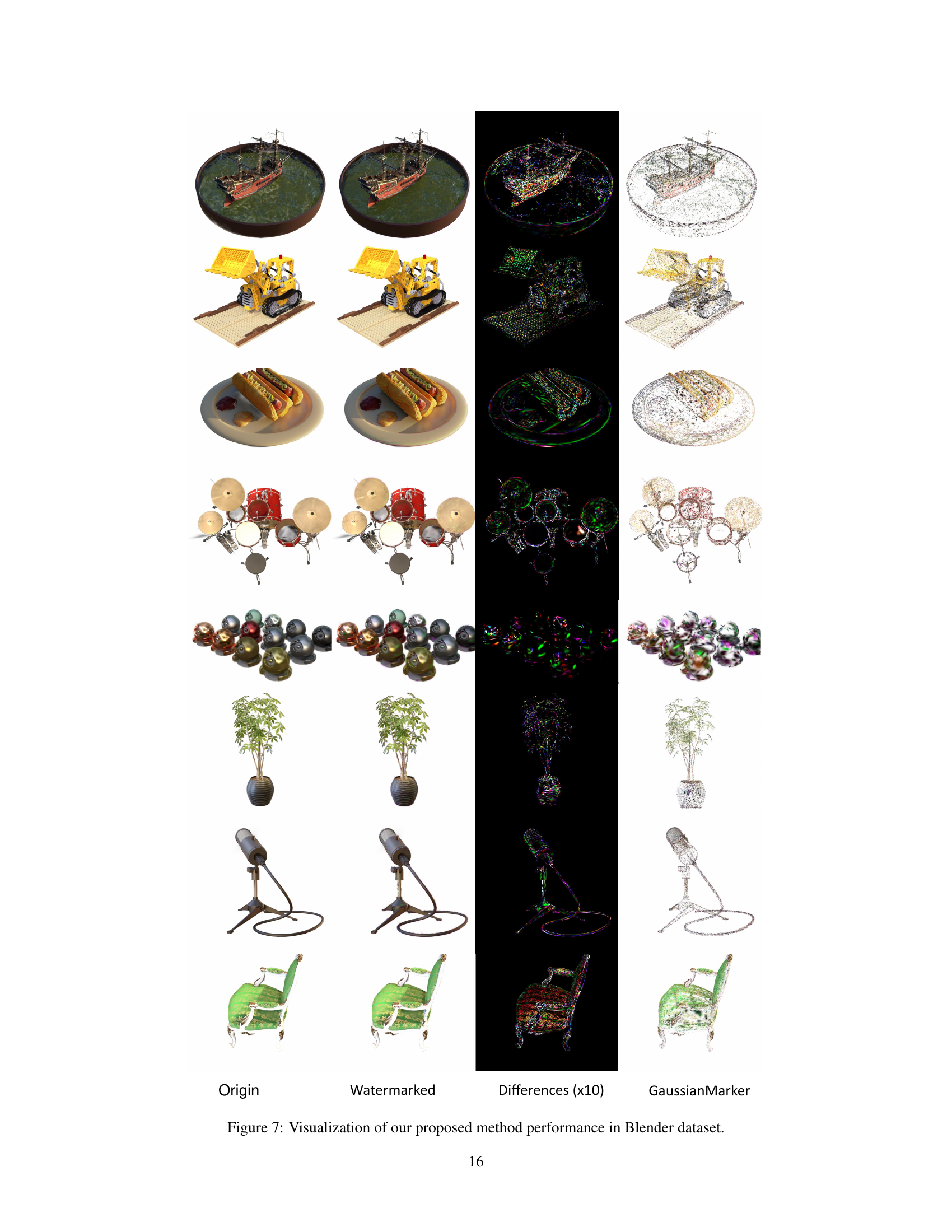

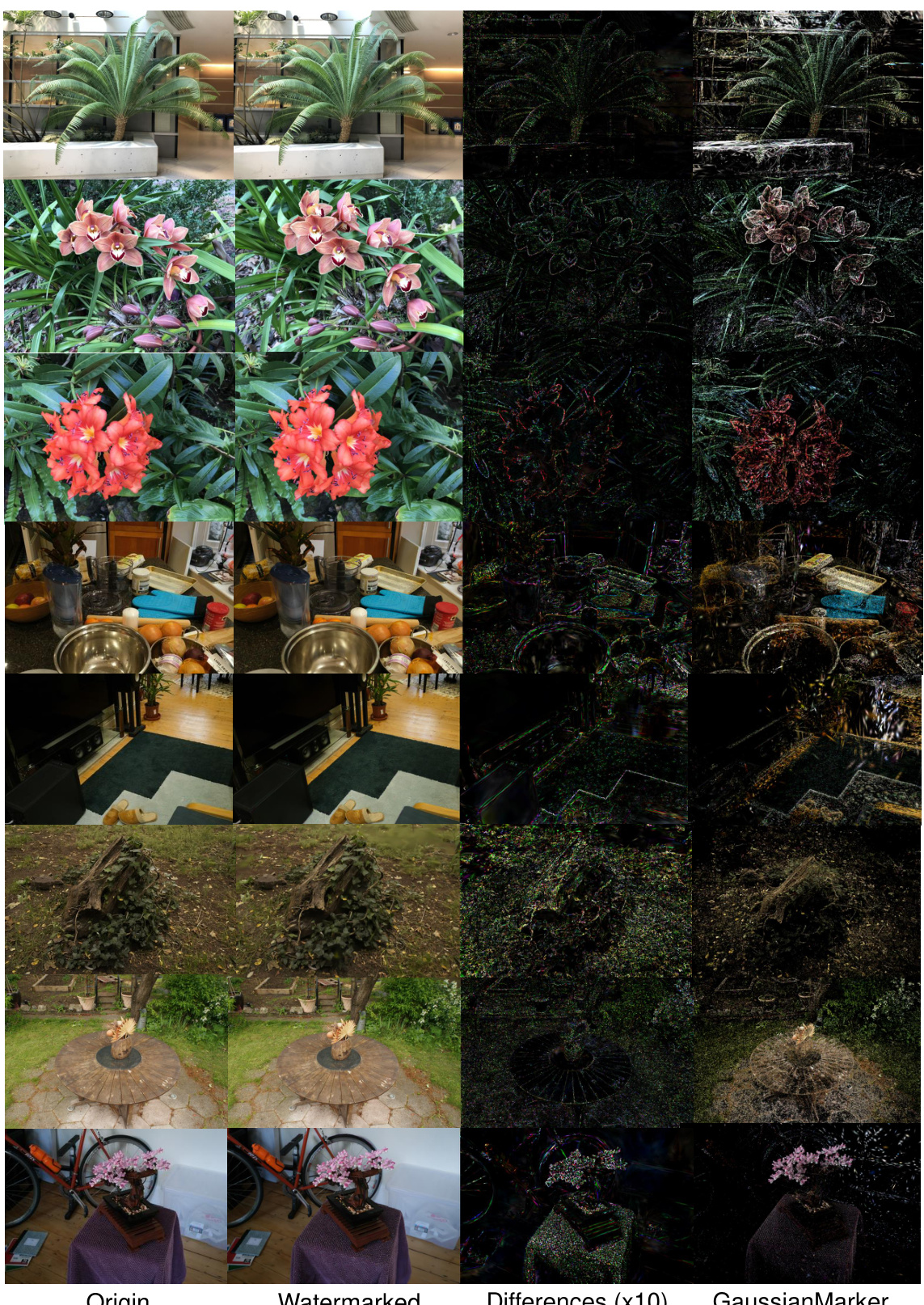

This figure visualizes the results of the proposed uncertainty-aware 3DGS watermarking method. Each row shows a comparison between the original 3DGS rendering, the watermarked rendering (with the embedded copyright message), the difference between the two magnified by a factor of 10 to highlight the subtle changes, and the GaussianMarker (the 3D perturbations used to embed the message). The differences are barely visible, demonstrating the invisibility of the watermarking process. The GaussianMarkers are scaled up for better visualization.

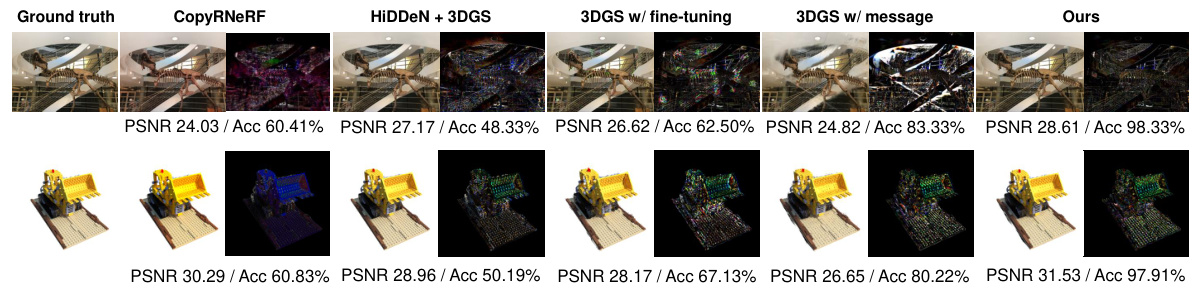

This figure compares the performance of the proposed GaussianMarker method with four baseline methods for copyright protection of 3D Gaussian Splatting models. The comparison is based on reconstruction quality (PSNR and SSIM) and bit accuracy. The figure shows that the GaussianMarker method achieves the highest PSNR and SSIM values, indicating superior reconstruction quality, along with the highest bit accuracy, demonstrating its effectiveness in preserving copyright information.

This figure illustrates the workflow of the proposed uncertainty-aware 3D Gaussian splatting watermarking method. It starts by estimating the uncertainty of the 3D Gaussian parameters in the input 3DGS model. Gaussians with high uncertainty are selected and densified, creating perturbations that serve as the watermark. These perturbations, along with the original model, form the watermarked model. The copyright message is then embedded in this watermarked model. Finally, both 2D rendered images and the 3D Gaussian parameters can be used to extract the copyright message, demonstrating robustness against various distortions and edits.

This figure shows a comparison of applying perturbation to high-uncertainty versus low-uncertainty 3D Gaussians in a 3D model. The original model is shown in (a). (b) demonstrates adding perturbations to high-uncertainty Gaussians, showing minimal visual distortion. Conversely, (c) shows the addition of perturbations to low-uncertainty Gaussians, resulting in noticeable artifacts and distortions, emphasizing the importance of targeting high-uncertainty Gaussians for invisible watermarking.

This figure shows a comparison of original and watermarked images from the LLFF and MipNeRF360 datasets. Each row presents an original image, its watermarked counterpart, the difference between the two (magnified 10x to highlight subtle changes), and a visualization of the added GaussianMarker perturbations used for watermark embedding. The GaussianMarkers are scaled for better visibility, showing their distribution and placement in the 3D scene.

This figure illustrates the proposed uncertainty-aware 3DGS watermarking method. It shows how uncertainty is estimated for 3D Gaussian parameters in a 3DGS model. High uncertainty Gaussians are identified and ‘densified’ (perturbed) to embed copyright messages. These perturbations are imperceptible in both 3D and rendered 2D images, allowing for robust message extraction even with distortions. A 3D message decoder and a 2D message decoder are used to extract the messages from the watermarked 3D Gaussians and 2D images respectively.

This figure shows a comparison between the original and watermarked 3D Gaussian splatting (3DGS) models. The leftmost image shows the original 3DGS model, the middle image shows the watermarked 3DGS model, and the third image shows the difference between the two. Finally, the rightmost image displays the added GaussianMarker perturbations that embed the copyright information. The visual differences between the original and watermarked images are minimal, demonstrating the invisibility of the watermark.

This figure visualizes the consistency of the proposed GaussianMarker across different viewpoints. Four images are shown representing the front, left, back, and right views of the scene. These views were not used during training. The consistent appearance of the GaussianMarker across all four viewpoints demonstrates the robustness of the method to changes in perspective and viewing angle.

More on tables

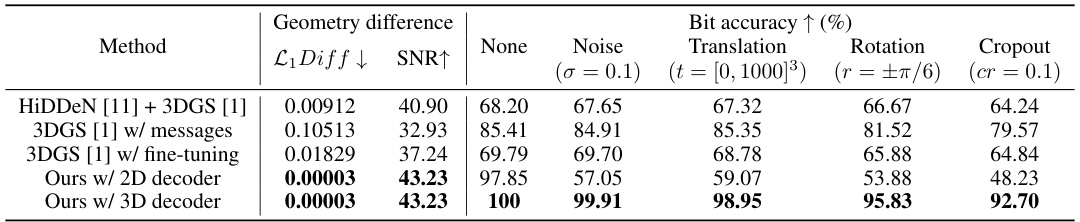

This table compares the performance of the proposed method against three baselines (HiDDeN [11] + 3DGS [1], 3DGS [1] w/ messages, 3DGS [1] w/ fine-tuning) across different distortion types (noise, translation, rotation, cropout). It presents two key metrics: the geometry difference (measured by L₁ distance and SNR) between the original and watermarked 3D Gaussians and the bit accuracy of message extraction under these distortions. The results are averaged across the Blender, LLFF, and MipNeRF360 datasets.

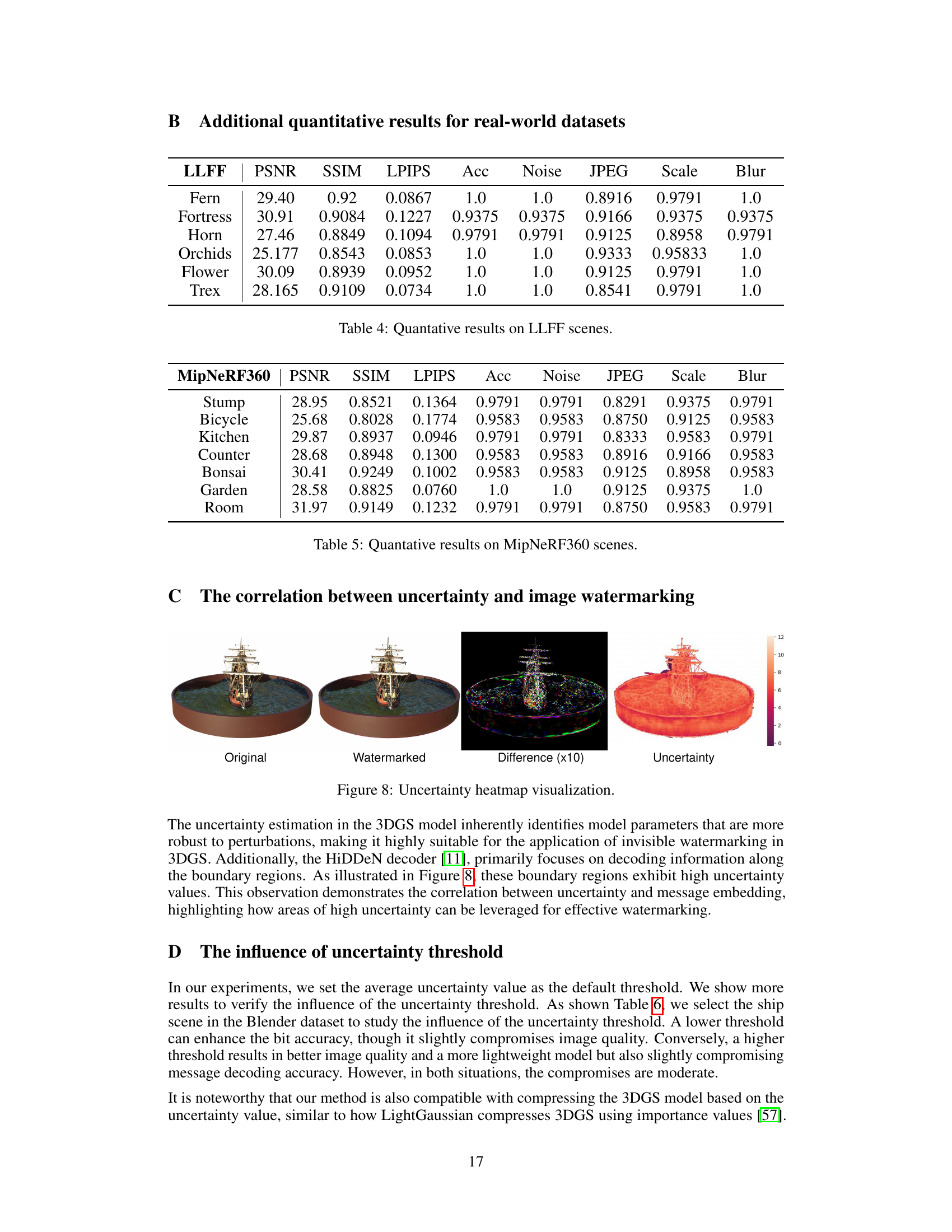

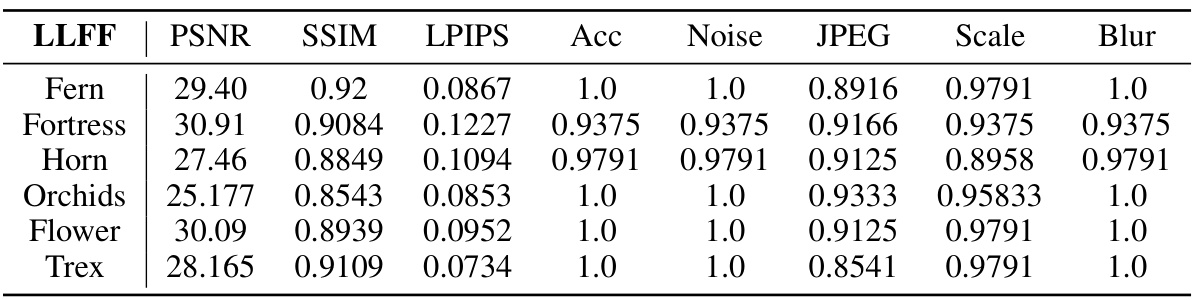

This table presents a quantitative evaluation of the proposed method’s performance on the LLFF dataset. It shows the PSNR, SSIM, LPIPS, and accuracy metrics for several scenes under different distortions (noise, JPEG compression, scaling, and blur). The results illustrate the method’s ability to maintain high reconstruction quality and bit accuracy even in the presence of various distortions.

This table presents a quantitative evaluation of the proposed method on the MipNeRF360 dataset. It shows the performance metrics including PSNR, SSIM, LPIPS, and bit accuracy. It also demonstrates the robustness of the method to various distortions like noise, JPEG compression, scaling, and blur, by providing the accuracy scores under those different distortion conditions. The results are presented for each scene in the MipNeRF360 dataset.

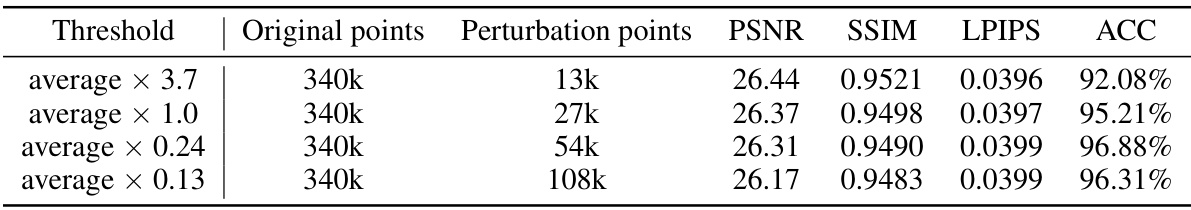

This table shows the impact of different uncertainty thresholds on the performance of the proposed GaussianMarker watermarking method. It presents the number of original and perturbation points, as well as the PSNR, SSIM, LPIPS, and accuracy metrics for each threshold. A lower threshold leads to higher accuracy but slightly lower image quality, while a higher threshold results in better image quality and a smaller model but slightly lower accuracy. The results suggest a trade-off between accuracy and efficiency.

This table shows the training time for 3DGS models and the time it takes to embed the copyright message using the proposed method. The results are broken down by dataset type (synthetic Blender dataset, real-world LLFF scenes, and real-world MipNeRF360 scenes). It highlights the significant efficiency gain of the message embedding process compared to the full 3DGS model training.

Full paper#