↗ OpenReview ↗ NeurIPS Homepage

TL;DR

Current causal representation learning (CRL) relies heavily on the assumption of single-node interventions, in which each intervention manipulates exactly one variable. This assumption is unrealistic in many real-world applications, where a single intervention can affect several variables at once, and it limits the use of existing CRL methods in systems with multiple interacting components.

This research addresses that limitation by studying interventional CRL under unknown multi-node (UMN) interventions. The authors establish the first identifiability results for general latent causal models under stochastic interventions and linear transformations. They also design CRL algorithms that achieve these identifiability guarantees, which match the best known results for single-node interventions. The work thereby extends CRL to a wider range of practical problems.
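
To make the setting concrete, the following is a minimal sketch of how data under a multi-node intervention might be simulated. It is not the authors' code. The linear Gaussian latent model, the particular form of the soft and hard interventions, and all names (`sample_latents`, `intervene`, `mixing`, `targets`) are assumptions chosen for illustration; the paper itself covers general latent causal models.

```python
import numpy as np

def sample_latents(weights, noise_scale, n_samples, rng):
    """Ancestral sampling from Z_i = sum_j weights[i, j] * Z_j + noise_i.

    weights is strictly lower triangular, so nodes 0..n-1 are in topological order.
    """
    n = weights.shape[0]
    z = np.zeros((n_samples, n))
    for i in range(n):
        noise = noise_scale[i] * rng.standard_normal(n_samples)
        # Parents of node i have lower indices and are already sampled.
        z[:, i] = z @ weights[i] + noise
    return z

def intervene(weights, noise_scale, targets, hard=False):
    """Change the mechanisms of all target nodes at once (illustrative choice).

    Soft: halve the incoming weights. Hard: remove them entirely.
    In both cases the noise scale of each target is doubled.
    """
    w, s = weights.copy(), noise_scale.copy()
    for i in targets:
        w[i] = 0.0 if hard else 0.5 * w[i]
        s[i] = 2.0 * s[i]
    return w, s

rng = np.random.default_rng(0)
n, d = 4, 6  # assumed sizes: 4 latent variables, 6 observed dimensions
weights = np.tril(rng.uniform(0.5, 1.5, size=(n, n)), k=-1)
noise_scale = np.ones(n)
mixing = rng.standard_normal((d, n))  # assumed unknown linear transformation

# Observational environment: only X = mixing @ Z is seen by the learner.
x_obs = sample_latents(weights, noise_scale, 1000, rng) @ mixing.T

# One multi-node environment: nodes 1 and 3 are intervened on simultaneously.
targets = [1, 3]  # unknown to the learner in the UMN setting
w_int, s_int = intervene(weights, noise_scale, targets)
x_int = sample_latents(w_int, s_int, 1000, rng) @ mixing.T
```

In this sketch the learner would receive only `x_obs` and `x_int` (and further interventional environments), without access to `targets`, `mixing`, or the latent samples.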

Key Takeaways

Why does it matter?

This paper matters because it addresses a key limitation of current causal representation learning (CRL) research, which often assumes single-node interventions. By handling the more realistic setting of unknown multi-node interventions, it broadens the range of applications and supports more accurate causal modeling of complex systems. Its main contributions are the identifiability results and the algorithms that achieve them, supported by rigorous theoretical analysis and constructive proofs.

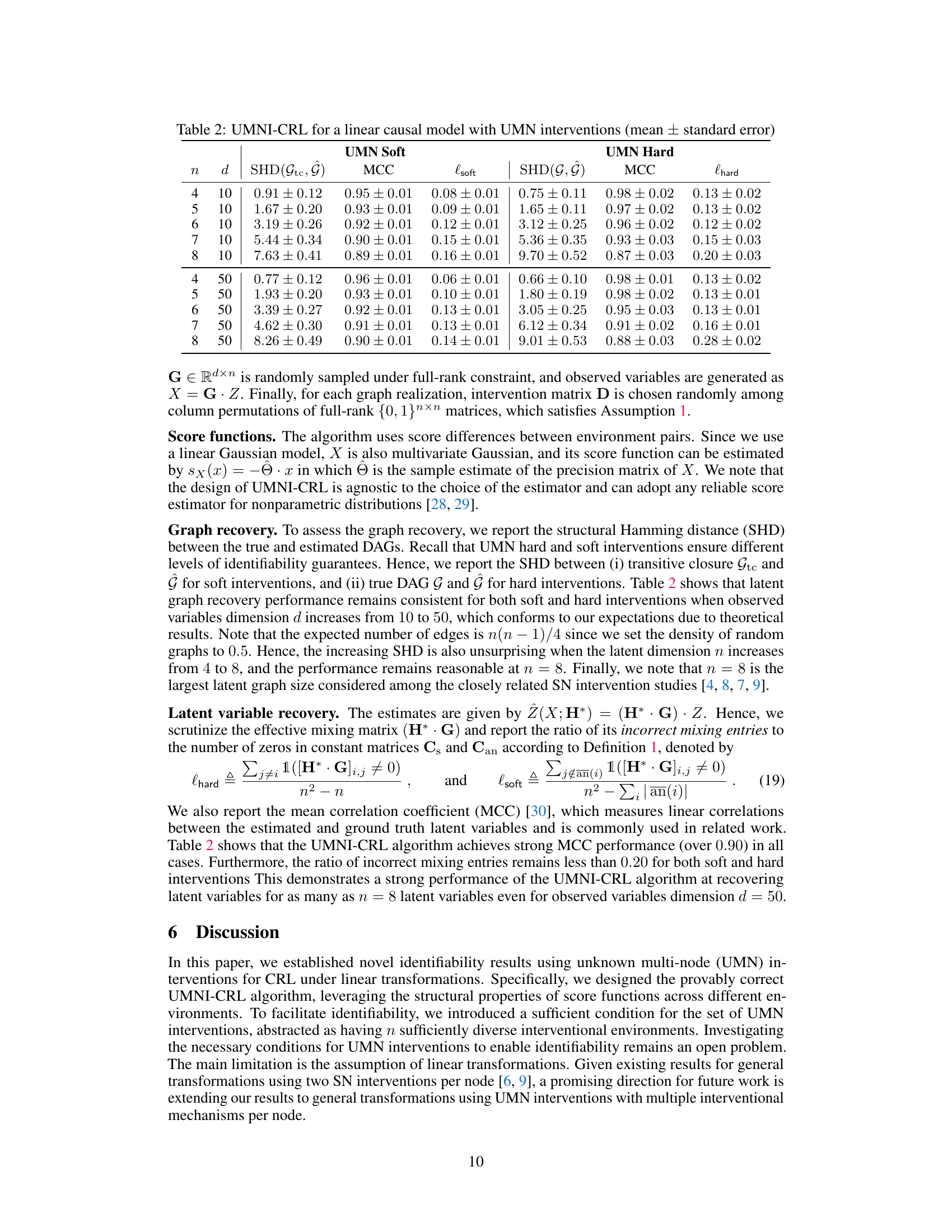

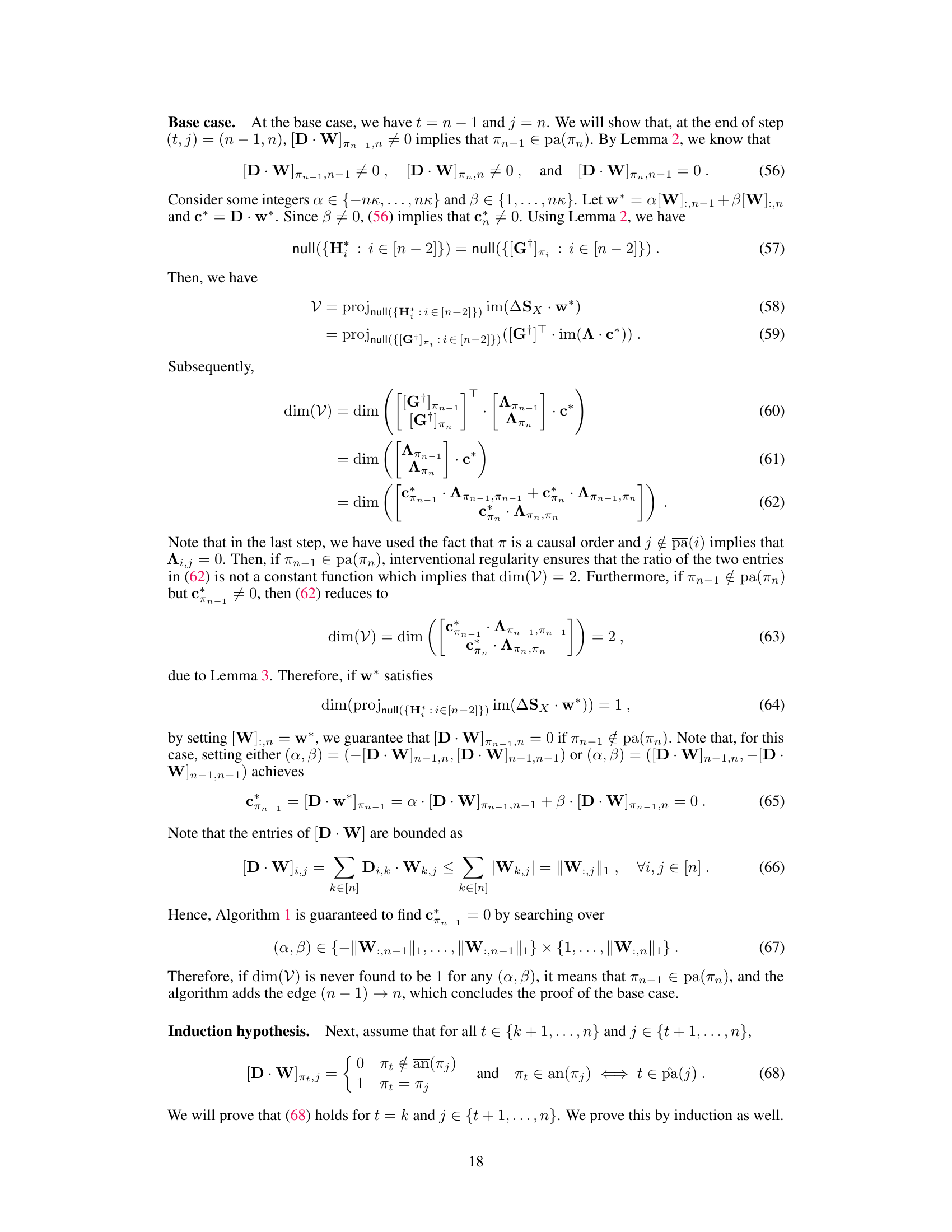

Visual Insights

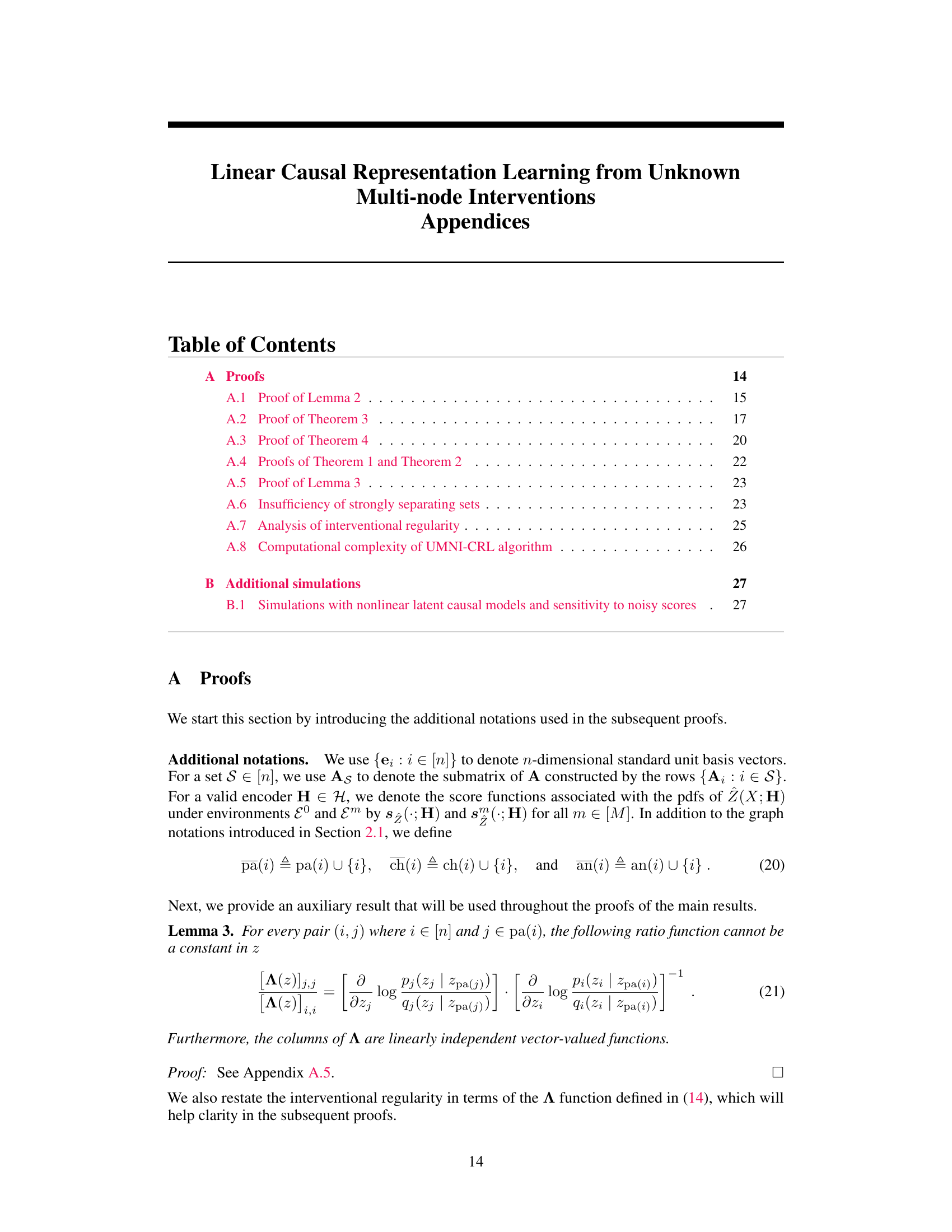

This figure shows the sensitivity analysis of the UMNI-CRL algorithm for quadratic latent causal models. Two subfigures are presented: (a) shows the structural Hamming distance (SHD) between the true DAG (for hard interventions) or its transitive closure (for soft interventions) and the estimated DAG, plotted against the signal-to-noise ratio (SNR). (b) shows the incorrect mixing ratio, a measure of latent variable recovery accuracy, also plotted against the SNR, for both soft and hard interventions.
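
As a rough illustration of the graph metric in panel (a), the sketch below computes a structural Hamming distance against either a DAG or its transitive closure. It is an assumption-laden example, not the paper's evaluation code: the adjacency convention (`adj[i, j]` is true when there is an edge i → j), the choice to count a reversed edge as one error, and the function names are all hypothetical.

```python
import numpy as np

def transitive_closure(adj):
    """Boolean reachability matrix of a directed graph (Warshall's algorithm)."""
    reach = np.asarray(adj, dtype=bool).copy()
    n = reach.shape[0]
    for k in range(n):
        # i reaches j if it already did, or if i reaches k and k reaches j.
        reach |= reach[:, [k]] & reach[[k], :]
    return reach

def shd(true_adj, est_adj):
    """Number of node pairs whose edge status differs.

    A missing, extra, or reversed edge each counts as one error (assumed convention).
    """
    diff = np.asarray(true_adj, dtype=bool) != np.asarray(est_adj, dtype=bool)
    pair_diff = diff | diff.T  # a pair is wrong if either direction differs
    return int(np.triu(pair_diff, k=1).sum())

# True DAG: the chain 0 -> 1 -> 2.
true_dag = np.array([[0, 1, 0],
                     [0, 0, 1],
                     [0, 0, 0]])

# An estimate that also contains the ancestral edge 0 -> 2.
estimate = np.array([[0, 1, 1],
                     [0, 0, 1],
                     [0, 0, 0]])

shd_hard = shd(true_dag, estimate)                      # compared with the DAG itself
shd_soft = shd(transitive_closure(true_dag), estimate)  # compared with its closure
```

Here the estimate has one extra edge relative to the true DAG but matches its transitive closure exactly, which is why the choice of reference graph changes the score.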

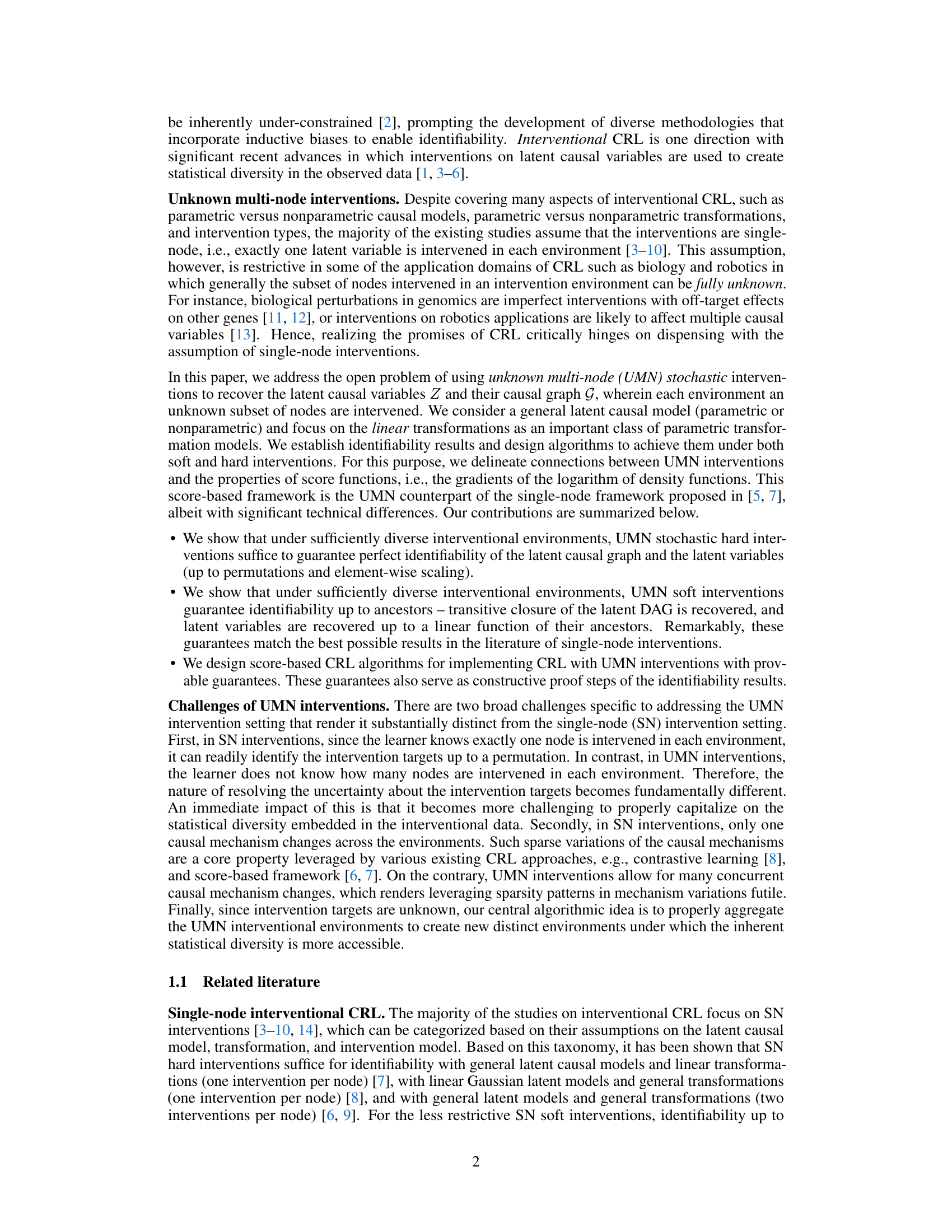

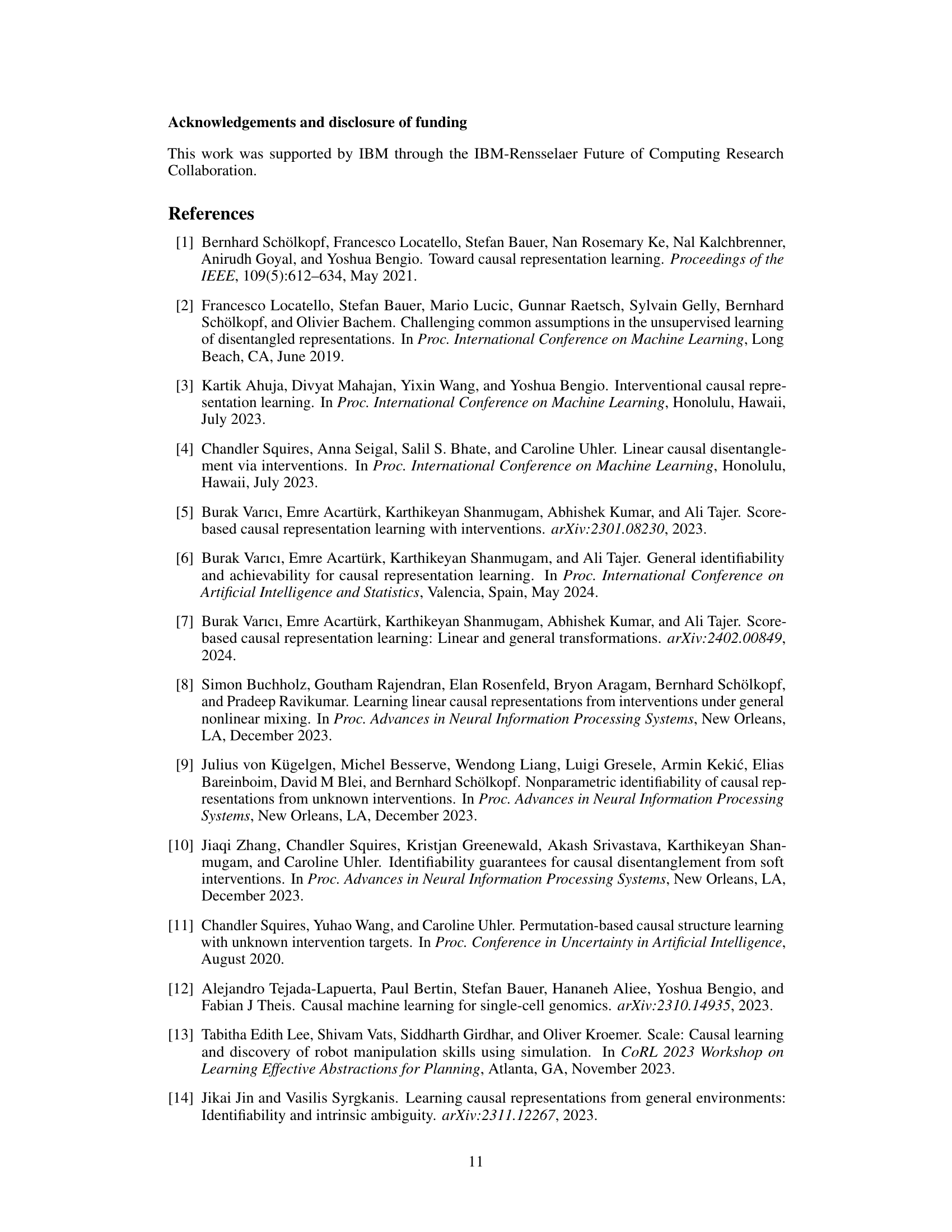

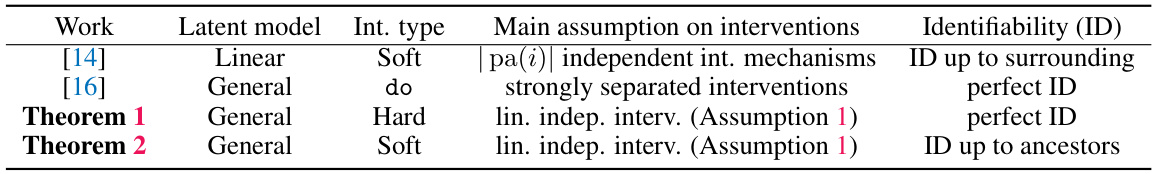

This table compares the identifiability results of the current paper’s proposed methods with those of existing works in multi-node interventional causal representation learning (CRL). It shows the latent model type (Linear or General), the intervention type (Soft, Hard, or do), the main assumptions made about the interventions, and the resulting identifiability (ID) achieved (Perfect ID, ID up to ancestors, or ID up to surrounding). The table highlights that the current paper achieves comparable or better identifiability guarantees even under more relaxed assumptions regarding the interventions.

Full paper