↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

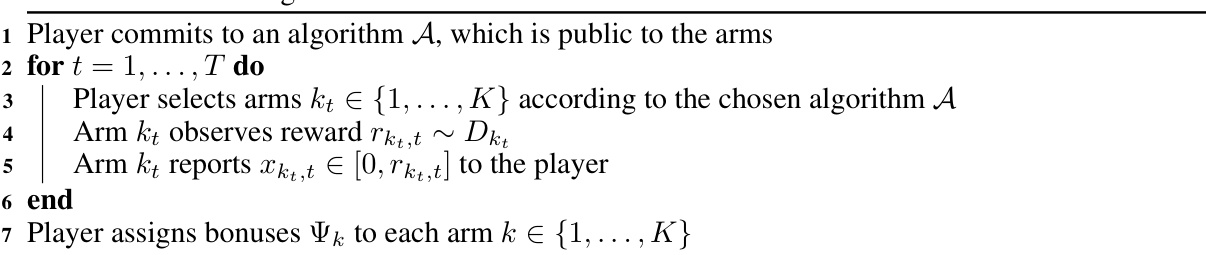

Classic multi-armed bandit problems assume passive arms. However, in many real-world scenarios, arms are strategic and might misreport rewards. This paper addresses this issue by considering a strategic multi-armed bandit problem with arms that can strategically retain a portion of their rewards and only disclose a fraction to the learning agent. This leads to a game where arms maximize their utility while the learning agent aims to minimize regret. Existing solutions often fail to achieve satisfactory regret bounds in these settings.

The paper proposes a novel mechanism that establishes an equilibrium where arms act truthfully, disclosing all their rewards. This mechanism involves a new incentive-aware algorithm that uses a bonus-based system to encourage truthful behavior. With this approach, the learning agent can attain the second-highest average reward among arms with a cumulative regret bounded by O(log(T)/Δ) or O(√T log(T)) depending on problem characteristics.

Key Takeaways#

Why does it matter?#

This paper is crucial because it tackles the strategic behavior of arms in multi-armed bandit problems, a significant challenge in real-world applications. Its debt-free reporting mechanism and incentive-aware algorithm provide a novel solution, improving the regret bound and ensuring truthful responses. This opens up new research avenues for designing robust and efficient mechanisms in various fields involving strategic interactions.

Visual Insights#

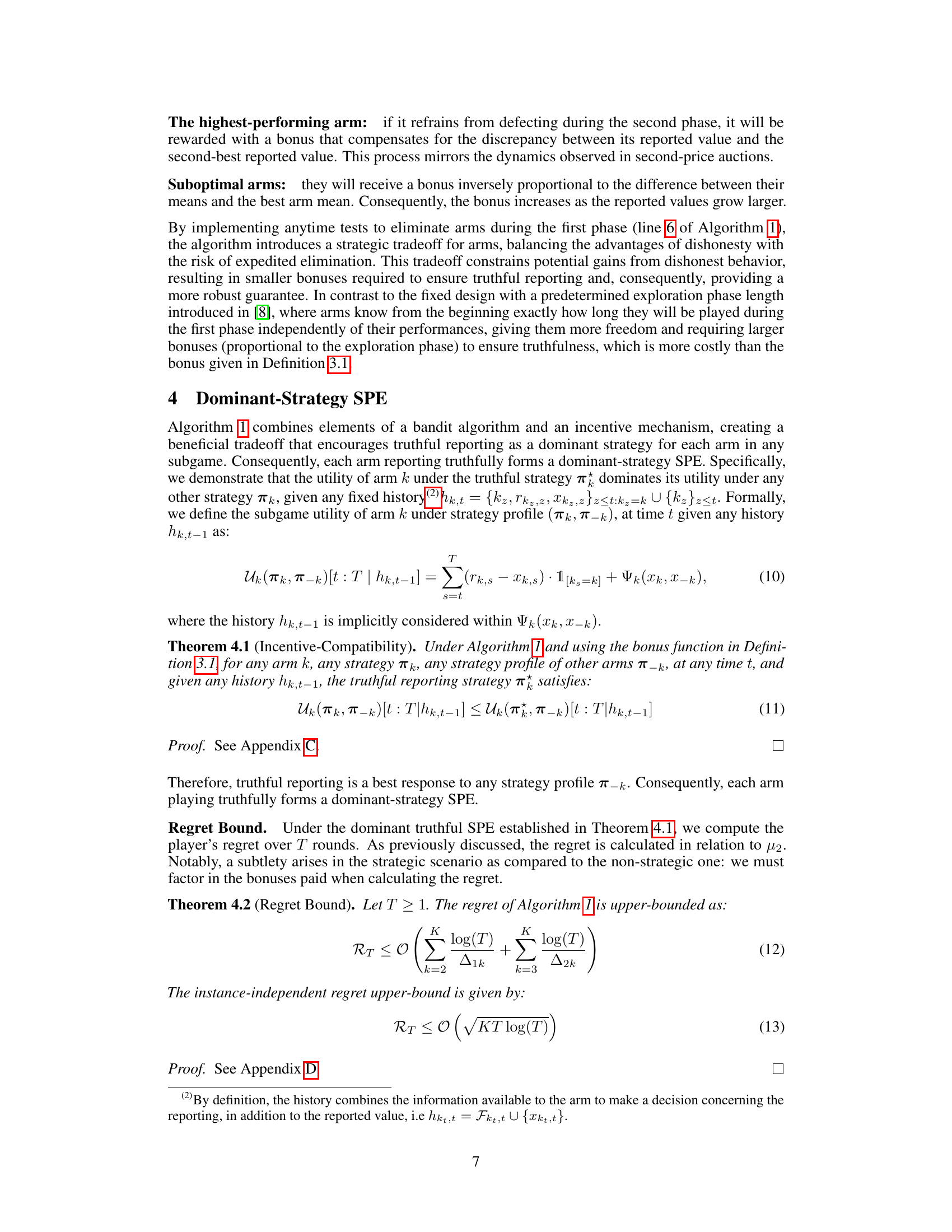

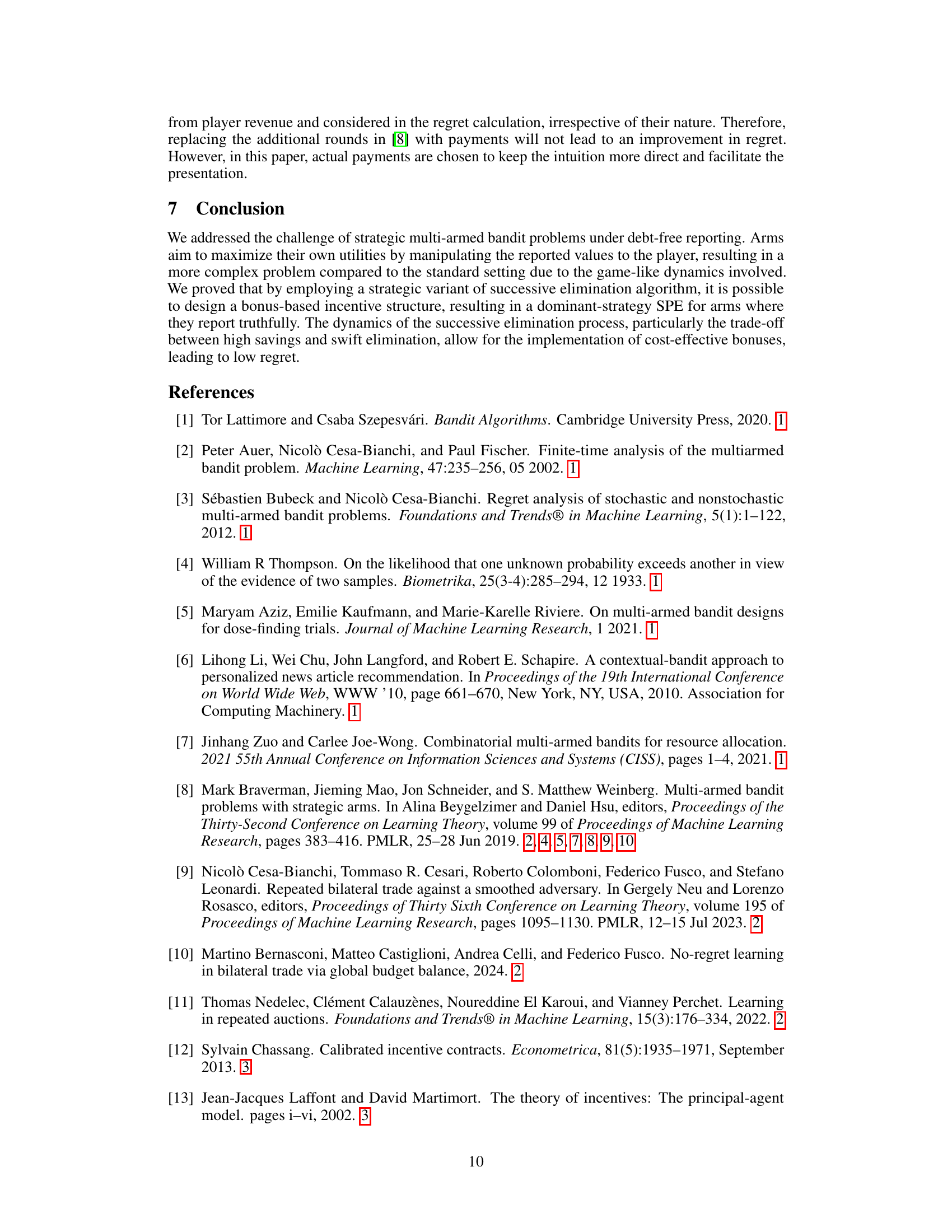

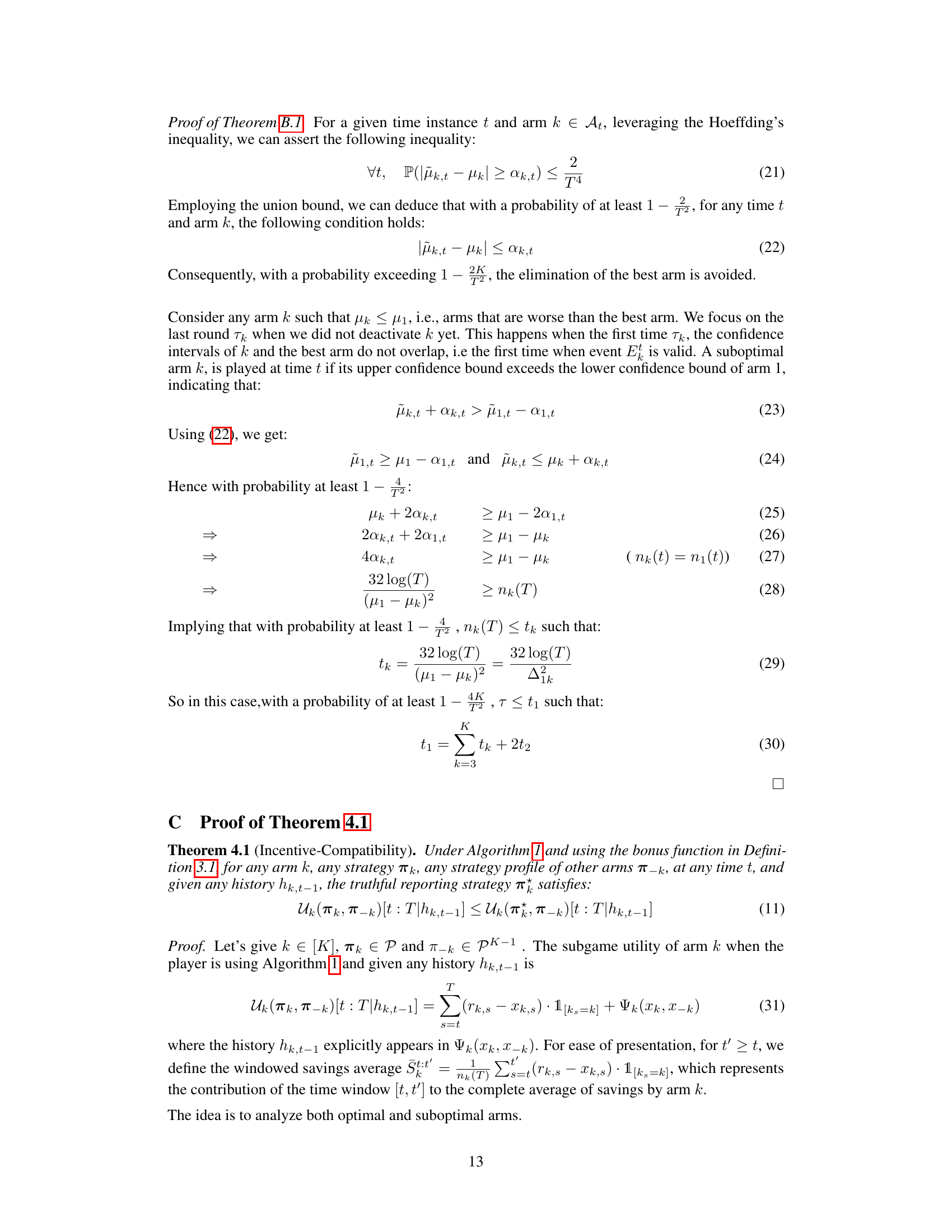

This figure compares the cumulative regret of three different strategies: untruthful arbitrary reporting (arms randomly withhold portions of rewards), truthful reporting (arms always report truthfully, which is a dominant strategy in the game), and ‘optimal’ reporting (only the two best arms report truthfully). The x-axis shows the time horizon (number of rounds), and the y-axis represents the cumulative regret. The plot demonstrates the superior performance of the truthful and ‘optimal’ strategies compared to the untruthful strategy.

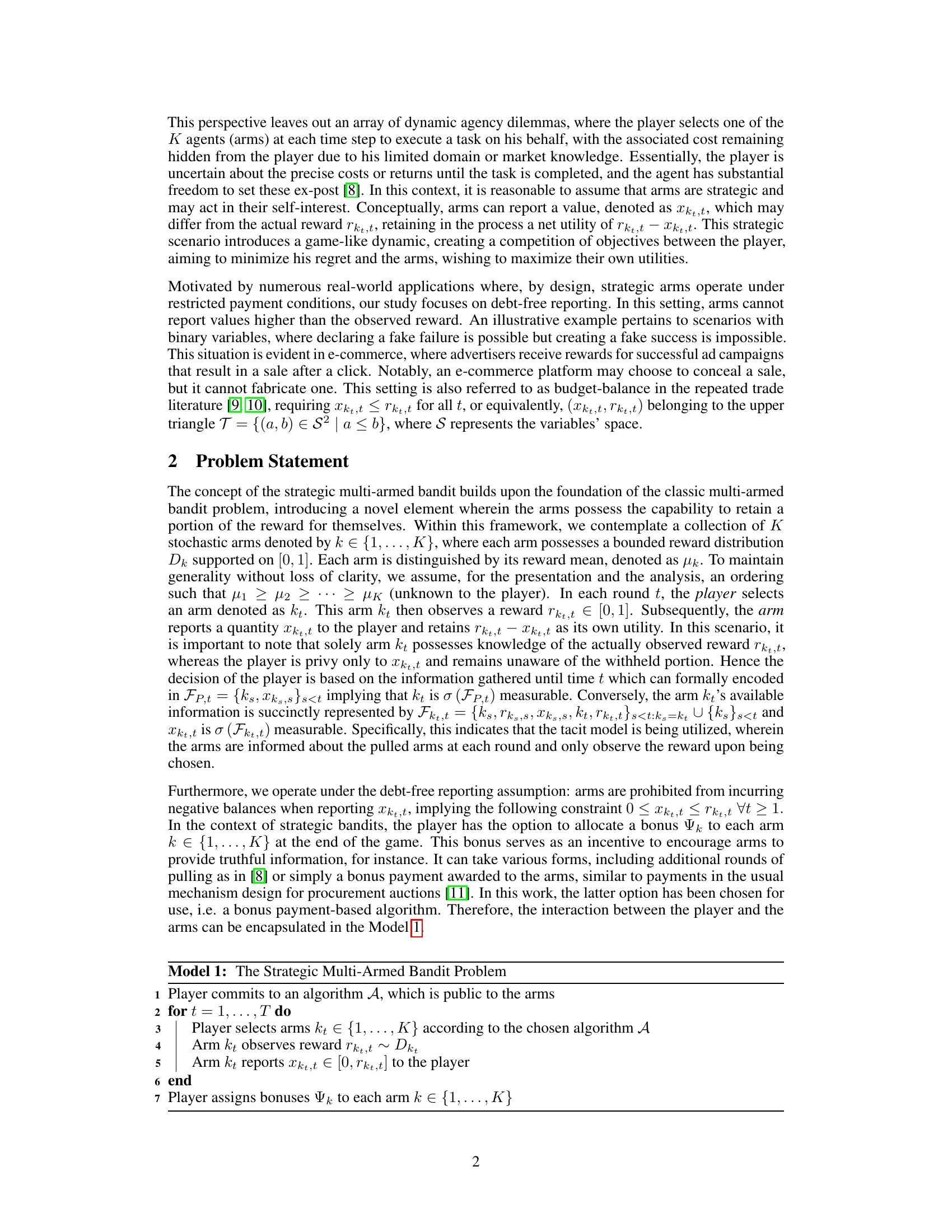

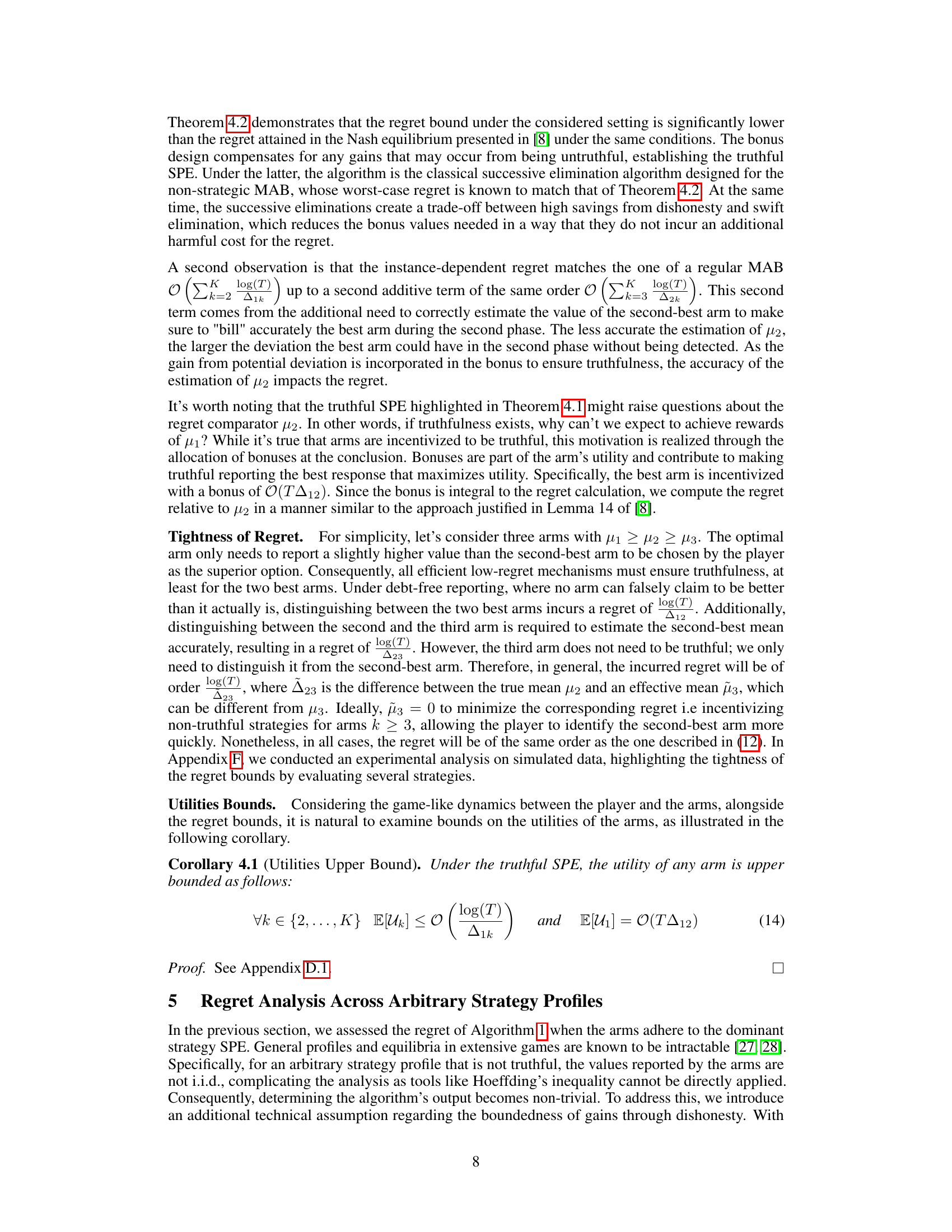

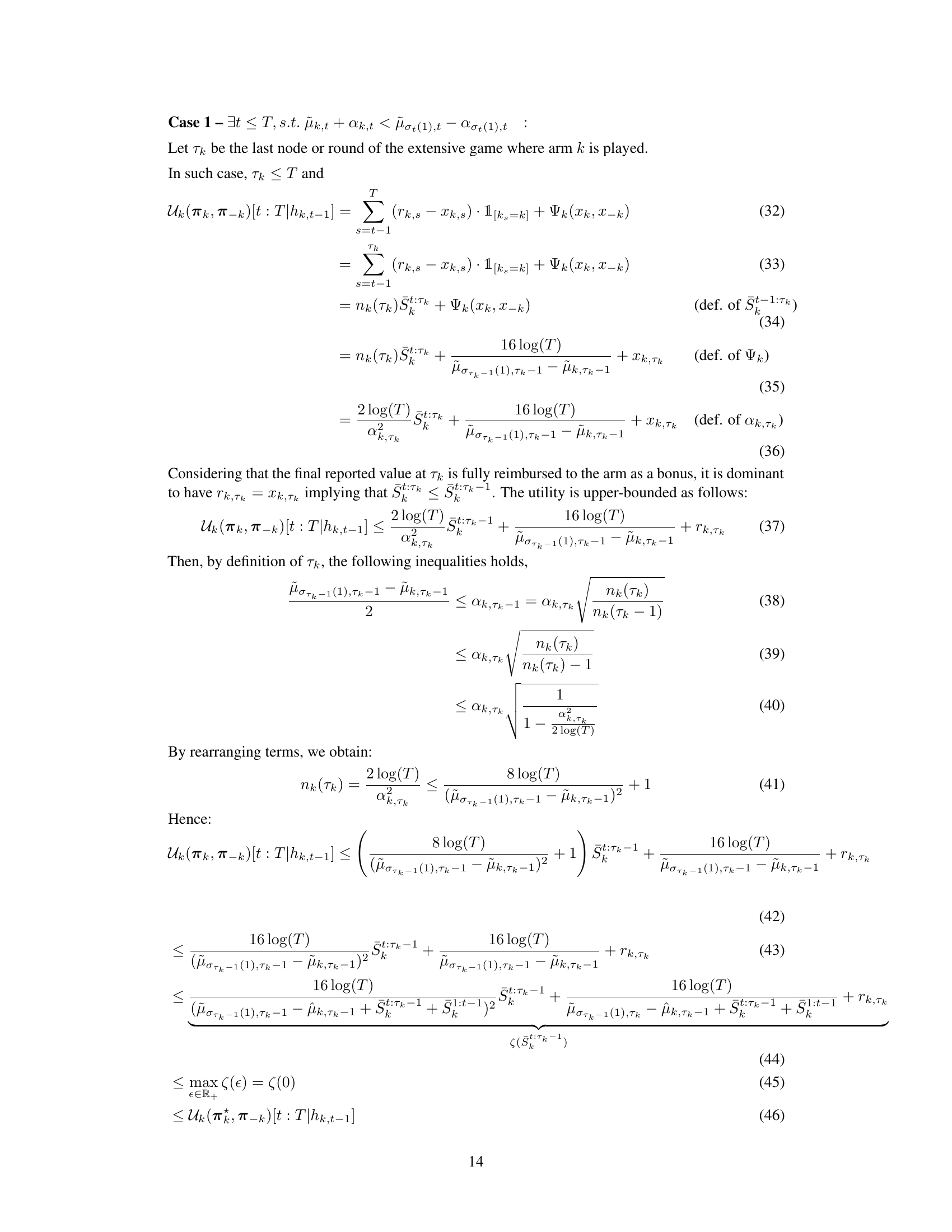

This table compares two algorithms, S-ETC and S-SE, focusing on their settings and the resulting regret. It highlights whether each algorithm uses a tacit model, debt-free reporting, the nature of the bonus system used (additional rounds vs. payment), and the resulting regret (problem-dependent vs. problem-independent). This provides a concise overview of the key differences and performance characteristics of the two methods.

In-depth insights#

Strategic Arms MAB#

The concept of “Strategic Arms MAB” introduces a fascinating twist to the classic Multi-Armed Bandit (MAB) problem. Instead of passive arms, strategic arms actively participate, aiming to maximize their own utility, potentially by withholding a portion of their rewards. This creates a game-theoretic setting where the learning agent (player) must contend with arms that might misreport their rewards. This necessitates the design of mechanisms that incentivize truthful reporting, perhaps through bonus systems or carefully structured payment schemes. A key challenge is balancing the exploration-exploitation dilemma with the strategic interactions, ensuring that the agent can achieve low regret while preventing manipulation by the arms. The debt-free reporting constraint, where arms cannot report rewards greater than they actually receive, further complicates the problem, potentially leading to different equilibrium outcomes than in scenarios with unrestricted reporting. Key research questions revolve around designing algorithms capable of reaching near-optimal performance in such complex environments. Analyzing the regret under both truthful and untruthful arm behaviors is critical. Finding dominant strategies for arms and optimal strategies for the agent are major focal points.

Debt-Free Incentive#

A debt-free incentive mechanism in a strategic multi-armed bandit setting presents a unique challenge. The core idea is to incentivize truthful reporting from strategic arms without allowing them to incur debt or negative utility. This constraint significantly impacts the design of the incentive scheme. A simple bonus system might not suffice, as arms could strategically withhold rewards to maximize their utility. Effective mechanisms require a delicate balance between providing sufficient incentive for truthfulness and avoiding excessive cost for the player. Adaptive algorithms that dynamically adjust incentives based on observed behavior are likely needed to achieve optimal performance. The design must consider the arms’ strategic behavior and its impact on the player’s regret. This requires a game-theoretic analysis to ensure the incentive mechanism yields a desirable equilibrium where arms report truthfully or adopt a truthful strategy. Furthermore, any algorithm designed must address the trade-off between exploration and exploitation, as well as the computational complexity of managing incentives across multiple arms. The overall effectiveness of a debt-free incentive system hinges on the careful integration of economic incentives and efficient bandit algorithms, requiring a multidisciplinary approach combining elements of mechanism design, online learning, and game theory.

Dominant SPE Proof#

A dominant strategy Subgame Perfect Equilibrium (SPE) proof within a strategic multi-armed bandit setting demonstrates that truthful reporting is the optimal strategy for all arms, regardless of the actions of other arms. This is achieved by carefully designing an incentive mechanism (bonus allocation) that makes deviating from truthful behavior less profitable than cooperating. The proof rigorously analyzes arm utilities under truthful and untruthful reporting, showing that truthful reporting always yields higher expected utility for each arm. The incentive mechanism effectively counteracts the arms’ strategic tendencies, ensuring the emergence of a socially desirable outcome for the overall game—minimizing the player’s regret. A key aspect is that the bonus structure depends on the observed rewards and the arms’ ranking at a specific point in the algorithm. This is crucial in creating the dominance and achieving the equilibrium. The analysis must thoroughly consider the interplay between the bonus payouts and the arms’ utility functions, demonstrating that truthful behavior provides the arms with the highest expected payoff.

Regret Analysis#

Regret analysis in multi-armed bandit problems assesses the difference between an agent’s cumulative reward and the reward achievable using optimal knowledge of the arms. In strategic settings, where arms act to maximize their own utilities, regret analysis becomes significantly more complex. The arms’ strategic behavior introduces a game-theoretic element, requiring consideration of equilibrium concepts such as Nash Equilibrium to evaluate the agent’s performance. Debt-free reporting, a constraint where arms cannot report rewards exceeding their true values, further complicates analysis, necessitating the design of incentive mechanisms to encourage truthful reporting. A key challenge lies in bounding the agent’s regret under such constraints while considering the arms’ strategic responses. Effective regret bounds often depend on parameters like the gap between the means of the best and second-best arms. Furthermore, the analysis might involve exploring different strategy profiles and determining the regret under various equilibria, moving beyond the simple truthful equilibrium.

Future Work#

The paper’s “Future Work” section hints at several promising research directions. Extending the S-SE algorithm’s applicability to other no-regret MAB algorithms, such as UCB, is crucial. This involves adapting incentive mechanisms to encourage truthful reporting while maintaining low regret. The current work focuses on successive elimination, but exploring alternative approaches could lead to significant improvements in efficiency and robustness. The impact of different bonus allocation schemes and their influence on the equilibrium dynamics warrant further investigation. This includes considering both bonus payment and alternative reward systems. Analyzing the algorithm’s performance under a wider range of strategic arm profiles, beyond the dominant truthful SPE, is also essential. This requires relaxing assumptions made in the current theoretical analysis and exploring more complex equilibrium behavior. Finally, empirical studies using real-world datasets are needed to validate the algorithm’s effectiveness and reveal its practical limitations. This would also shed light on the robustness of the model in realistic, noisy environments and with a greater range of arm strategies than the current simulations allow.

More visual insights#

More on figures

This figure compares the cumulative regret of three different arm reporting strategies. The first is a baseline where arms randomly withhold portions of their reward. The second shows the cumulative regret when all arms report truthfully, achieving a dominant-strategy subgame perfect equilibrium. The third strategy, called ‘optimal’, shows that only the two best-performing arms report truthfully, while the others withhold all rewards. This demonstrates the trade-off between incentivizing truthful reporting and achieving lower regret. The x-axis represents the time horizon, while the y-axis represents the cumulative regret.

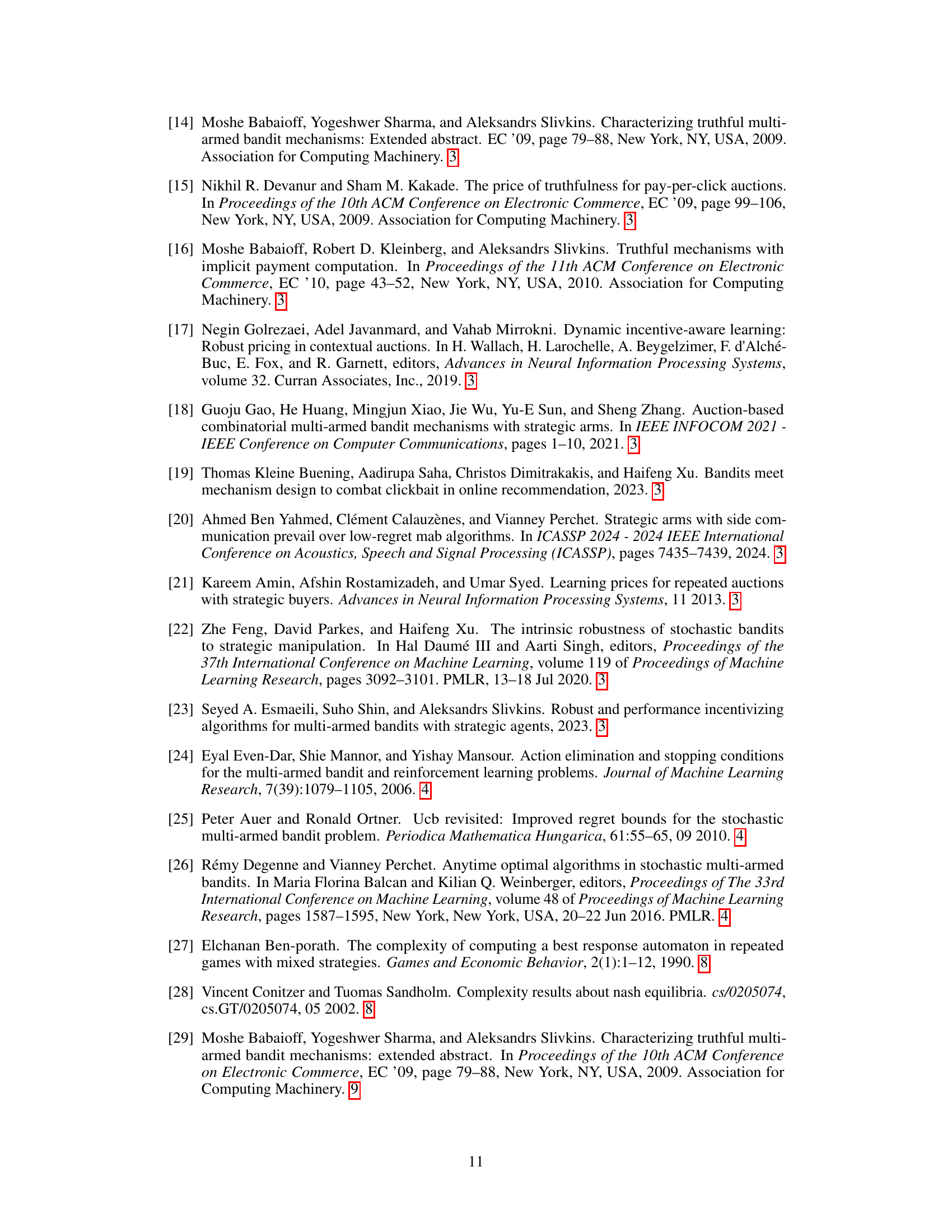

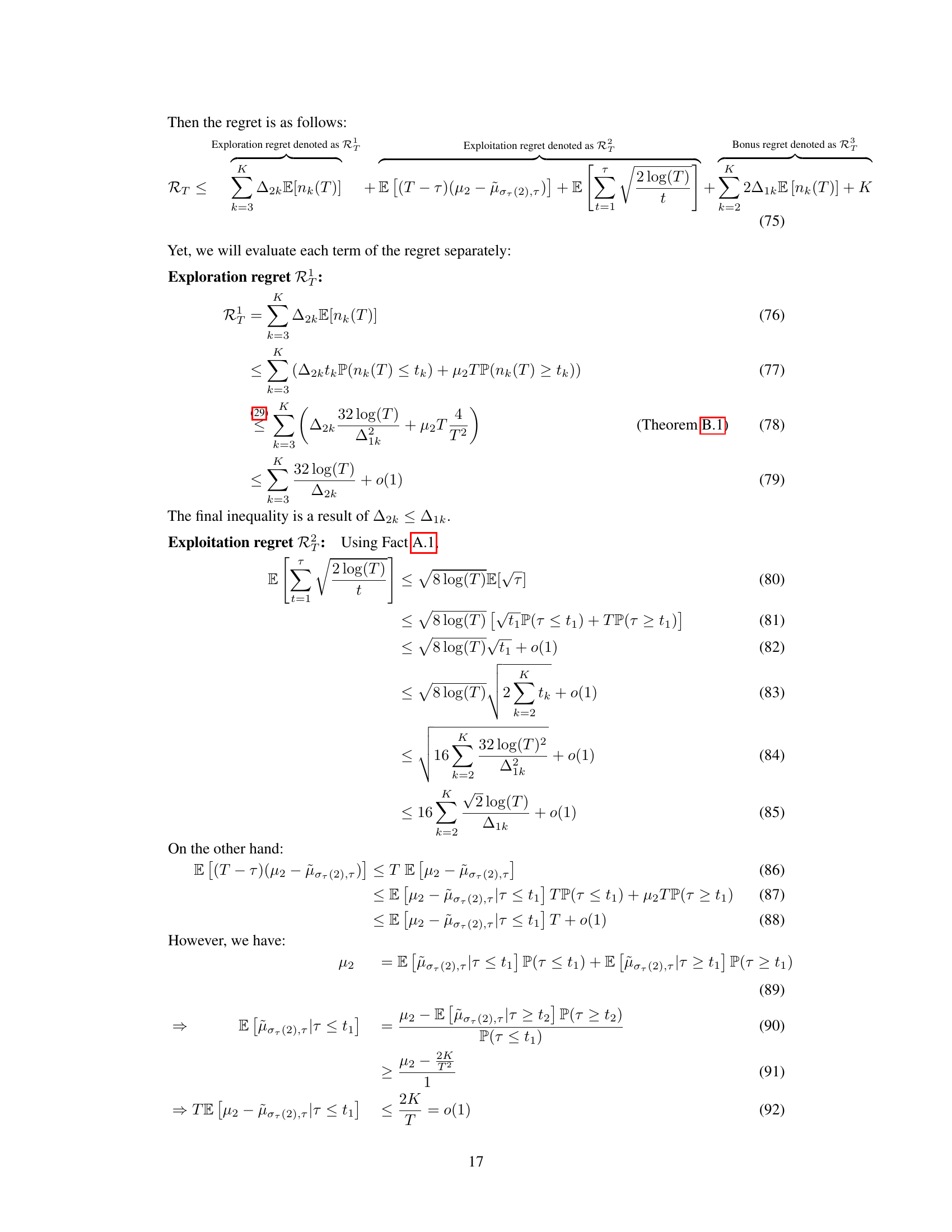

This figure compares the utilities of arms under two different reporting strategies: truthful reporting (where arms report their rewards honestly) and untruthful reporting (where arms randomly withhold portions of their rewards). The x-axis represents the arms (indexed from μ₁ to μ₆, indicating that μ₁ is the arm with the highest mean reward, and μ₆ is the lowest). The y-axis represents the utility (total reward retained by the arm) achieved by each arm under each strategy. The bars show that under truthful reporting, arms achieve higher utilities than under untruthful reporting, which is consistent with the paper’s theoretical analysis demonstrating that truthful reporting leads to a dominant strategy.

Full paper#