↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Many machine learning models struggle with out-of-distribution (OOD) generalization, meaning they perform poorly on data different from their training data. This is often due to spurious correlations: the model learns to associate features unrelated to the true class label (like the background color of a digit), leading to inaccurate predictions on new data. Existing methods often require pre-defined environment labels, which are unavailable in real-world scenarios.

This paper introduces Neural Collapse-inspired Feature Alignment (NCFAL), a novel method that addresses OOD generalization. NCFAL uses the concept of neural collapse, where features collapse to a specific geometric structure, to align semantic features across different environments. This alignment allows the model to focus on the actual, label-related features, rather than spurious ones. NCFAL can work with or without environment labels, automatically partitioning the environment if needed. Experiments on multiple datasets show that NCFAL significantly improves OOD performance compared to existing methods.

Key Takeaways#

Why does it matter?#

This paper is important because it offers a novel approach to improve out-of-distribution generalization in machine learning models by leveraging the concept of neural collapse. It addresses the prevalent issue of spurious correlations that hinder the performance of models when presented with data that differs significantly from their training data. By introducing a new method to align semantic features across environments, even without explicit environment labels, the research opens avenues for more robust and generalizable AI systems. The findings are significant for researchers working on improving the reliability and adaptability of AI models in real-world scenarios where data distribution may vary drastically. This method can improve the performance of AI in various applications, particularly those with limited data and high variability, and has the potential to substantially improve the real-world application of machine learning models.

Visual Insights#

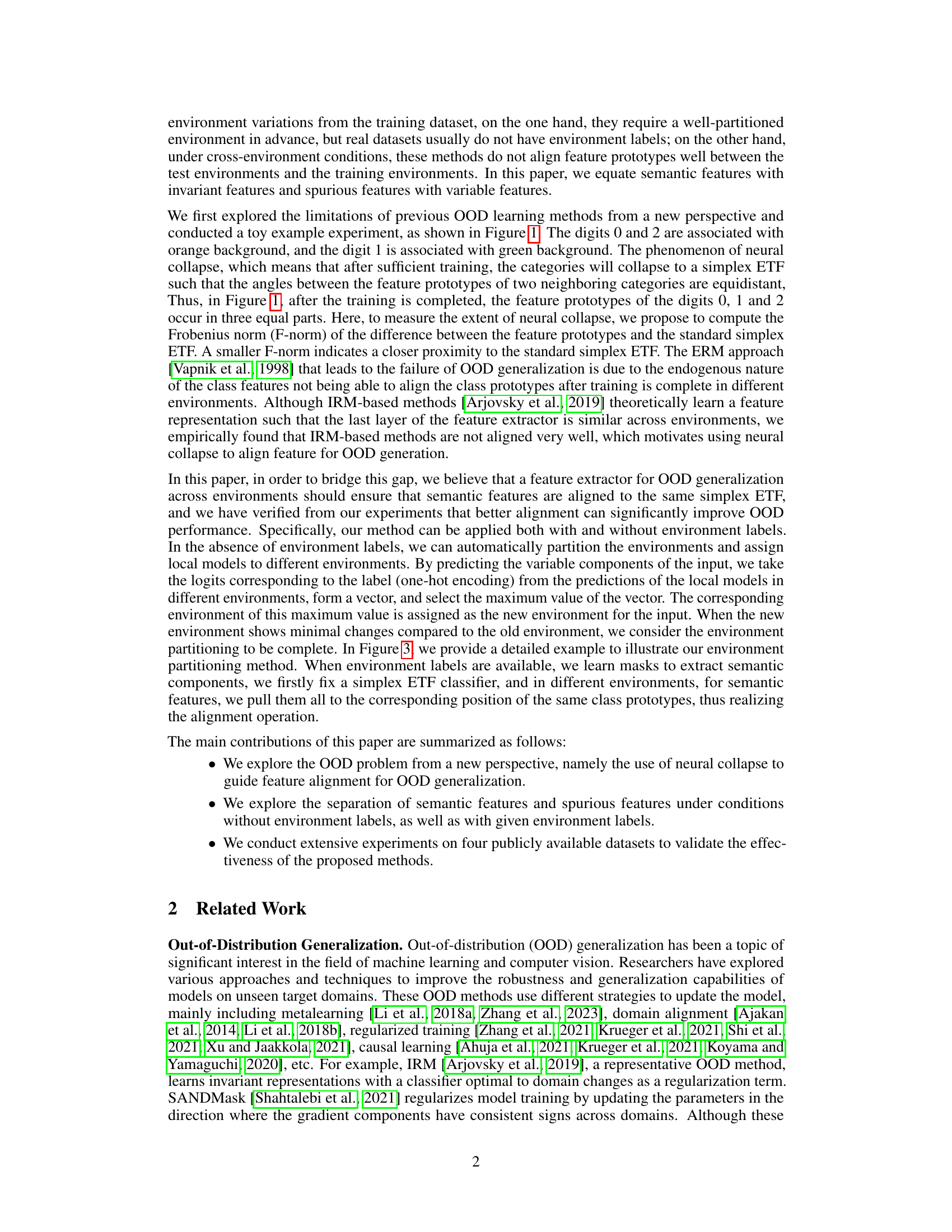

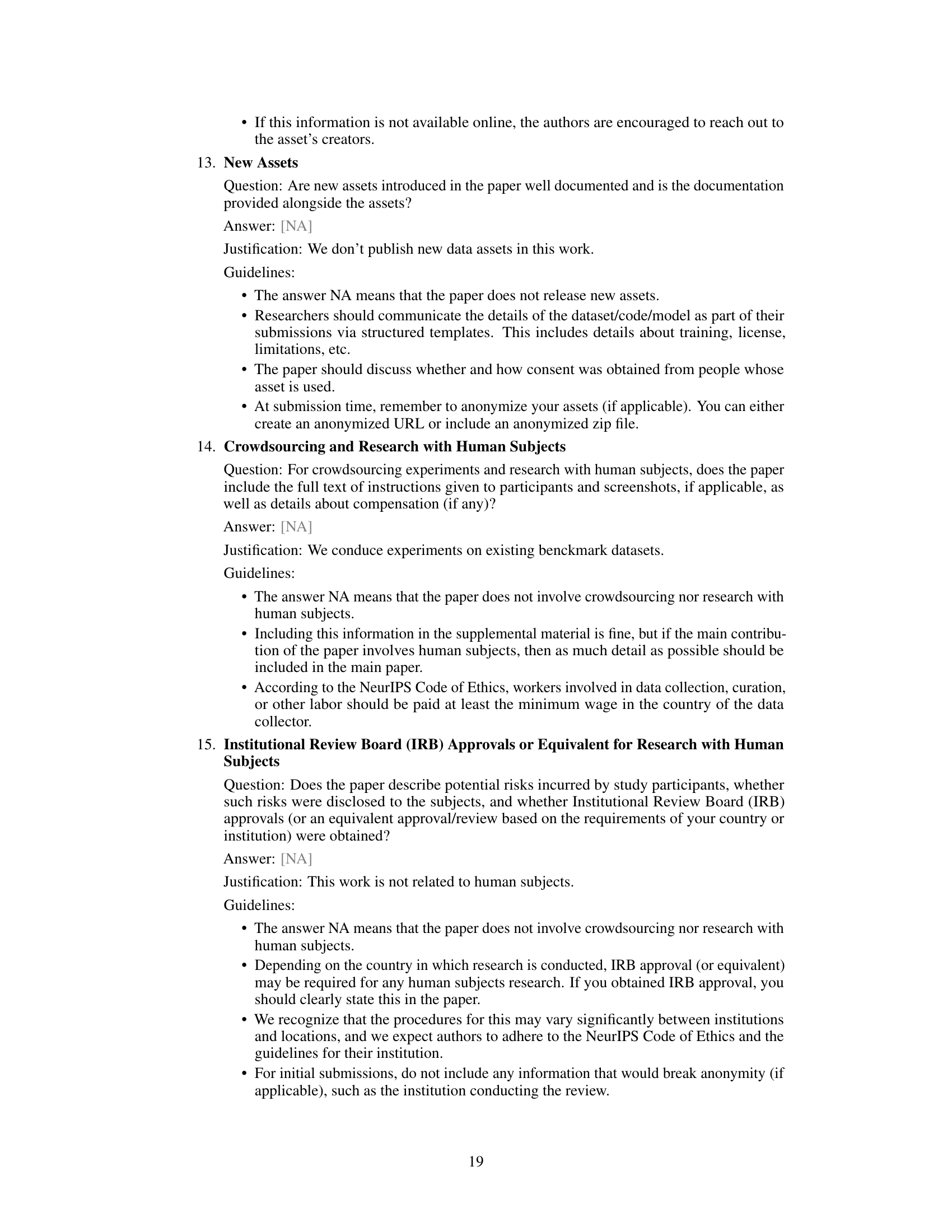

This figure compares the proposed method with ERM and IRM methods in terms of F-norm and OOD accuracy on a toy dataset. The left panel shows the visualization of how the three methods’ features prototypes are organized and aligned in the feature space. The right panel shows the OOD accuracy comparison in the bar chart. The results demonstrate the effectiveness of the proposed method in aligning semantic features across environments.

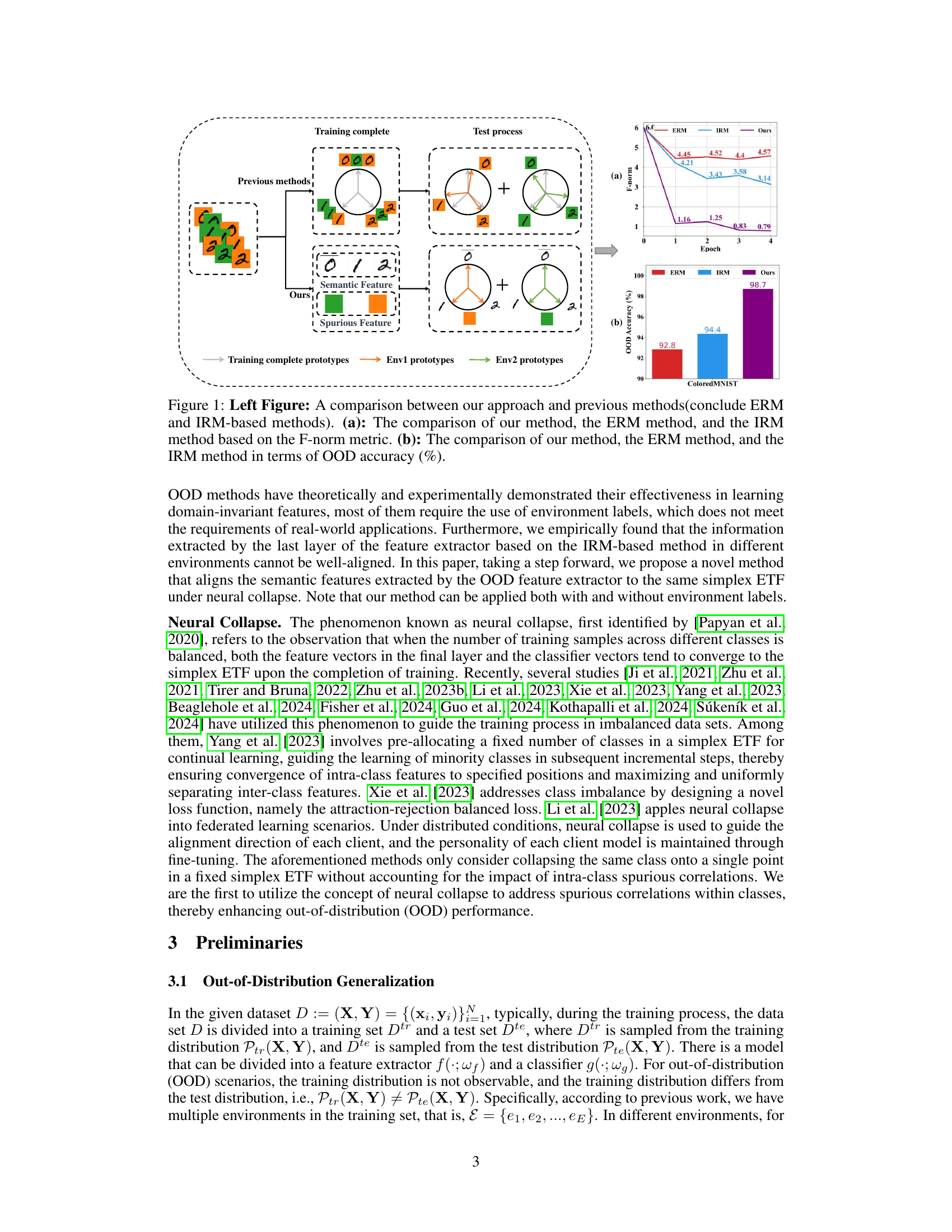

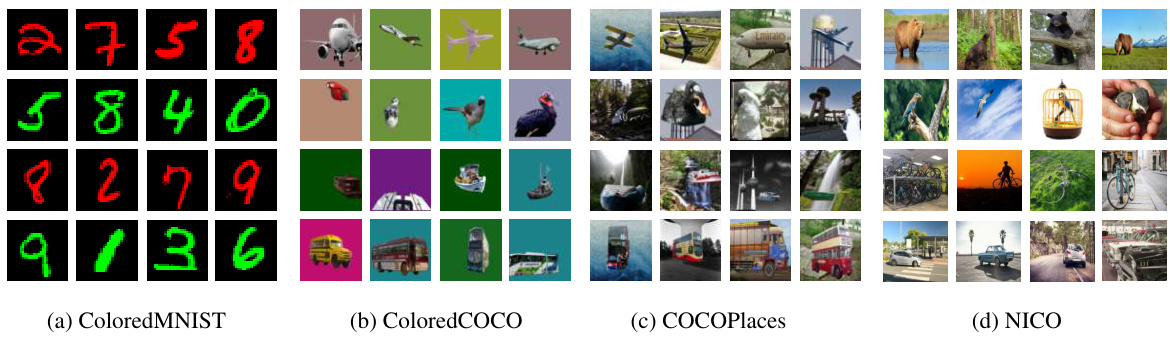

This table presents a comparison of the out-of-distribution (OOD) generalization performance of the proposed method (NCFAL) against 16 other OOD algorithms and the baseline ERM method across four datasets: ColoredMNIST, ColoredCOCO, COCOPlaces, and NICO. The results demonstrate the superior performance of NCFAL, particularly in scenarios with unknown environments where the method adapts to partition environments effectively.

In-depth insights#

Spurious Correlation#

Spurious correlations, where a statistical relationship exists but lacks a causal link, are a significant challenge in machine learning. In the context of image classification, spurious correlations often arise between image backgrounds and labels, leading models to rely on irrelevant features for prediction. This phenomenon severely impacts out-of-distribution (OOD) generalization, as models trained on spurious correlations fail to generalize to unseen data where those background features are different. Addressing this issue requires techniques that disentangle semantic features (true object characteristics) from spurious features (background or environmental information). This often involves techniques like environment partitioning (grouping similar backgrounds), learning semantic masks (isolating the object of interest), or employing adversarial training. The goal is to create models that focus on the essential features and are robust to spurious relationships, enhancing their performance and reliability across various domains and scenarios.

NC Feature Alignment#

The concept of ‘NC Feature Alignment’, likely referencing Neural Collapse feature alignment, proposes a novel approach to enhance out-of-distribution (OOD) generalization in machine learning models. The core idea revolves around leveraging the phenomenon of neural collapse, where features converge to a specific geometric structure (simplex ETF), to align semantic features across different environments. By enforcing this alignment, the method aims to decouple semantic information from spurious correlations with the environment, a common cause of poor OOD performance. This is achieved through techniques like environment partitioning and learning semantic masks, ensuring that learned representations are consistent regardless of environmental variations. A key advantage is the potential to work with or without explicit environment labels, making the approach more practical for real-world scenarios. The method’s effectiveness relies on the accurate separation of semantic and spurious features, the successful alignment of these features to the desired structure, and the robustness of the environment partitioning strategy. Further research could explore the scalability and computational efficiency of the proposed techniques and investigate its applicability to various data types and model architectures.

Env Partitioning#

The effectiveness of environment partitioning in addressing spurious correlations is a crucial aspect of the research. The method’s ability to automatically partition environments, especially when environment labels are unavailable, is a significant advantage. The process, involving iterative partitioning and local model training, aims to ensure that each environment reflects distinct spurious correlations. The selection of the maximum value from the vector of logits predicted by the local models in different environments determines the environment assignment. Convergence is carefully monitored to optimize environment partitioning. A key consideration is the trade-off between computational cost and the accuracy achieved through granular environment division. Further investigation into the optimal criteria for environment segmentation is warranted, along with analysis of its resilience to noise and variations in data distribution. Ultimately, the successful separation of spurious correlations through effective partitioning is critical to enhancing the model’s generalization ability and achieving superior out-of-distribution performance.

OOD Generalization#

Out-of-Distribution (OOD) generalization, a crucial aspect of robust machine learning, focuses on a model’s ability to generalize to data unseen during training. The core challenge lies in disentangling semantic features (relevant to the task) from spurious correlations (accidental associations in the training data). Failure to do so leads to poor performance on OOD data, as the model relies on irrelevant cues. Effective OOD generalization requires methods that learn invariant representations, focusing on the truly informative features rather than spurious ones. This often involves techniques like regularization, domain adaptation, or adversarial training, aiming to make the model less sensitive to environmental factors and more reliant on inherent semantic properties. Successfully achieving OOD generalization is essential for deploying models in real-world scenarios, where the test data distribution often differs from the training data. This remains an active area of research, with ongoing efforts to develop more robust and reliable methods.

Future Works#

The paper’s core contribution is a novel approach to out-of-distribution (OOD) generalization leveraging neural collapse. Future work should focus on enhancing the environment partitioning method. Currently, the method relies on a heuristic threshold; a more principled, data-driven approach, potentially incorporating clustering techniques or unsupervised learning, could significantly improve accuracy and robustness. Investigating the impact of different architectures and loss functions on the effectiveness of neural collapse for feature alignment is warranted. Furthermore, exploring the application of this method to other tasks beyond image classification, such as object detection and semantic segmentation, would demonstrate its generalizability and applicability. Finally, a thorough theoretical analysis of the interplay between neural collapse and OOD generalization is needed to establish a deeper understanding of its underlying mechanisms and limitations.

More visual insights#

More on figures

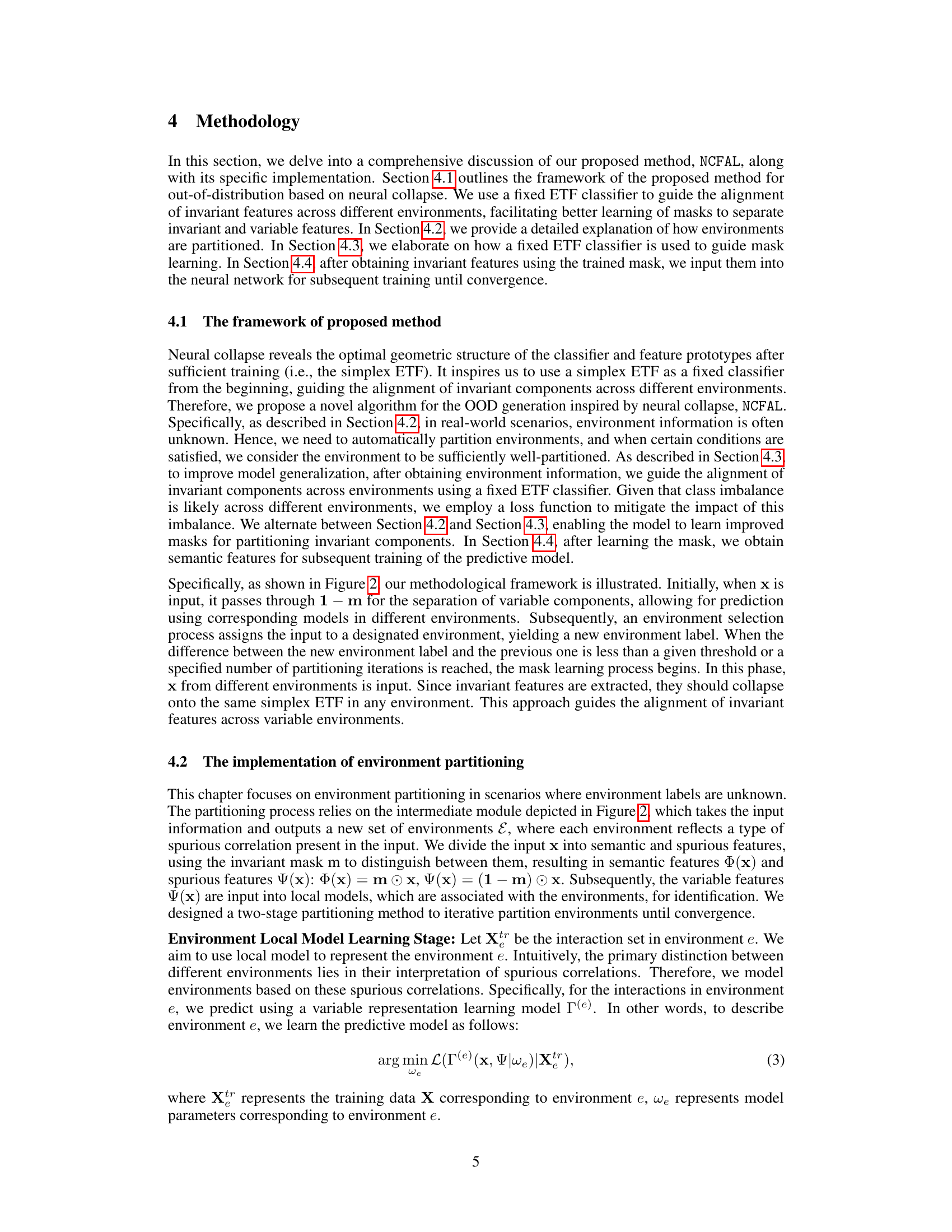

This figure illustrates the overall framework of the proposed Neural Collapse Inspired Feature Alignment (NCFAL) method for out-of-distribution generalization. It’s divided into three parts: 1. Left: Shows the input to the model, which includes the image features (x), the corresponding label (y), and the environment (env). The environment is represented by color, and a new mask (m) is applied to separate the semantic and spurious features. 2. Middle: Illustrates the environment partitioning process, crucial when environment labels are unknown. It uses local models trained on data from different environments to determine the environment of a new input sample by selecting the model with the maximum prediction probability. 3. Right: Focuses on the neural collapse mechanism for learning the mask. The separated semantic features are used with a fixed ETF (Equiangular Tight Frame) classifier to guide the alignment of semantic features across different environments. This ensures that the features learned collapse to the same simplex in each environment, effectively decoupling semantic and spurious features.

This figure illustrates the overall framework of the proposed Neural Collapse Inspired Feature Alignment (NCFAL) method. The left panel shows the input to the model, including the image, label, and environment information. The middle panel details the environment partitioning process for scenarios where environment labels are unknown, showing how the model predicts and selects the appropriate environment for each input. The right panel depicts the process of learning masks to extract invariant features, utilizing neural collapse to ensure alignment across environments. The masks are designed to effectively separate the invariant features (semantic components) from the variable ones (spurious components).

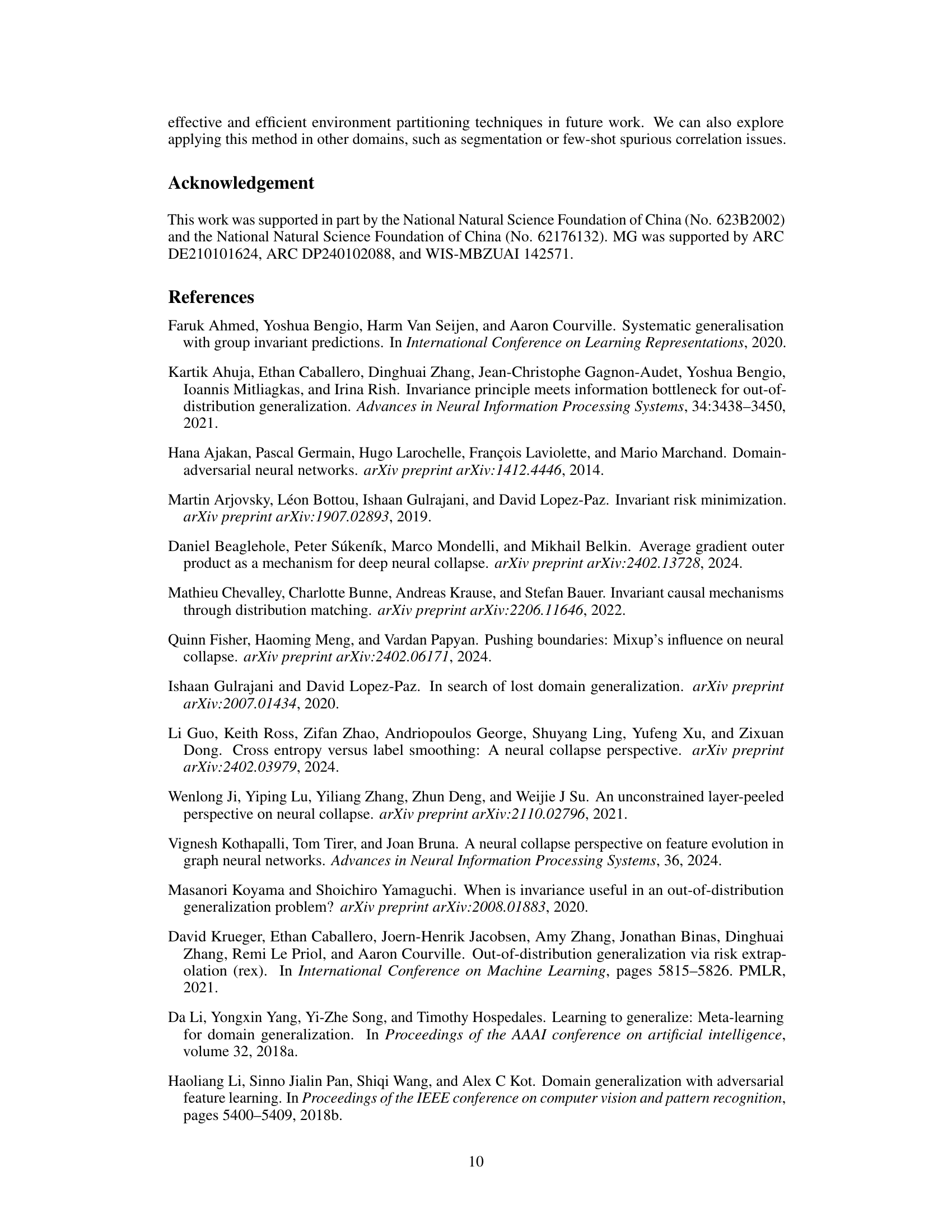

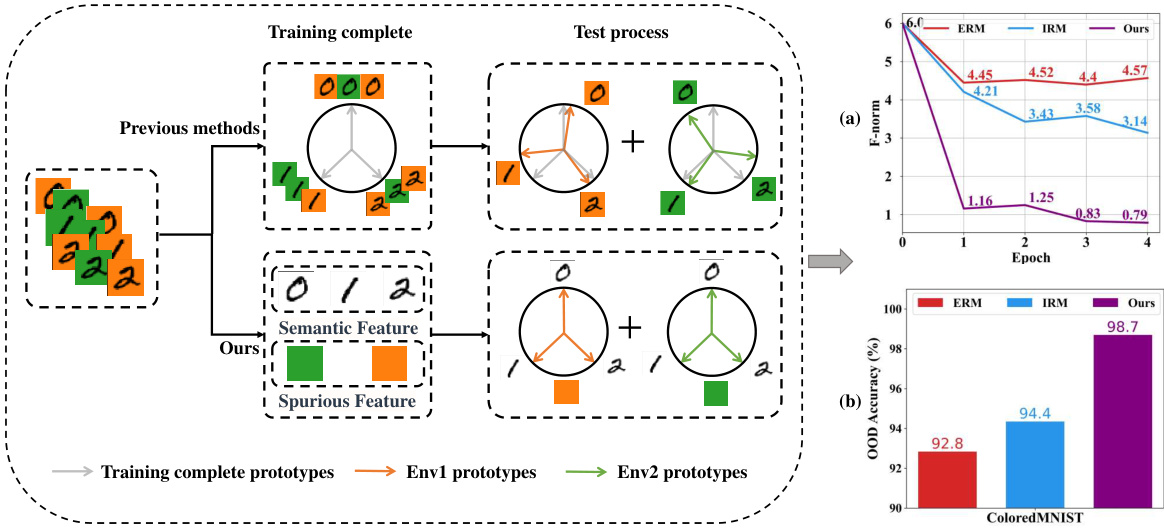

This figure compares the out-of-distribution (OOD) accuracy of the proposed method against a random environment splitting method. The results are shown for two datasets, ColoredMNIST and COCOPlaces. The proposed method demonstrates significantly better performance, indicating its effectiveness in partitioning environments for improved OOD generalization. The bars represent the average OOD accuracy and the error bars represent the standard deviations.

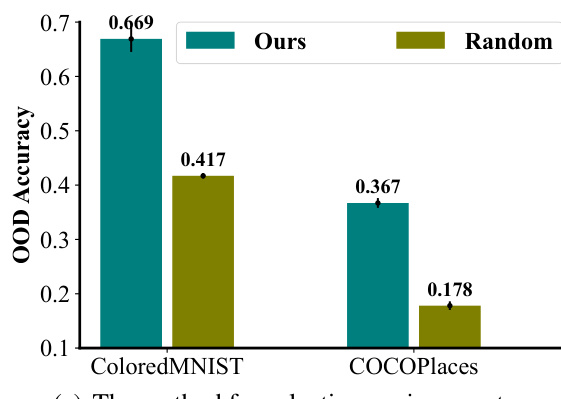

This figure compares the performance of the proposed method for environment partitioning against a random partitioning approach. The left subplot (a) shows that using maximum likelihood probability for environment assignment (the proposed method) outperforms random assignment on both ColoredMNIST and COCOPlaces datasets. The right subplot (b) demonstrates that the optimal performance is achieved when the number of partitioned environments is close to the actual number of environments, further validating the effectiveness of the proposed environment division method.

This figure compares the proposed method against ERM and IRM-based methods using two metrics: F-norm and OOD accuracy. The left panel (a) shows that the proposed method achieves a lower F-norm, indicating better alignment with the standard simplex ETF, a key aspect of neural collapse. The right panel (b) demonstrates superior OOD accuracy of the proposed method on the ColoredMNIST dataset.

More on tables

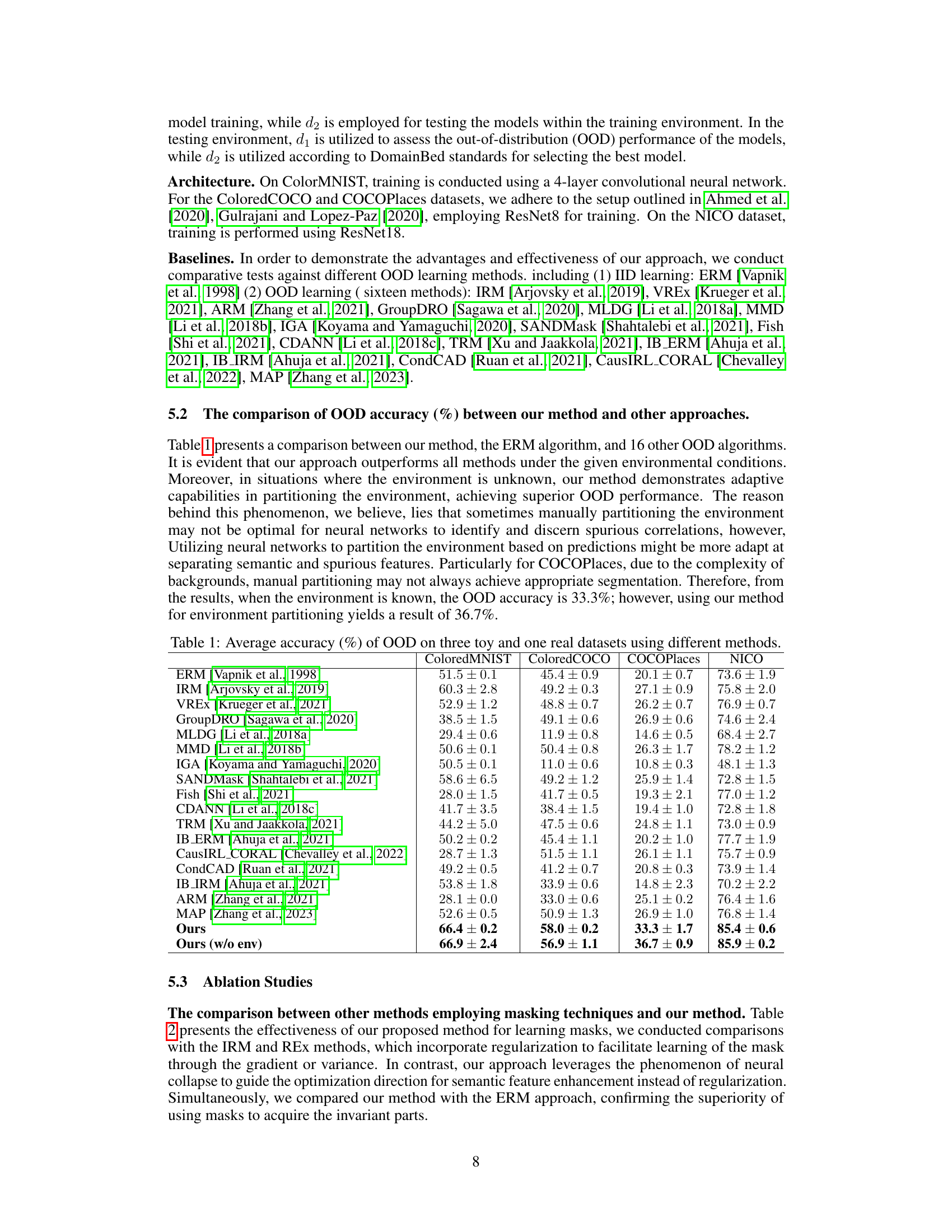

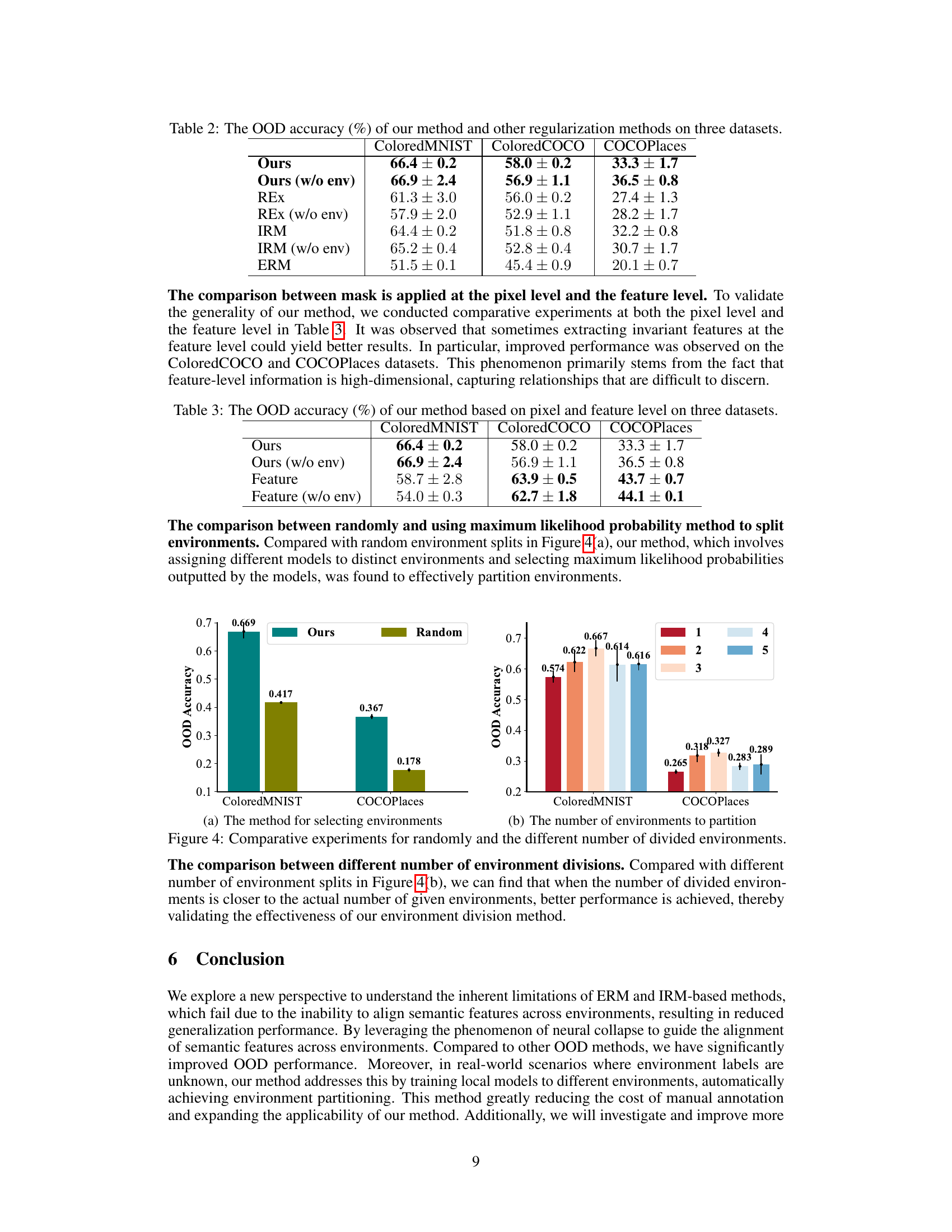

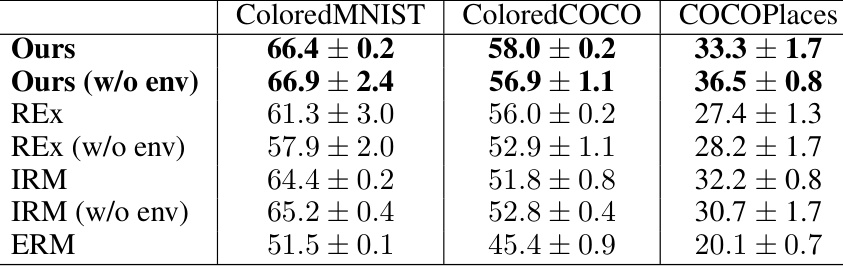

This table compares the out-of-distribution (OOD) accuracy of the proposed Neural Collapse Inspired Feature Alignment (NCFAL) method against other regularization methods (REX and IRM) and the standard Empirical Risk Minimization (ERM) approach on three datasets: ColoredMNIST, ColoredCOCO, and COCOPlaces. The results are shown for both the scenarios where environmental labels are available (the ‘Ours’ and ‘Ours (w/o env)’ rows) and those where they are not (the ‘Ours (w/o env)’ rows). It demonstrates the effectiveness of the proposed method, especially in handling the challenge of spurious correlation learning under OOD conditions.

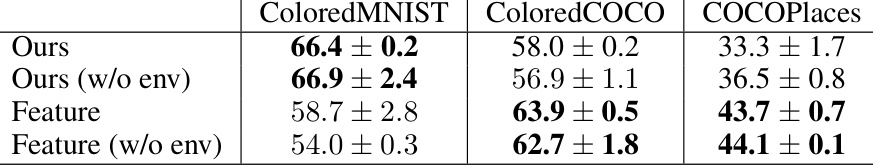

This table compares the out-of-distribution (OOD) accuracy of the proposed Neural Collapse Inspired Feature Alignment (NCFAL) method against other regularization methods across three datasets: ColoredMNIST, ColoredCOCO, and COCOPlaces. The comparison includes results both with and without environment labels, and shows the impact of applying the masking technique at the feature level vs. pixel level. The results demonstrate NCFAL’s performance improvement over other methods, particularly when environment labels are unknown.

Full paper#