↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Many machine learning and scientific computing applications rely on efficiently approximating invariant subspaces, often through techniques like Principal Component Analysis (PCA). Current methods face limitations in computational cost and accuracy, particularly for high-dimensional data. This research addresses these challenges by focusing on generalized eigenvalue problems (GEPs).

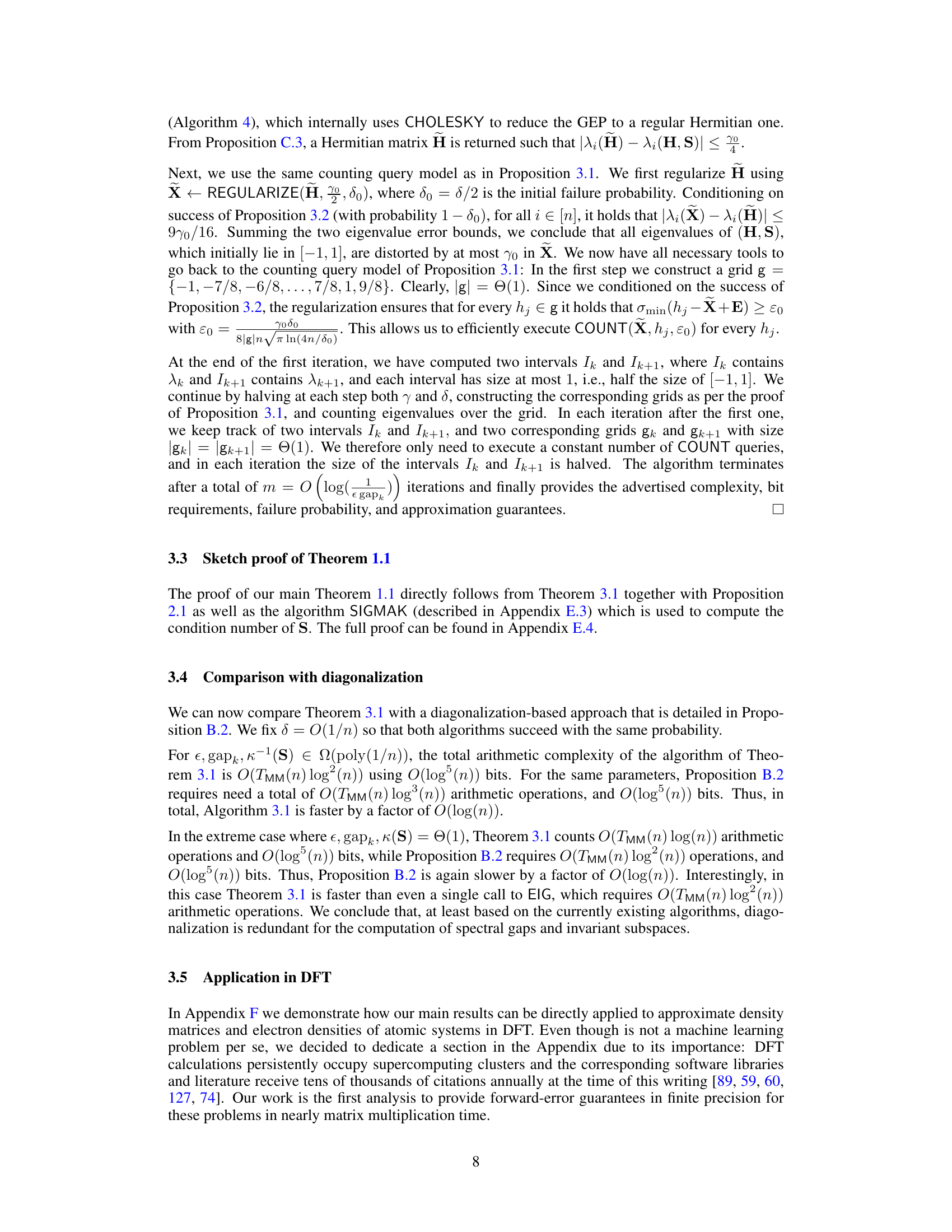

This paper introduces novel algorithms to solve GEPs. These algorithms leverage the symmetry inherent in many problems by using Cholesky factorization and smoothed analysis techniques. Crucially, they provide provable forward error guarantees, meaning the computed solution is demonstrably close to the true solution. The authors demonstrate significantly reduced computational complexity, achieving nearly matrix multiplication time for PCA. This represents a notable improvement in speed and accuracy for a fundamental computational problem.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in machine learning and scientific computing. It offers novel, efficient algorithms for approximating invariant subspaces, a fundamental problem in PCA and other applications. The provably accurate methods and improved complexity bounds are significant contributions, paving the way for faster and more reliable solutions. The improved analysis of the Cholesky factorization is also of independent interest.

Visual Insights#

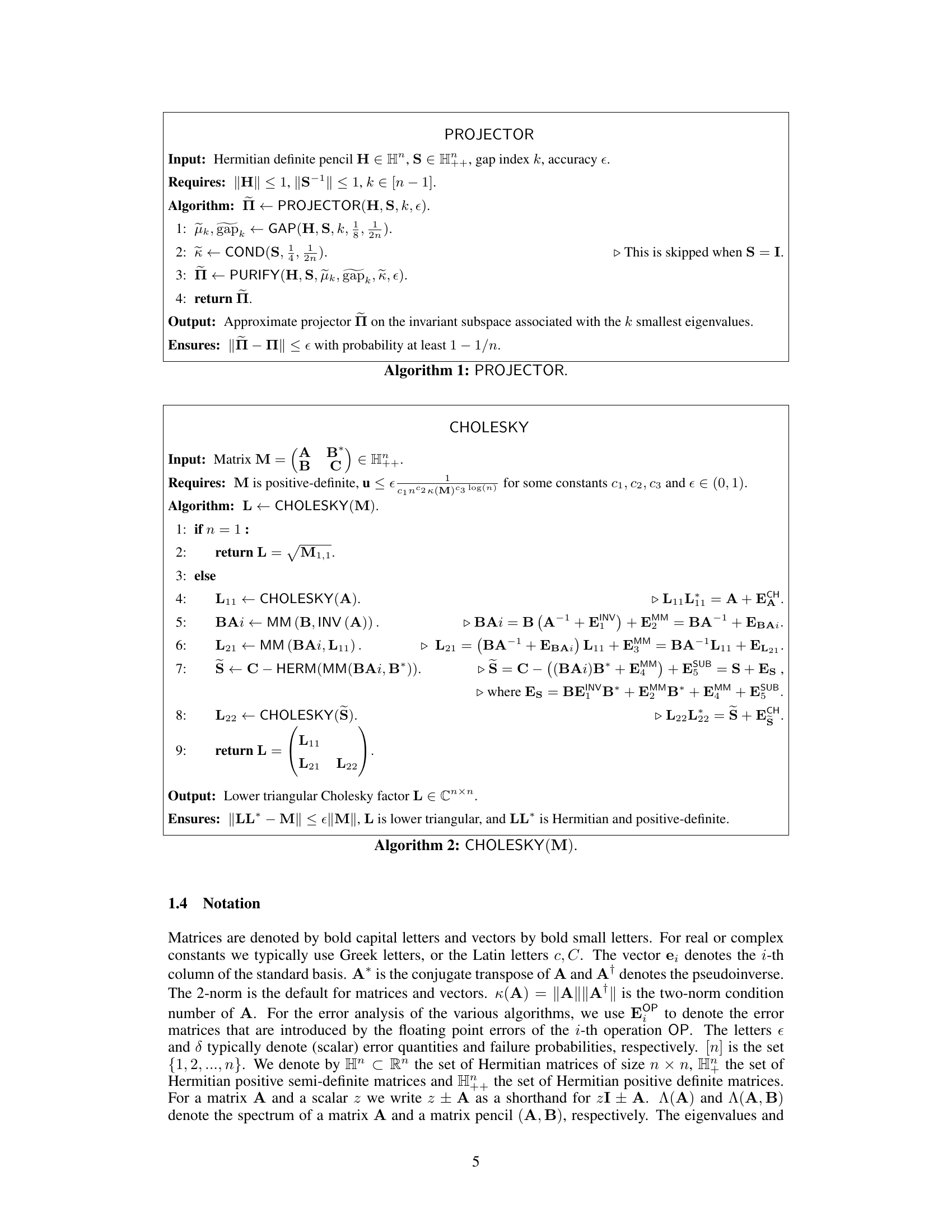

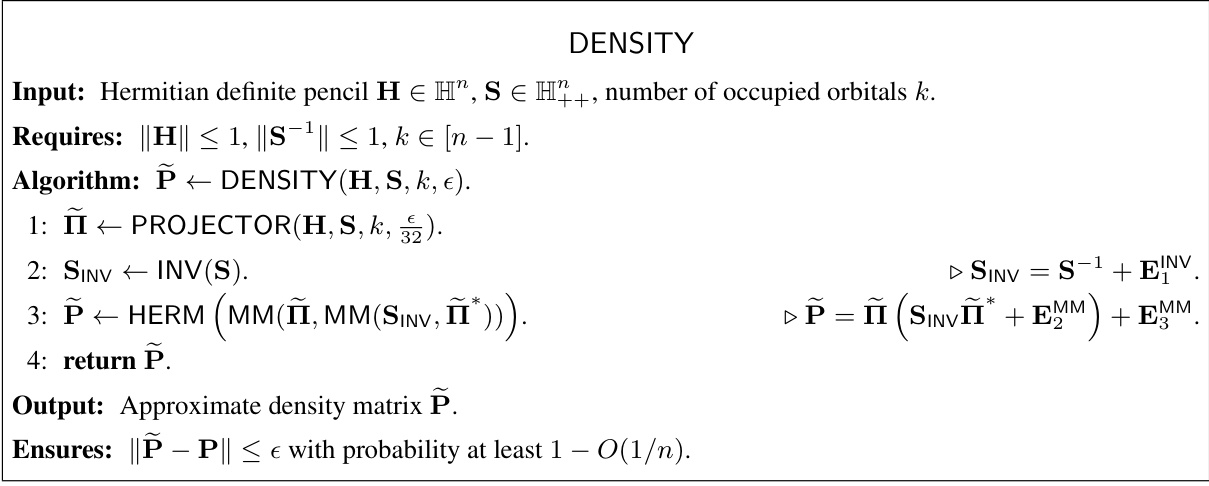

This algorithm takes as inputs a Hermitian definite pencil (H, S), an integer k denoting the number of occupied orbitals, and an accuracy parameter ε, and returns an approximate density matrix P. It first calls the PROJECTOR algorithm to compute an approximate spectral projector Π on the invariant subspace associated with the k smallest eigenvalues of the GEP. Then, it computes the inverse of S, using the INV algorithm from [39]. Finally, it computes the density matrix P using the formula P = ΠS⁻¹Π*. The algorithm ensures that ||P - P|| ≤ ε with probability at least 1 - O(1/n), where P is the true density matrix.

Full paper#