↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Existing research on Adam optimizer’s convergence often relies on restrictive assumptions, such as bounded gradients and specific noise models. This limits the applicability of these results to real-world scenarios. The non-convex smooth setting is further complicated by the adaptive step sizes used in Adam, which can interact complexly with the noise. This makes analysis challenging, and many existing studies provide weak convergence guarantees or require modifications to Adam’s algorithm.

This paper tackles these challenges head-on. It analyzes Adam’s convergence under relaxed assumptions, including unbounded gradients and a general noise model that encompasses various noise types commonly found in practice. Using novel techniques, the authors prove high probability convergence results for Adam, achieving a rate that matches the theoretical lower bound of stochastic first-order methods. The findings extend to a more practical generalized smooth condition, allowing the algorithm to handle a wider range of objective functions encountered in applications. These results are significant because they provide stronger theoretical backing for Adam and improve its practical applicability.

Key Takeaways#

Why does it matter?#

This paper is crucial because it addresses limitations in existing research on Adam’s convergence, especially under less restrictive conditions. This enhances our understanding of Adam’s behavior and expands the applicability of theoretical results to more realistic scenarios in machine learning. The generalized smooth condition used broadens applicability, and the high-probability convergence results are particularly valuable.

Visual Insights#

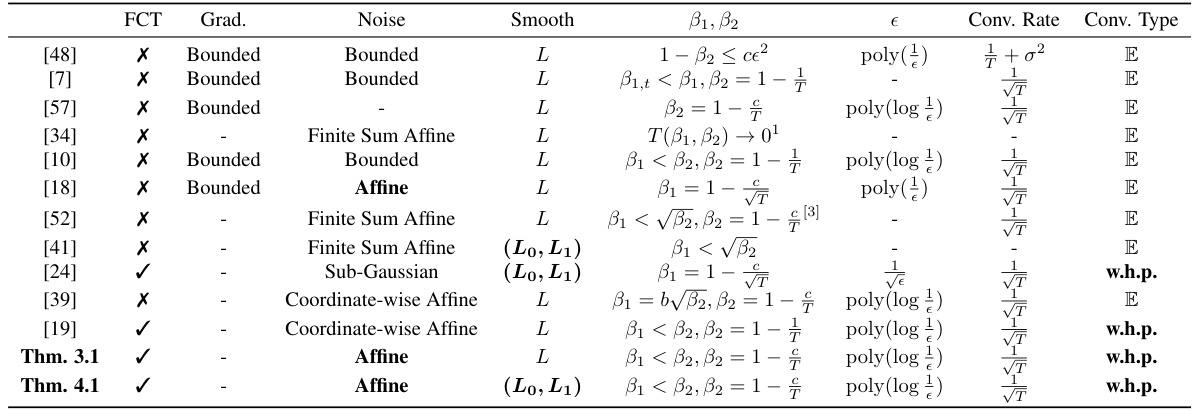

This table compares the convergence results of different Adam analysis methods under various conditions, including gradient type, noise model, smoothness assumption, hyper-parameter settings, and convergence rate. It highlights the differences and improvements made by the authors’ work.

Full paper#