↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Traditional methods for training recurrent neural networks, like backpropagation through time (BPTT), face challenges related to biological plausibility and computational cost. The brain’s learning process, however, remains largely unclear. This paper addresses the need to explore biologically plausible mechanisms for learning in recurrent neural networks.

This study investigates networks pre-trained with feedback control on a motor task. Researchers found that this feedback control approximates optimal gradients, resulting in rapid movement corrections and efficient adaptation to persistent errors. A biologically plausible learning rule enhanced by feedback control improved learning accuracy and efficiency. This research suggests a promising mechanism for biological credit assignment and has implications for both neuroscience and AI.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in neuroscience and machine learning because it offers a novel perspective on how the brain might perform credit assignment, a long-standing challenge in artificial neural networks. The findings provide insights into biologically plausible learning mechanisms and could potentially inform the design of more efficient and robust AI systems. It opens avenues for exploring the role of feedback control in various neural circuits and its implications for both learning and adaptive behavior.

Visual Insights#

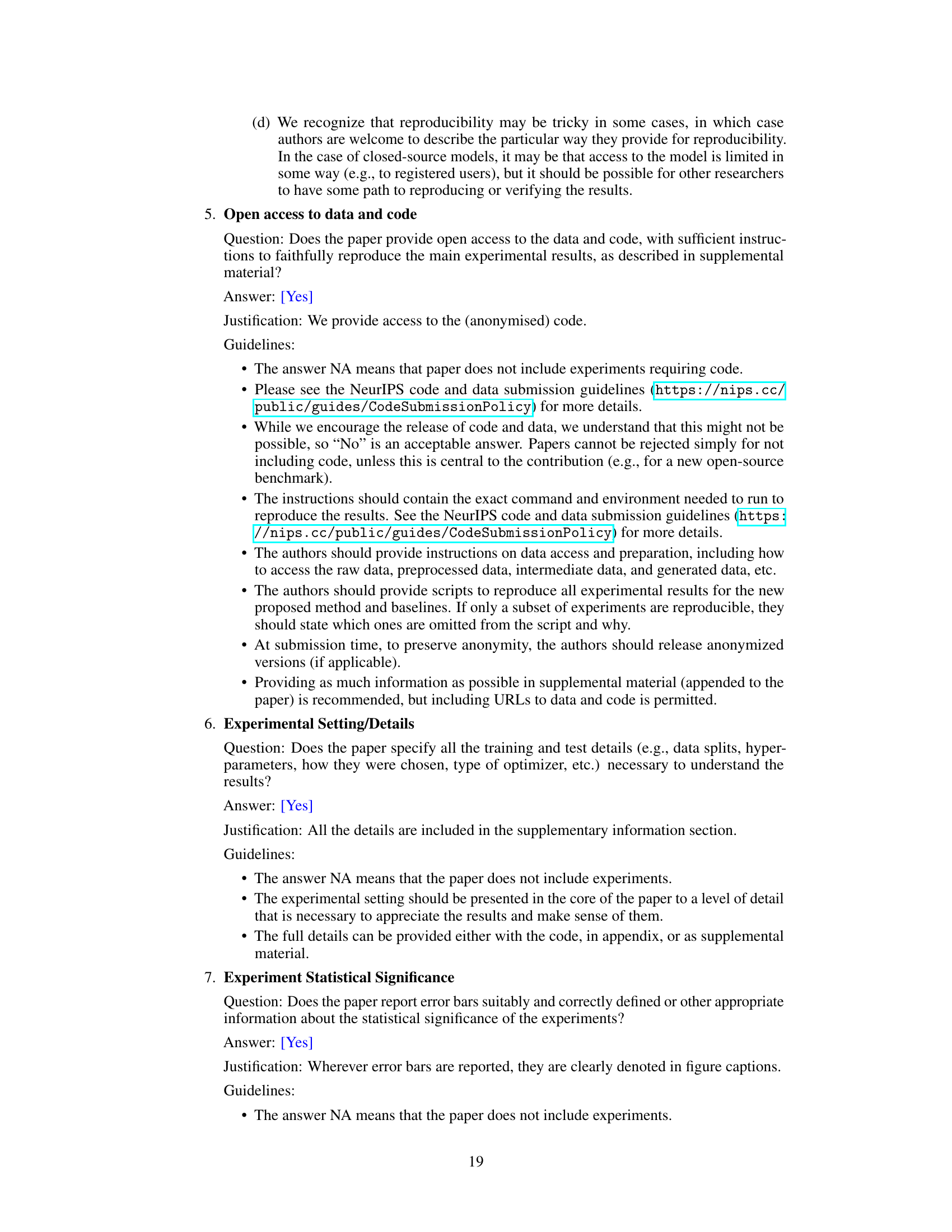

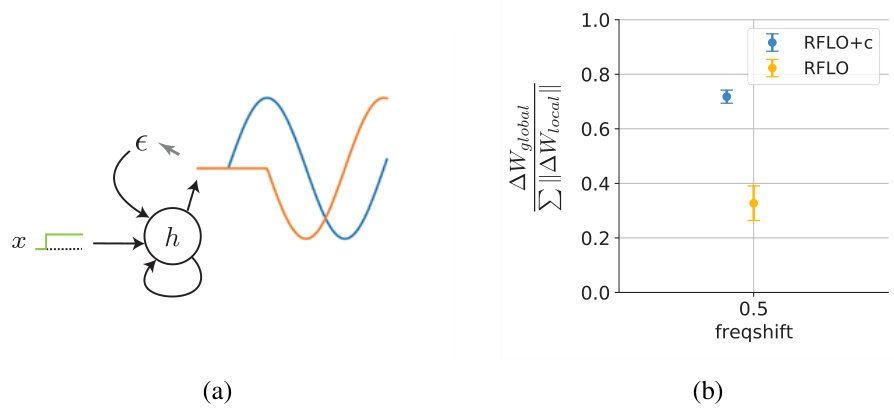

This figure shows the results of a recurrent neural network with feedback control on a motor adaptation task. The network is pre-trained to generate a 2D sigmoidal velocity profile given a target and a go cue. Then it’s tested by rotating the target 30°. (a) shows the immediate adaptation to the perturbation enabled by feedback control. (b) shows that after learning, the network improves further, overcoming the perturbation without ongoing feedback control. This demonstrates the effectiveness of feedback control for motor learning.

In-depth insights#

Feedback Control#

The concept of ‘Feedback Control’ in the context of recurrent neural networks (RNNs) is explored in this research. The core idea revolves around using feedback signals to guide credit assignment and improve learning efficiency in these networks. This approach tackles the challenge of backpropagation through time (BPTT), a computationally intensive method, by approximating the gradient descent using readily available feedback during the network’s operation. This makes the learning process faster and more accurate. The authors demonstrate how this approach leads to rapid adaptation to changing conditions, even when dealing with persistent perturbations. The feedback mechanism implicitly injects a second-order gradient into the system, improving the optimization process. Moreover, local learning rules become more efficient and accurate when working under feedback control due to a decoupling effect that reduces the influence of past activity on current learning. The research highlights a potential biological mechanism of credit assignment in the brain. This approach is biologically plausible and offers a promising direction for future RNN research and our understanding of biological learning.

RNN Adaptation#

Recurrent Neural Networks (RNNs) are powerful tools for sequential data processing, but their adaptation to changing environments remains a challenge. Effective RNN adaptation requires mechanisms that allow the network to efficiently update its internal representations in response to novel input patterns or task demands. This necessitates a balance between stability (maintaining previously learned knowledge) and plasticity (adapting to new information). Several approaches exist, including methods based on incremental learning, where new data is incorporated without catastrophic forgetting of previous knowledge, and meta-learning, where the network learns how to learn new tasks efficiently. Feedback control emerges as a promising technique; it involves injecting error signals to modulate the network’s internal dynamics, guiding the adaptation process. Furthermore, local learning rules that update network weights based on recent activity and error signals offer a biologically plausible mechanism for adaptation. These techniques must address challenges like vanishing/exploding gradients which hinder effective training and learning in RNNs. Research into novel architectures, such as those with specialized feedback pathways or adaptive learning rules, is crucial for achieving robust and efficient RNN adaptation in dynamic systems.

Gradient Approx#

Approximating gradients is crucial for biologically plausible learning in recurrent neural networks (RNNs) due to the inherent difficulties of implementing backpropagation through time (BPTT) in biological systems. The core challenge lies in the network’s recurrent architecture, where errors propagate through time and space, making exact gradient calculation computationally expensive. The paper likely explores various approximation strategies, such as local learning rules that operate on smaller portions of the network, or feedback mechanisms that help guide credit assignment by providing online error signals. These techniques trade-off accuracy for biological feasibility. A key area of investigation might be how well these approximations capture the true gradient, especially in the presence of feedback control, which itself might be considered a form of gradient approximation. The analysis would likely involve comparing the approximated gradient to the true gradient under various conditions, potentially evaluating their impact on learning speed and accuracy. The results would likely show a trade-off between approximation accuracy and learning efficiency, suggesting that even imperfect gradient approximations can be effective in RNNs, particularly when combined with adaptive feedback control.

Bio-Plausible Rules#

The concept of “Bio-Plausible Rules” in the context of neural network research is crucial for bridging the gap between artificial and biological learning mechanisms. It emphasizes the need for learning rules that closely mirror the processes occurring in biological neural systems, rather than relying solely on computationally efficient, but biologically unrealistic, algorithms such as backpropagation. Bio-plausible rules often incorporate local learning, meaning that synaptic weight adjustments are based solely on information available at the synapse or local neuronal neighborhood. This contrasts with backpropagation, which requires global information about errors throughout the network. Another key aspect is the reliance on biologically realistic neural dynamics and timing. Biological neurons exhibit complex temporal behavior, and bio-plausible learning rules should explicitly model this behavior. These rules might involve spike-timing-dependent plasticity (STDP) or other biologically observed phenomena. The development and testing of bio-plausible rules is a critical area of research that could lead to deeper understanding of biological learning and more robust, efficient, and biologically inspired artificial neural networks.

Future Work#

The paper’s discussion of future work suggests several promising research directions. Extending the findings to more complex network architectures and a wider range of tasks is crucial for establishing the generality of the feedback control mechanism. Investigating different biological implementations of the feedback signal and local learning rules would greatly enhance the model’s biological plausibility. Furthermore, exploring less explicit feedback mechanisms, moving beyond the simple linear projection used in this study, presents an exciting challenge that could yield valuable insights into the brain’s learning processes. Analyzing the impact of noise and other biological constraints on the performance of feedback-controlled networks is essential for building more realistic models. Finally, exploring the interaction between feedback control and other learning mechanisms is needed to achieve a more complete understanding of how the brain learns. These future investigations have the potential to significantly advance both our understanding of biological learning and the development of more efficient and biologically inspired artificial learning systems.

More visual insights#

More on figures

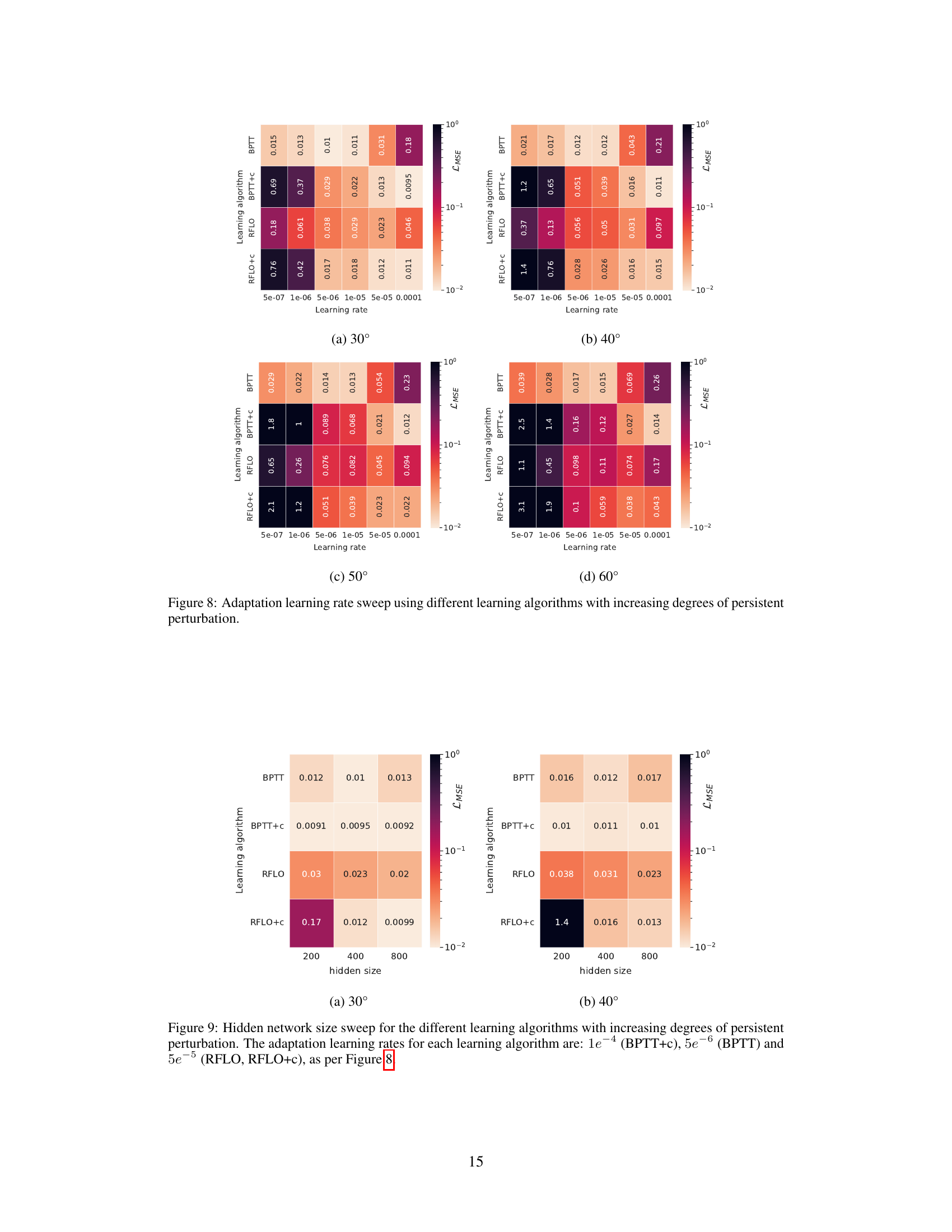

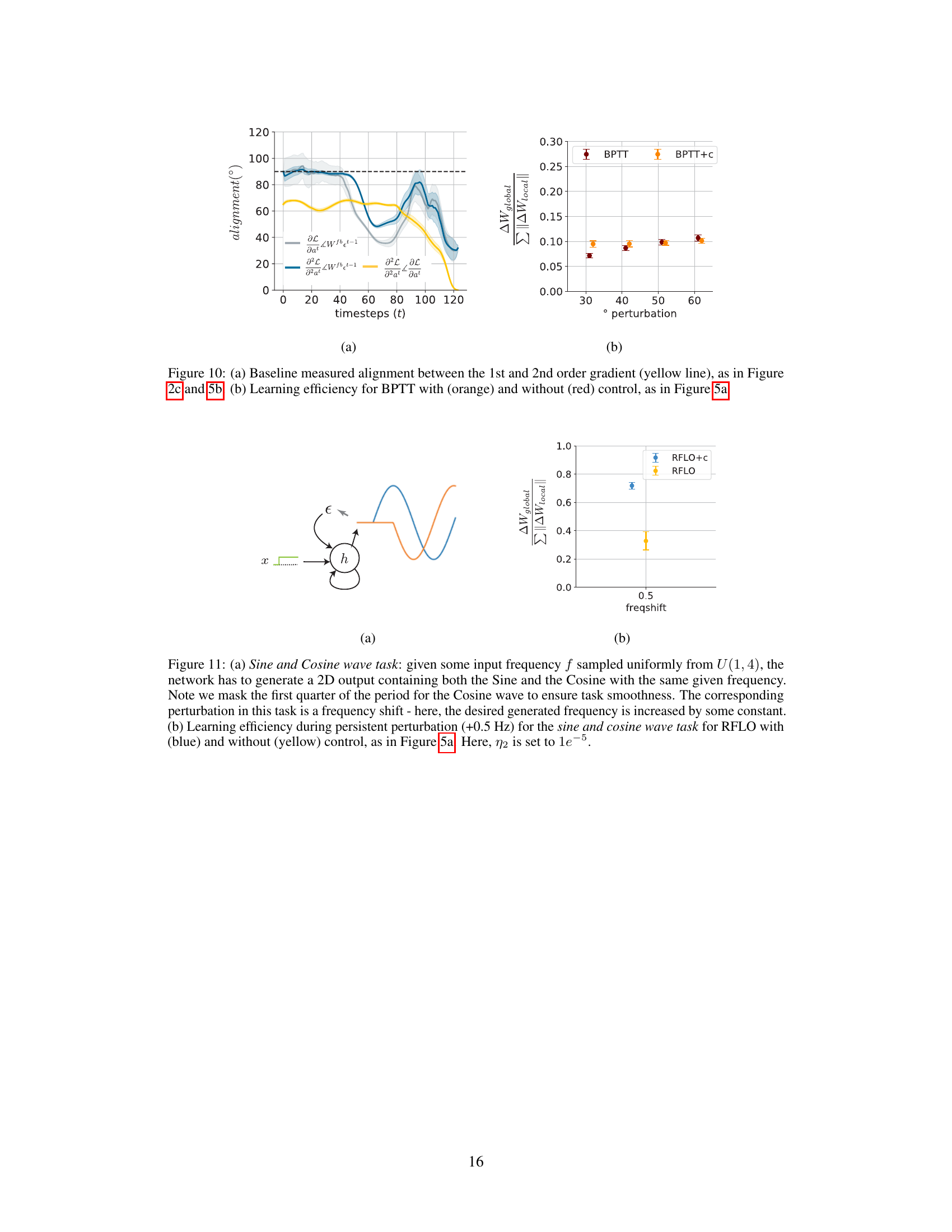

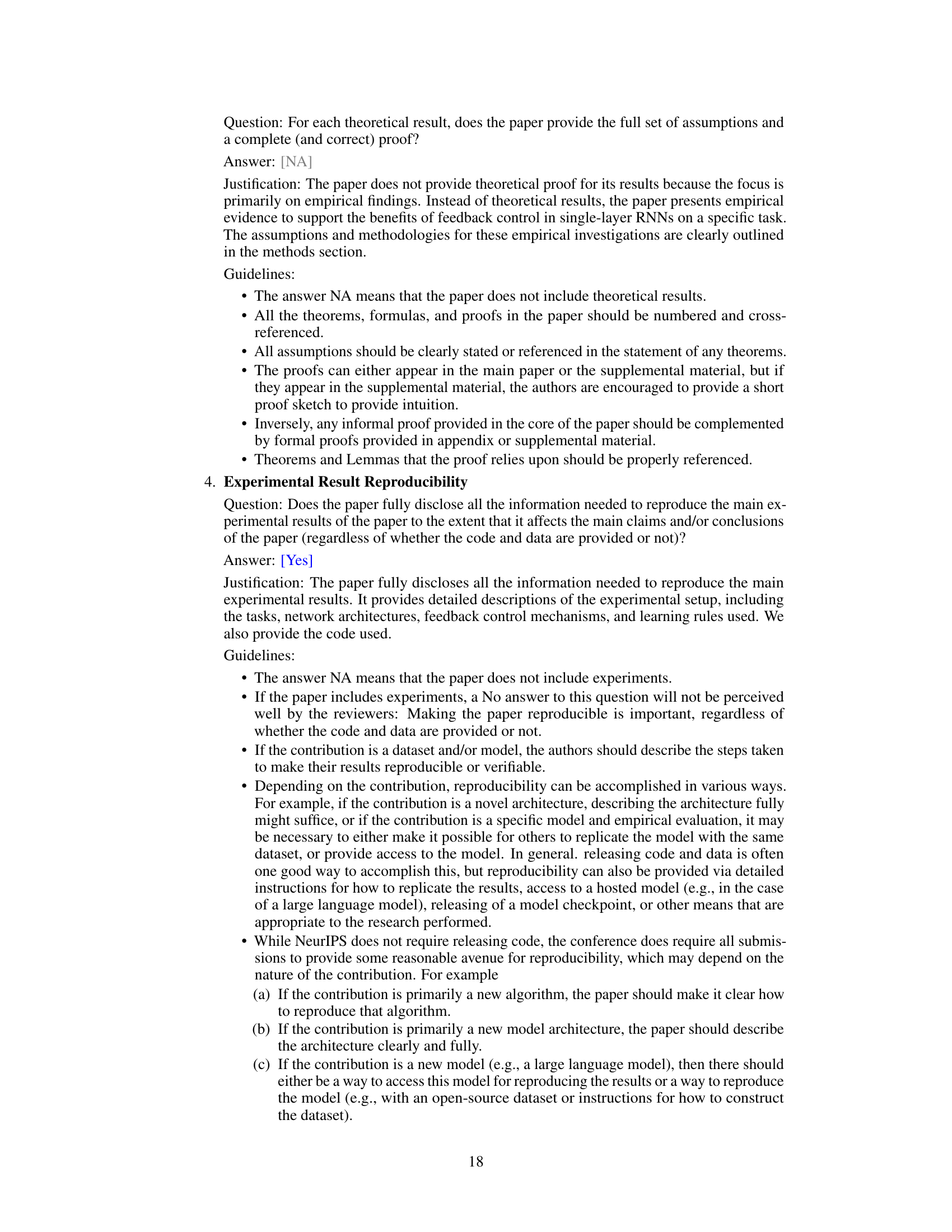

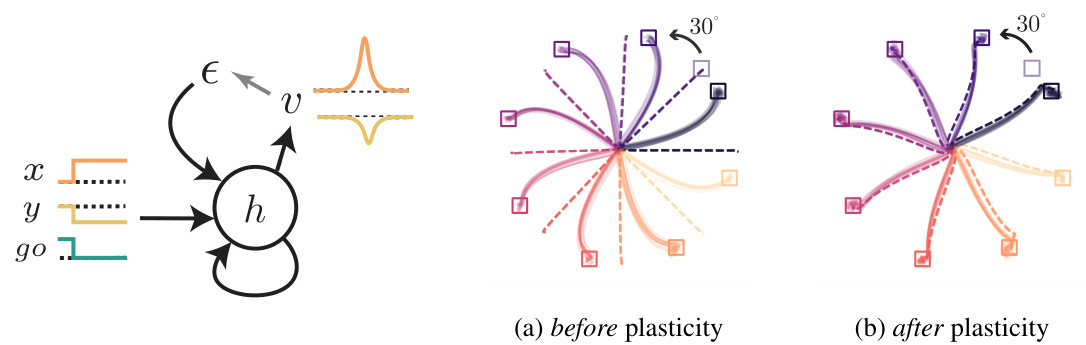

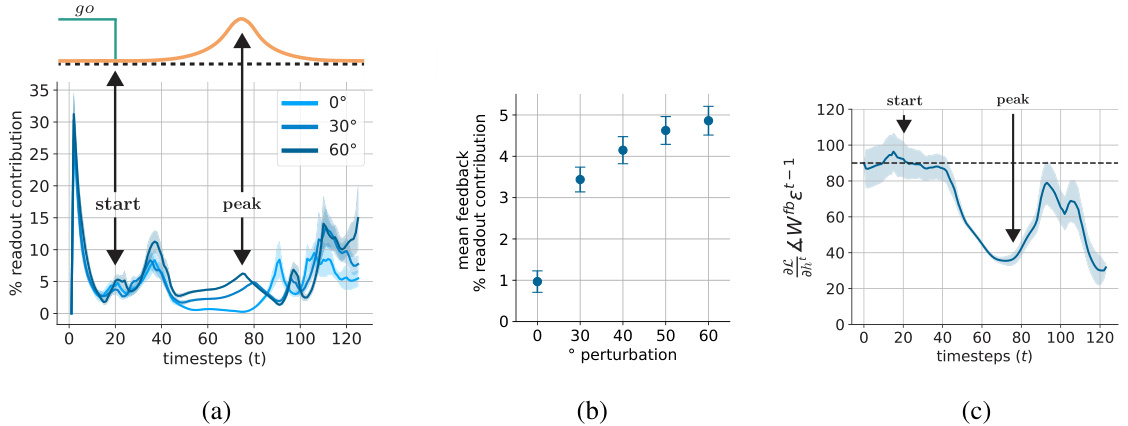

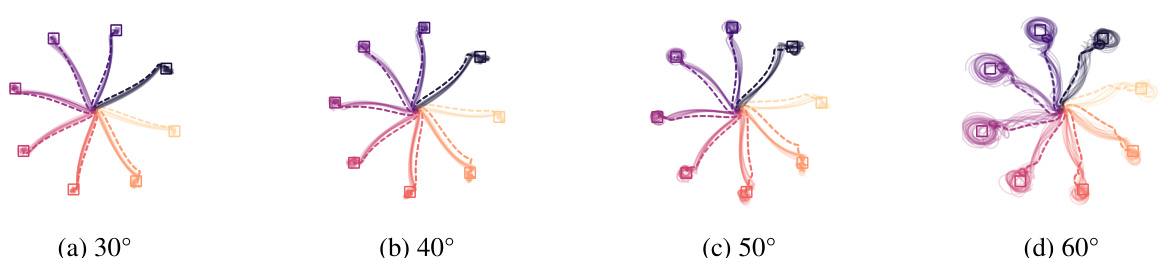

This figure demonstrates the ability of recurrent neural networks with feedback control to adapt to both acute and persistent task perturbations. In (a), a network pre-trained on a reaching task is shown adapting to an acute 30° rotation of the target during a single trial. The network with feedback control adapts rapidly, whereas a network without feedback control does not. In (b), the same network is shown adapting to the persistent perturbation using a local learning rule. With feedback control, the performance improves over multiple trials; without feedback control, it does not.

This figure shows the results of the experiment comparing the performance of recurrent neural networks with and without feedback control during persistent task perturbation. Panel (a) shows the training loss over time for networks trained with feedback-driven local learning (RFLO+c), and networks trained with the same rule without feedback control. The results indicate that while the feedback control helps initially, the networks eventually become independent of it. Panel (b) shows the final test performance after 1000 trials (with feedback control turned off) for networks trained with RFLO with and without feedback control, and for networks trained with online BPTT with and without feedback control. The results reveal that feedback control significantly improves the accuracy of local learning during persistent perturbation.

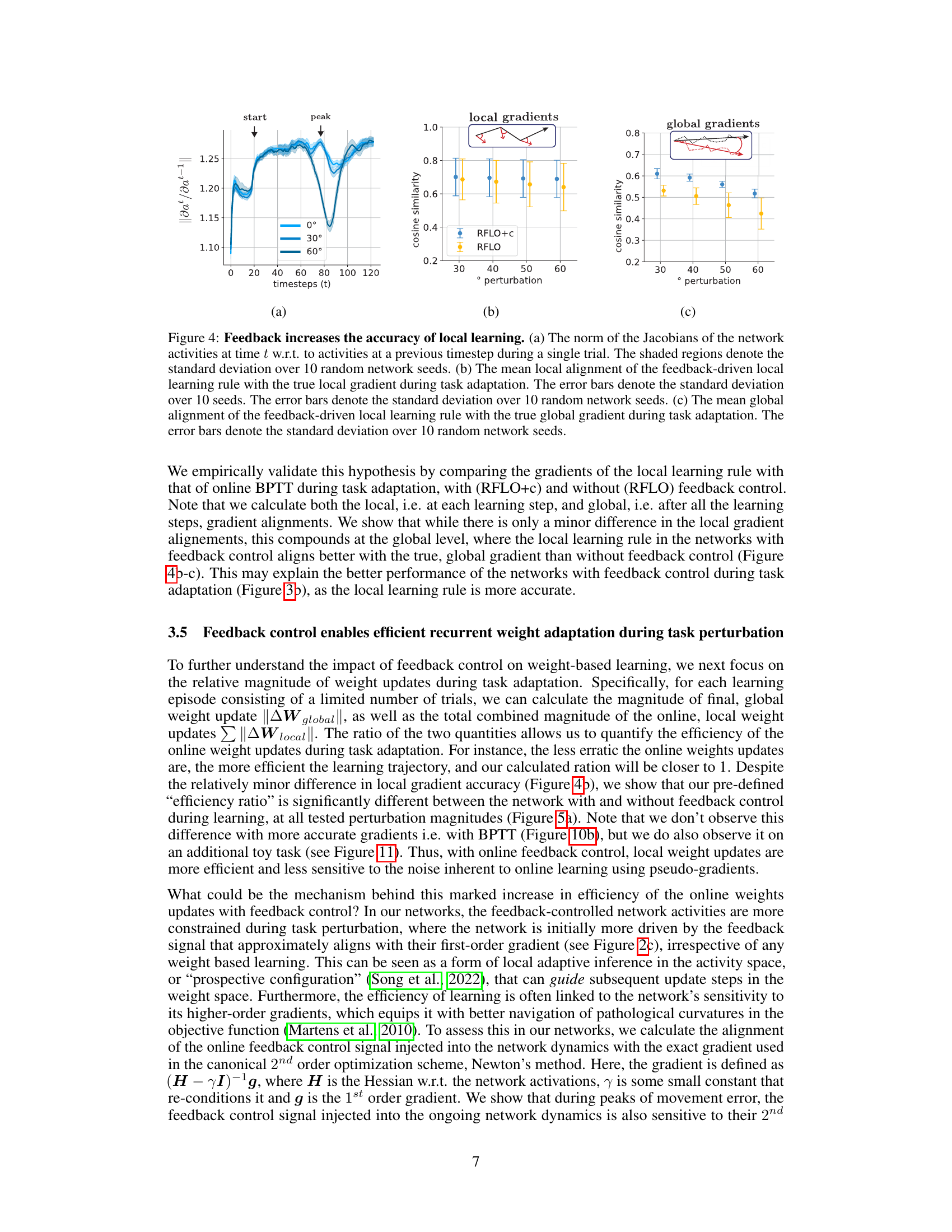

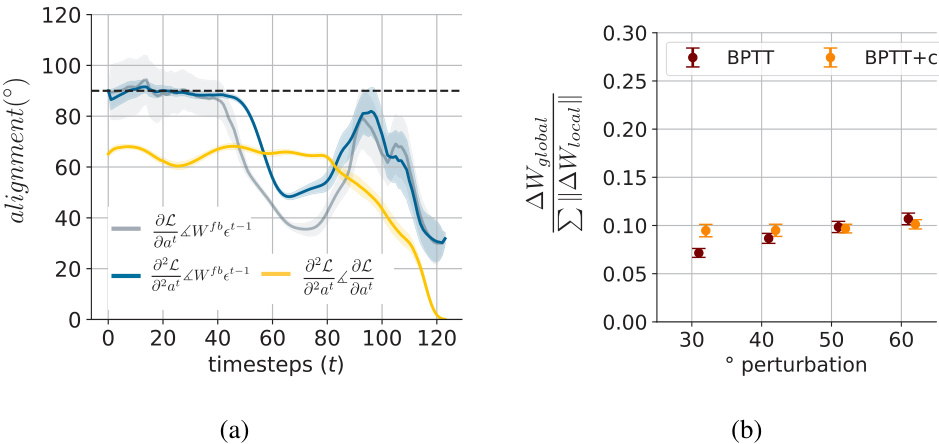

This figure shows how feedback control improves the accuracy of local learning rules during task adaptation. Panel (a) displays the norm of the Jacobians of network activities at time t relative to activities at a previous timestep (t-1) during a single trial, demonstrating the impact of task perturbation. Panels (b) and (c) show the mean local and global alignment between the feedback-driven local learning rule and the true gradients during task adaptation, indicating improved accuracy with feedback control.

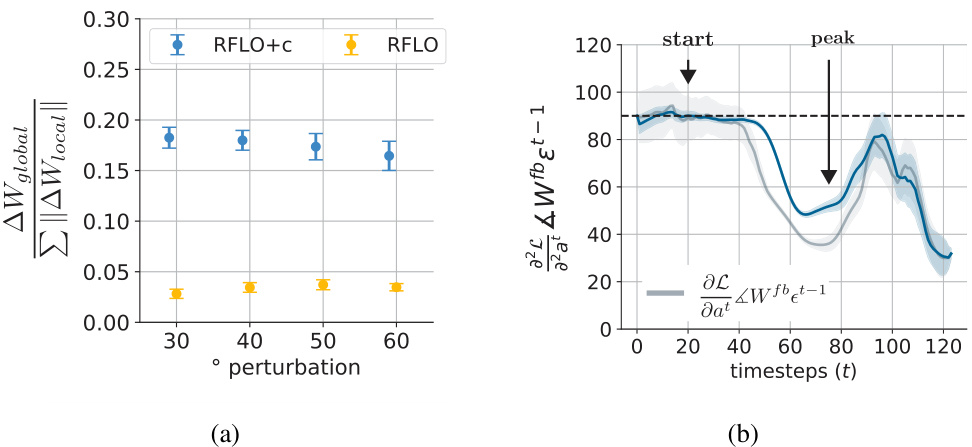

This figure demonstrates the impact of feedback control on the efficiency of local learning during task adaptation. Panel (a) shows that the ratio of global to local weight updates is significantly higher for networks with feedback control, indicating improved efficiency. Panel (b) shows that the feedback control signal aligns well with the second-order gradient, suggesting that feedback control implicitly injects second-order information, improving learning efficiency.

This figure demonstrates the rapid adaptation of recurrent neural networks with feedback control to both acute and persistent task perturbations. Part (a) shows the immediate adaptation during the first trial of a 30° rotation in target position, highlighting the superior performance with feedback control. Part (b) shows the further improvement in performance when the network uses a local learning rule with persistent perturbation.

This figure shows the results of an experiment where recurrent neural networks (RNNs) with and without feedback control were tested on a motor task with a 30° perturbation. The RNN with feedback control adapted rapidly to the acute perturbation during the first trial. Additionally, with a local learning rule, the feedback-controlled RNN improved further during persistent perturbation, demonstrating the benefit of feedback control for adapting to both acute and persistent perturbations.

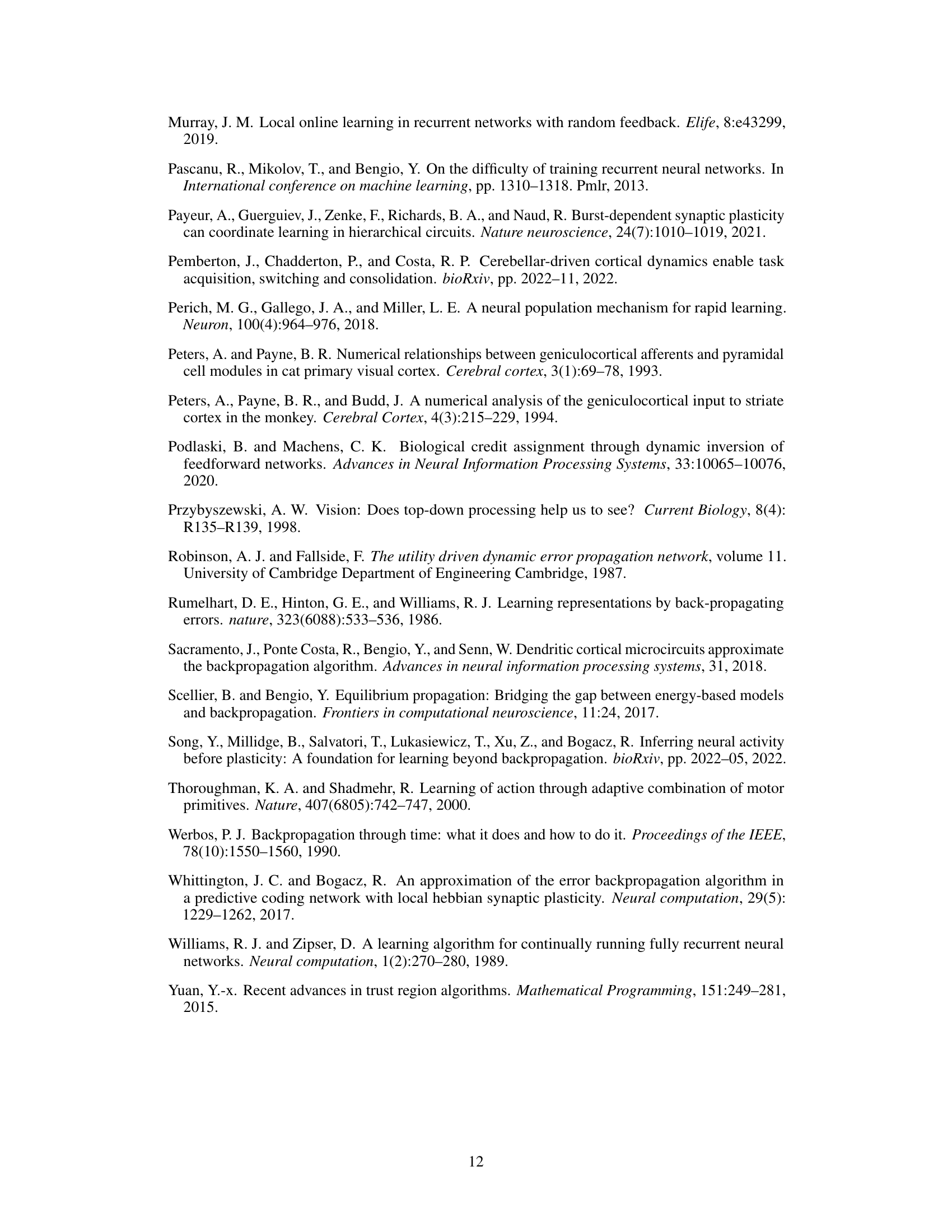

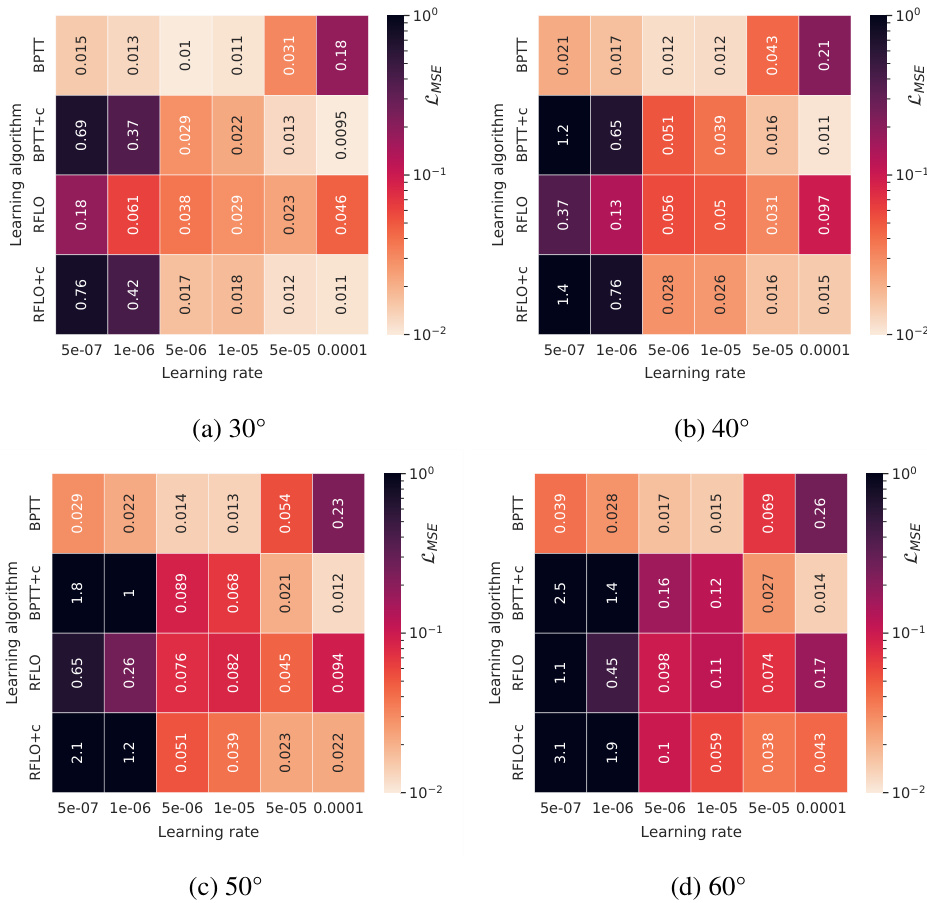

This figure shows the results of a learning rate sweep for different learning algorithms (RFLO+c, RFLO, BPTT+c, BPTT) across various degrees of persistent perturbation (30°, 40°, 50°, 60°). Each heatmap visualizes the mean squared error (MSE) for each algorithm at different learning rates. The color intensity represents the MSE value, with darker colors indicating lower error. This helps to determine the optimal learning rate for each algorithm under different levels of perturbation.

This figure displays the results of a learning rate sweep conducted on four different learning algorithms (BPTT, BPTT+c, RFLO, and RFLO+c) at various degrees of persistent perturbation. The heatmaps show the mean squared error (MSE) achieved by each algorithm across different learning rates, with higher MSE indicating poorer performance. The results suggest that RFLO+c (which incorporates feedback control and a local learning rule) achieves the best performance, particularly at higher perturbation magnitudes.

This figure demonstrates how feedback control improves the accuracy of local learning rules in recurrent neural networks during task adaptation. Panel (a) shows the norm of the Jacobians of the network activities, indicating how sensitive the network is to its past activity. Panel (b) shows the local alignment of the feedback-driven local learning rule with the true local gradient, demonstrating that feedback increases accuracy. Panel (c) shows the global alignment, further supporting the benefit of feedback in improving accuracy.

This figure shows how recurrent neural networks with feedback control adapt to task perturbations. Panel (a) demonstrates rapid adaptation to an acute perturbation during the first trial, highlighting the benefit of feedback control. Panel (b) illustrates further performance improvement when using a local learning rule during persistent perturbation, showcasing the network’s ability to learn and correct for ongoing errors.

Full paper#