↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

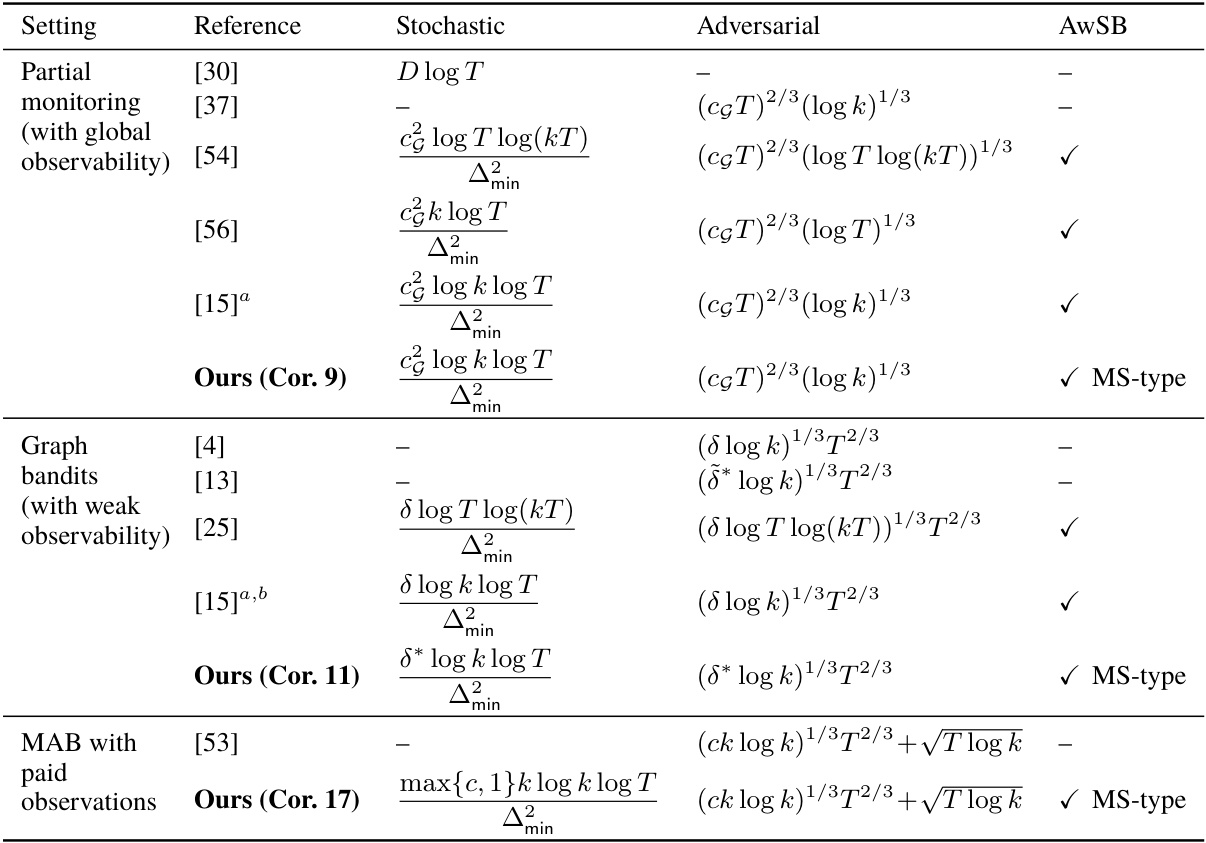

Many online learning problems, such as partial monitoring and graph bandits, exhibit a minimax regret of O(T²/³), representing a significant challenge for algorithm designers. Existing adaptive learning rates primarily focus on problems with a different regret bound, lacking efficient solutions for these “hard” problems. Additionally, most adaptive learning rates aren’t designed to seamlessly handle both stochastic and adversarial environments.

This research introduces a novel adaptive learning rate framework for FTRL, which greatly simplifies the design of online learning algorithms. By meticulously matching stability, penalty, and bias terms in the regret bound, a surprisingly simple learning rate is obtained. This method improves existing best-of-both-worlds regret upper bounds for partial monitoring, graph bandits, and multi-armed bandits with paid observations. The resulting learning rates are surprisingly simple compared to existing ones and achieve nearly optimal regret bounds in both stochastic and adversarial scenarios.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in online learning because it introduces a novel adaptive learning rate framework for problems with a minimax regret of O(T²/³). This expands the applicability of Follow-the-Regularized-Leader (FTRL) algorithms, which are known for their effectiveness in various online learning scenarios, but have been limited in their use with indirect feedback. The proposed framework offers significantly simpler algorithms with improved regret bounds compared to existing approaches. It also provides a unified approach to achieving the best-of-both-worlds (BOBW) guarantee for hard problems, significantly advancing the field.

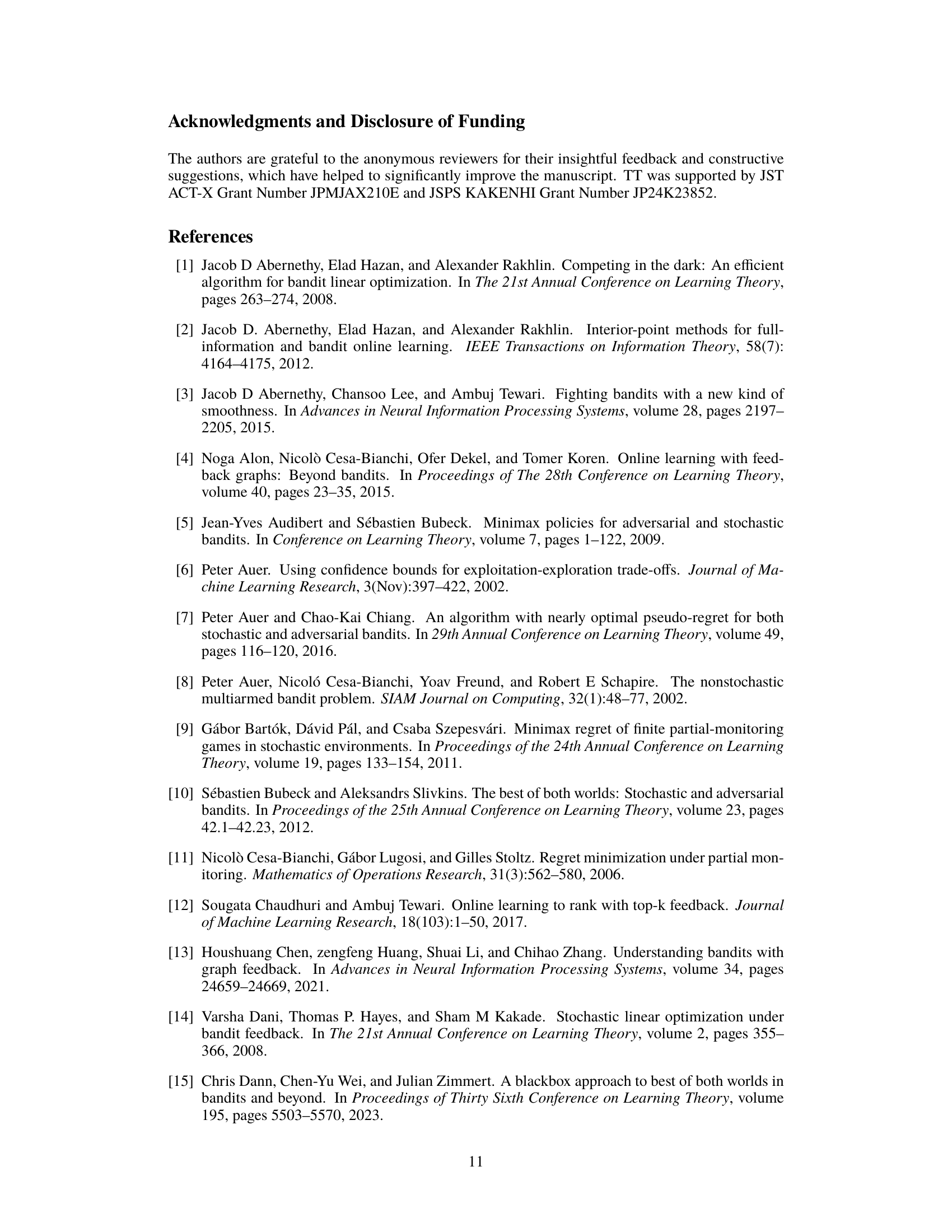

Visual Insights#

This table compares the regret bounds (in both stochastic and adversarial settings, and adversarial setting with self-bounding constraint) achieved by different algorithms for three online learning problems: partial monitoring, graph bandits, and multi-armed bandits with paid observations. The comparison highlights the improvements achieved by the proposed algorithm, particularly in achieving the best-of-both-worlds (BOBW) property.

Full paper#