↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Many real-world problems involve uncertainty in data patterns. Online convex optimization (OCO) tackles this by repeatedly making decisions based on sequentially revealed information; however, existing universal OCO algorithms are computationally expensive, requiring numerous projections onto the domain for each decision. This poses a significant hurdle for large-scale applications.

This research presents a novel universal OCO algorithm that addresses this efficiency issue. By employing a surrogate loss function and a unique expert-loss design, the algorithm is able to achieve optimal regret bounds for multiple types of convex functions with just one projection per round. This substantial efficiency gain is demonstrated through rigorous theoretical analysis and empirical experiments.

Key Takeaways#

Why does it matter?#

This paper is important because it significantly improves the efficiency of universal online convex optimization algorithms. Reducing the number of projections to only one per round dramatically decreases computational cost, making these algorithms practical for large-scale applications. This work also offers optimal regret bounds for various function types, advancing the state-of-the-art in online learning. The new techniques developed could inspire further research in projection-efficient algorithms and universal online learning.

Visual Insights#

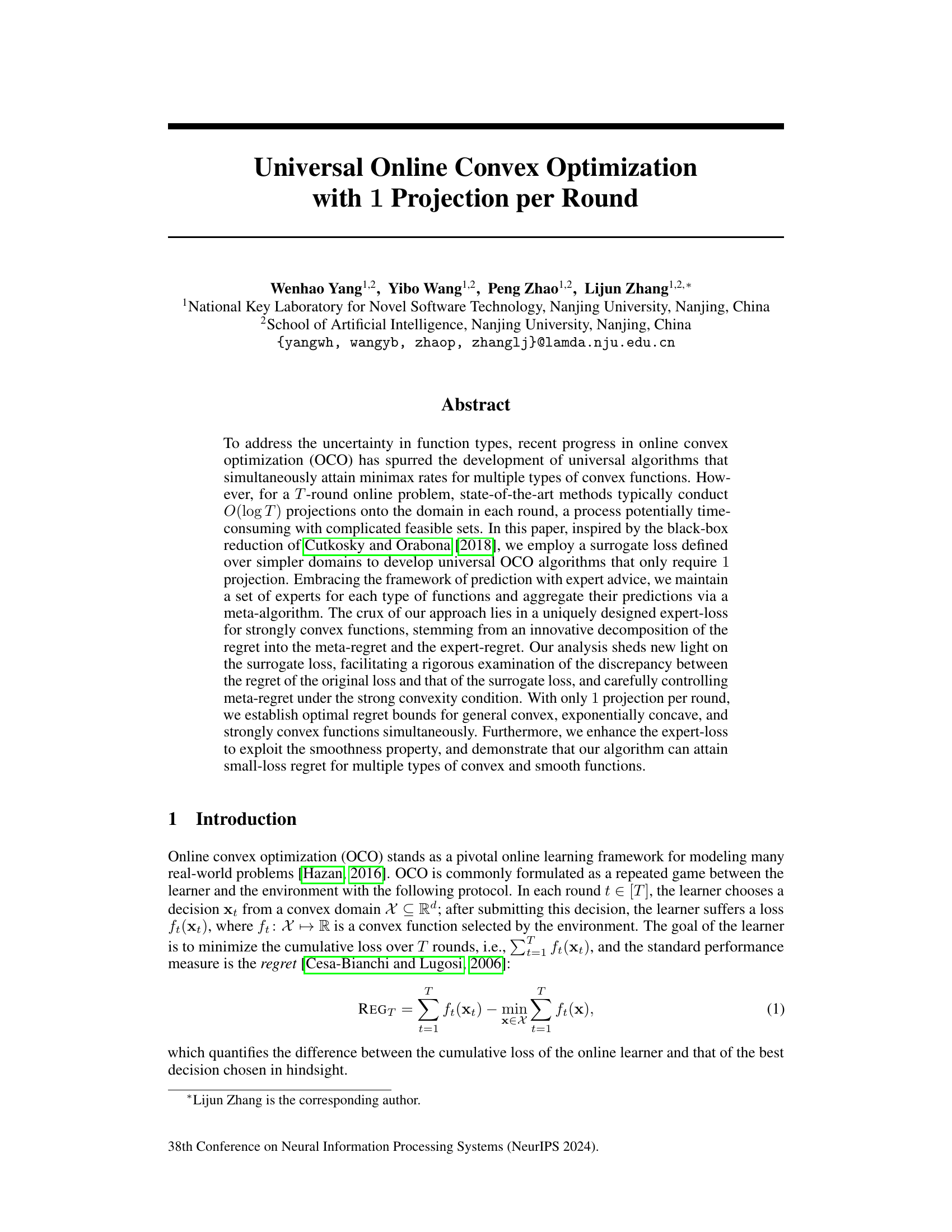

This figure compares the performance of the proposed algorithm against several other universal online convex optimization algorithms. It shows both the cumulative regret (the difference between the algorithm’s cumulative loss and the loss of the best single decision in hindsight) and the running time for three types of convex functions: exp-concave, strongly convex, and general convex functions. The results demonstrate that the proposed algorithm achieves comparable or better regret while having significantly faster running times than other methods.

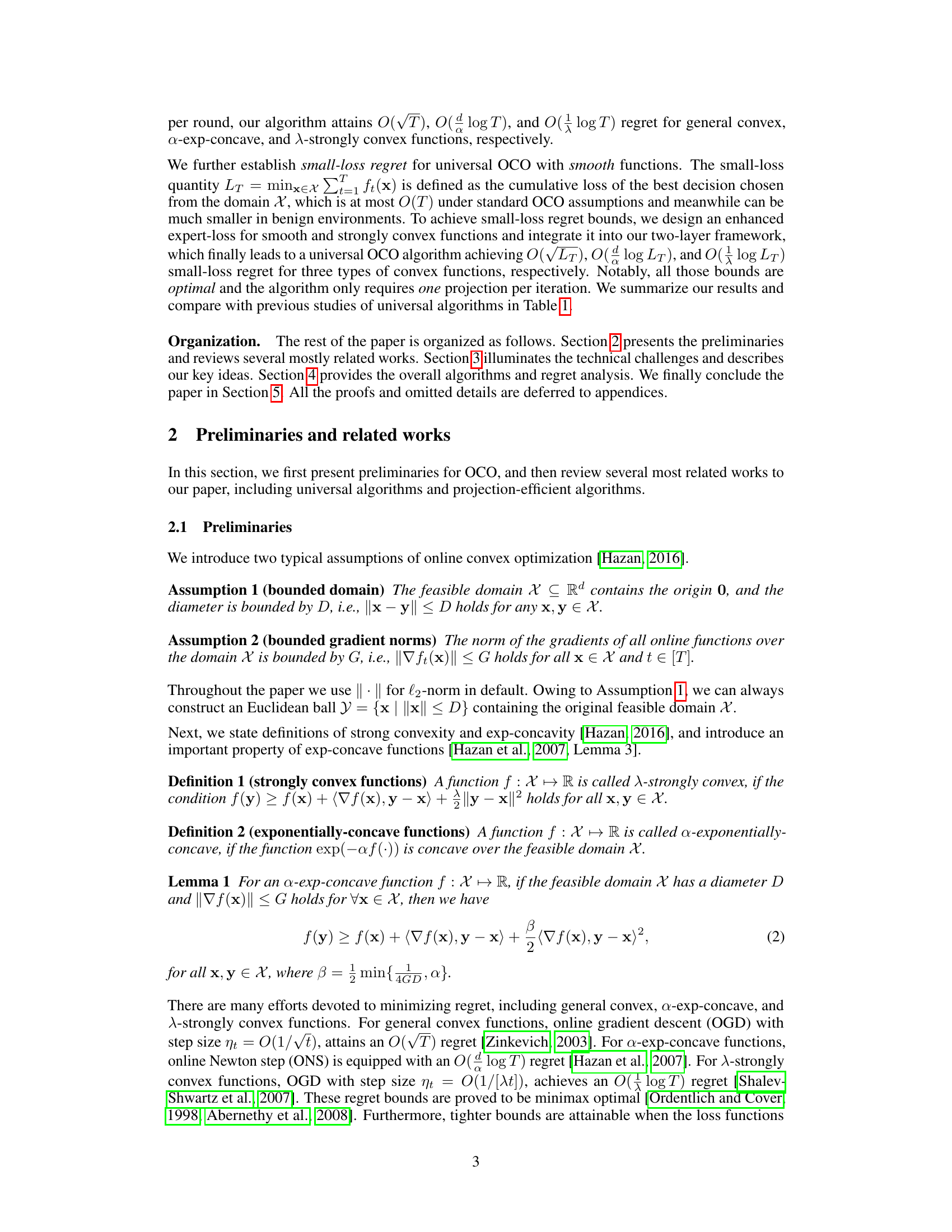

This table compares the proposed universal online convex optimization (OCO) algorithm with existing methods. It shows the regret bounds achieved by each algorithm for different types of convex functions (convex, exponentially concave, strongly convex), along with the number of projections required per round. The table highlights that the proposed algorithm achieves optimal regret bounds with only 1 projection per round, unlike existing methods that typically require O(log T) projections.

Full paper#