↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Inverse rendering aims to extract geometry, materials and lighting from 2D images, but existing methods struggle with high-illumination scenes containing shadows and reflections which hinder accurate material reconstruction. These scenes present challenges because shadows and reflections complicate the separation of object properties (albedo, roughness) from lighting conditions, leading to inaccurate results.

RobIR tackles this by employing ACES tone mapping to handle intense lighting non-linearly. A regularized visibility estimation method improves the accuracy of direct light modeling. Combining these techniques with indirect radiance field modeling allows accurate decoupling of environment lighting and object materials. This leads to high-quality albedo and roughness reconstruction, even in scenes with strong shadows and reflections, outperforming existing implicit inverse rendering methods. The provided code enables further research and development.

Key Takeaways#

Why does it matter?#

This paper is important because it presents a novel approach to inverse rendering, a crucial problem in computer graphics and computer vision. Its ability to accurately reconstruct materials even in challenging high-illumination scenes opens new avenues for research in areas such as realistic rendering, augmented reality, and scene understanding. The method’s robustness and improved accuracy will likely have a significant impact on various applications requiring realistic 3D scene reconstruction.

Visual Insights#

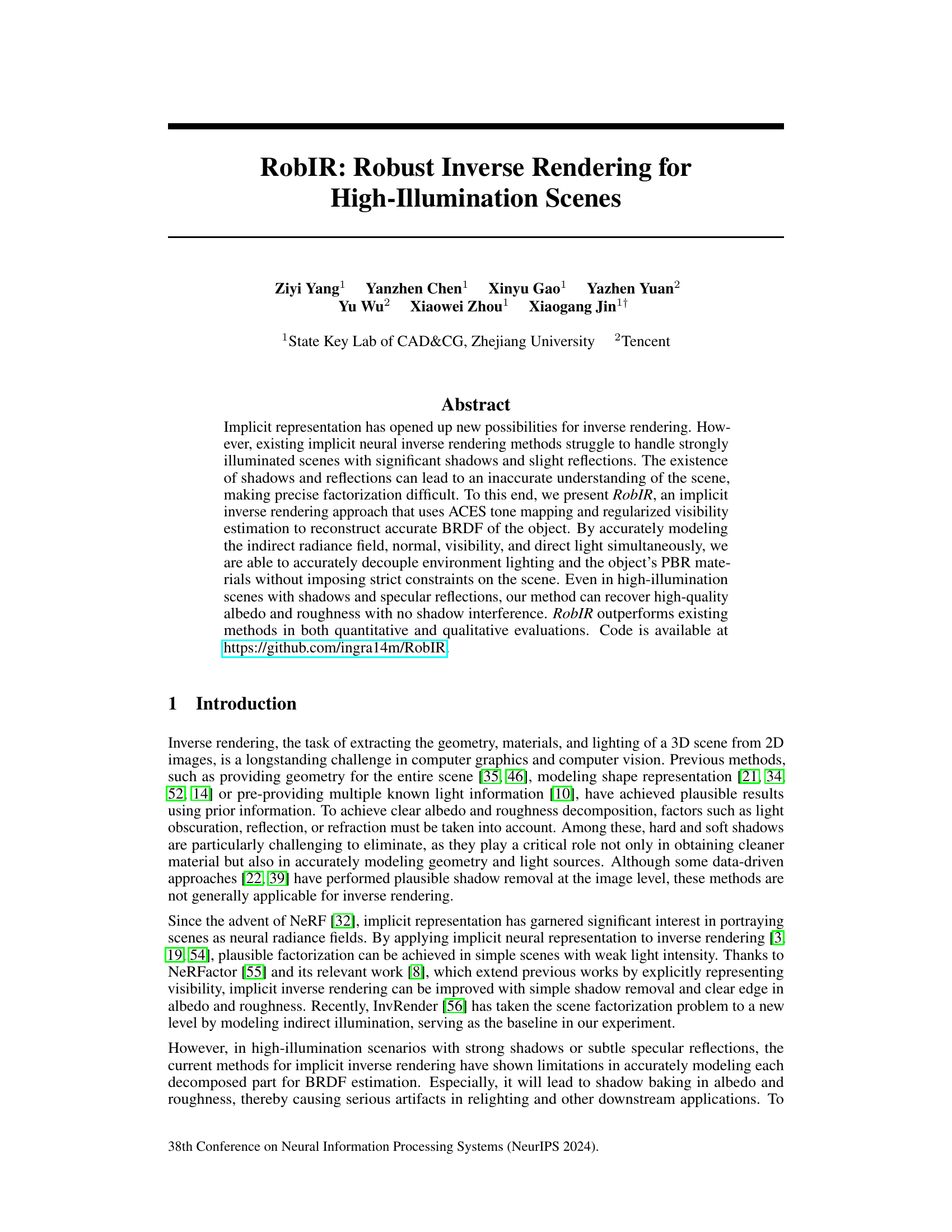

This figure illustrates the two-stage pipeline of RobIR, a novel implicit inverse rendering approach. The pre-processing stage uses NeuS to reconstruct the scene and extract scene priors (normal, visibility, and indirect illumination). The BRDF estimation stage then optimizes direct illumination and a scaled parameter (γ) to accurately reconstruct BRDF while using the extracted priors. Regularized visibility estimation is applied after 100 epochs to further enhance accuracy. The figure visually represents each stage and the data flow between them.

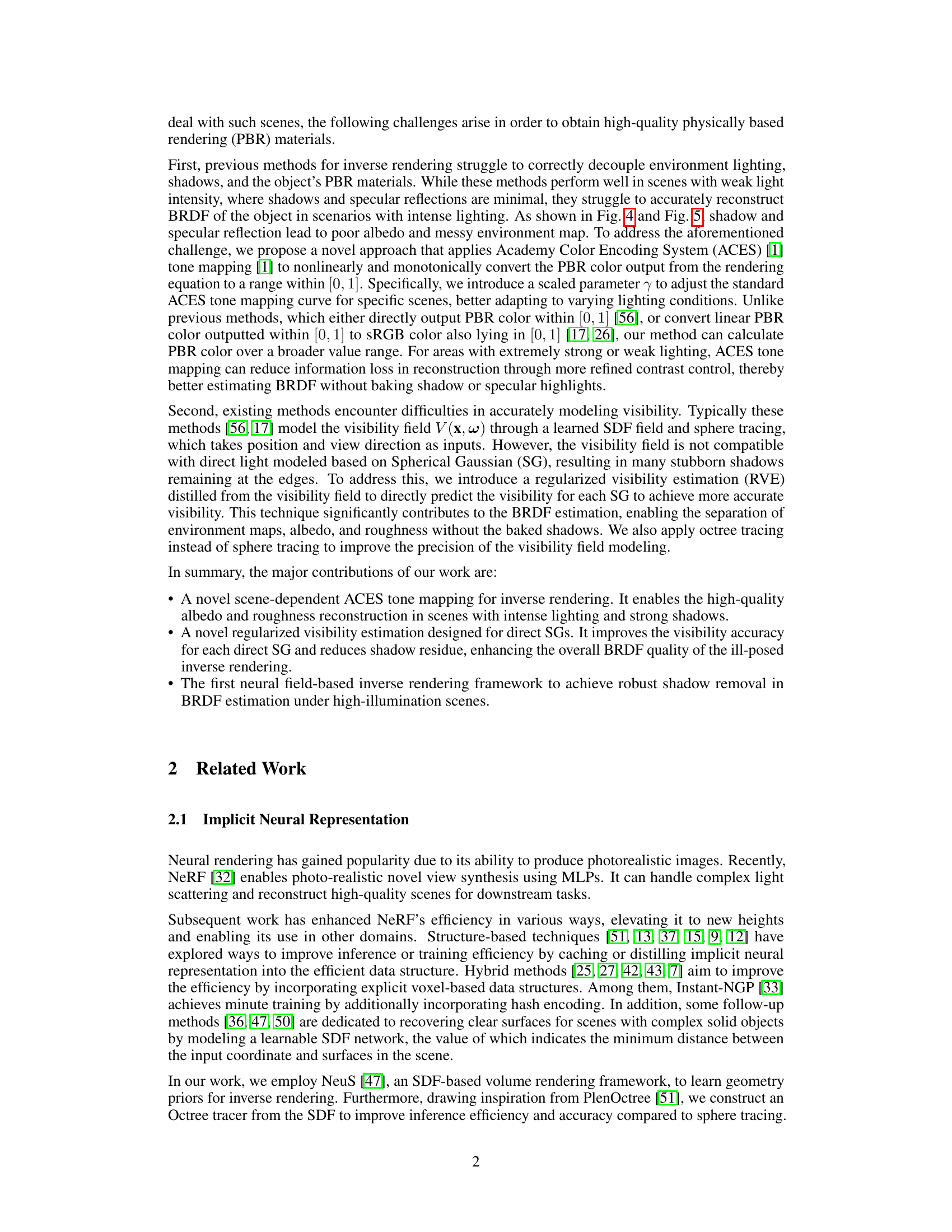

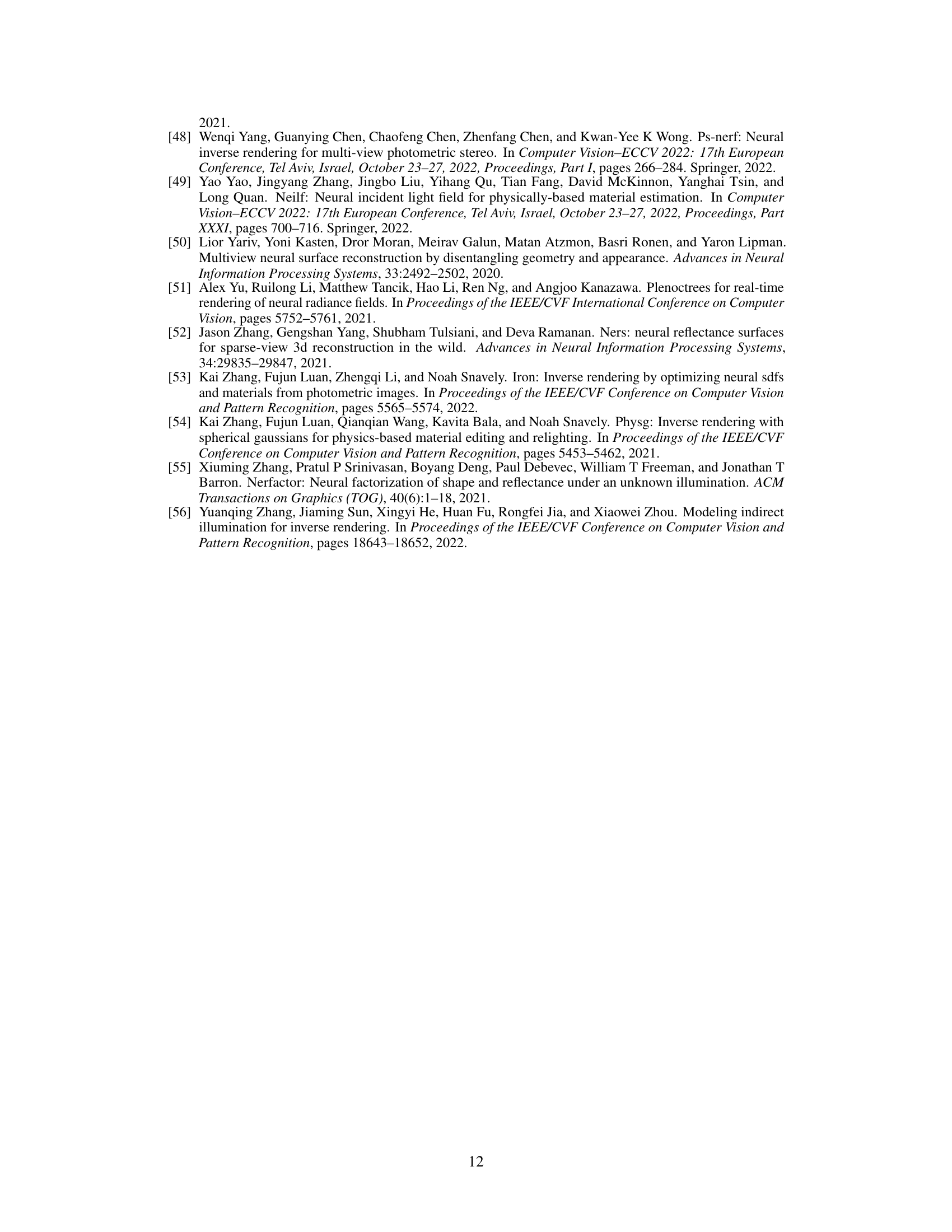

This table presents a quantitative comparison of different inverse rendering methods on synthetic scenes. The metrics used are PSNR, SSIM, and LPIPS for albedo, environment map, and relighting. MAE is also reported for roughness. The table highlights the superior performance of the proposed method (Ours) in achieving high-quality results across all metrics. The coloring helps to quickly identify the best, second best, and third-best performing methods for each metric.

In-depth insights#

Implicit InvRender#

Implicit neural representations have revolutionized inverse rendering, and a hypothetical ‘Implicit InvRender’ method would likely leverage this. It would represent scene geometry, materials, and lighting implicitly using neural networks, avoiding explicit geometric representations. This approach could offer advantages in handling complex scenes with intricate details and subtle lighting effects. A key challenge for such a method would be accurate disentanglement of scene components, particularly in high-illumination scenarios with strong shadows and specular reflections, where separating lighting from surface properties becomes significantly difficult. Robust handling of indirect illumination and accurate visibility estimation are crucial for success. Such a method would likely incorporate advanced techniques like ACES tone mapping and regularized visibility estimation to improve the accuracy and robustness of BRDF reconstruction. The efficiency of inference and training would also be a significant consideration, especially for high-resolution scenes, necessitating optimization strategies such as hierarchical scene representations or efficient neural architectures. Finally, the ability to handle real-world data and the generalizability of the approach would be important considerations, likely demanding extensive evaluation on diverse datasets.

ACES Tone Mapping#

The integration of ACES tone mapping within the inverse rendering framework represents a significant advancement in handling high-illumination scenes. Traditional methods often struggle with intense lighting conditions, resulting in artifacts like shadow baking into albedo and roughness estimations. ACES’s ability to nonlinearly map colors across a wide dynamic range is key, mitigating information loss associated with extremely bright or dark areas. The method’s scene-dependent adaptation of the ACES curve, parameterized by γ, offers further robustness, allowing for optimal contrast and detail preservation across diverse lighting conditions. This approach addresses a crucial limitation in previous implicit inverse rendering techniques, leading to more physically plausible and accurate BRDF estimations, particularly in challenging, high-illumination scenarios. This improved accuracy directly benefits downstream applications relying on realistic scene reconstruction.

Regularized Visibility#

Regularized visibility, in the context of inverse rendering, addresses the persistent challenge of accurately modeling visibility in complex scenes with shadows and reflections. Standard methods often struggle to precisely decouple visibility from other scene factors like lighting and material properties, leading to artifacts like shadow baking in albedo and roughness estimations. A regularized approach aims to mitigate these inaccuracies by employing techniques that constrain the visibility estimations, making them more robust and less susceptible to noise or artifacts. This might involve incorporating prior knowledge about the scene geometry, using regularized loss functions during training, or employing advanced techniques like octree tracing instead of computationally expensive sphere tracing. The key benefit is improved accuracy in BRDF estimation, enabling a clearer separation of scene components, which directly translates to higher-quality and more physically plausible material reconstruction. By introducing regularity, the overall quality of the reconstructed albedo and roughness is greatly improved, enabling more robust and realistic relighting applications.

High-Illumination BRDF#

High-illumination BRDF presents a significant challenge in inverse rendering due to the complexities introduced by strong lighting conditions. Shadows and specular reflections interfere with accurate material decomposition, making it difficult to decouple environment lighting, albedo, and roughness. Existing methods often fail to accurately model visibility in these scenarios, leading to artifacts such as shadow baking in albedo and roughness estimates. Addressing this necessitates robust techniques, for example, advanced tone mapping (like ACES) to handle the wide dynamic range of intensities and regularized visibility estimation to improve the accuracy of direct and indirect light calculations, enabling more precise BRDF recovery. Robust solutions require careful consideration of indirect illumination, incorporating accurate visibility modeling to avoid inaccuracies caused by shadow interference. This leads to a more physically accurate and robust BRDF reconstruction in complex, high-illumination scenes.

Future Enhancements#

Future enhancements for this research could focus on several key areas. Addressing limitations in handling complex scenes with intricate geometry and diverse materials is crucial. The current method’s reliance on simplified BRDF models could be improved by incorporating more sophisticated and physically accurate representations, enabling more realistic relighting and material estimation. Expanding the dataset to include more varied and challenging scenarios, particularly those with dynamic lighting conditions, would enhance the robustness and generalizability of the approach. Improving efficiency is also essential. The current method can be computationally intensive, hindering its applicability to real-time or large-scale applications. Exploring techniques to optimize computational performance, potentially through improved network architectures or efficient rendering strategies, would be valuable. Finally, investigating the application of this research to other inverse rendering problems, such as recovering material properties from spectral images or estimating scene illumination from multiple cameras, could broaden its impact.

More visual insights#

More on figures

This figure shows a comparison of normal maps generated with and without a smooth loss. The image on the left (w/o smooth loss) shows a normal map with visible discontinuities and noise, while the image on the right (w/ smooth loss) shows a much smoother normal map with fewer artifacts. The smooth loss helps to regularize the normal map, making it more suitable for subsequent BRDF estimation. The smooth loss prevents broken normals caused by specular reflection, which results in a higher quality normal map.

This figure shows the effect of applying a smooth loss to the normal vectors predicted by the model. The leftmost image is the input, the middle image shows the normal vectors before the smooth loss is applied, which contain artifacts and are noisy. The rightmost image shows the improved normal vectors after applying the smooth loss, resulting in smoother and more accurate normal prediction.

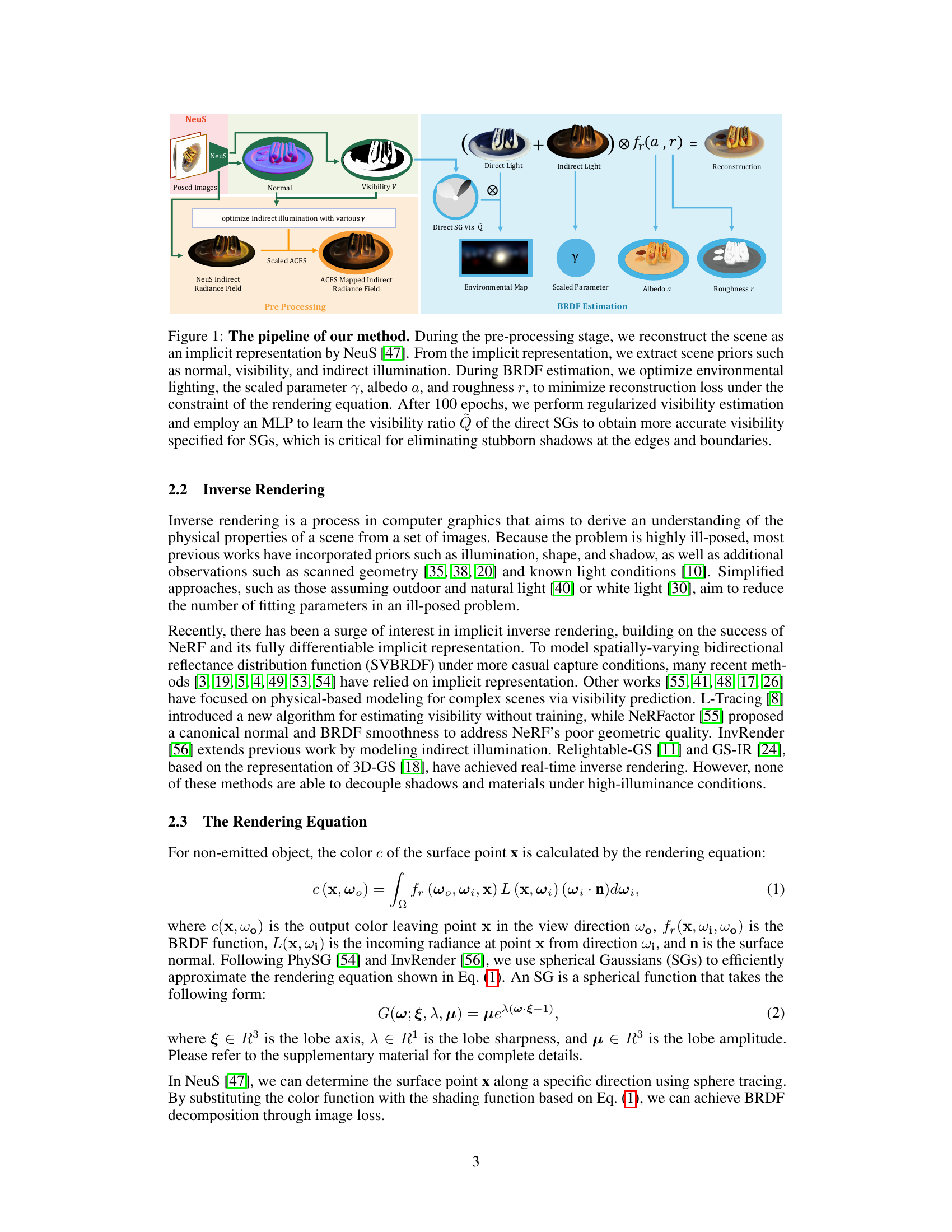

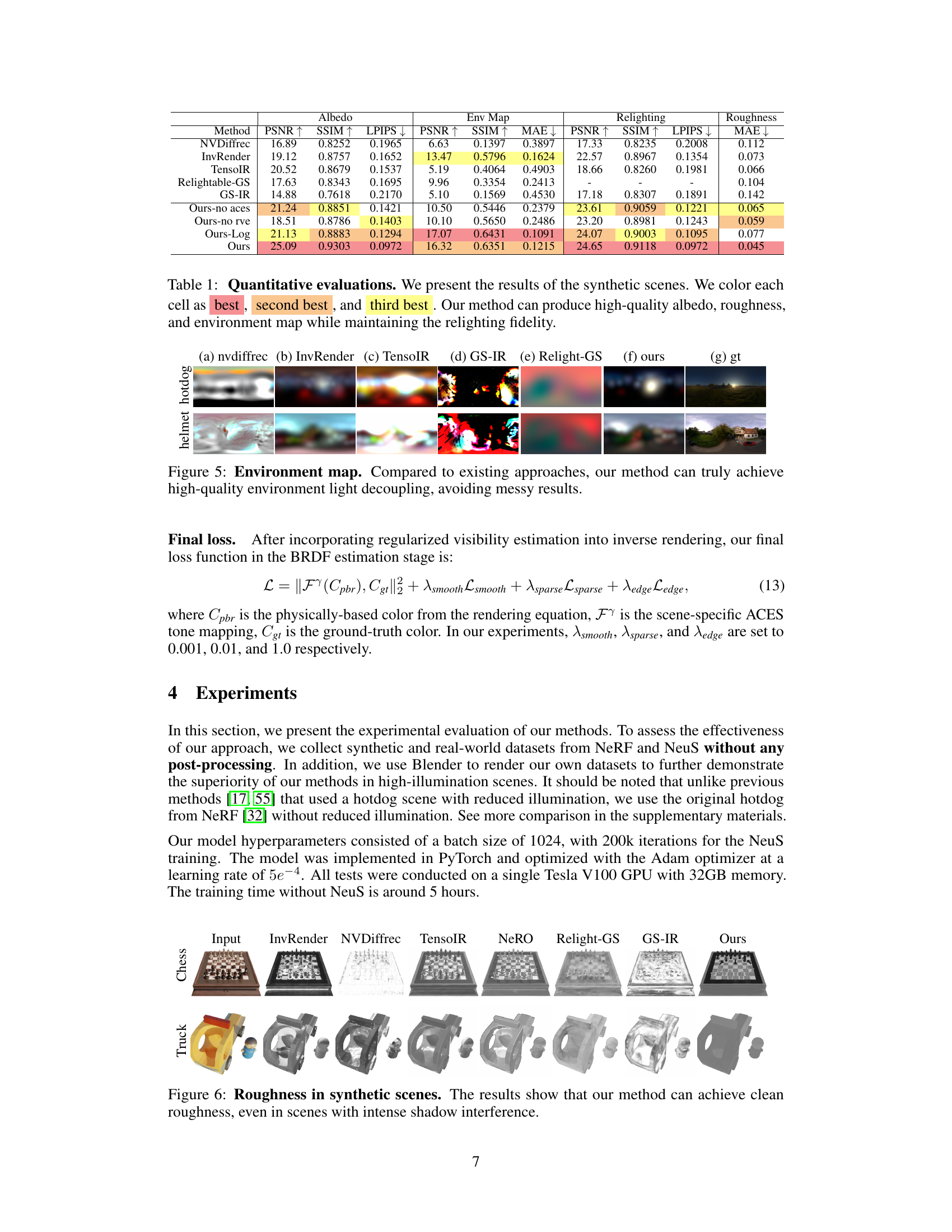

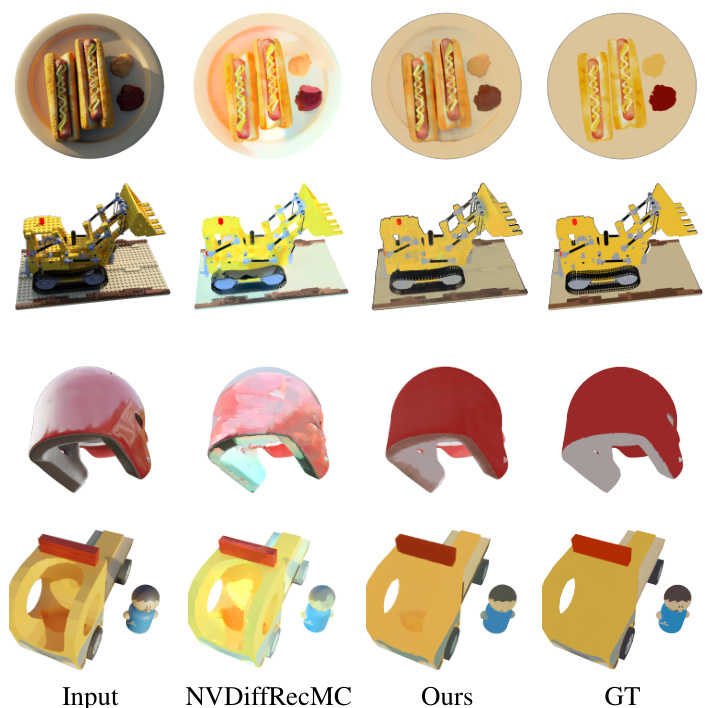

This figure compares the albedo (the base color of an object) produced by RobIR and several other state-of-the-art inverse rendering methods. RobIR’s results show cleaner albedo than others, not having artifacts from shadows or reflections baked in.

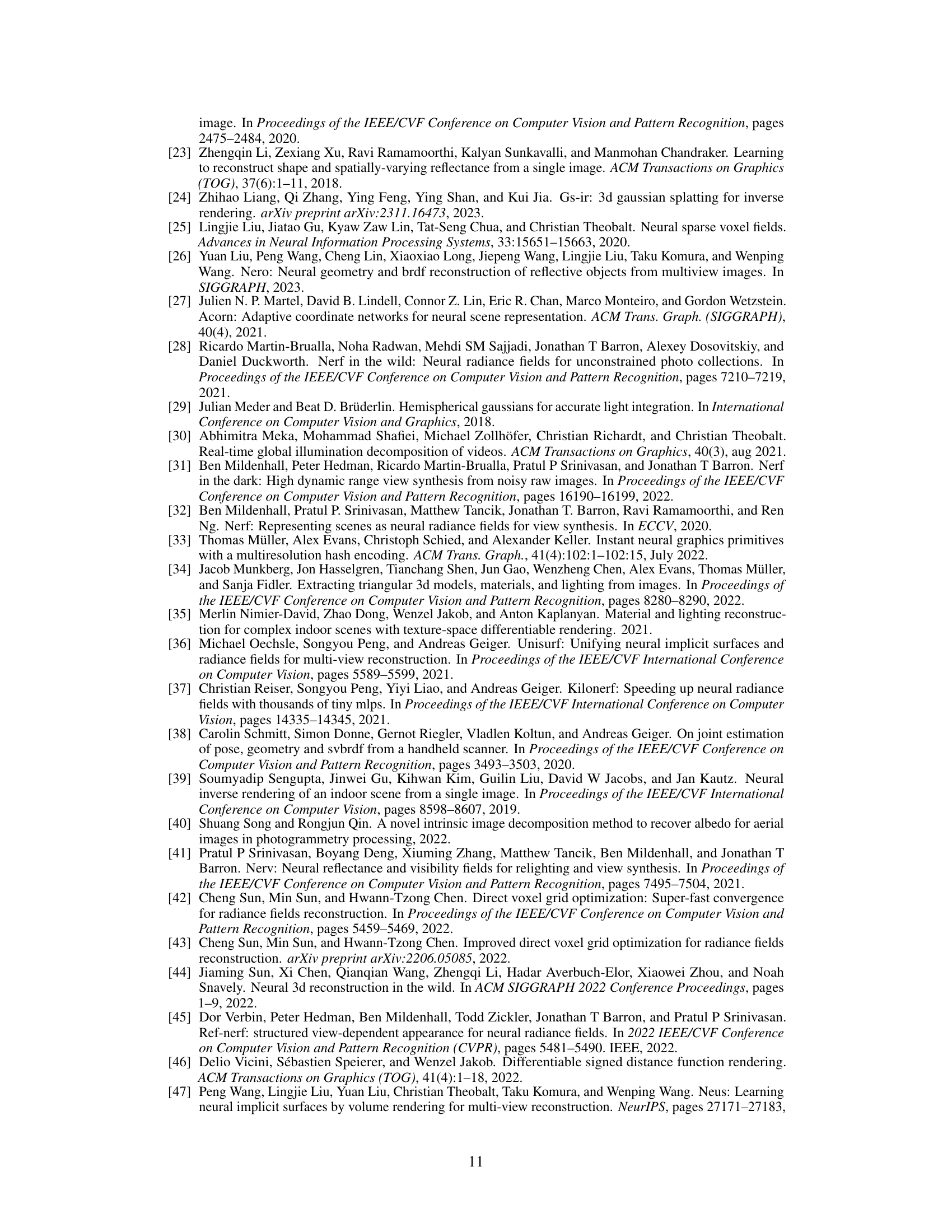

This figure compares the results of different inverse rendering methods on a challenging high-illumination dataset. It shows that previous methods struggle to separate shadows from the object’s material properties (albedo and roughness), while the proposed method, RobIR, effectively decouples them, resulting in more accurate material estimation even in difficult lighting conditions.

This figure compares the albedo reconstruction results of RobIR against several state-of-the-art methods on synthetic scenes. It visually demonstrates RobIR’s superior performance in accurately decoupling shadows and specular reflections from the albedo, avoiding artifacts seen in other methods that bake these effects into the albedo map.

This figure shows a comparison of albedo and roughness results on real-world scenes between the proposed method (Ours) and several other state-of-the-art methods (InvRender, TensoIR, Relight-GS). The results demonstrate that the proposed method effectively decouples shadows and materials, leading to higher-quality albedo and roughness estimations, even in complex real-world scenarios.

This figure shows the results of ablation studies conducted on the key components of the proposed BRDF estimation framework. The ablation experiments systematically remove one component at a time (e.g., ACES tone mapping, regularized visibility estimation) to assess its individual contribution to the overall quality of the albedo reconstruction. The results highlight the significance of each component in achieving high-quality albedo.

This figure demonstrates the de-shadowing capability of the proposed method. Three different scenes are shown, each with an input image, a rendering with shadows, and a de-shadowed rendering. The de-shadowed renderings show the successful removal of shadows while preserving the overall quality of the image.

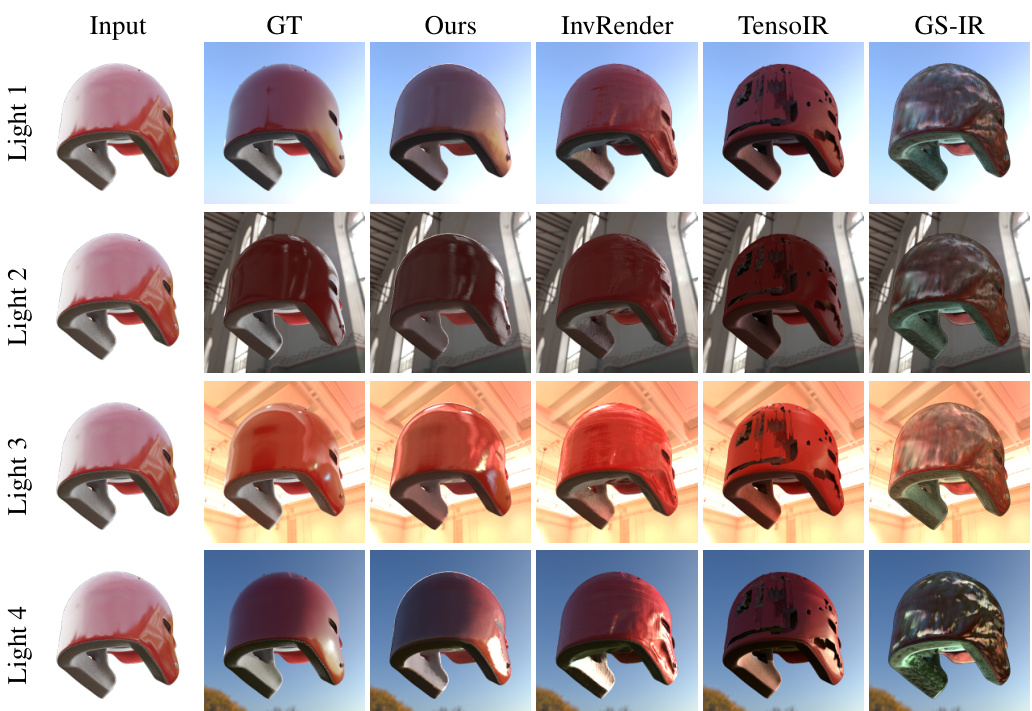

This figure shows a comparison of relighting results for a helmet between the proposed method and several other state-of-the-art methods. The proposed method demonstrates high-quality results even in the presence of specular highlights and reflections, unlike other methods which suffer from artifacts such as shadow baking and inaccurate material representation. The results are shown for four different lighting conditions (Light 0 - Light 3).

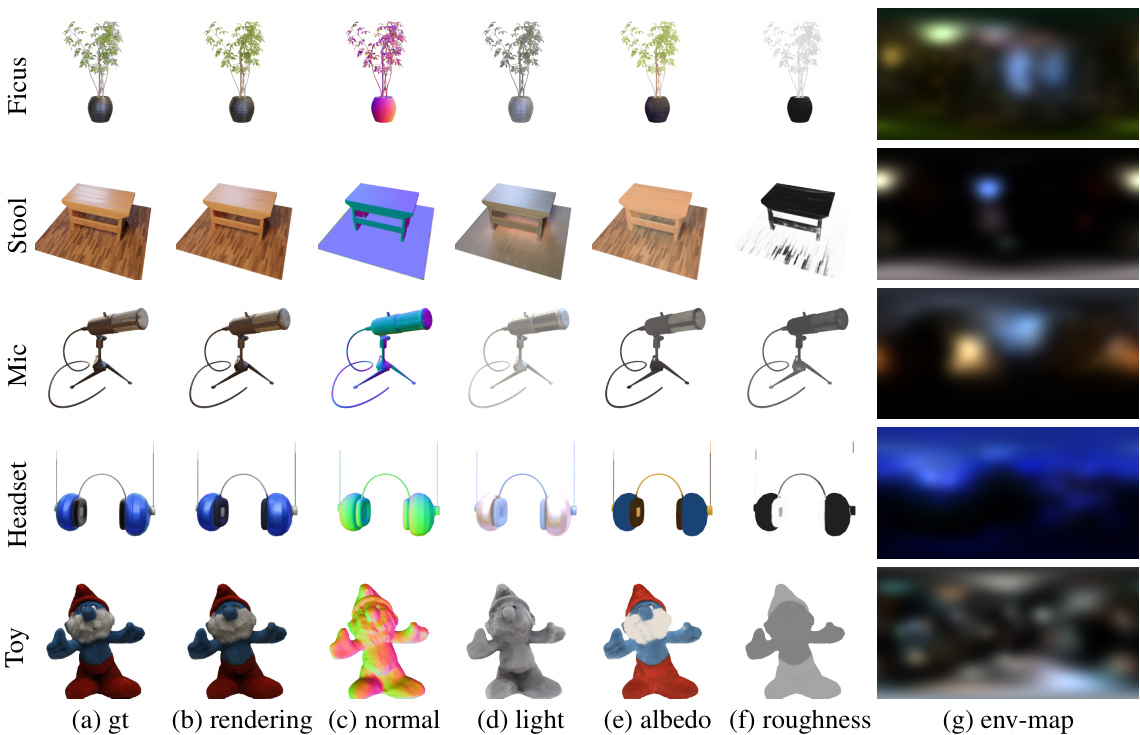

This figure shows several examples of the RobIR method applied to different scenes. Each row represents a different scene, showing the ground truth image, the results obtained using the RobIR method, and the extracted normal map, lighting, albedo, and roughness. This showcases the versatility and high-quality results of the proposed method across diverse scenes and data sets.

This figure demonstrates the relighting capabilities of the proposed method, RobIR, on a helmet model. It showcases the ability to produce high-quality results even in complex scenes containing specular highlights and reflections, which are challenging for existing inverse rendering techniques. The comparison highlights RobIR’s superior performance in decoupling shadows and material properties, resulting in more accurate and realistic relighting.

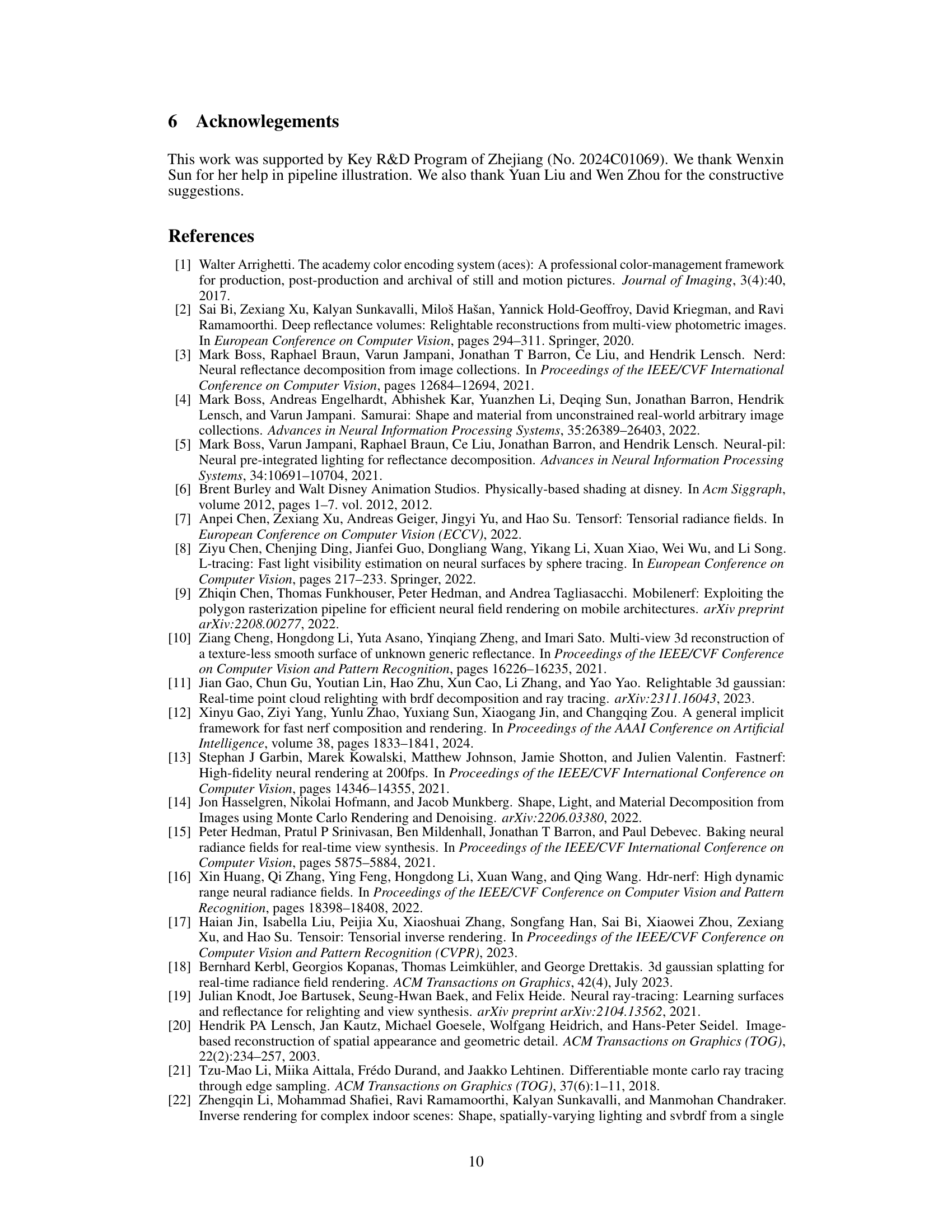

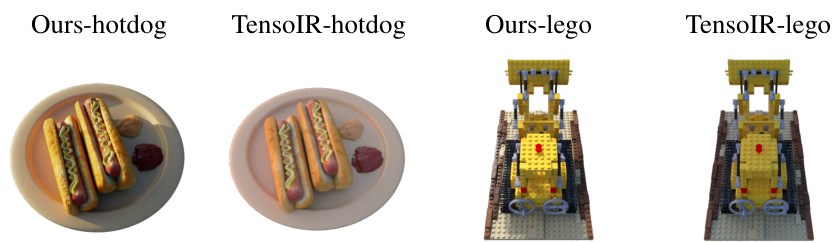

This figure compares the results of the proposed method (Ours) and a previous method (TensoIR) on two different scenes with strong illumination: a hotdog and a Lego construction. The comparison highlights the proposed method’s ability to accurately decouple shadows from the object’s material properties (albedo), unlike the previous method which struggles to separate them under intense lighting conditions. This showcases the robustness of the new approach in handling high-illumination scenarios.

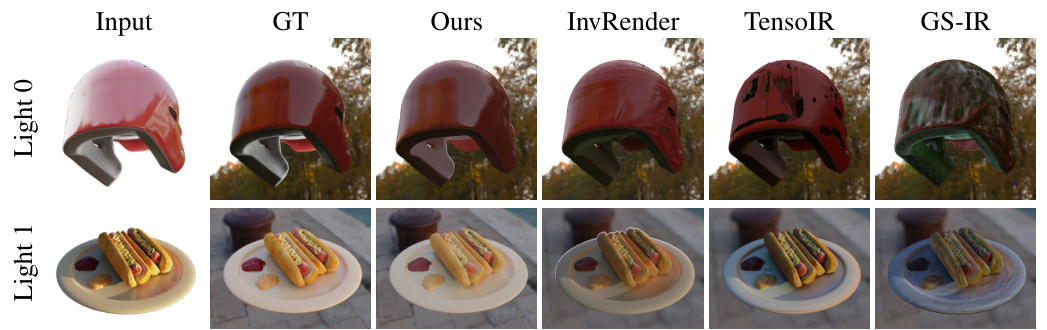

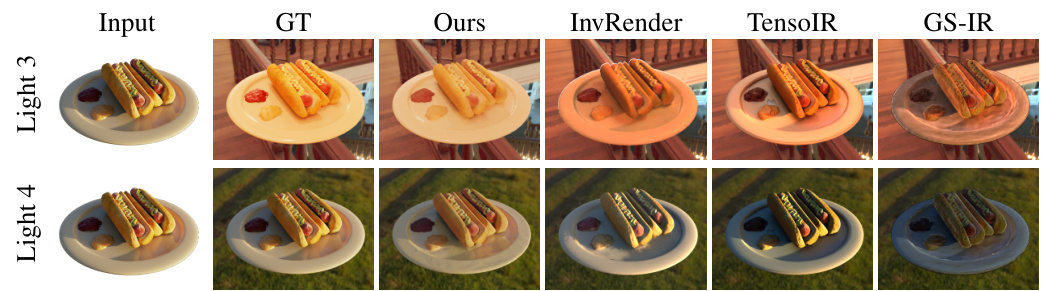

This figure showcases the relighting capabilities of the proposed RobIR method. It compares the relighting results of RobIR against other state-of-the-art methods (InvRender, TensoIR, and GS-IR) in scenarios with significant specular reflections and shadows. The comparison highlights RobIR’s ability to accurately reconstruct the scene’s BRDF, resulting in realistic and high-quality relighting, free from artifacts caused by baked shadows.

This figure compares the results of different inverse rendering methods on a challenging dataset with high illumination. It shows that existing methods struggle to separate shadows from the object’s material properties (albedo, roughness), while the proposed method (Ours) achieves better separation, leading to higher-quality reconstructions.

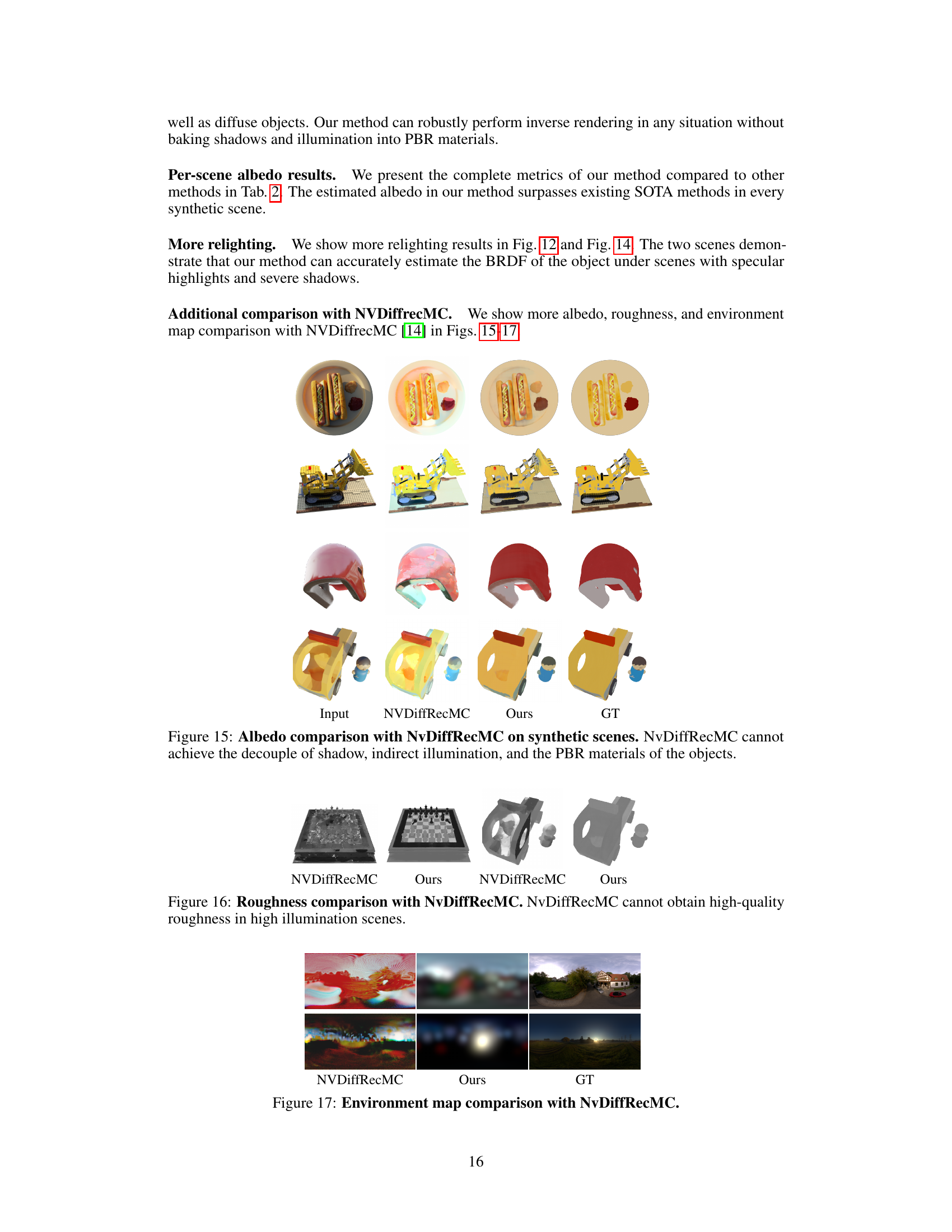

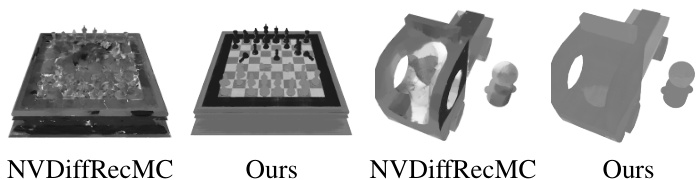

This figure compares the albedo reconstruction results of the proposed method (RobIR) and NVDiffRecMC on four synthetic scenes. The comparison highlights RobIR’s superior ability to decouple shadow and indirect illumination from the object’s physical properties, leading to a cleaner and more accurate albedo reconstruction. NVDiffRecMC, in contrast, struggles with this decoupling, resulting in albedo that is contaminated by shadows and indirect lighting effects.

This figure compares the environment maps generated by the proposed method (Ours), the NVDiffRecMC method, and the ground truth (GT) for three different scenes. The top row shows the environment maps for a scene with a red, textured surface, and a blurry background. The middle row shows the environment maps for a scene with a dark, textured area and a bright light source. The bottom row shows the environment maps for an outdoor scene with a house, a car, and some greenery. The comparison highlights the ability of the proposed method to accurately reconstruct environment lighting, even in challenging scenes with high dynamic range and complex lighting conditions.

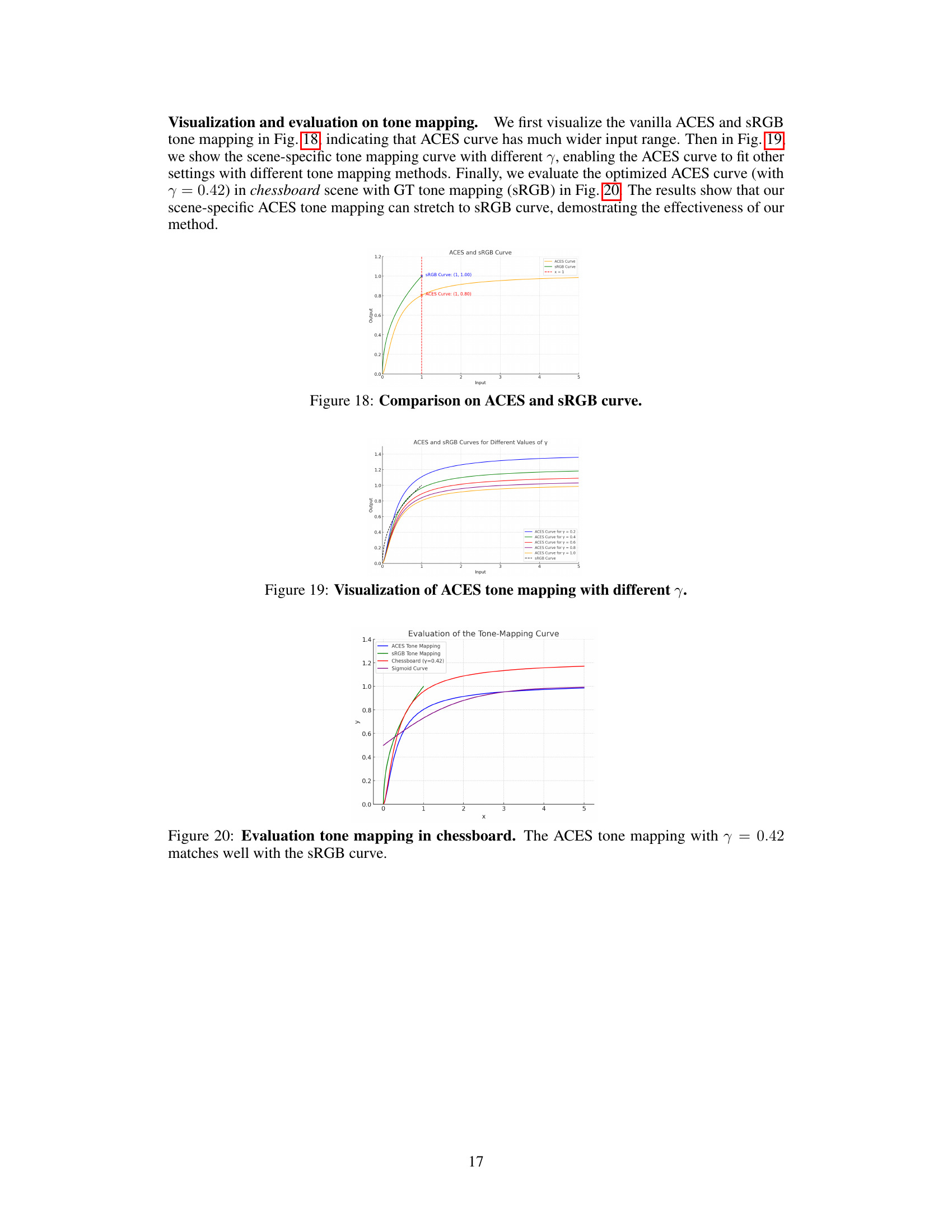

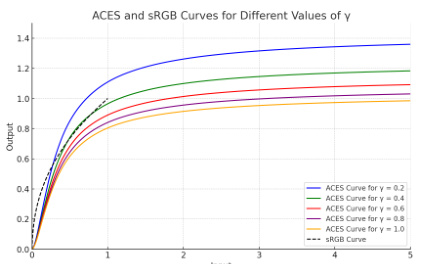

This figure compares the ACES and sRGB tone mapping curves. The ACES curve shows a much wider input range than the sRGB curve, capable of handling a greater dynamic range of light intensities. This is significant because the ACES tone mapping is used to convert the PBR color output from the rendering equation to a range within [0, 1], making it suitable for use in the proposed inverse rendering method even in high-illumination scenes.

This figure illustrates the two-stage pipeline of the proposed method, RobIR, for robust inverse rendering in high-illumination scenes. The first stage involves pre-processing using NeuS to create an implicit scene representation from which normal, visibility, and indirect illumination are extracted. The second stage focuses on BRDF estimation, optimizing environmental lighting, a scaled parameter (γ), albedo (α), and roughness (r) to minimize reconstruction loss using the rendering equation. Regularized visibility estimation is applied after 100 epochs to improve accuracy by learning the visibility ratio (Q) of direct spherical Gaussians (SGs), effectively removing persistent shadows.

This figure compares the ACES tone mapping curve with different gamma values (γ) and the standard sRGB curve, demonstrating the effectiveness of a scene-specific ACES tone mapping approach. The plot shows that an optimized ACES curve (γ = 0.42) closely matches the sRGB curve in a chessboard scene. This highlights the method’s ability to adapt to various lighting conditions and reduce information loss.

Full paper#