↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR

Sparse linear regression is sensitive to adversarially corrupted data and heavy-tailed noise. Existing methods often fail to give accurate estimates in high-dimensional settings, where the number of variables exceeds the number of observations. Robust estimators are needed that are both computationally efficient and resistant to such corruption.

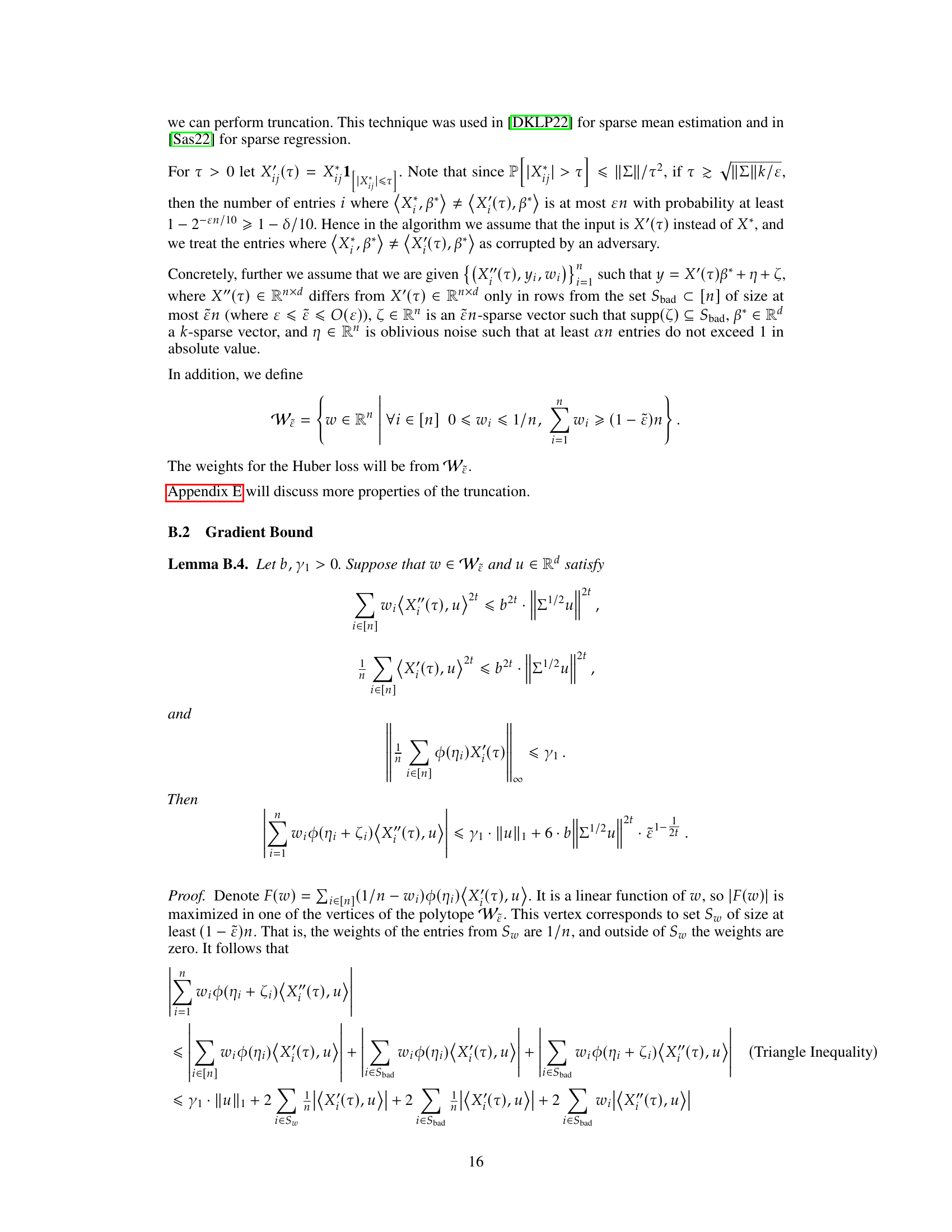

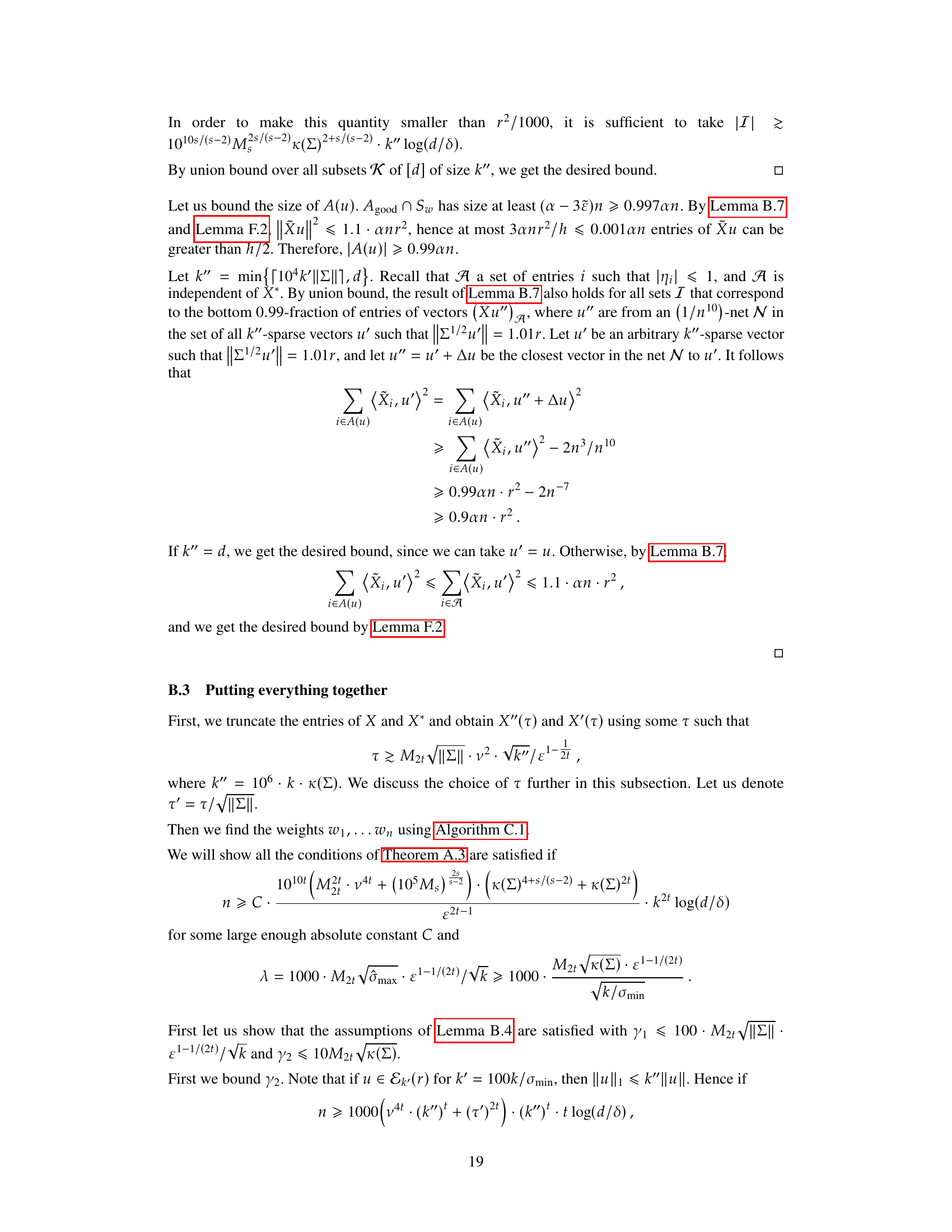

This research presents new polynomial-time algorithms that achieve substantially better error rates in robust sparse linear regression. The algorithms use filtering to remove corrupted data points and a weighted Huber loss to limit the impact of outliers. The key contributions are improved error bounds of o(√ε) under specific assumptions on the data distribution, together with a rigorous theoretical analysis that includes novel statistical query lower bounds supporting the near-optimality of these algorithms.
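To make the two ingredients named above concrete, here is a minimal Python sketch of a filter step followed by a weighted Huber loss with an ℓ1 penalty. It is not the paper's algorithm: the filter is a crude stand-in that drops the rows with the largest max-abs coordinate, the solver is plain proximal gradient descent (ISTA), and the function names (`trim_weights`, `weighted_huber_lasso`, `demo`) and the parameters `eps`, `delta`, `lam` and `n_iter` are hypothetical choices made for illustration.

```python
import numpy as np


def trim_weights(X, eps):
    """Crude filter (illustrative stand-in, not the paper's filter).

    Gives zero weight to the eps-fraction of rows with the largest
    max-abs coordinate and uniform weight to the rest. Assumes eps < 1.
    """
    n = X.shape[0]
    n_drop = int(round(eps * n))
    scores = np.max(np.abs(X), axis=1)
    keep = np.ones(n, dtype=bool)
    if n_drop > 0:
        keep[np.argsort(scores)[-n_drop:]] = False
    w = keep.astype(float)
    return w / w.sum()


def huber_grad(r, delta):
    """Derivative of the Huber loss with respect to the residual r."""
    return np.clip(r, -delta, delta)


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (entrywise shrinkage toward zero)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def weighted_huber_lasso(X, y, w, delta=1.0, lam=0.1, n_iter=500):
    """Approximately minimize
        sum_i w_i * huber_delta(y_i - <x_i, beta>) + lam * ||beta||_1
    by proximal gradient descent (ISTA)."""
    d = X.shape[1]
    beta = np.zeros(d)
    # The Huber loss has second derivative at most 1, so the smooth part has
    # a gradient that is Lipschitz with constant at most the largest
    # eigenvalue of X^T diag(w) X.
    lipschitz = np.linalg.norm(X * np.sqrt(w)[:, None], 2) ** 2
    step = 1.0 / max(lipschitz, 1e-12)
    for _ in range(n_iter):
        residual = y - X @ beta
        grad = -X.T @ (w * huber_grad(residual, delta))
        beta = soft_threshold(beta - step * grad, step * lam)
    return beta


def demo(seed=0):
    """Synthetic example: 3-sparse signal, 10% of the rows grossly corrupted."""
    rng = np.random.default_rng(seed)
    n, d, eps = 200, 50, 0.1
    beta_true = np.zeros(d)
    beta_true[:3] = [3.0, -2.0, 1.5]
    X = rng.standard_normal((n, d))
    y = X @ beta_true + 0.1 * rng.standard_normal(n)
    n_bad = int(round(eps * n))
    X[:n_bad] = 10.0   # corrupted covariates (easy to spot in this toy setup)
    y[:n_bad] = -50.0  # corrupted responses
    w = trim_weights(X, eps)
    beta_hat = weighted_huber_lasso(X, y, w)
    return beta_true, beta_hat
```

In this toy setup the corrupted rows are deliberately easy to detect, so the trimming step removes them and the Huber loss mainly caps the influence of any large residuals that remain. An adversary would not be this cooperative, which is why a principled filter and a careful analysis are needed to obtain guarantees such as the o(√ε) rate.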

Key Takeaways

Why does it matter?

This paper is relevant to researchers working on robust statistics and high-dimensional data analysis. It introduces algorithms that significantly improve the accuracy of sparse linear regression, even under adversarial corruption of the data and heavy-tailed noise. The advance matters for fields that rely on robust statistical modeling, such as machine learning and data science, and it opens new avenues for designing more resilient and efficient estimators.

Visual Insights

Full paper