↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Existing reference-based scene stylization methods struggle with slow rendering times due to reliance on neural radiance fields (NeRFs). These methods also suffer from an inability to accurately capture fine texture details in the reference image when editing the appearance of pre-trained NeRFs. This paper introduces ReGS, which leverages 3D Gaussian Splatting for significantly faster rendering while addressing these texture limitations.

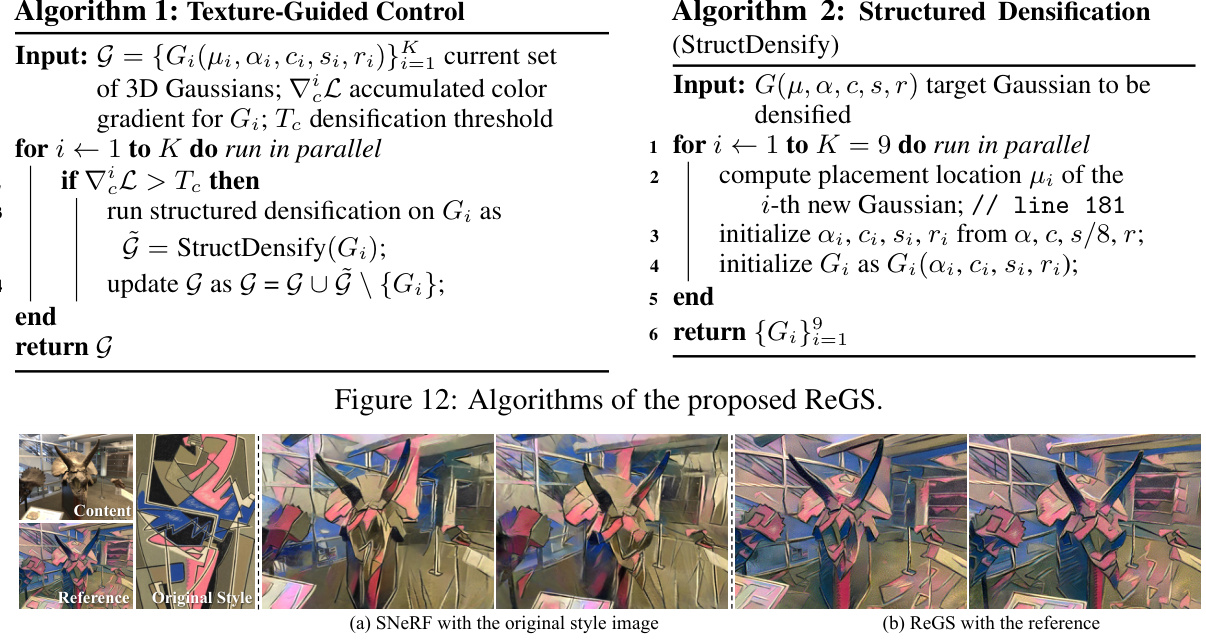

ReGS introduces a novel texture-guided control mechanism. This method identifies areas where the appearance needs improvement, then adapts the underlying Gaussian splat geometry for better matching to the reference image. The method also incorporates depth regularization to preserve the original scene geometry. Experimental results demonstrate that ReGS achieves state-of-the-art stylization quality with real-time performance, surpassing existing NeRF-based approaches in speed and accuracy.

Key Takeaways#

Why does it matter?#

This paper is significant for researchers in computer graphics and vision because it presents ReGS, a novel method for real-time scene stylization that outperforms existing approaches. It addresses the limitations of previous methods by combining 3D Gaussian Splatting with a texture-guided control mechanism, achieving high-fidelity results while maintaining real-time performance. This opens up new possibilities for interactive applications and inspires further research in efficient 3D scene manipulation and stylization techniques.

Visual Insights#

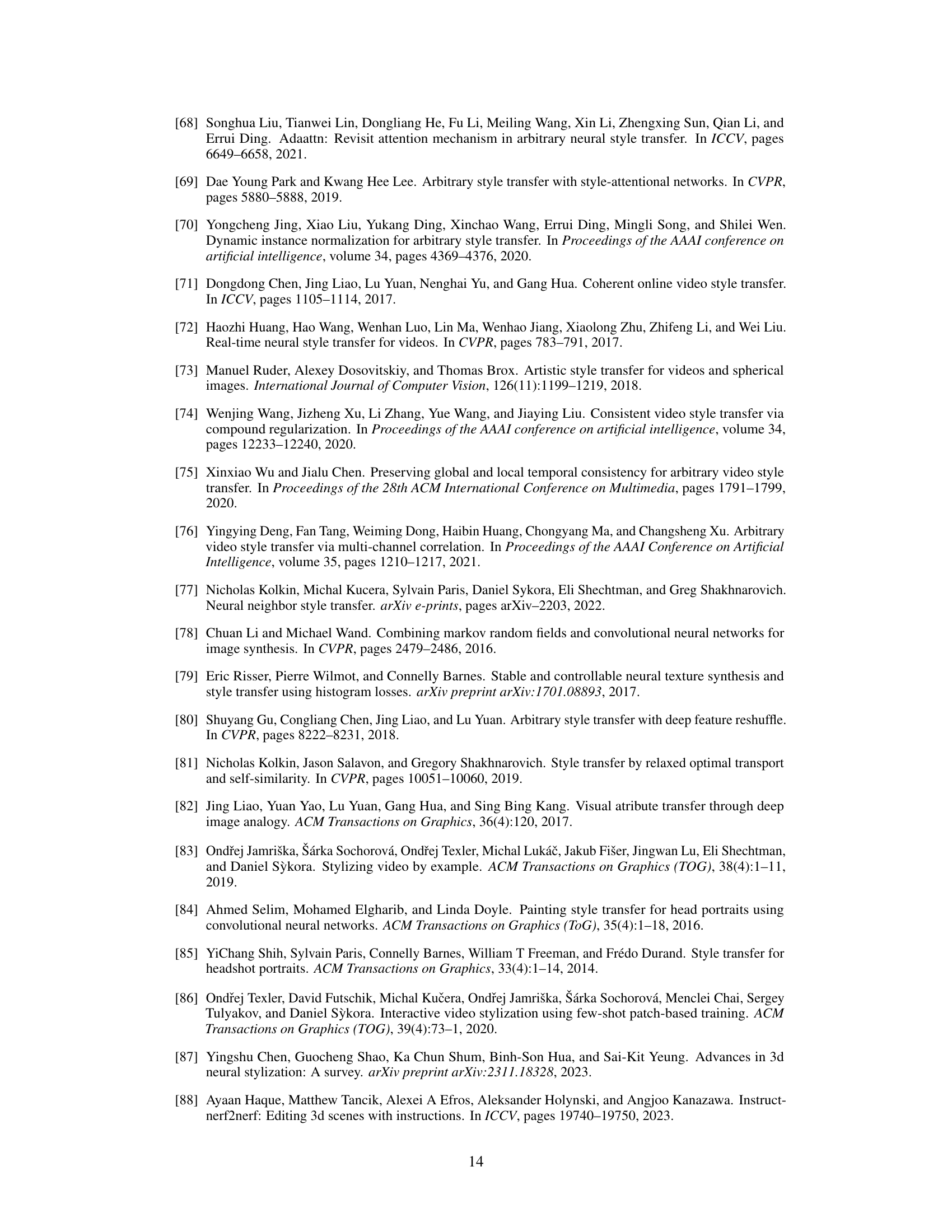

This figure compares the results of ReGS with a naive approach for reference-based scene stylization using 3D Gaussian Splatting. ReGS demonstrates its ability to generate high-fidelity, real-time stylized views by effectively addressing texture underfitting, resulting in more detailed and accurate texture matching compared to simply optimizing the appearance.

This table presents a quantitative comparison of ReGS against three state-of-the-art methods: ARF [8], SNeRF [4], and Ref-NPR [10]. The metrics used for comparison are Ref-LPIPS (lower is better, indicating better perceptual similarity to the reference), Robustness (higher is better, indicating consistent stylization across viewpoints), and Speed (frames per second, higher is better, indicating faster rendering). The results demonstrate that ReGS significantly outperforms the baselines in both quality and speed.

In-depth insights#

Ref-based 3D Stylization#

Reference-based 3D stylization is a rapidly evolving field aiming to transfer the style of a 2D reference image onto a 3D scene. Early approaches often suffered from limitations in handling complex geometries and achieving high-fidelity texture transfer. Neural radiance fields (NeRFs), while offering high-quality results, often prove computationally expensive, making real-time applications challenging. Gaussian splatting emerges as a promising technique due to its efficiency. However, directly applying 2D stylization techniques to 3D Gaussian splatting faces hurdles as appearance is tightly coupled with geometry. Therefore, novel methods focus on decoupling appearance and geometry, often involving texture-guided control mechanisms to manipulate Gaussian parameters and ensure fine-grained texture control without compromising the original 3D structure. Real-time performance is a significant goal, requiring efficient rendering and optimization techniques. Future research will likely explore enhancing controllability, addressing limitations in handling occlusions and complex textures, and further optimizing for speed and scalability.

Gaussian Splatting#

Gaussian splatting, a novel 3D scene representation technique, presents a compelling alternative to traditional volumetric rendering methods. Its core innovation lies in representing scenes using millions of colored 3D Gaussians, each with learnable attributes like position, color, opacity, and covariance. This discrete representation, unlike continuous radiance fields, allows for highly efficient differentiable rendering via splatting-based rasterization. This approach significantly accelerates view synthesis, enabling real-time performance and free-view navigation, which is often challenging with traditional NeRFs. However, the discrete nature of Gaussian splatting also presents challenges. Directly optimizing the appearance of pre-trained Gaussians is often insufficient for capturing fine details and continuous textures. The inherent entanglement of geometry and appearance in the Gaussian representation requires more sophisticated editing techniques beyond simple appearance optimization. Therefore, methods like texture-guided control are crucial for effective stylization and high-fidelity texture editing while preserving original scene geometry.

Texture-Guided Control#

The proposed ‘Texture-Guided Gaussian Control’ method is a crucial innovation for high-fidelity texture editing in 3D scenes. It directly addresses the limitations of prior methods that struggle with continuous textures by adaptively adjusting the arrangement of local Gaussians. Instead of solely optimizing appearance, this method leverages texture clues to identify and modify the relevant Gaussians, ensuring the desired texture details are precisely reflected. Depth-based regularization prevents undesired geometric distortions, maintaining the scene’s structural integrity. The use of color gradients as guidance offers a significant advantage over positional gradients by more effectively pinpointing areas needing texture refinement. This texture-guided approach makes high-fidelity appearance editing more achievable, resulting in state-of-the-art stylization results while maintaining real-time performance, as demonstrated by the high frame rate reported. This intelligent control mechanism truly unlocks the potential of Gaussian splatting for realistic scene stylization.

Real-time Rendering#

Real-time rendering in 3D scene stylization presents a significant challenge, demanding efficient techniques to process and display complex visual data rapidly. The paper addresses this by leveraging 3D Gaussian Splatting (3DGS), a method known for its speed. However, directly applying 3DGS to stylization is nontrivial due to its discrete Gaussian representation. The core innovation lies in the texture-guided Gaussian control, which dynamically adjusts the spatial arrangement of Gaussians to achieve high-fidelity texture reproduction, balancing speed and quality. The effectiveness of this approach is clearly demonstrated with quantitative metrics like FPS, showcasing considerable improvement over existing NeRF-based methods. While real-time rendering is achieved, the trade-off between speed and the quality of texture details remains a factor. Future work could investigate further optimizations and explore different trade-offs, especially for high-resolution scenes and complex stylization effects. The real-time aspect is crucial for interactive applications, enabling seamless navigation and manipulation of the stylized 3D scenes. This contributes significantly to user experience in fields such as digital art, film production, and virtual reality.

Future Directions#

The paper’s ‘Future Directions’ section would ideally explore extending ReGS’s capabilities beyond image-based stylization. Investigating text-driven or multi-modal control would significantly enhance the system’s versatility. Furthermore, addressing the current limitation of handling only minor geometric changes by incorporating more sophisticated geometry editing techniques, perhaps leveraging generative models or learning shape transformations from reference images, is crucial. Improving efficiency is also critical; reducing computational demands could facilitate real-time applications on more constrained devices. Finally, a thorough exploration of the robustness and generalizability of ReGS across various scene types, texture characteristics, and styles is necessary, along with careful consideration of potential ethical implications, especially regarding the creation of realistic yet manipulated content.

More visual insights#

More on figures

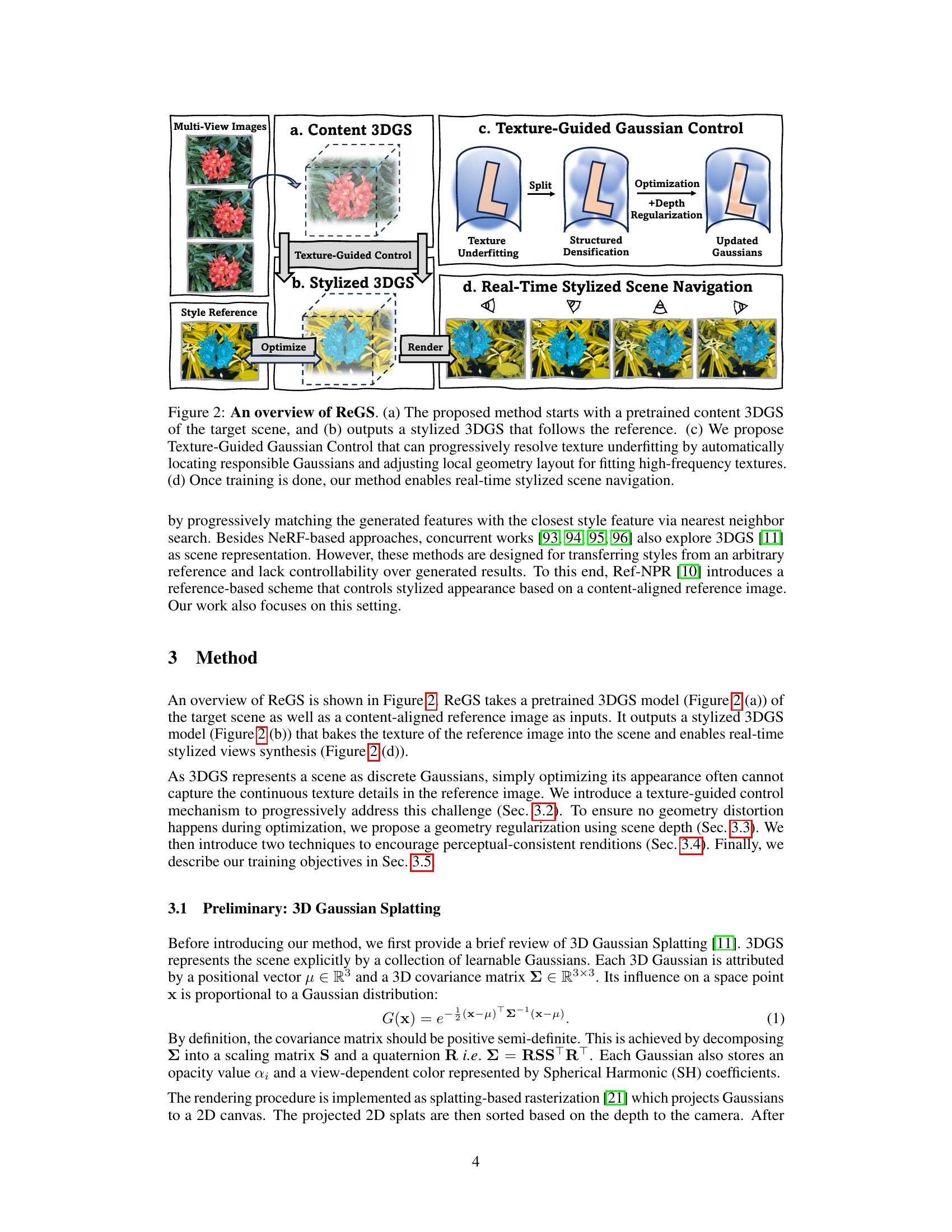

This figure shows the process of ReGS. First, a pretrained 3D Gaussian splatting (3DGS) model of the target scene is used as input (a). Then, the stylized 3DGS model is obtained by applying Texture-Guided Gaussian Control to resolve texture underfitting and adjust local Gaussian geometry (b, c). Finally, real-time stylized scene navigation is enabled by rendering the stylized 3DGS model (d).

This figure shows examples of rendered depth maps and synthesized stylized pseudo views. The depth maps (a) are created using equation 3 from the paper, representing the depth information of the scene. These depth maps are then used to generate stylized pseudo views (b) through a depth-based warping technique, creating views that are consistent with the reference style image but from slightly different viewpoints. These pseudo views provide additional training supervision during the stylization process, helping to ensure view consistency in the final stylized output.

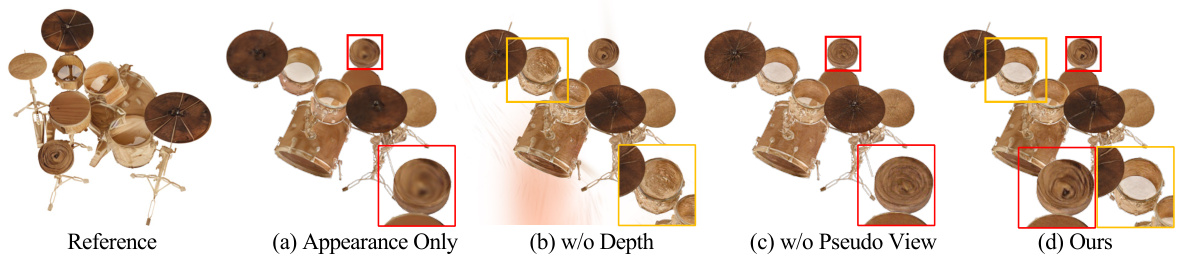

This ablation study shows the importance of each component in ReGS. (a) demonstrates that simply adjusting appearance is insufficient for high-fidelity texture reproduction. (b) shows how depth regularization prevents geometric distortions. (c) highlights the need for pseudo-view supervision to maintain view consistency. (d) showcases the superior results of the complete ReGS model.

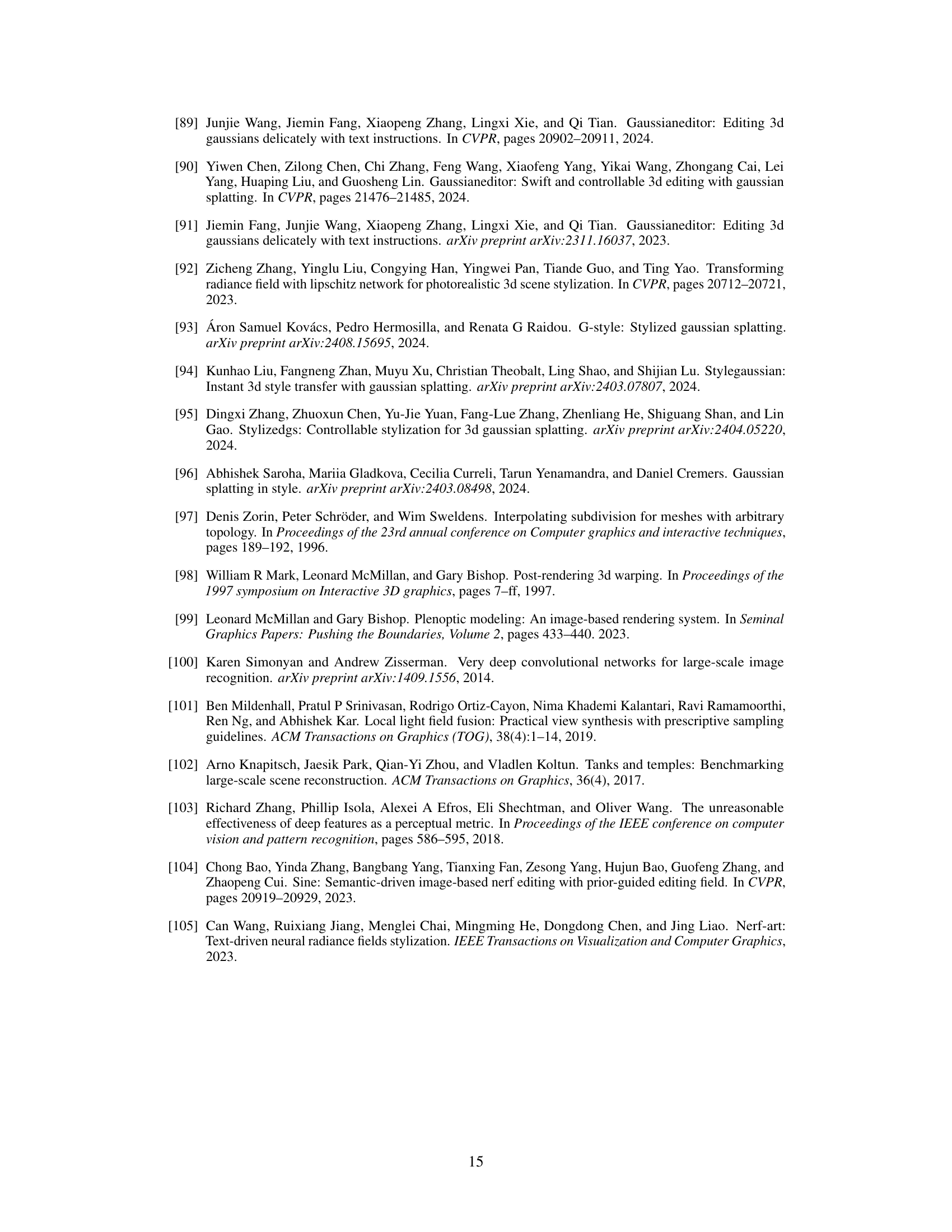

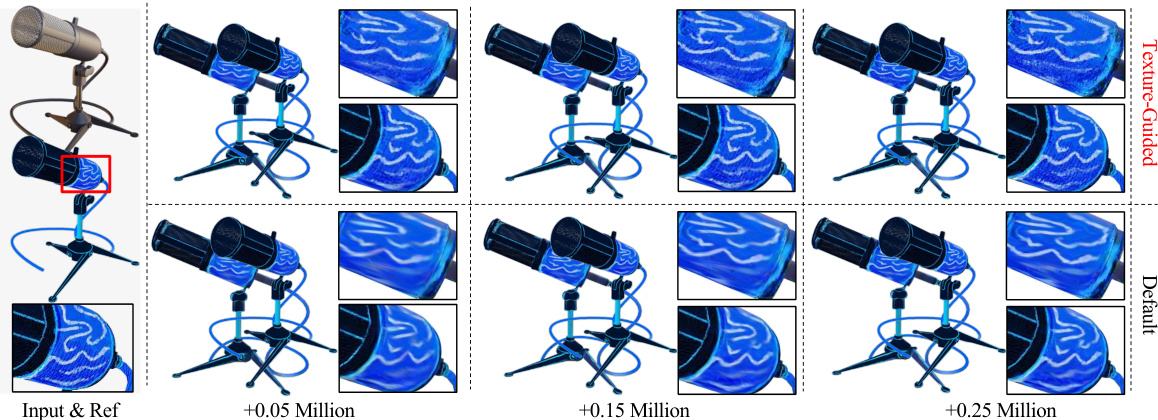

This figure compares the results of using the proposed texture-guided control method versus the default density control method in 3D Gaussian Splatting for scene stylization. By limiting the number of new Gaussians added during optimization, the experiment shows that the texture-guided approach can achieve significantly better results in capturing fine details, even with a much smaller number of additional Gaussians. The default approach struggles to capture high-frequency texture details, even when a larger number of Gaussians is added.

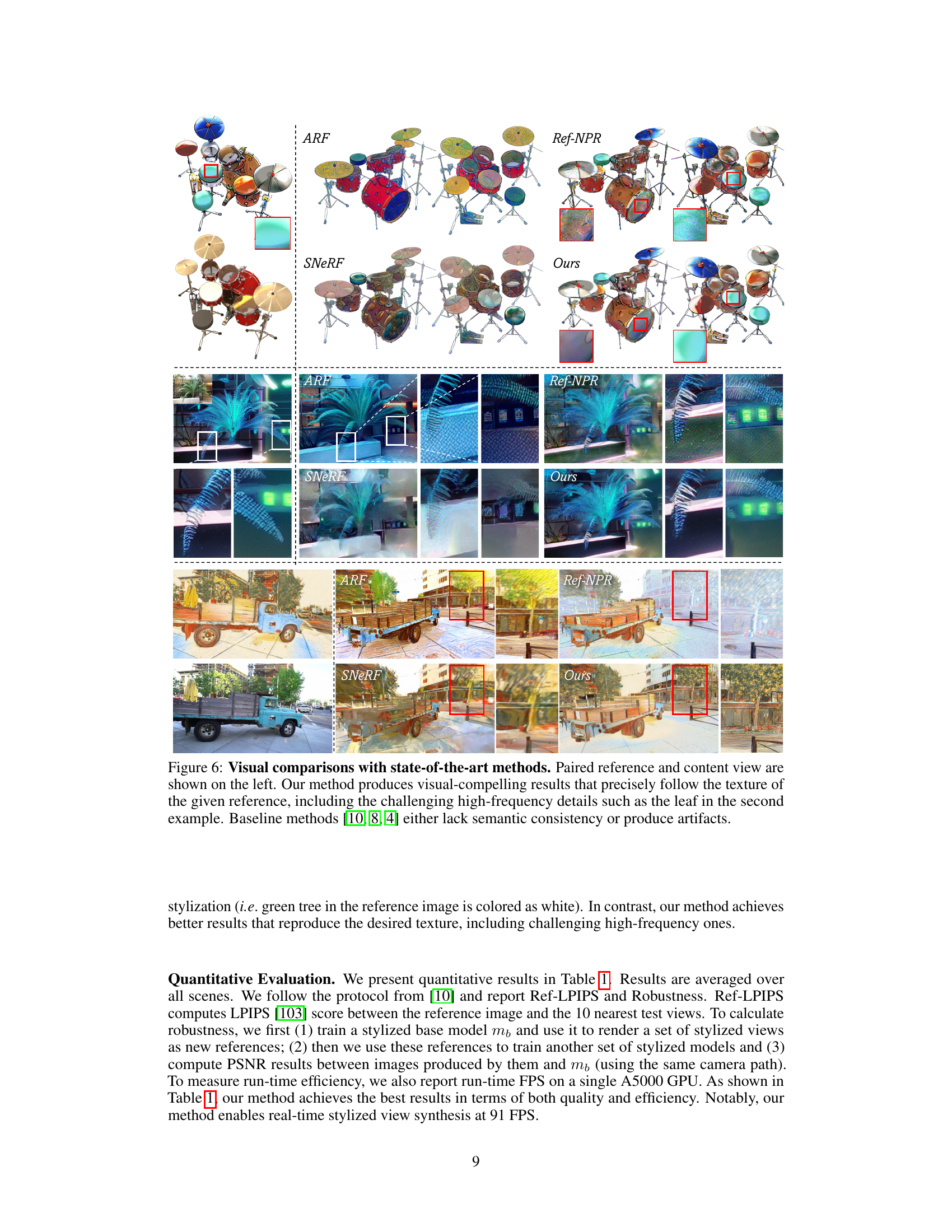

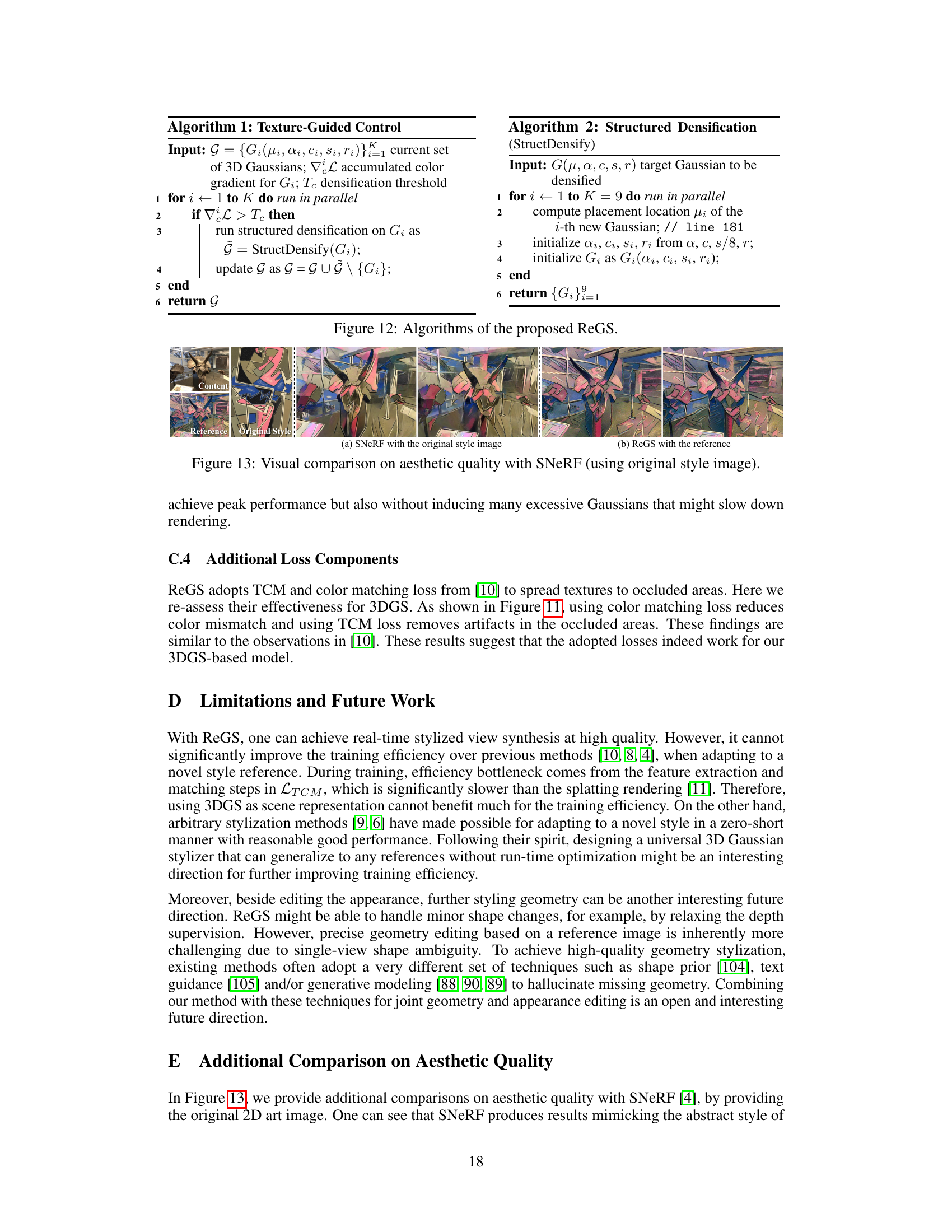

This figure compares the results of ReGS against three state-of-the-art methods (ARF, Ref-NPR, and SNeRF) on three different scenes. Each row shows a scene with its reference image and the results from each method. ReGS demonstrates a superior capability to accurately reproduce the textures from the reference image, especially high-frequency details that other methods struggle to capture. The other methods either miss crucial texture details or introduce visual inconsistencies.

This figure demonstrates the application of ReGS for appearance editing. A pre-trained 3D Gaussian Splatting (3DGS) model is used as a starting point. The user can directly edit the appearance of the scene by drawing on a 2D rendering. The key takeaway is that ReGS can seamlessly integrate these edits back into the 3D model, unlike methods that only optimize appearance, which struggle to handle user edits effectively, especially on surfaces with high detail.

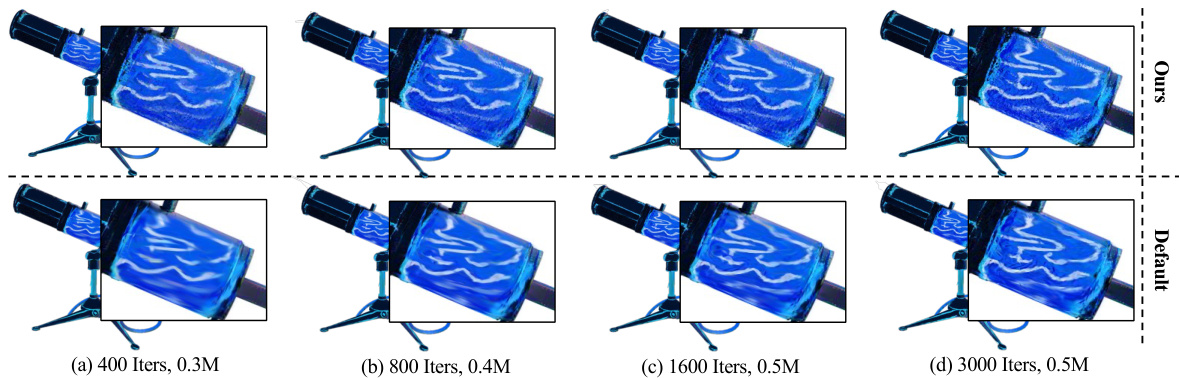

This figure shows an ablation study comparing the proposed structured densification method with the default method. The structured densification method is shown to effectively capture high-frequency details with fewer Gaussians, while the default method struggles to do so even with a large number of Gaussians.

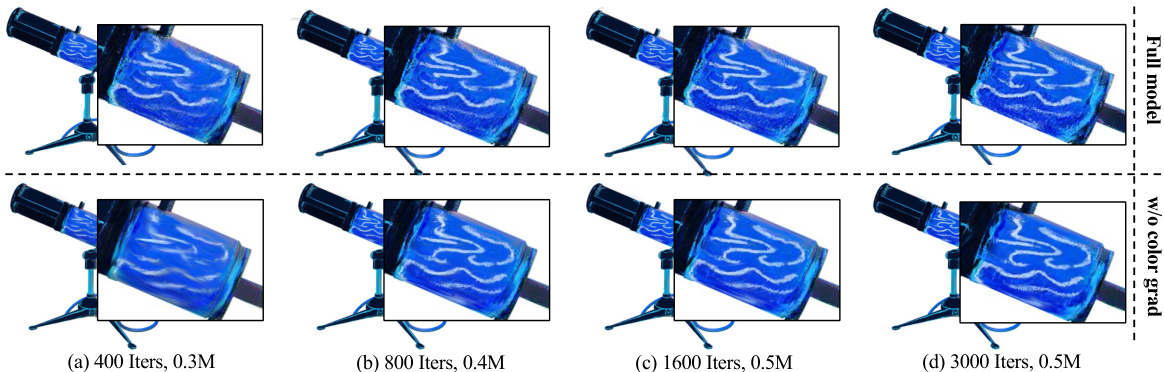

This ablation study compares the performance of ReGS using color-gradient guidance versus positional-gradient guidance. The results show that using color gradients to guide the texture-based control mechanism is crucial for capturing high-frequency texture details. In contrast, positional gradients are insufficient for accurately reproducing fine details of the reference texture.

This ablation study explores the impact of varying the number of new Gaussians generated when splitting a responsible Gaussian during the texture-guided control process. The results demonstrate that using a small number of Gaussians fails to capture fine details, while increasing the number beyond a certain point yields diminishing returns, indicating saturation in performance improvement. The optimal balance is found at a specific number of new Gaussians, demonstrating that the proposed structured densification strategy effectively enhances high-frequency texture representation without unnecessary computational overhead.

This ablation study demonstrates the importance of each component in the ReGS model. (a) shows that only optimizing appearance leads to a lack of fine texture detail. (b) illustrates that the depth regularization is crucial for maintaining the correct geometry. (c) highlights the necessity of pseudo-view supervision for view consistency. Finally, (d) showcases the superior performance of the complete ReGS model, accurately reproducing the reference texture.

This figure provides a visual overview of the ReGS method. It shows the process, starting with a pretrained 3D Gaussian Splatting (3DGS) model of the target scene and a style reference image. The core of the method, Texture-Guided Gaussian Control, is highlighted, showing how it addresses texture underfitting by adjusting the geometry and arrangement of Gaussians. The final result is real-time stylized scene navigation.

This figure compares the results of ReGS against three other state-of-the-art methods for reference-based 3D scene stylization: ARF, SNeRF, and Ref-NPR. Each row shows a different scene with its reference image on the far left. The following columns show the results from each method, highlighting the superior detail and accuracy of ReGS, especially regarding high-frequency textures. ARF and SNeRF often fail to achieve the level of detail or semantic consistency as ReGS, while Ref-NPR produces some artifacts.

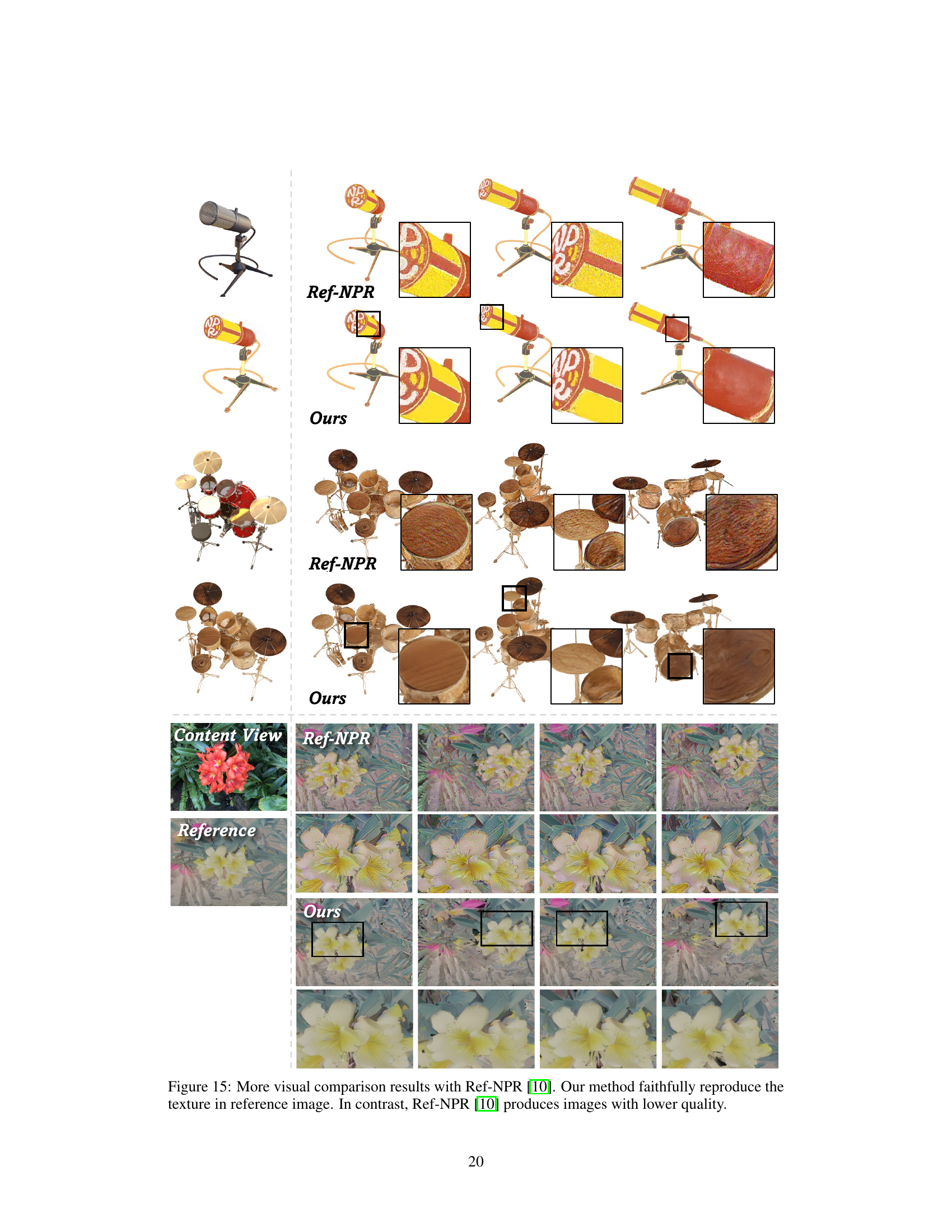

This figure compares the results of the proposed method, ReGS, against Ref-NPR, another reference-based stylization method. It shows three different scenes: microphones, drums, and flowers. For each scene, the content view, reference image, Ref-NPR results, and ReGS results are presented. The results illustrate that ReGS better reproduces the texture of the reference image compared to Ref-NPR, which shows some artifacts.

Full paper#