↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Constrained Reinforcement Learning (CRL) traditionally focuses on stochastic policies, but deterministic policies are often preferred for their predictability and robustness in real-world applications like self-driving cars and medical decision-making. However, finding optimal deterministic policies in CRL problems has been proven NP-hard for most popular constraints. This poses a significant challenge, as stochastic policies can exhibit undesirable randomness and unpredictability.

This research introduces a novel algorithm that overcomes this limitation by efficiently computing near-optimal deterministic policies for a broad range of constraints. The algorithm leverages three key ideas: value-demand augmentation, action-space approximate dynamic programming, and time-space rounding. The result is a fully polynomial-time approximation scheme (FPTAS), providing a provably efficient solution to the problem. This significantly advances the field of CRL, enabling the development of more reliable and predictable AI systems.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in reinforcement learning and related fields because it provides the first fully polynomial-time approximation scheme (FPTAS) for computing near-optimal deterministic policies in constrained Markov Decision Processes (CMDPs). This addresses a long-standing challenge and opens new avenues for solving complex real-world problems where deterministic and constrained policies are essential. The algorithms developed are practically relevant and can directly lead to more robust, trustworthy, and predictable AI systems. It also solves previously open problems regarding anytime-constrained, almost-sure-constrained, and deterministic expectation-constrained policies.

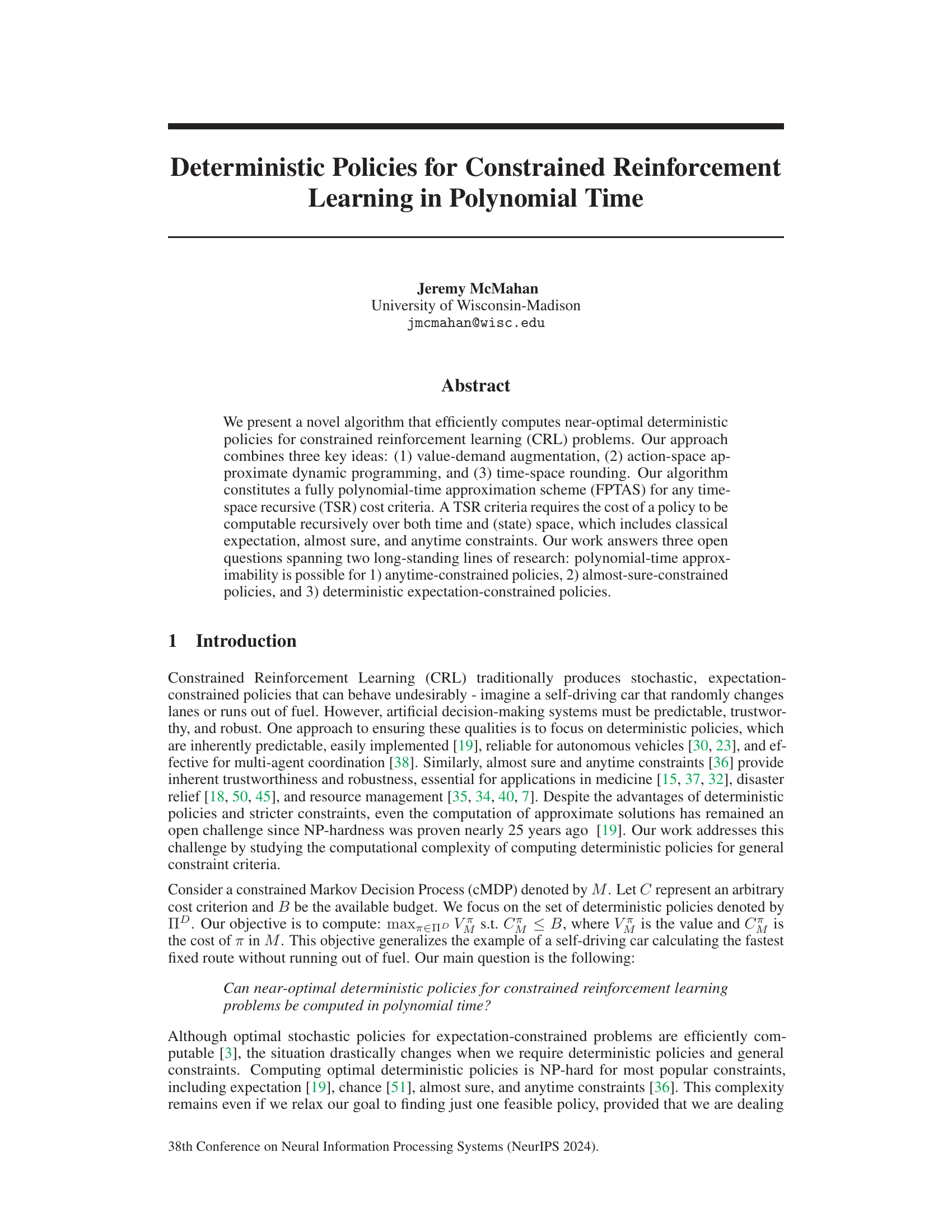

Visual Insights#

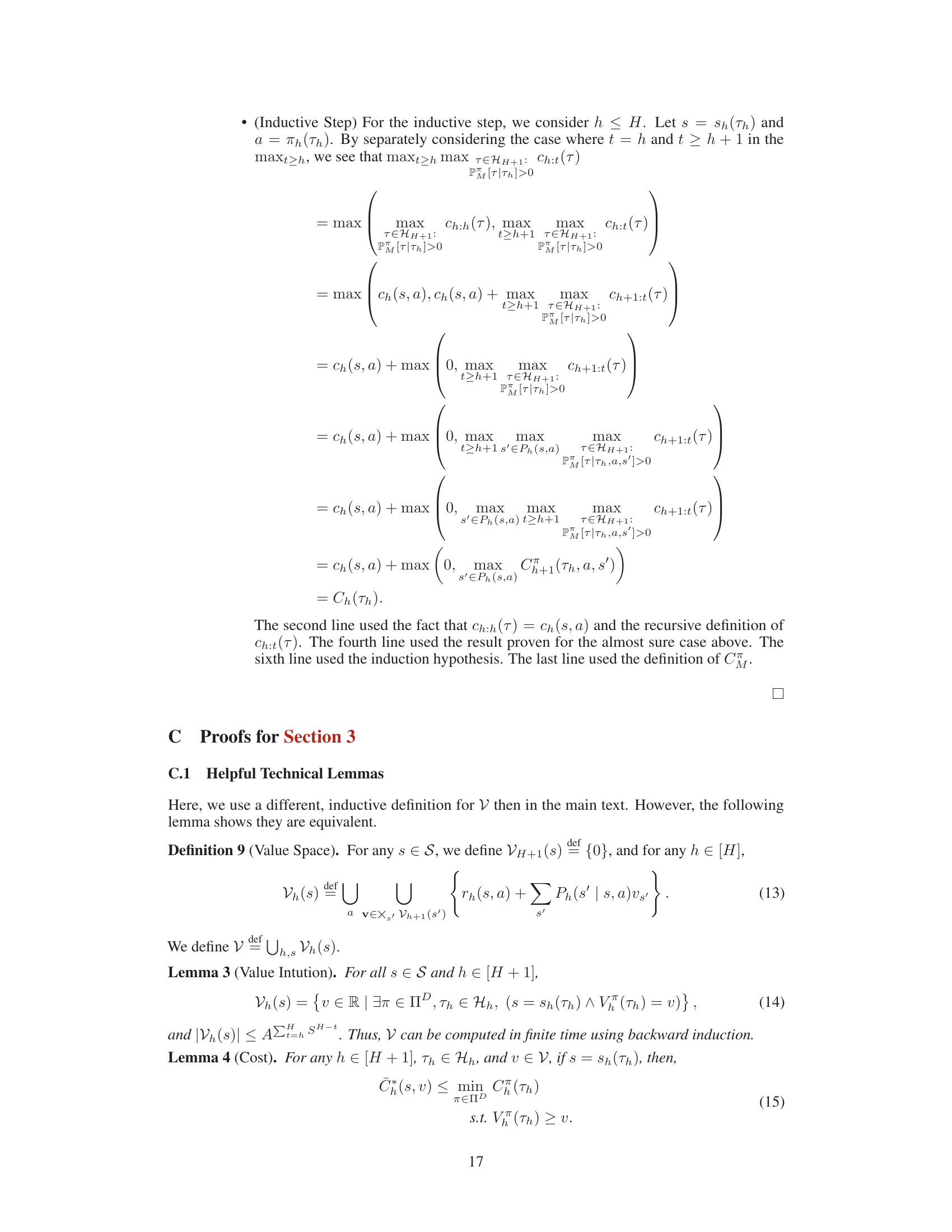

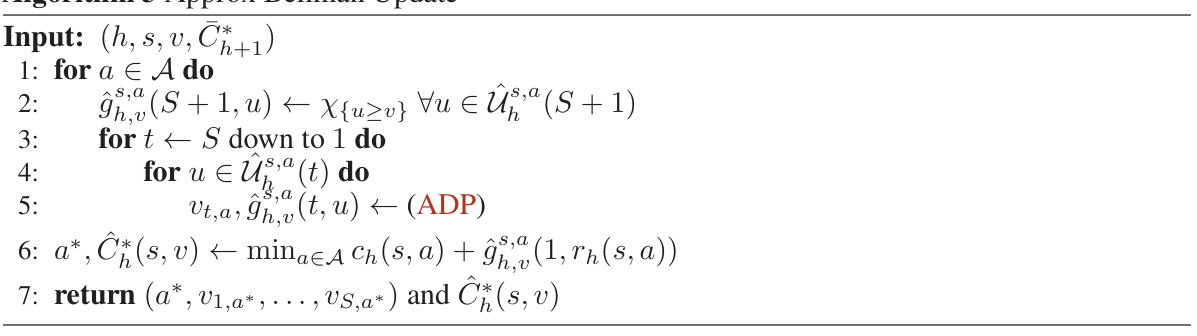

This algorithm reduces the constrained reinforcement learning problem to an unconstrained Markov Decision Process (MDP) problem by augmenting the state space with value demands. The algorithm first transforms the original constrained MDP and cost criterion into a new MDP with a modified cost criterion. Then, it solves the new MDP using a cost-minimizing algorithm. Finally, it checks if the new MDP’s cost is within the budget. If the cost is within the budget, then the algorithm outputs a feasible policy; otherwise, it outputs ‘infeasible’.

In-depth insights#

CRL’s Deterministic Turn#

The heading “CRL’s Deterministic Turn” suggests a shift in constrained reinforcement learning (CRL) from traditional stochastic approaches towards deterministic policies. This is a significant departure, as stochasticity, while offering exploration and robustness, can lead to unpredictable behaviors in applications requiring reliable decision-making. The deterministic turn likely focuses on the advantages of predictability and trustworthiness inherent in deterministic policies. The core challenge in this paradigm shift likely centers on the increased computational complexity, potentially resolving the long-standing NP-hardness problem in computing optimal deterministic policies. The research probably investigates approximation algorithms to find near-optimal solutions efficiently. The exploration of different constraint types (expectation, almost sure, anytime) within a deterministic framework is another important aspect. The study could explore the trade-off between solution optimality, computational efficiency and the types of constraints considered, potentially proposing novel algorithms and theoretical guarantees for achieving a fully polynomial-time approximation scheme (FPTAS). The focus on deterministic policies enhances the practicality and real-world applicability of CRL in safety-critical domains such as autonomous driving and robotics, where deterministic control is crucial for safety and reliability.

FPTAS for Constrained RL#

The prospect of achieving a Fully Polynomial Time Approximation Scheme (FPTAS) for constrained reinforcement learning (CRL) is a significant advancement in the field. This implies the existence of an algorithm that can find near-optimal solutions to complex CRL problems in polynomial time, a crucial improvement over existing methods which often face NP-hardness. The development of such an algorithm would have profound implications for the applicability of CRL in various real-world settings where computational efficiency is paramount, such as robotics, resource management, and healthcare. The core challenge lies in addressing the inherent computational complexity of CRL, particularly when dealing with deterministic policies and stricter constraints like anytime or almost-sure constraints, which are more robust and trustworthy but computationally harder to solve than traditional expectation constraints. An FPTAS would provide a balance between solution quality and computational tractability, allowing the use of powerful CRL methods in scenarios with stringent resource constraints. The key innovations likely involve sophisticated algorithmic techniques such as dynamic programming approximations, clever rounding methods, and possibly novel state-space augmentation strategies to overcome the computational hurdles of CRL. However, achieving an FPTAS will likely require careful consideration of the problem’s specific constraints and may involve assumptions on the cost structure and problem dynamics. Regardless, the goal of an FPTAS remains a compelling target for future research in constrained RL.

Value-Demand Augmentation#

The concept of “Value-Demand Augmentation” presents a novel approach to constrained reinforcement learning (CRL) by augmenting the state space with value demands. This clever technique effectively transforms the original constrained optimization problem into an equivalent unconstrained one, significantly simplifying the computational complexity. By incorporating value demands directly into the state representation, the agent can now reason recursively about the cost of achieving a desired value while simultaneously satisfying the constraint. This approach elegantly addresses the challenge of cyclic dependencies, a significant hurdle in many conventional approaches, thereby enabling the use of efficient dynamic programming methods. The effectiveness hinges on carefully managing the trade-off between the increased state space dimensionality and the computational gain derived from transforming the problem. The algorithm’s effectiveness relies on the ability to accurately round the augmented values, a crucial element for ensuring provable approximation guarantees. This method proves particularly useful for handling diverse constraint types, including expectation, almost sure, and anytime constraints, all encapsulated within a general framework. In essence, “Value-Demand Augmentation” provides a powerful tool for efficiently finding near-optimal deterministic policies in CRL, paving the way for more robust and predictable AI systems in critical applications.

Time-Space Rounding#

The concept of “Time-Space Rounding” in the context of constrained reinforcement learning algorithms is a crucial innovation to address the computational complexity associated with finding near-optimal deterministic policies. The core idea is to simultaneously round the value demands in both the temporal (time) and spatial (state) dimensions. This approach directly combats the exponential blowup in the action space that typically arises from value augmentation in these problems. By carefully controlling the rounding error in both dimensions, the algorithm ensures that feasibility is maintained, and the approximation remains within provable error bounds. This is a key departure from traditional approaches to similar problems, which often struggle with the cyclic dependencies arising from the interrelation of costs and values. The effectiveness of Time-Space Rounding hinges on the carefully chosen rounding function, which needs to balance the accuracy of approximation with the need to control the size of the resulting search space. This trade-off is essential for achieving a fully polynomial-time approximation scheme (FPTAS). The analysis must demonstrate that the error introduced by rounding remains bounded and controllable, both across time and states, to guarantee the algorithm’s performance. The specific rounding function and error analysis are therefore pivotal to the success of the method.

Approximation Algorithm#

Approximation algorithms are crucial when dealing with computationally hard problems, as is often the case in constrained reinforcement learning (CRL). The heading ‘Approximation Algorithm’ suggests a section dedicated to addressing the inherent NP-hardness of finding optimal deterministic policies in CRL. This likely involves techniques to trade off optimality for computational tractability. The authors probably present algorithms that guarantee a solution within a certain bound of the optimal solution, or achieve a specific level of approximation with a proven time complexity. The description of the algorithms’ performance, including error bounds and runtime guarantees, is key here. The discussion might also highlight the trade-off between accuracy and computational efficiency, explaining the choice of specific approximation parameters. It would likely explain how the choice of those parameters affects the algorithm’s performance, including both its runtime and accuracy. It may also cover different approximation schemes, such as additive or relative approximations, and might discuss which one is more suitable under varying circumstances or problem characteristics. The section likely concludes by establishing the computational complexity of the proposed algorithm, ideally proving that it is a fully polynomial-time approximation scheme (FPTAS). Such a result would be a significant contribution, showcasing polynomial-time complexity despite the NP-hard nature of the problem. This would likely be the major focus of this section.

More visual insights#

More on tables

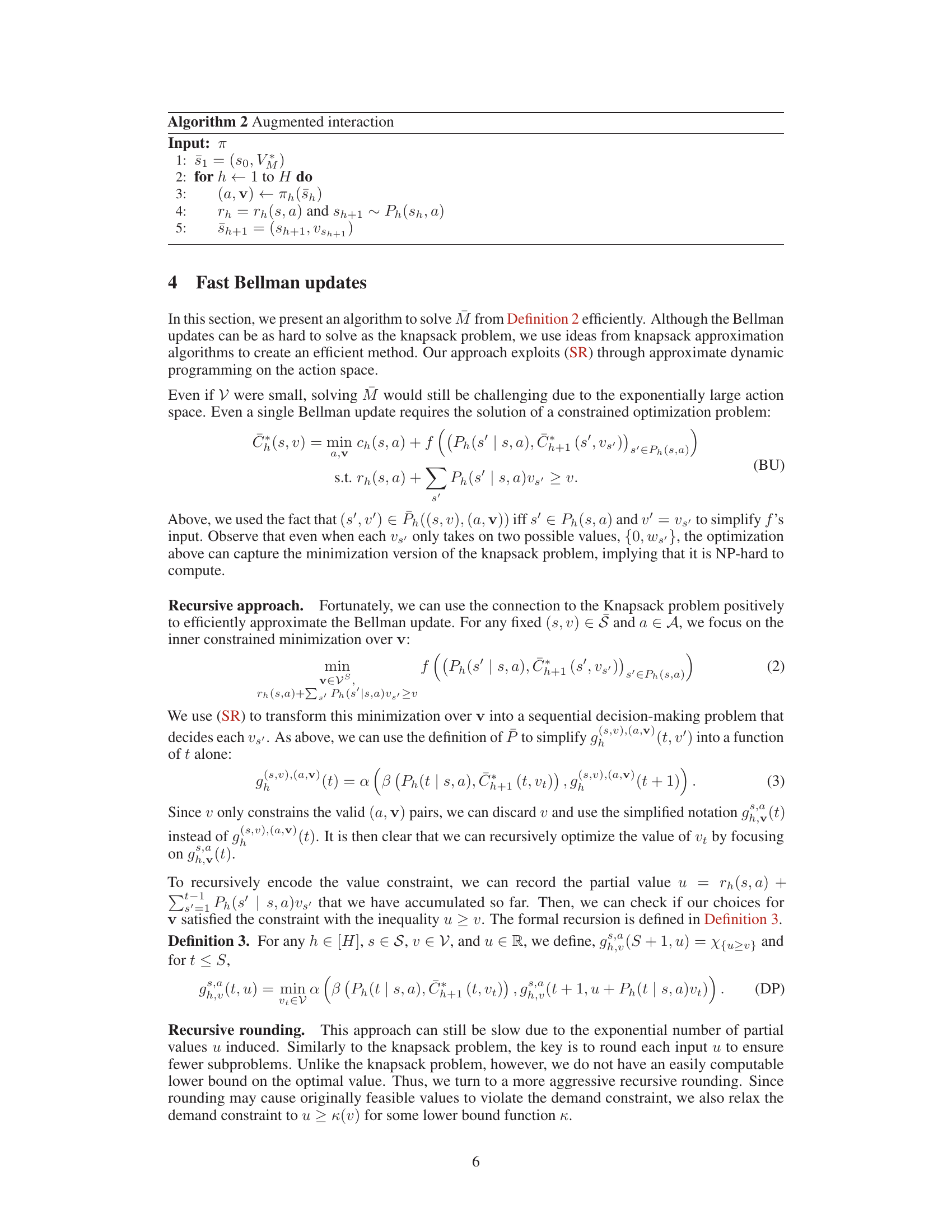

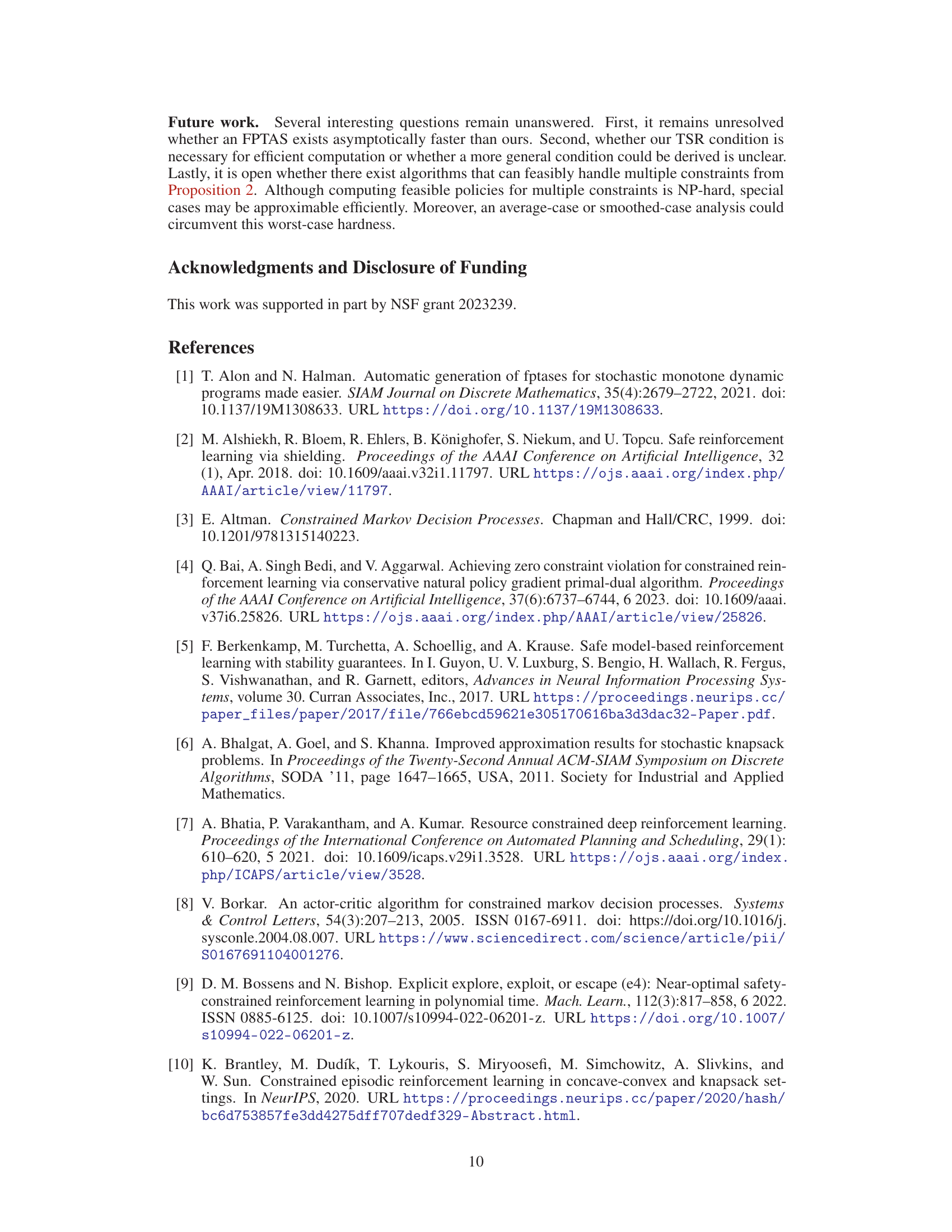

This algorithm details the steps for approximating the Bellman update in the context of the covering program. It uses a recursive approach and incorporates value rounding to efficiently compute the update. The algorithm takes as input the current time step, state, value demand, and next-step cost and outputs the optimal action and its associated cost.

This algorithm provides an approximation scheme for solving the constrained optimization problem (CON) by carefully rounding value demands. It first defines an approximate MDP (Markov Decision Process) using a rounding function and a lower bound function. Then, it solves this approximate MDP using Algorithm 4. The algorithm returns either ‘Infeasible’ if no feasible policy is found, or a near-optimal deterministic policy π.

Full paper#