↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Reinforcement learning (RL) often uses function approximation to handle large state and action spaces. Linear approximation is common but limited; non-linear methods like multinomial logit (MNL) offer greater expressiveness, but introduce computational and statistical challenges, especially regarding regret (difference between optimal and achieved reward). Previous work achieved optimal regret in terms of the number of episodes but incurred high per-episode computational costs.

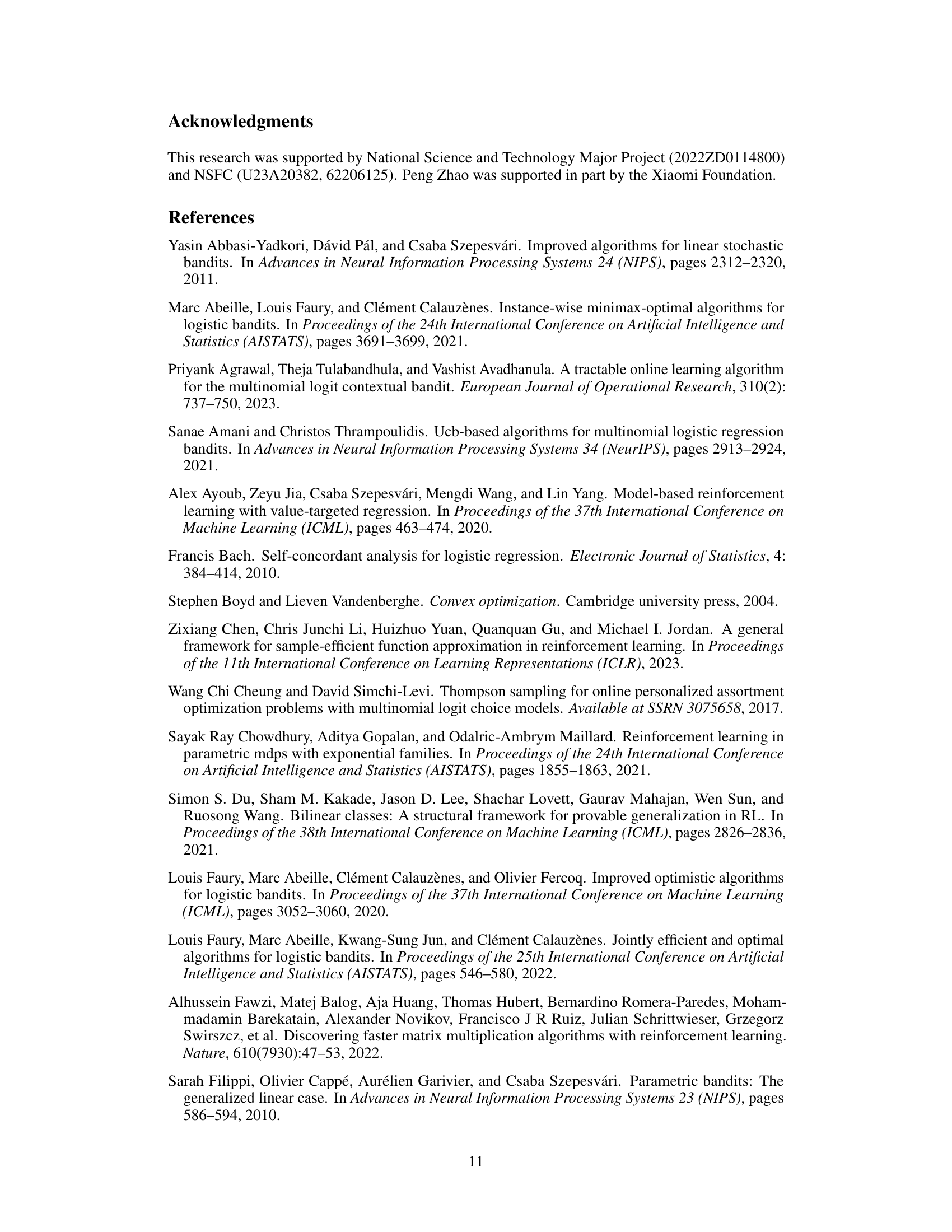

This paper addresses these limitations by introducing two new algorithms (UCRL-MNL-OL and UCRL-MNL-LL) for MDPs using MNL approximation. UCRL-MNL-OL matches the best-known regret while drastically reducing per-episode cost to O(1). UCRL-MNL-LL further improves the regret bound by leveraging local information, almost matching linear methods’ efficiency and nearly closing the gap. The paper also provides the first lower bound, supporting the optimality of the improved results.

Key Takeaways#

Why does it matter?#

This paper is crucial because it tackles the computational and statistical inefficiencies inherent in using multinomial logit (MNL) function approximation in reinforcement learning (RL). By developing novel algorithms with O(1) computational and storage costs, and achieving an improved regret bound, it bridges the gap between linear and non-linear function approximation in RL. This opens doors for applying MNL approximation to larger-scale problems and inspires further research into efficient non-linear function approximation methods for RL.

Visual Insights#

This table compares the regret, computation cost, and storage cost of different reinforcement learning algorithms for Markov Decision Processes (MDPs) that use multinomial logit (MNL) function approximation. It shows how the proposed algorithms (UCRL-MNL-OL and UCRL-MNL-LL) improve upon the existing state-of-the-art (Hwang and Oh [2023]) in terms of computational and storage efficiency while achieving comparable or better regret bounds. The table also presents a lower bound on the regret, highlighting the near-optimality of the proposed algorithms.

Full paper#