↗ OpenReview ↗ NeurIPS Homepage

TL;DR

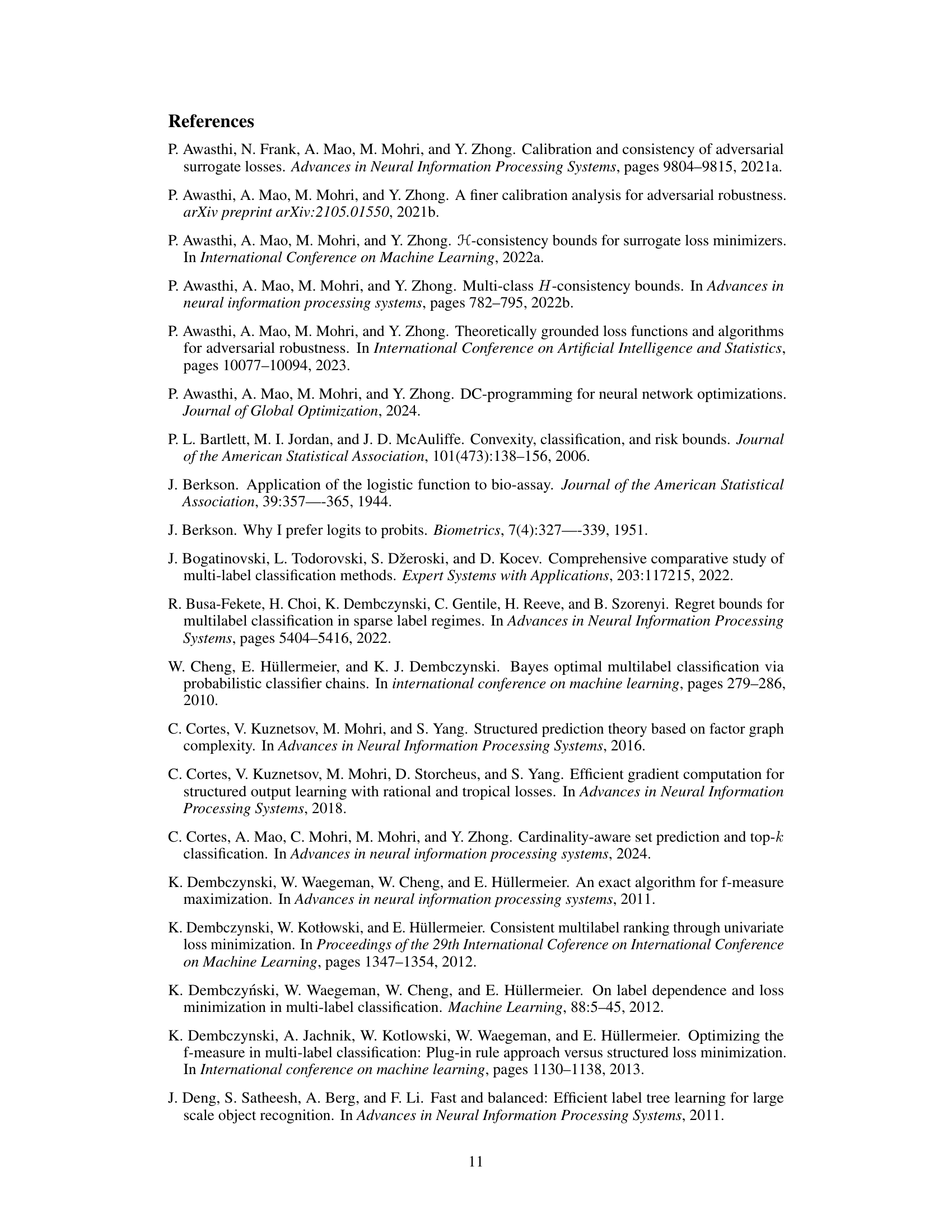

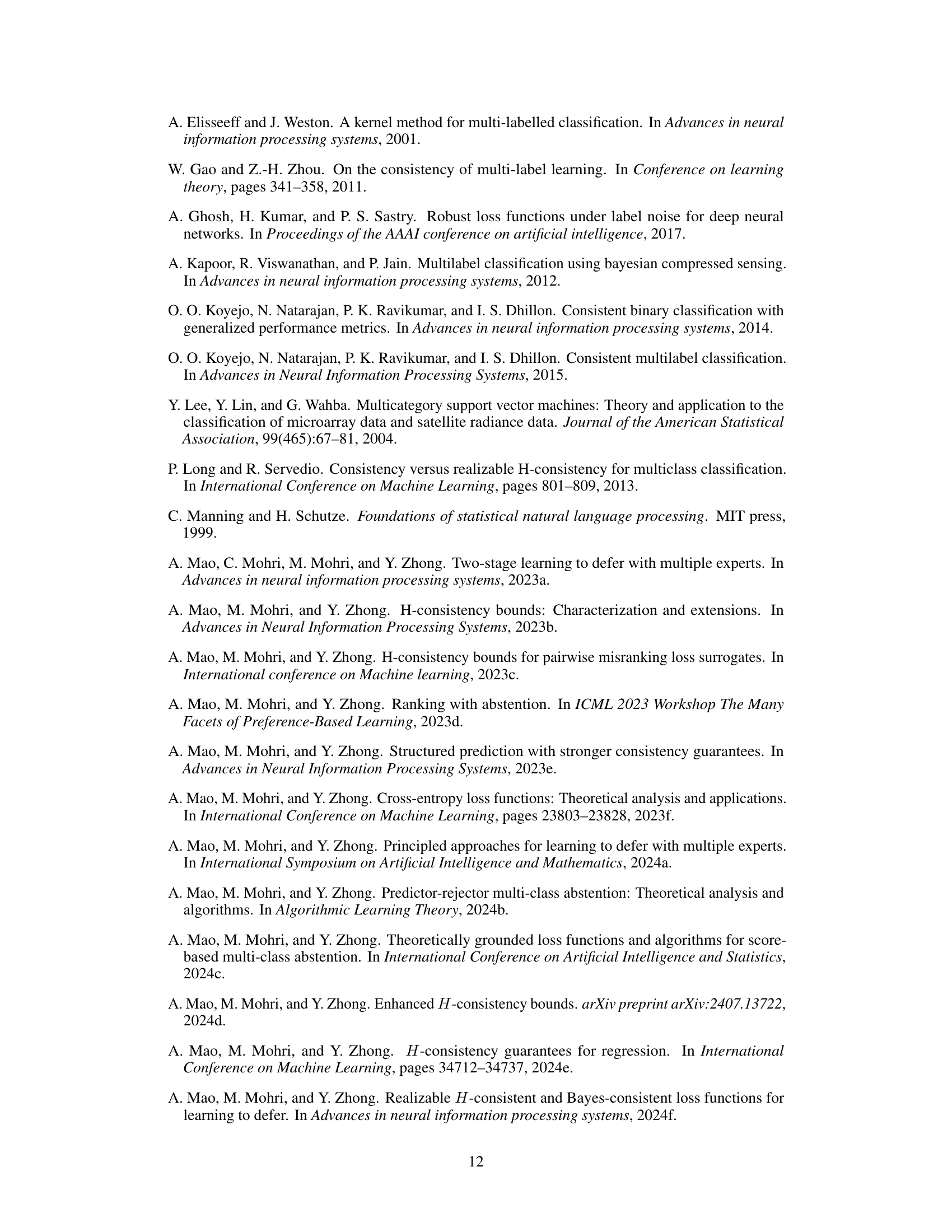

Multi-label learning assigns several labels to each data instance. Existing surrogate losses for this setting have two weaknesses: their guarantees depend suboptimally on the number of labels, and they fail to capture correlations between labels. The result is weaker theoretical guarantees and less effective algorithms.

This paper introduces new surrogate losses, the multi-label logistic loss and comp-sum losses, that address both weaknesses: they admit H-consistency bounds that do not depend on the number of labels, and they account for label correlations. The authors develop a unified framework for multi-label learning that benefits from these strong consistency guarantees. They also provide efficient gradient computation algorithms, which make the approach practical for a range of multi-label learning tasks.
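To make the terminology concrete, here is a minimal Python sketch of a target loss and a simple per-label surrogate. It assumes, as an illustration only, that labels are encoded as +1/-1, that the target is the Hamming loss, and that the "existing" surrogate is a sum of independent per-label logistic losses (often called binary relevance). The function names are hypothetical, and this is not the paper's multi-label logistic loss or its comp-sum losses.

```python
import math


def hamming_loss(y_pred, y_true):
    """Count the label positions where prediction and truth disagree."""
    return sum(1 for p, t in zip(y_pred, y_true) if p != t)


def binary_relevance_logistic(scores, y_true):
    """Sum of independent per-label logistic losses.

    scores[i] is a real-valued score for label i and y_true[i] is +1 or -1.
    Each term depends on one label only, so the surrogate cannot express
    any interaction (correlation) between labels.
    """
    return sum(math.log1p(math.exp(-t * s)) for s, t in zip(scores, y_true))


def predict(scores):
    """Threshold each score at zero to obtain a +1/-1 label vector."""
    return [1 if s >= 0 else -1 for s in scores]


# Hypothetical scores for three labels and their true values.
scores = [2.0, -0.5, 0.3]
y_true = [1, 1, -1]
y_pred = predict(scores)

print("prediction:", y_pred)
print("Hamming loss:", hamming_loss(y_pred, y_true))
print("surrogate loss:", binary_relevance_logistic(scores, y_true))
```

Because the surrogate here decomposes over labels, it treats every label as a separate binary problem. That is the kind of structure the summary refers to when it says existing losses fail to capture label correlations.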

Key Takeaways

Why does it matter?

This paper matters because it offers a unified framework for multi-label learning with stronger consistency guarantees, whereas previous work established such guarantees only for specific loss functions. The results bear directly on both algorithm design and the theoretical understanding of multi-label learning. The new surrogate losses (multi-label logistic loss and comp-sum losses), together with efficient gradient computation algorithms, make the work relevant to researchers and practitioners alike.

Visual Insights

Full paper