↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Inverse Reinforcement Learning (IRL) faces a significant hurdle: scaling to large state spaces. Existing methods struggle to efficiently estimate the feasible reward set, which encompasses all rewards explaining observed expert behavior. This is problematic because the size of the feasible set grows exponentially with state space dimensions. This paper addresses these issues.

The solution proposed is CATY-IRL, a novel algorithm based on the concept of ‘rewards compatibility’. CATY-IRL efficiently classifies rewards according to their compatibility with expert demonstrations, sidestepping the need to explicitly compute the computationally expensive feasible set. Its complexity is independent of the state space size in linear MDPs. For tabular settings, the algorithm’s efficiency is proven to be minimax optimal, improving existing bounds on the related problem of reward-free exploration.

Key Takeaways#

Why does it matter?#

This paper is crucial because it tackles the scalability challenges of Inverse Reinforcement Learning (IRL) in large state spaces, a critical limitation hindering real-world applications. The proposed CATY-IRL algorithm offers a provably efficient solution, overcoming existing limitations and opening new avenues for research in efficient IRL methods and the related field of Reward-Free Exploration.

Visual Insights#

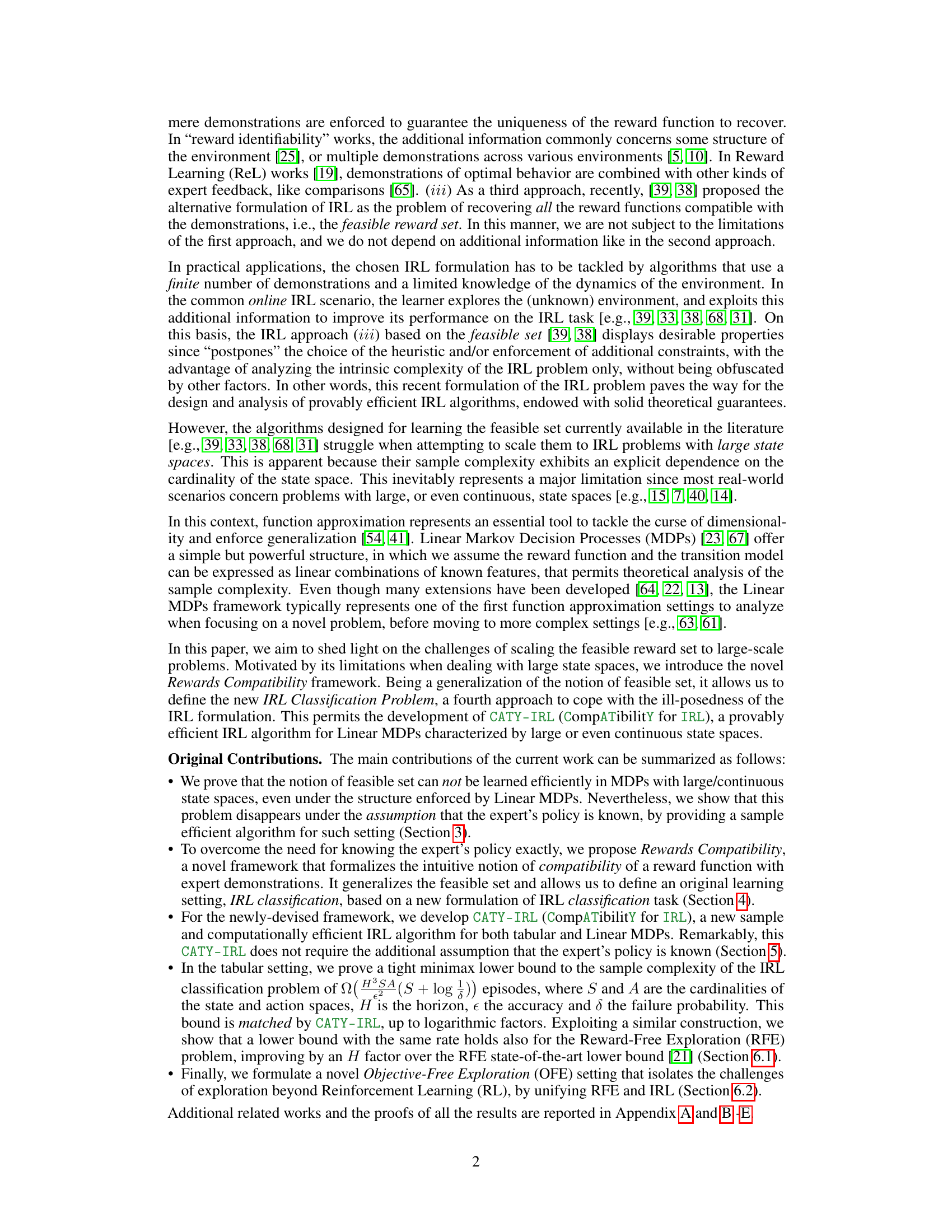

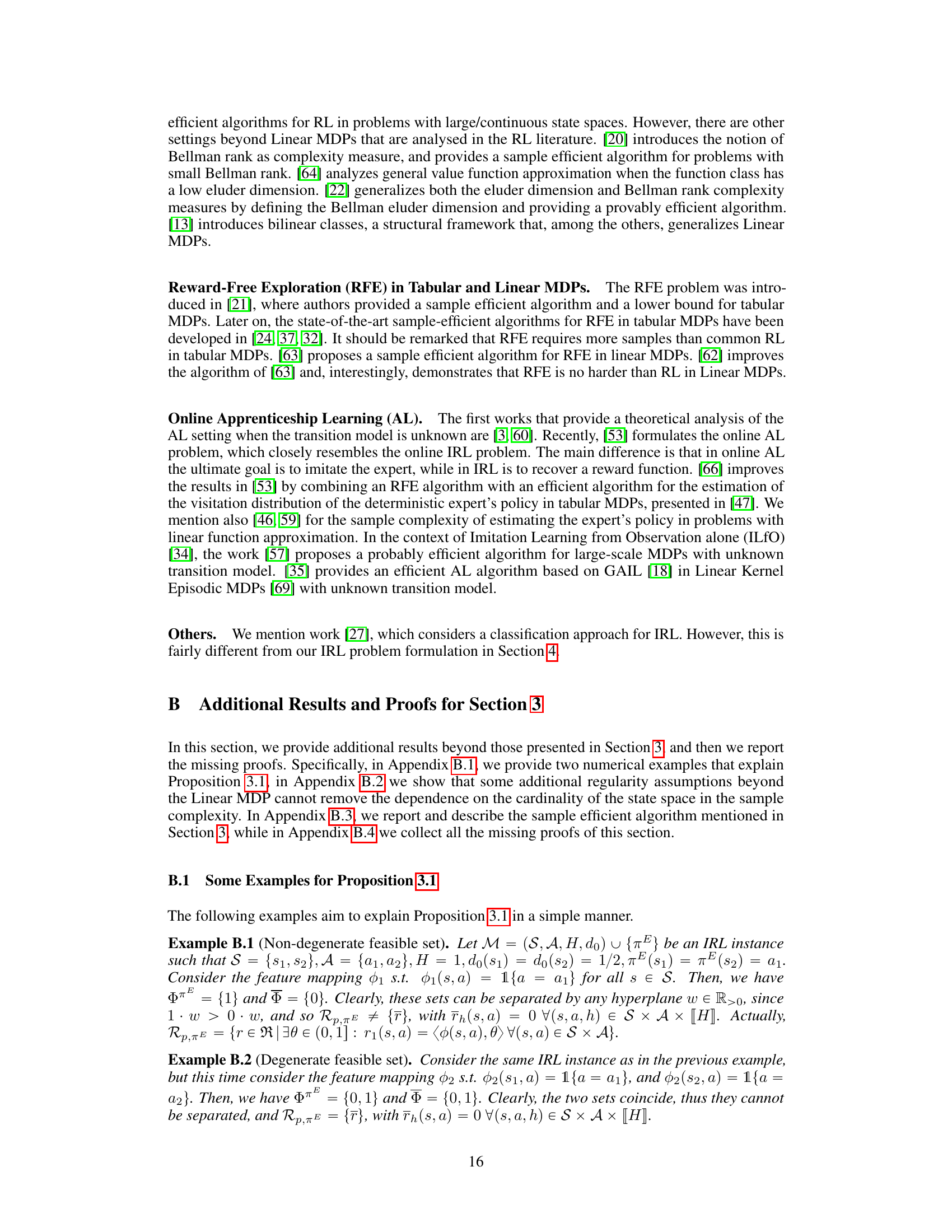

The figure illustrates the concept of PAC framework for IRL classification problem. It shows how rewards are classified based on their (non)compatibility with the expert policy. The rewards whose true (non)compatibility is far from the threshold are correctly classified, while those near the threshold can be misclassified. The uncertainty region shrinks as the accuracy increases.

This table summarizes four related problems: Reward-Free Exploration (RFE), Inverse Reinforcement Learning (IRL), Matching Performance (MP), and Imitation Learning from Demonstrations alone (ILfO). It outlines the input (what the learner receives), the assumptions made, the output the algorithm provides, and the goal of each problem. The problems share similarities, particularly in the use of exploration to learn about an unknown environment before solving a specific task, but differ in the type of task and the information provided.

In-depth insights#

IRL’s State Space Issue#

The core challenge highlighted in the provided text is IRL’s scalability to large state spaces. Traditional IRL methods struggle because they often attempt to characterize the entire feasible reward set—all reward functions consistent with observed expert behavior. This approach’s complexity grows exponentially with the size of the state space, leading to computational and statistical inefficiency. The paper addresses this by introducing a novel concept of rewards compatibility, generalizing the feasible set to efficiently classify rewards based on their compatibility with expert demonstrations. This shift to a classification framework enables the development of sample-efficient algorithms, significantly mitigating the state space issue and improving scalability. Linear MDPs are used as a crucial structure to enable provable efficiency. However, the paper also demonstrates that even the Linear MDP structure is insufficient for efficient feasible set learning with large state spaces. The proposed solution, therefore, offers a paradigm shift in tackling the core limitations of existing IRL approaches when facing the curse of dimensionality in large real-world applications.

CATY-IRL Algorithm#

The CATY-IRL algorithm is presented as a sample-efficient solution to the inverse reinforcement learning (IRL) problem, particularly addressing the challenge of scaling to large state spaces. Unlike previous IRL methods that struggle with large state spaces due to their reliance on estimating the entire feasible reward set, CATY-IRL leverages a novel framework called “rewards compatibility.” This framework generalizes the notion of the feasible set and formulates the IRL problem as a classification task. Crucially, the algorithm’s complexity is shown to be independent of the state space cardinality. The algorithm’s efficiency is further demonstrated through theoretical analysis and comparison to existing baselines. Minimax optimality is proven up to logarithmic factors in tabular settings and CATY-IRL’s effectiveness is contrasted with the limitations of learning the feasible reward set directly in large state spaces, highlighting its advantages. The algorithm’s practical implementation and potential in real-world applications are discussed. Its ability to unify IRL with reward-free exploration is also explored.

Rewards Compatibility#

The proposed “Rewards Compatibility” framework offers a novel approach to address the inherent ill-posedness of Inverse Reinforcement Learning (IRL). Instead of focusing solely on the feasible reward set—which suffers from scalability issues in large state spaces—this method introduces a measure of compatibility between rewards and expert demonstrations. This approach shifts the problem from reward identification to reward classification, enabling the development of more efficient algorithms. By quantifying the suboptimality of the expert policy under candidate rewards, the framework moves beyond a binary notion of compatibility, offering a richer and more nuanced understanding of how well a given reward explains the observed behavior. This allows for the incorporation of heuristics or additional constraints in a more principled way, potentially leading to more robust and generalizable solutions. The focus on compatibility opens new avenues for theoretically sound algorithm design and provides a more practical framework for real-world applications of IRL, where complete reward identifiability might not be achievable or desirable.

Minimax Optimality#

Minimax optimality, in the context of machine learning, signifies achieving the best possible performance in the worst-case scenario. It’s a crucial concept when dealing with adversarial settings or situations with high uncertainty, where an algorithm’s performance could be significantly affected by unforeseen circumstances. The goal is to design algorithms that minimize the maximum possible loss, ensuring robustness against unexpected data or attacks. This contrasts with average-case optimization, which focuses on minimizing expected loss, making minimax approaches better suited for risk-averse applications. A key challenge in establishing minimax optimality is proving lower bounds on the performance of any algorithm, demonstrating that no algorithm can achieve better performance in the worst case. Tight minimax bounds show a near-optimal solution, where the upper bound of a specific algorithm’s performance closely matches the lower bound. This provides a strong guarantee of the algorithm’s effectiveness in the worst-case scenario, making minimax a powerful tool for analyzing algorithm robustness and efficiency, especially in scenarios where worst-case performance is critical.

Future of Exploration#

The “Future of Exploration” in reinforcement learning (RL) and inverse RL (IRL) hinges on addressing the limitations of current approaches. Scaling to large state spaces remains a critical challenge, demanding more efficient algorithms and function approximation techniques beyond linear models. Bridging the gap between theoretical guarantees and practical implementations is crucial. While the feasible reward set offers a theoretically sound framework, its computational cost prohibits real-world application; alternative formulations emphasizing reward compatibility are promising. Unifying exploration frameworks that integrate RL and IRL into a single paradigm, as proposed with Objective-Free Exploration, offer a novel direction that simplifies algorithm design and improves sample efficiency. Furthermore, research should focus on robustness to noise and uncertainty, particularly in handling suboptimal demonstrations, and exploring different feedback mechanisms beyond demonstrations. Ultimately, the future of exploration lies in developing provably efficient algorithms that generalize effectively to complex real-world scenarios.

More visual insights#

More on figures

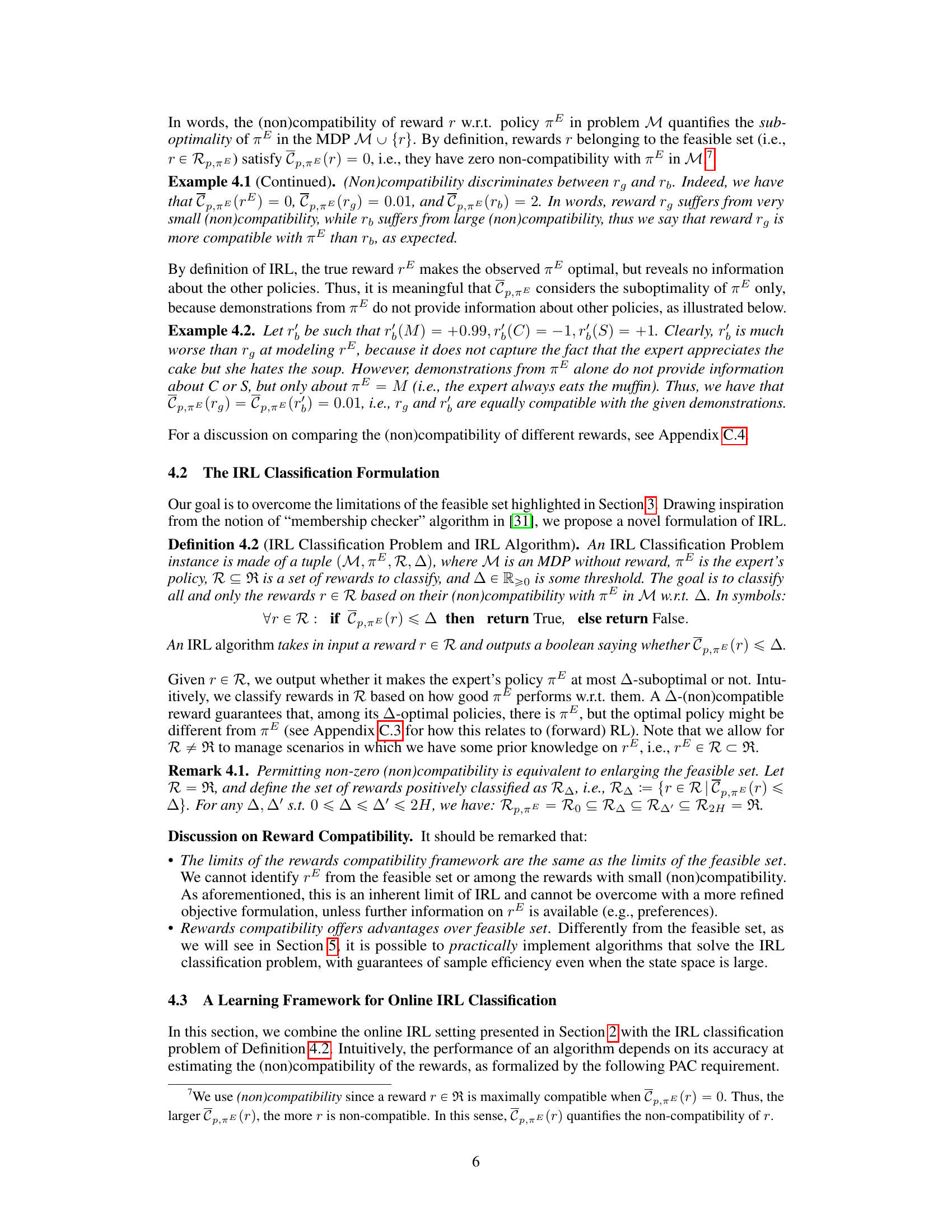

The figure shows a flowchart of the CATY-IRL algorithm. The algorithm consists of two phases: exploration and classification. In the exploration phase, the algorithm collects a dataset D. In the classification phase, the algorithm estimates the expert’s performance JE(r) using the expert dataset DE and estimates the optimal performance J*(r) via planning with dataset D. The algorithm then classifies the reward r based on the (non)compatibility C(r) = J*(r) - JE(r) and a given threshold Δ.

The figure shows the occupancy measures of different policies as rays starting from the initial state. Policies with similar occupancy measures have rays close together, while those with dissimilar measures have rays far apart. The red area highlights the policies close to the expert’s policy in the L1 norm.

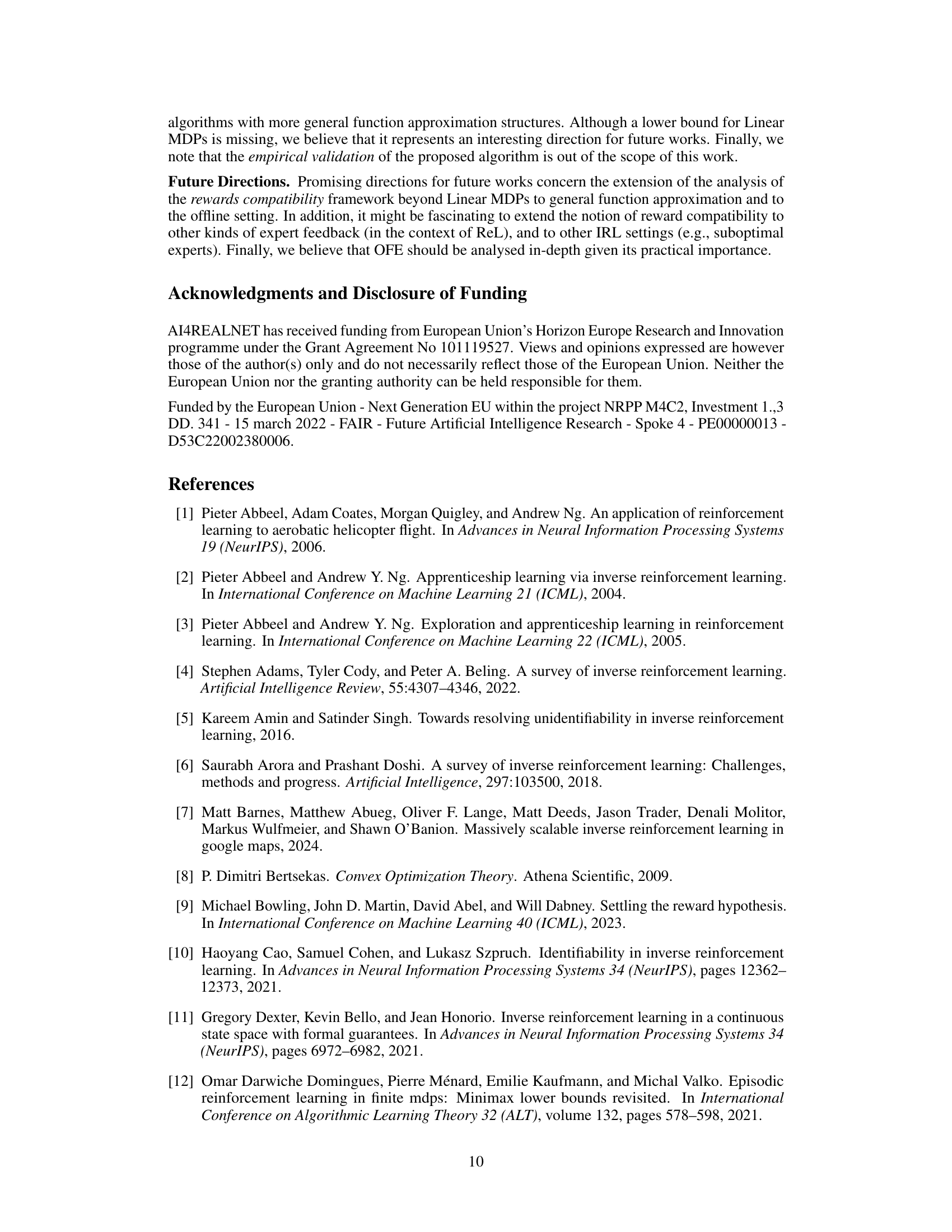

This figure depicts the hard instances used to prove the lower bound for the sample complexity of RFE and IRL. The key components are the initial state (sw), absorbing states (sg, sb, sE), and a full A-ary tree of depth d-1 with root sroot and leaves L. The expert policy (πE) leads to the absorbing state sE, while other actions lead to the tree. Transitions from leaves lead to sg (good state with reward 1) or sb (bad state with reward 0) with probabilities determined by parameter ε’. This construction forces any algorithm to explore a significant portion of the state space to distinguish between MDP instances that are close in terms of the Hausdorff distance.

The figure shows hard instances used in the lower bound proof. The instances are constructed using a binary tree structure, where the root node is connected to two subtrees. Each subtree consists of multiple absorbing states. The expert’s policy leads to an absorbing state that gives a reward, and other actions lead to other absorbing states with either reward +1 or 0. The transitions to these absorbing states are probabilistic. It is used to demonstrate that a certain number of samples are needed to distinguish between two instances.

Full paper#