↗ OpenReview ↗ NeurIPS Homepage

TL;DR

The paper addresses the problem of fitting high-dimensional sparse linear regression models when the data is provided by self-interested agents who value their privacy. Traditional methods struggle to incentivize truthful reporting while also ensuring privacy and accuracy, especially in high dimensions, which motivates mechanisms that balance all three concerns.

The researchers propose a mechanism built on a closed-form private estimator. The estimator is jointly differentially private, meaning it protects the privacy of each agent's individual data contribution. The mechanism also includes a payment scheme that makes it truthful, in the sense that most agents are incentivized to report their data honestly. The mechanism is shown to have low error and to require only a small payment budget. According to the authors, it is the first truthful and privacy-preserving mechanism designed for high-dimensional sparse linear regression.
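
For reference, joint differential privacy is usually stated in the literature as follows. This is the standard textbook form, not a formula taken from the paper: the symbols $\mathcal{M}$, $D$, $D'$, $S$, $\varepsilon$, and $\delta$ are assumed here, and the paper's own notation and parameters may differ. A mechanism $\mathcal{M}$ that maps the data of $n$ agents to one output per agent is $(\varepsilon, \delta)$-jointly differentially private if, for every agent $i$, every pair of datasets $D$ and $D'$ that differ only in agent $i$'s data, and every set $S$ of possible outputs to the other $n-1$ agents,

$$
\Pr\left[\mathcal{M}(D)_{-i} \in S\right] \le e^{\varepsilon} \, \Pr\left[\mathcal{M}(D')_{-i} \in S\right] + \delta ,
$$

where $\mathcal{M}(D)_{-i}$ denotes the outputs sent to all agents other than $i$. Informally, what everyone else receives changes very little when agent $i$ changes their own report, while agent $i$'s own output (for example, their payment) is allowed to depend on their data.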

Key Takeaways

Why does it matter?

This paper is relevant to researchers in differential privacy, mechanism design, and high-dimensional statistics. It connects theoretical guarantees with practical applicability by providing an efficient, truthful mechanism for high-dimensional sparse linear regression. The closed-form estimator and the asymptotic analysis offer useful insights for future research in privacy-preserving machine learning.

Visual Insights

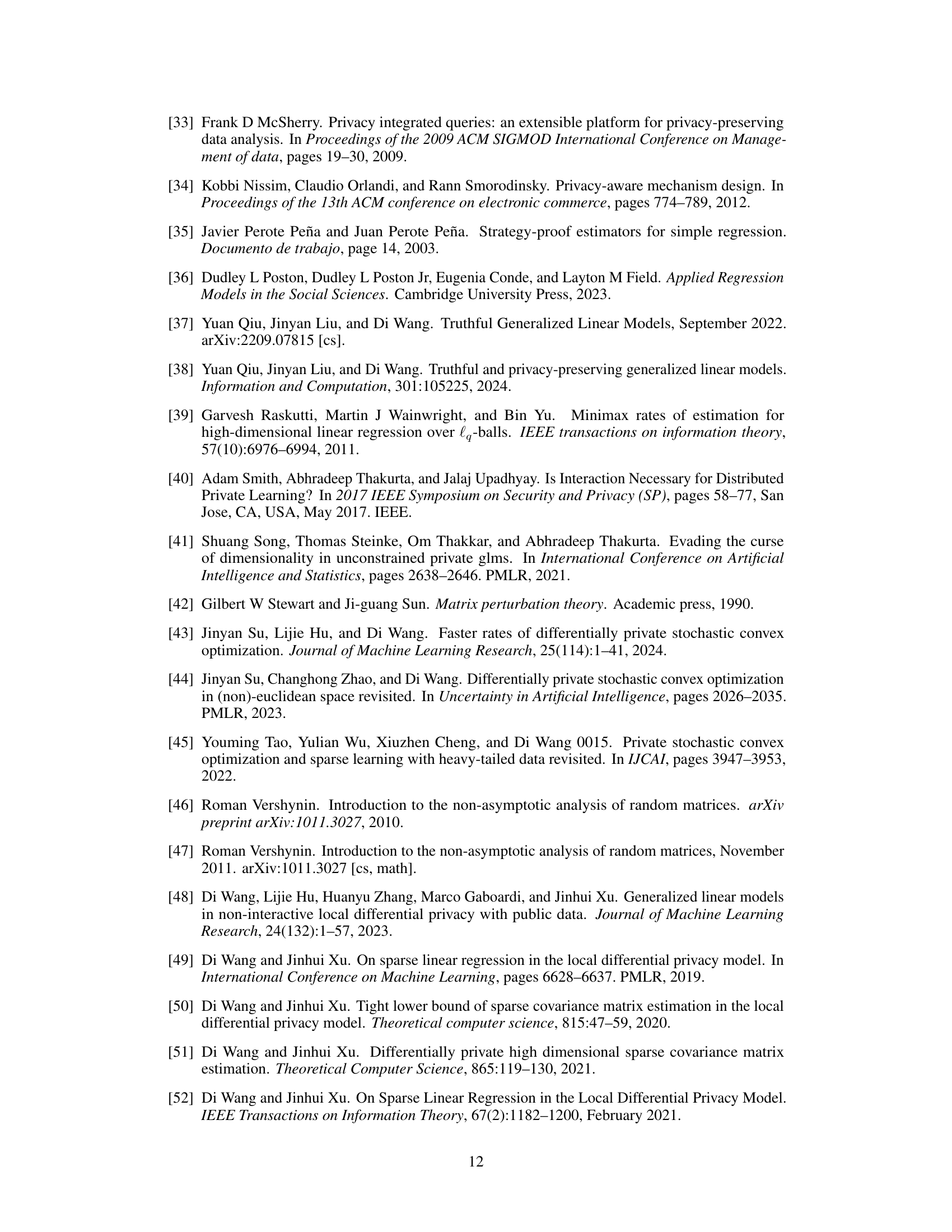

This table lists the notation used throughout the paper, including the symbols for the number of agents, dimensionality, feature vectors, responses, covariance matrices, norms, privacy cost, payment, and the private estimator.

Full paper