↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

The human brain’s cognitive processes can be modeled using Bayesian computation, which offers a novel approach to distributed information processing. However, applying these models to real-world scenarios remains challenging. Event cameras provide asynchronous event streams representing spatiotemporal data, but inferring motion targets from these streams without prior knowledge is difficult. Existing Bayesian inference-based Expectation-Maximization (EM) frameworks are effective but computationally expensive.

This research proposes a novel spike-based Bayesian computation framework for efficiently decoupling event streams of different motions. It leverages the Winner-Take-All (WTA) circuits for event assignment and Spike Timing Dependent Plasticity (STDP) for parameter optimization. Theoretical analysis and experiments demonstrate the effectiveness of this approach, showing that STDP-based learning maximizes the contrast of warped events under mixed motion models and enabling effective motion segmentation. This work contributes a biologically plausible, energy-efficient method for event-based motion segmentation, bridging the gap between theoretical models and real-world applications.

Key Takeaways#

Why does it matter?#

This paper is important because it bridges the gap between biologically-inspired computation and real-world applications. It provides a theoretically sound and experimentally validated framework for event-based motion segmentation, a crucial task in various fields like robotics and autonomous driving. The use of spiking neural networks offers potential for low-power, efficient processing, which is particularly relevant for edge computing and neuromorphic hardware. The study also opens up new avenues for exploring Bayesian computation in neuromorphic systems, which is a significant area of current research.

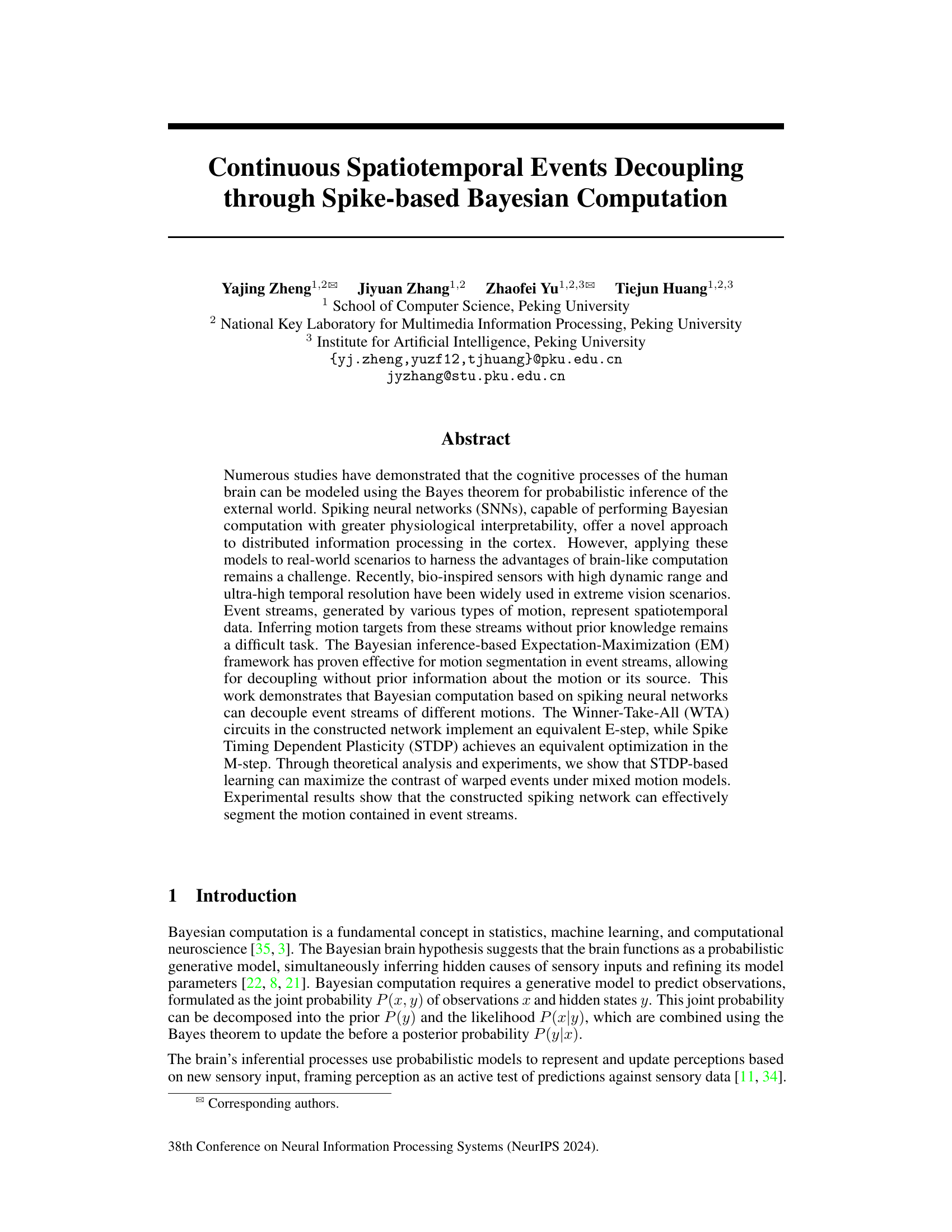

Visual Insights#

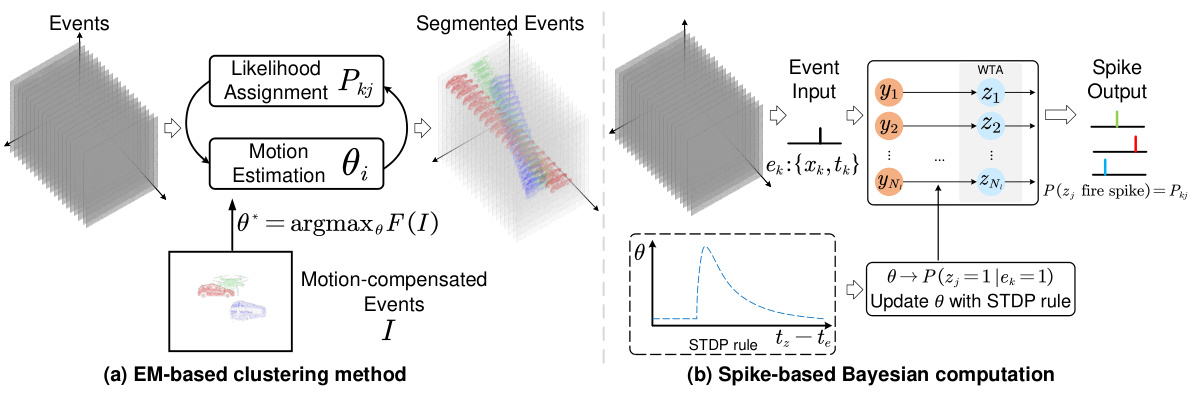

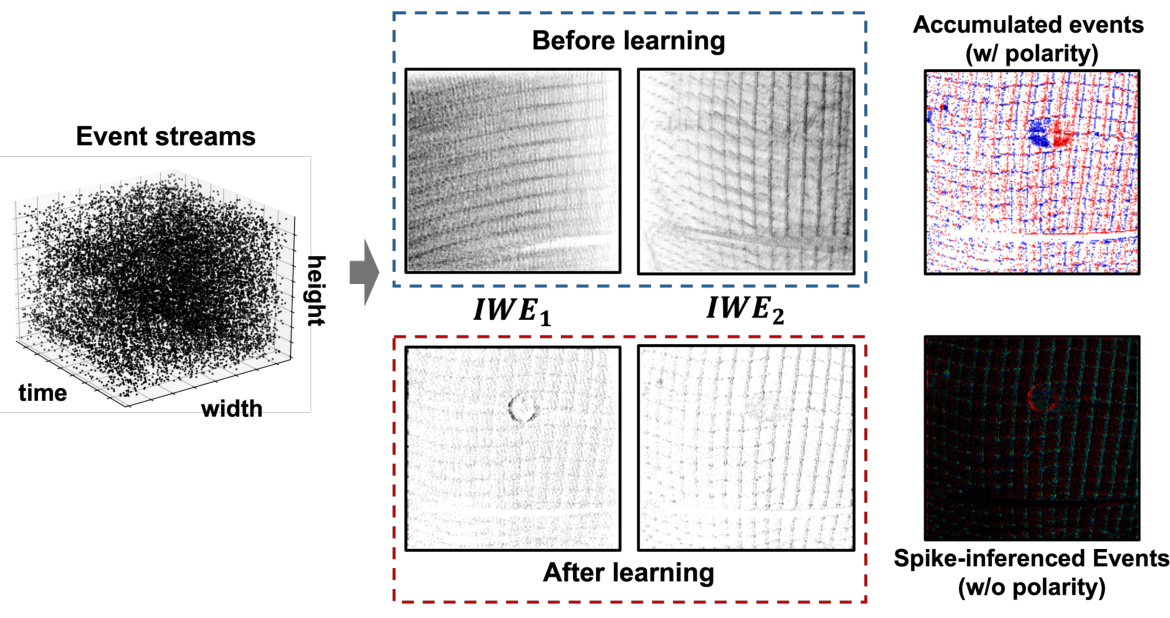

This figure compares two methods for event decoupling: EM-based clustering and the proposed spike-based Bayesian computation. The EM-based clustering method uses an iterative algorithm to group events based on their likelihood of belonging to different motion models, while the spike-based Bayesian computation method uses a network of spiking neurons to perform Bayesian inference and decouple the event streams. The figure shows the steps involved in each method, including the assignment of events to clusters, the estimation of motion parameters, and the update of model parameters based on the data. The spike-based Bayesian computation method is shown to be more efficient and accurate than the EM-based clustering method.

In-depth insights#

Spatiotemporal Decoupling#

Spatiotemporal decoupling, in the context of event-based vision, refers to the ability to disentangle and separate different motion patterns from a mixture of events. This is a challenging task because events are inherently spatiotemporally coupled; an object’s motion creates a cascade of events that blend together, making it difficult to assign individual events to their correct motion sources. The core idea is to break this entanglement, isolating distinct motion components within the event stream. This can be achieved using various methods, including probabilistic inference and optimization algorithms like Expectation-Maximization (EM), often combined with techniques for motion compensation and model fitting. The use of spiking neural networks (SNNs) offers a unique approach, potentially providing bio-inspired solutions that mimic the brain’s efficient information processing capabilities. By exploiting the temporal precision of event cameras and the properties of SNNs such as spike timing-dependent plasticity (STDP) and Winner-Take-All (WTA) circuits, it may be possible to perform this decoupling online, rapidly adapting to dynamic changes in the scene and efficiently segmenting the motion components. This contrasts sharply with traditional frame-based methods, which often struggle with motion blur and require significant pre-processing.

Spike-based Bayesian#

The concept of “Spike-based Bayesian” computation merges the probabilistic framework of Bayesian inference with the biological plausibility of spiking neural networks (SNNs). SNNs mimic the brain’s communication style using spikes, offering advantages in energy efficiency and biological realism. By implementing Bayesian methods within this framework, researchers aim to create models that can perform probabilistic inference in a manner similar to the brain, addressing challenges in traditional machine learning. This approach is particularly appealing for spatiotemporal data processing, where the timing of events holds critical information, like in event-based vision. The combination tackles complex tasks such as motion segmentation in event streams. Key to this approach is exploiting the inherent timing-dependent properties of SNNs, like Spike-Timing-Dependent Plasticity (STDP), to facilitate efficient learning. This results in a system capable of online learning and adaptation, a significant feature mirroring the brain’s adaptability. However, challenges remain in scaling and ensuring robustness, particularly with the complexity of real-world data and the inherent stochasticity of spike-based systems.

STDP Learning#

Spike-Timing-Dependent Plasticity (STDP) is a crucial learning mechanism in the paper, used to optimize the network’s motion parameters. STDP’s role is to maximize the contrast of warped events, effectively segmenting motion streams. The theoretical analysis demonstrates that STDP, combined with Winner-Take-All (WTA) circuits, closely approximates the M-step of the Expectation-Maximization (EM) algorithm. The learning rule adjusts synaptic weights based on precise spike timing, strengthening connections when pre- and post-synaptic spikes are closely timed. This mechanism is computationally efficient and biologically plausible. Experimental results confirm that STDP-based learning improves motion segmentation by enhancing contrast. However, STDP’s reliance on local learning rules potentially limits convergence to a globally optimal solution, underscoring the importance of effective parameter initialization strategies. Despite this limitation, the paper demonstrates the effectiveness of STDP in a neuromorphic computing context, suggesting its potential for real-world applications.

Event-based Motion#

Event-based motion analysis processes visual information differently than traditional frame-based methods. Instead of relying on sequential frames, it leverages asynchronous events generated by sensors like Dynamic Vision Sensors (DVS). These events, representing changes in pixel intensity, provide high temporal resolution and reduced data redundancy. This approach is particularly effective in dynamic scenes with high-speed motion, where frame-based methods suffer from motion blur. Event-based techniques often use Bayesian computation and Expectation-Maximization (EM) algorithms. These algorithms are powerful because they can deal with uncertainty inherent in event data and effectively segment various moving objects within a scene. The challenge lies in decoupling different movements, particularly when events from various sources overlap. Spike-based Bayesian computation offers a bio-inspired solution that mimics the brain’s information processing mechanisms using spiking neural networks. This approach combines the advantages of event-based sensing with the efficiency of neural computations, enabling real-time processing and potentially low-power implementation on neuromorphic hardware.

Neuromorphic Vision#

Neuromorphic vision systems mimic the biological visual system’s architecture and functionality using neuromorphic hardware. This approach offers significant advantages, including high energy efficiency, real-time processing capabilities, and robustness to varying conditions. By using event-driven sensors, neuromorphic vision reduces data redundancy inherent in traditional frame-based cameras, leading to decreased power consumption. Spiking neural networks (SNNs), a key component of these systems, provide a biologically plausible way to process information represented by spikes. This design has the potential to surpass the performance of traditional computer vision systems, especially in dynamic and unpredictable environments. However, challenges remain, particularly concerning efficient learning algorithms for SNNs, managing the high dimensionality of data from event cameras, and developing robust algorithms that can cope with noisy events. The field also requires further exploration of novel architectures and algorithms for more complex vision tasks.

More visual insights#

More on figures

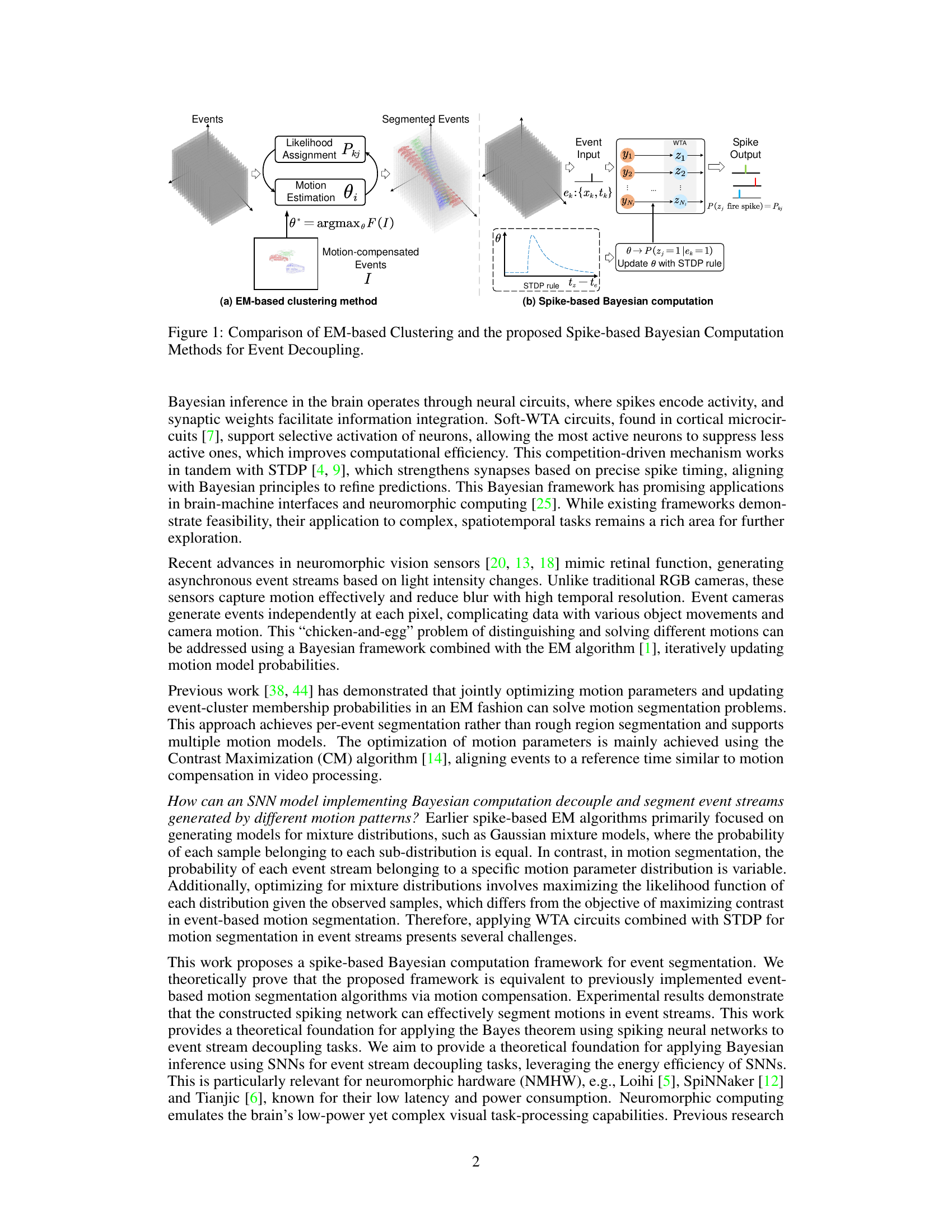

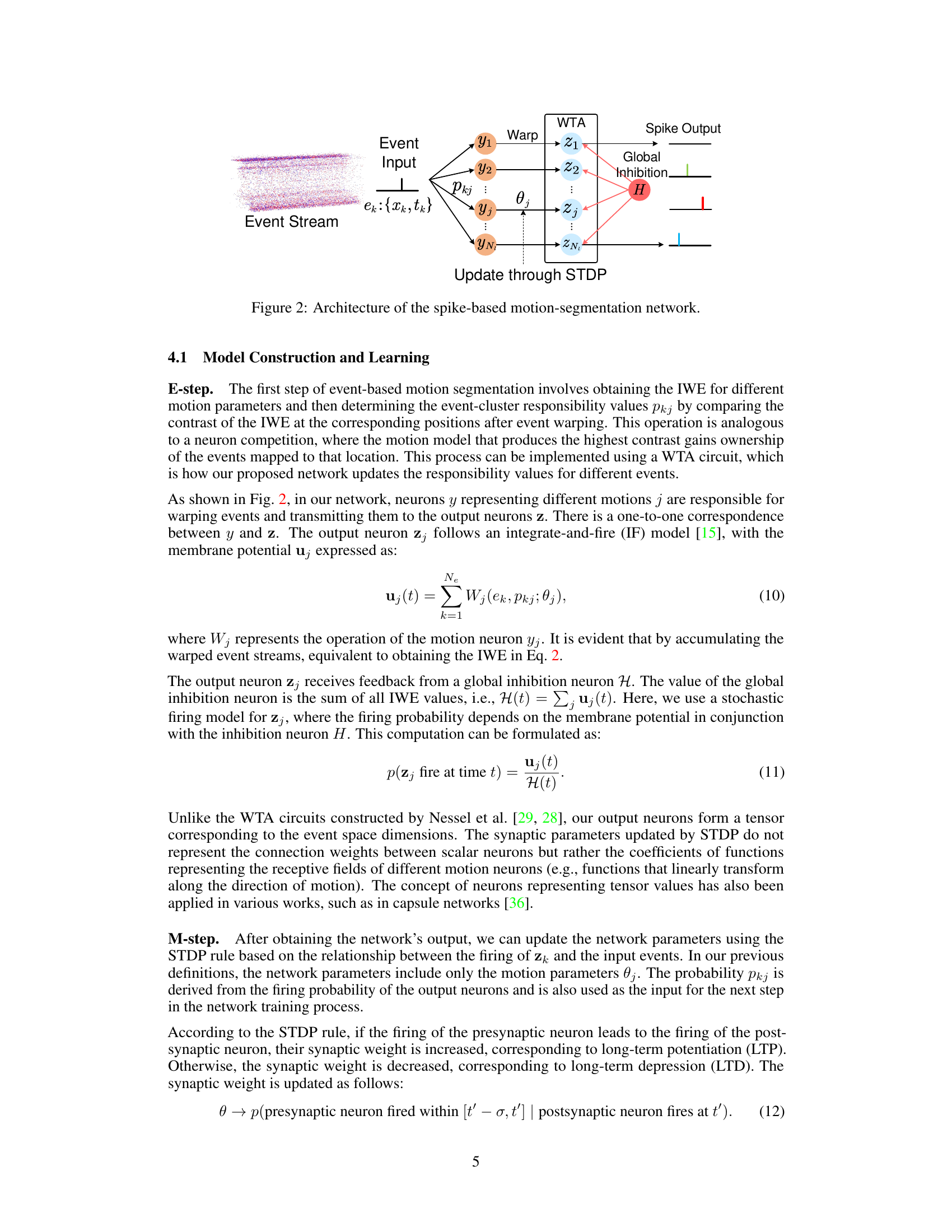

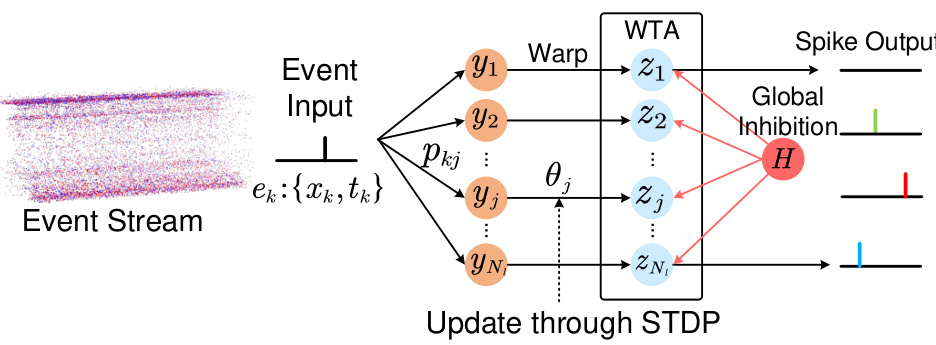

This figure shows the architecture of a spike-based motion segmentation network. Event streams are input into the network, where each event is processed by a set of motion neurons (yj) that represent different motion models. The output of each motion neuron is then passed through a winner-take-all (WTA) circuit, which selects the most active neuron representing the dominant motion. This selection is further refined by a global inhibition neuron (H) that suppresses less-active neurons. Finally, the spike output from the WTA circuit represents the segmented motions. The motion parameters (θj) for each motion model are updated through spike-timing-dependent plasticity (STDP).

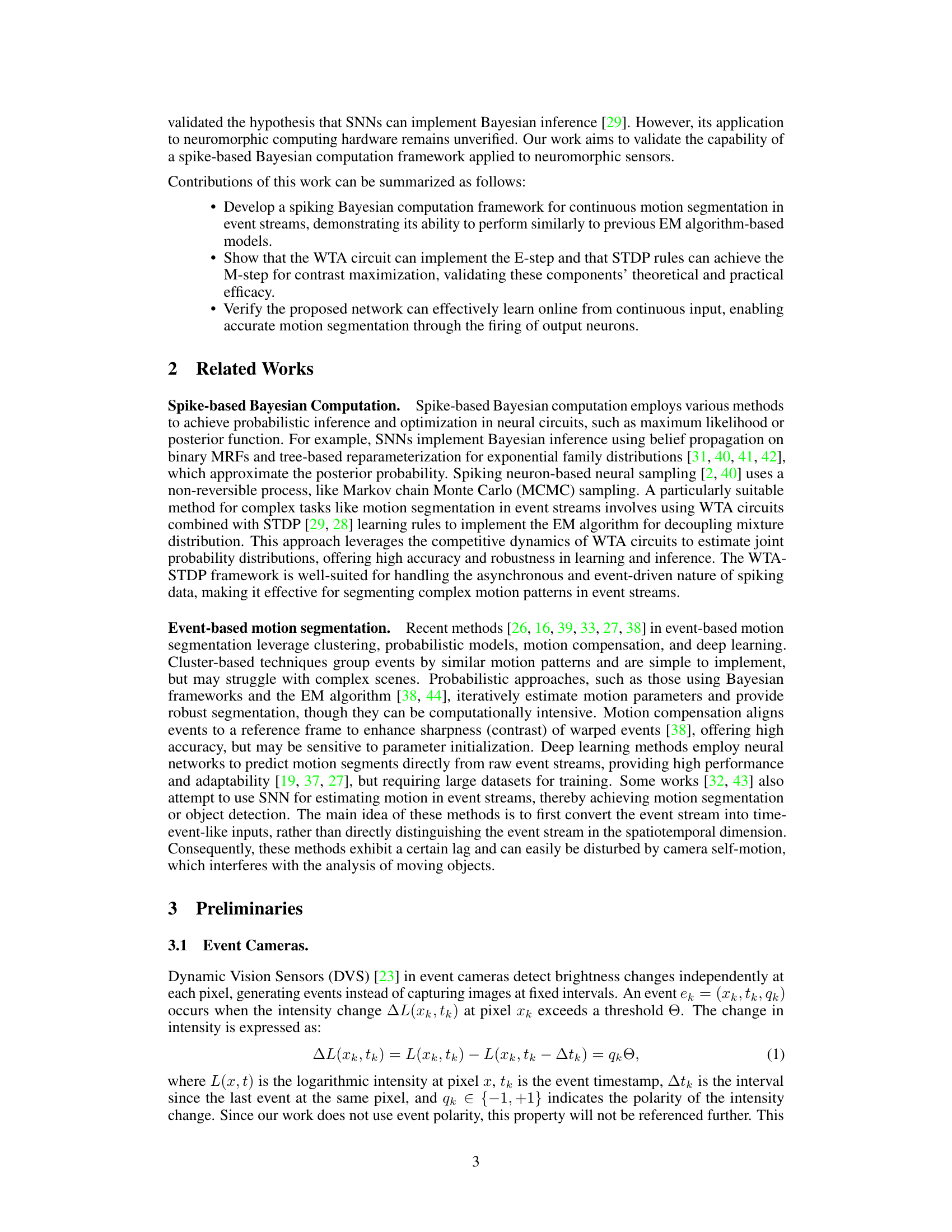

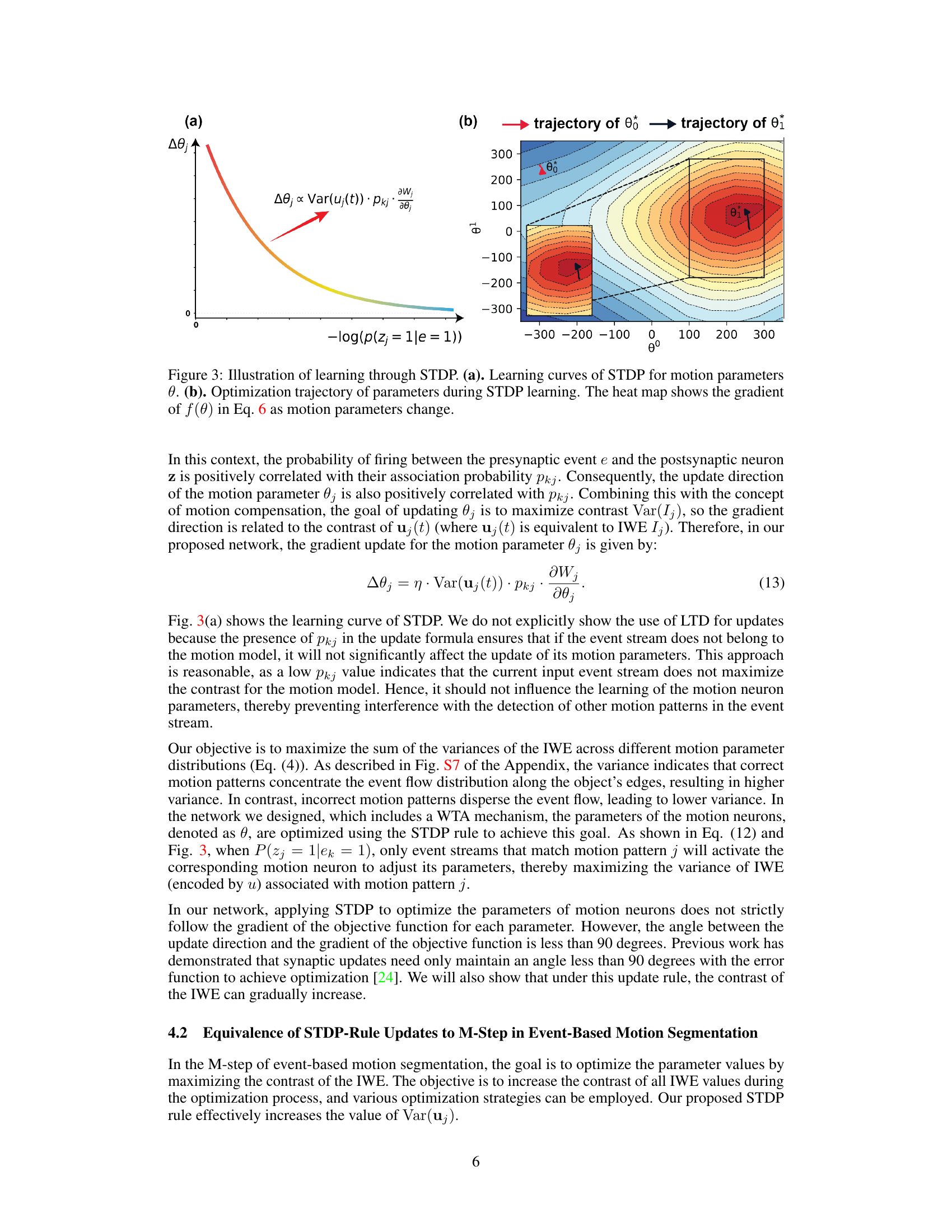

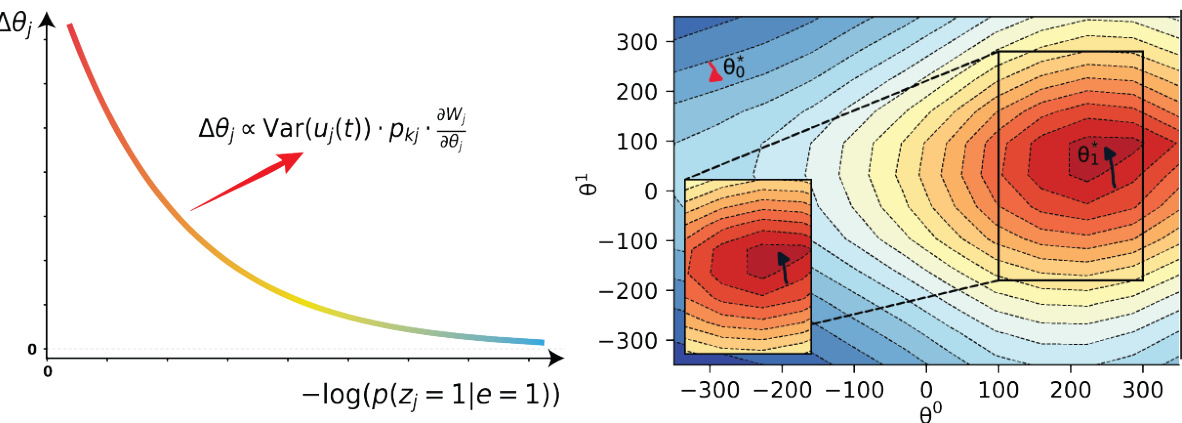

This figure illustrates the learning process of STDP in the context of motion parameter optimization. Panel (a) shows learning curves depicting how STDP adjusts motion parameters (θ) over time. Panel (b) shows an optimization trajectory on a heatmap visualizing the gradient of the objective function (f(θ)) across different parameter values, illustrating how STDP dynamically updates parameters to maximize contrast.

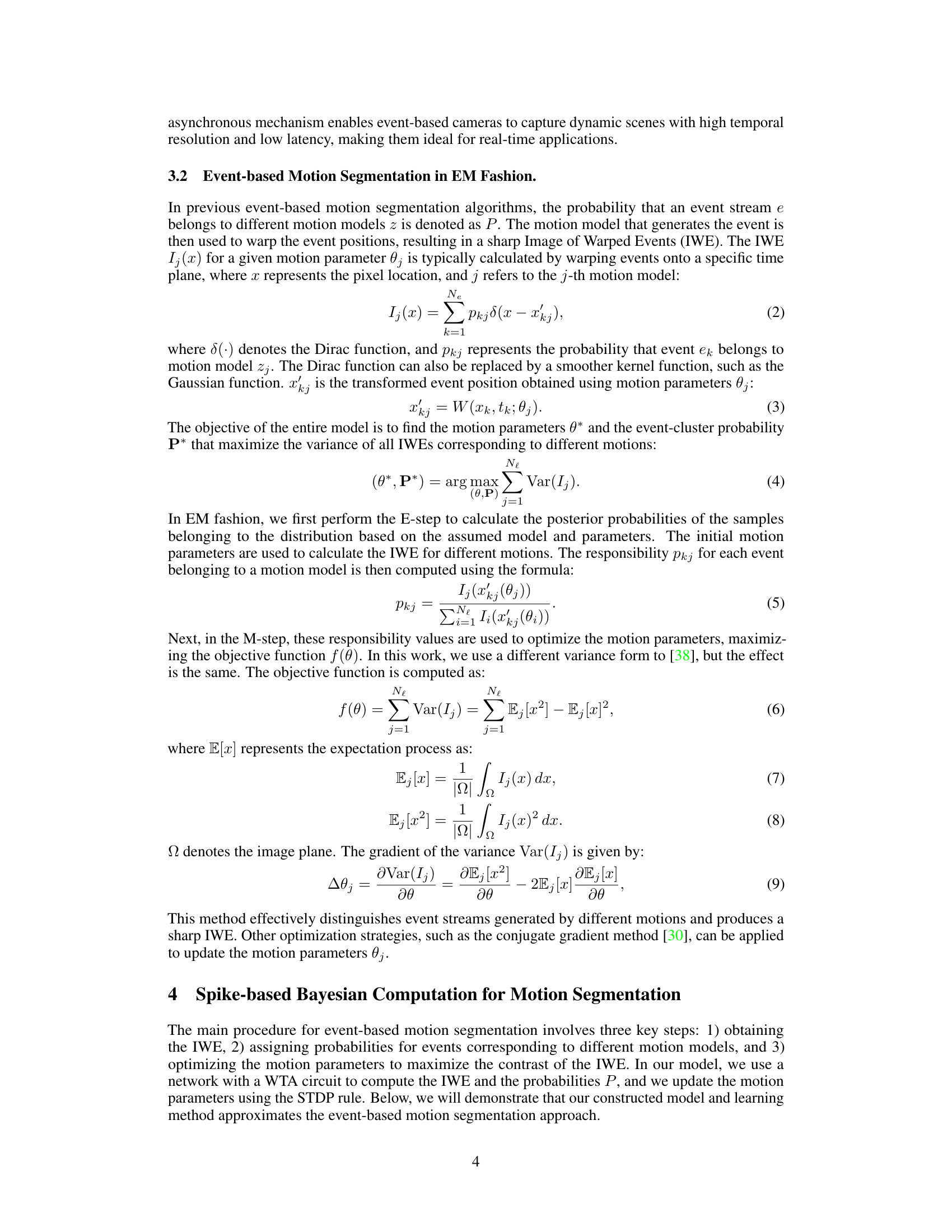

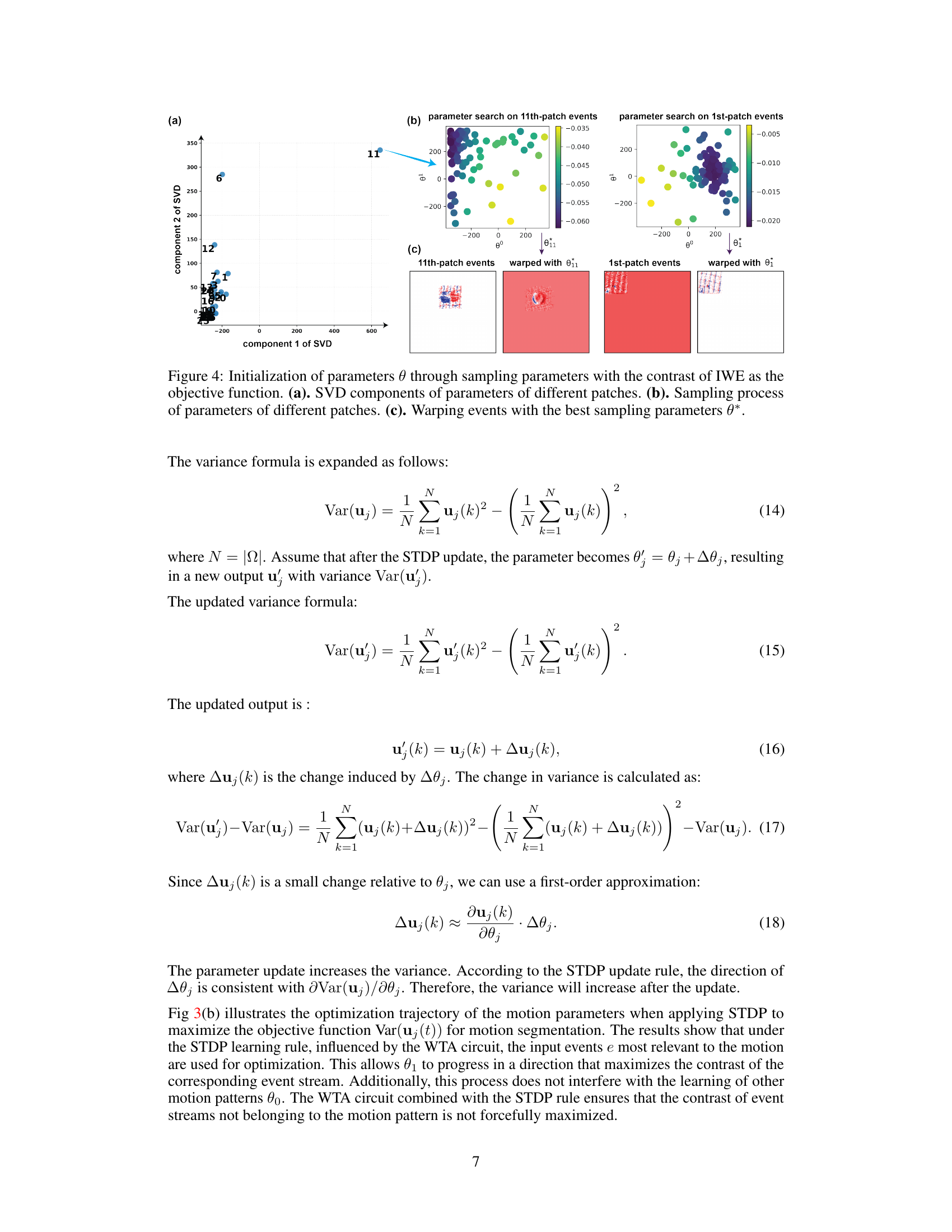

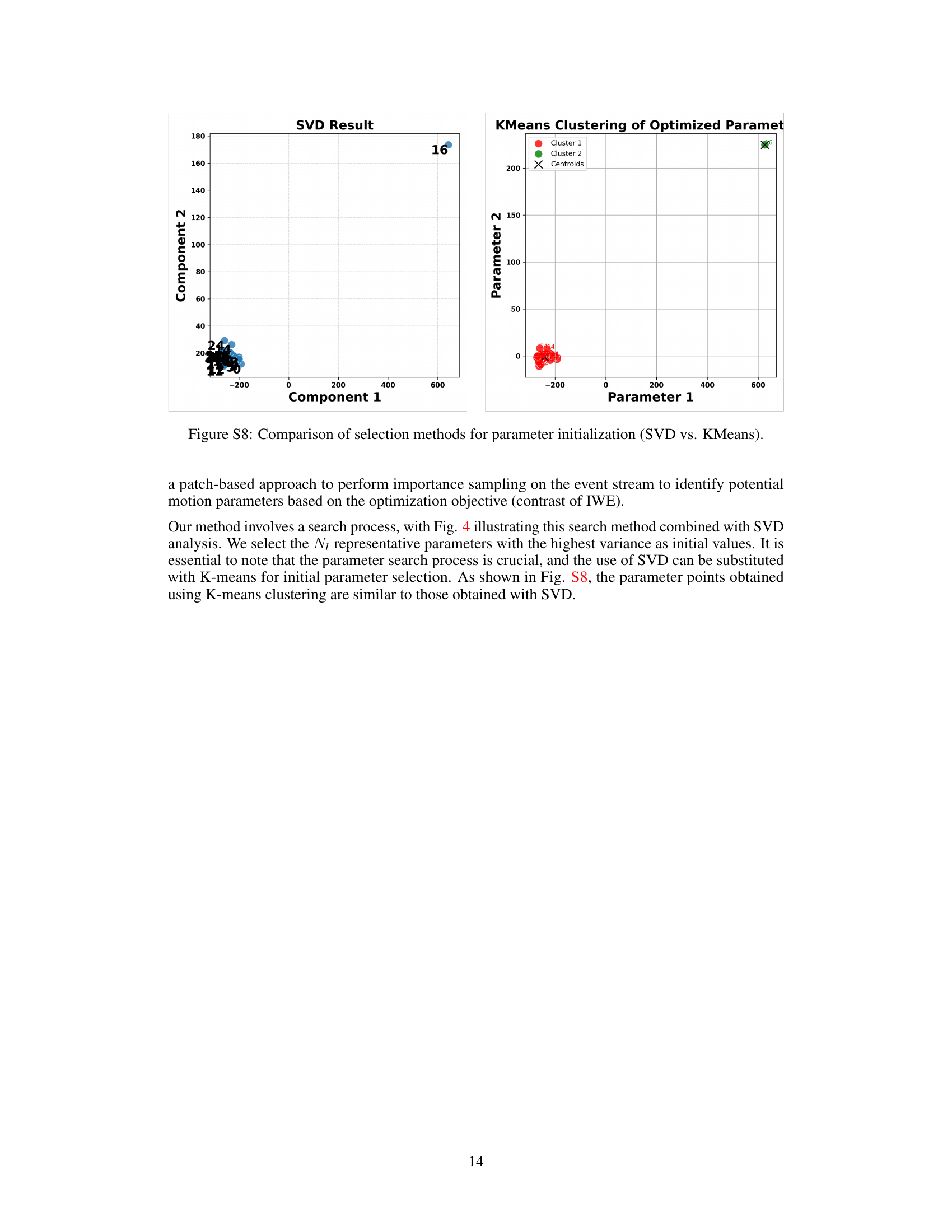

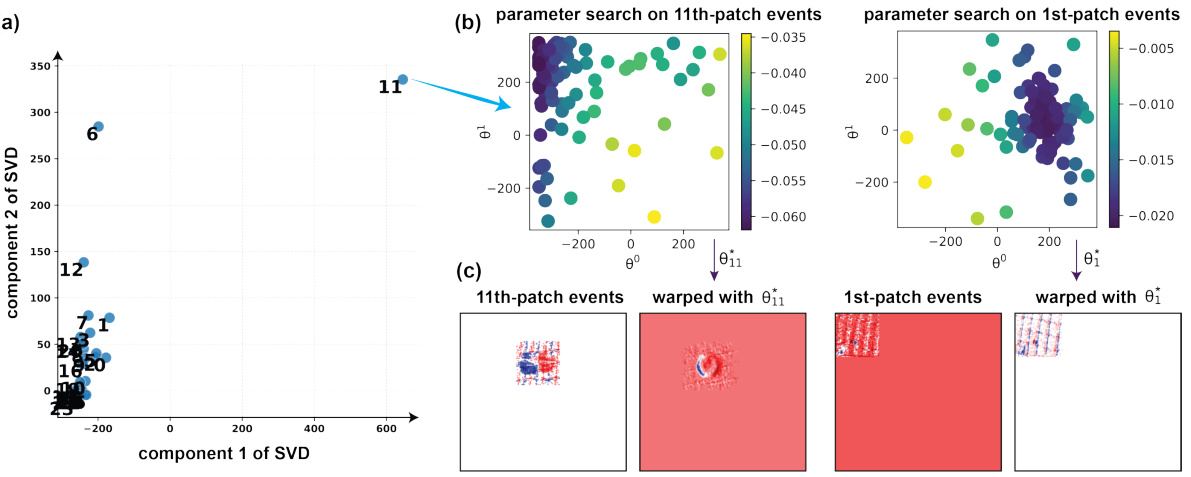

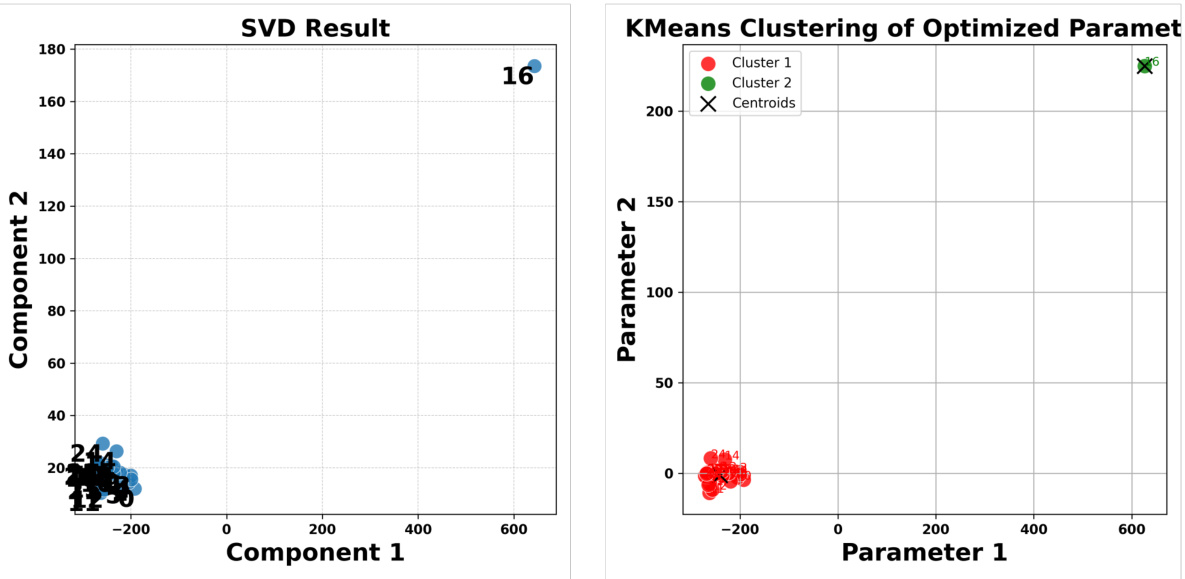

This figure shows the initialization process of motion parameters θ. The contrast of the Image of Warped Events (IWE) is used as the objective function. The process involves dividing events into patches, sampling parameters for each patch using the Tree-structured Parzen Estimator (TPE), then using Singular Value Decomposition (SVD) to select the most significant parameters. Finally, events are warped using the best parameters.

This figure shows the results of motion segmentation using the proposed spike-based Bayesian computation model. The leftmost panel shows the raw event stream data in a three-dimensional representation (time, width, height). The top-middle panels display the warped event images (IWE) before any learning has occurred (i.e., initial state), representing the contrast of warped events in different motion models. The bottom-middle panels present the IWE after the network has learned, exhibiting a marked increase in contrast. This improved contrast facilitates better separation between events corresponding to different motion patterns. The rightmost panels visually illustrate the result of event segmentation, using different colors to represent events belonging to different motion models, both before and after the learning phase. The contrast enhancement resulting from the learning process is clearly observable, making the distinct motion components much more easily identified.

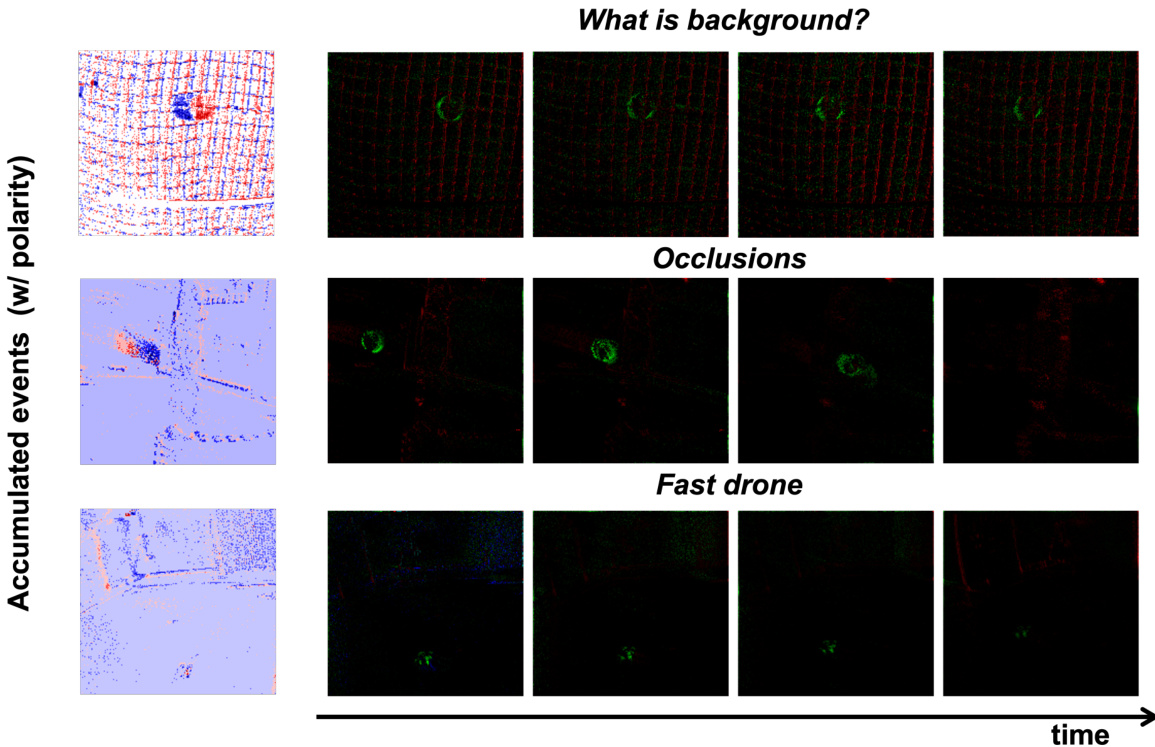

This figure shows the results of motion segmentation for three different scenarios from the Extreme Event Dataset (EED). Each row represents a different scene: ‘What is background?’, ‘Occlusions’, and ‘Fast drone’. The leftmost column shows the accumulated events with polarity, illustrating the raw sensor input. The remaining columns display the motion segmentation results obtained by the proposed spike-based Bayesian computation framework. In each scene, the events are segmented into different motion components, each represented by a different color, illustrating the effectiveness of the proposed model in separating different motion patterns, even in challenging scenarios with complex backgrounds and occlusions.

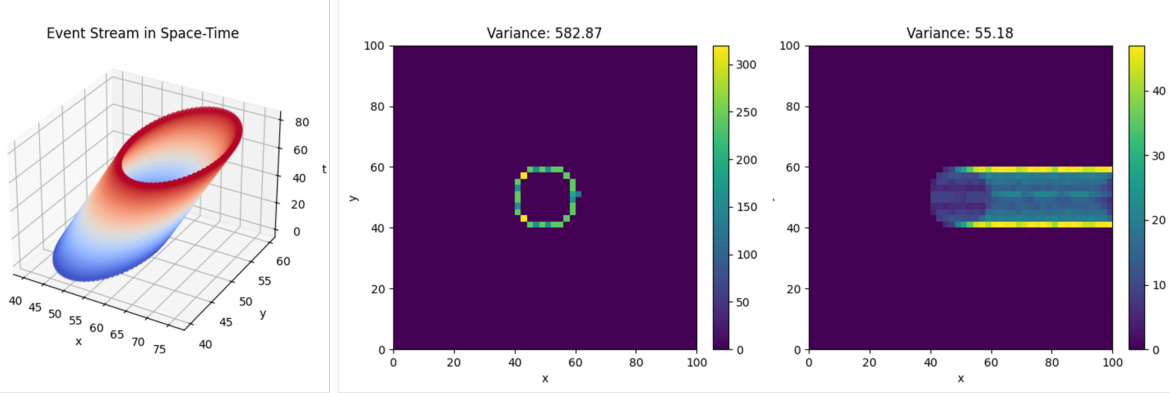

This figure shows the variance of the warped events (IWEs) for different motion patterns. The leftmost panel displays a 3D representation of an event stream in space-time, illustrating the distribution of events over time and space. The middle and right panels show heatmaps of the IWEs for two different motion models. The IWE with higher variance (582.87) corresponds to the correct motion model, which concentrates the event distribution along object edges, producing sharper object boundaries. In contrast, the IWE with lower variance (55.18) corresponds to an incorrect motion model, where the event distribution is dispersed, resulting in blurred boundaries. The variances of the IWEs serve as a measure of the model’s accuracy in separating the event streams according to the underlying motion patterns.

This figure shows the initialization process of motion parameters θ in the proposed spike-based Bayesian computation framework for event segmentation. It uses a combination of random sampling and Bayesian optimization (Tree-structured Parzen Estimator or TPE) to search for parameters that maximize the contrast of the Image of Warped Events (IWE). Subsequently, Singular Value Decomposition (SVD) is applied to the obtained parameter set to select the most significant ones for initialization, thereby ensuring efficient and effective parameter initialization for the network. The subfigures (a), (b), and (c) illustrate the SVD components, sampling process, and warping results, respectively.

Full paper#