↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Existing snapshot compressive imaging (SCI) systems suffer from performance vulnerability due to hardware variations and the impracticality of centralized training for privacy reasons. This paper addresses this by proposing a Federated Hardware-Prompt Learning (FedHP) framework. This framework tackles the problem of inconsistent data distributions caused by hardware heterogeneity by using a hardware-conditioned prompter. It does not try to correct gradients, but instead aligns the data distributions, improving data consistency.

FedHP significantly improves the performance of SCI systems across various hardware platforms. Extensive experimental results showcase FedHP’s superiority over prevalent federated learning approaches. By focusing on aligning the data before learning, FedHP achieves significant performance gains while maintaining data privacy. A new heterogeneous dataset is also released for broader research use.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in computational imaging and federated learning. It addresses the critical challenge of training robust snapshot compressive imaging (SCI) systems across diverse hardware configurations while preserving data privacy. The proposed FedHP framework offers a novel solution, opening new avenues for collaborative hardware development and optimization in SCI. Its impact extends to other domains using federated learning where heterogeneous data distributions are a challenge.

Visual Insights#

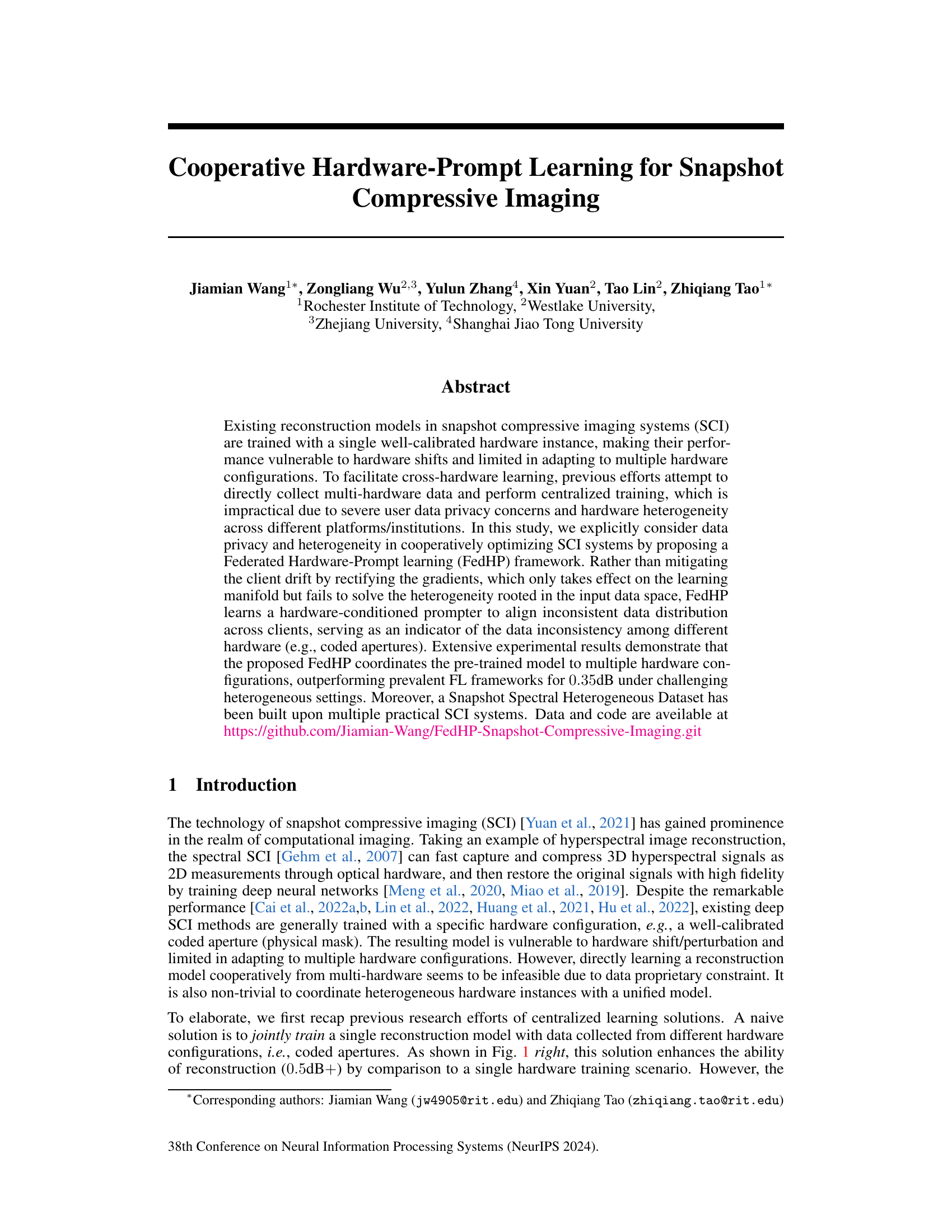

This figure compares five different hyperspectral reconstruction learning strategies. The first strategy uses a single hardware for training, resulting in poor performance on different hardware configurations. The second and third strategies (Jointly train and Self-tuning) jointly train models on data from multiple hardware, improving performance but requiring centralized training and potentially violating privacy. The fourth and fifth strategies (FedAvg and FedHP) use federated learning to avoid centralized training. FedHP enhances FedAvg by incorporating a hardware-conditioned prompt network to align the data distributions from different hardware, achieving the best performance.

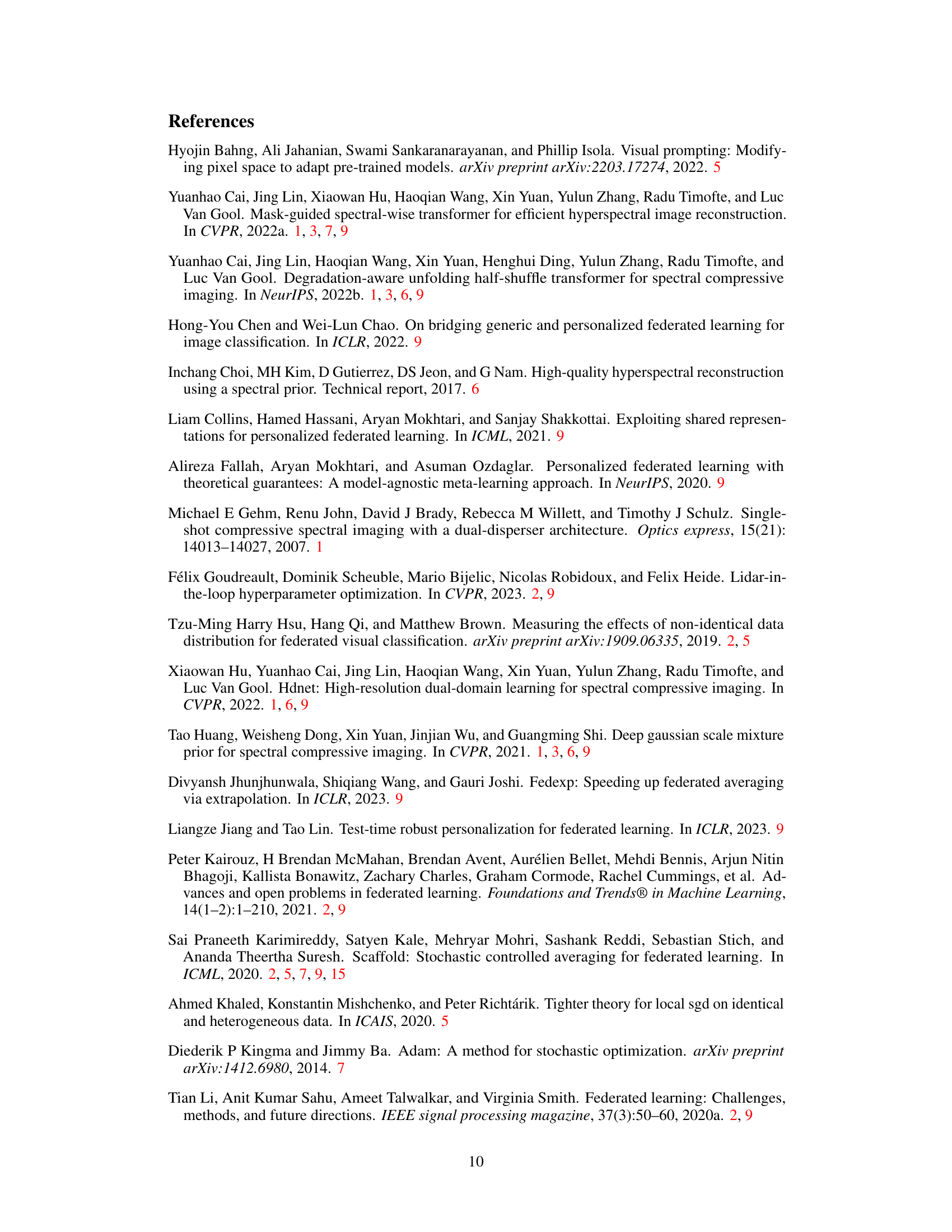

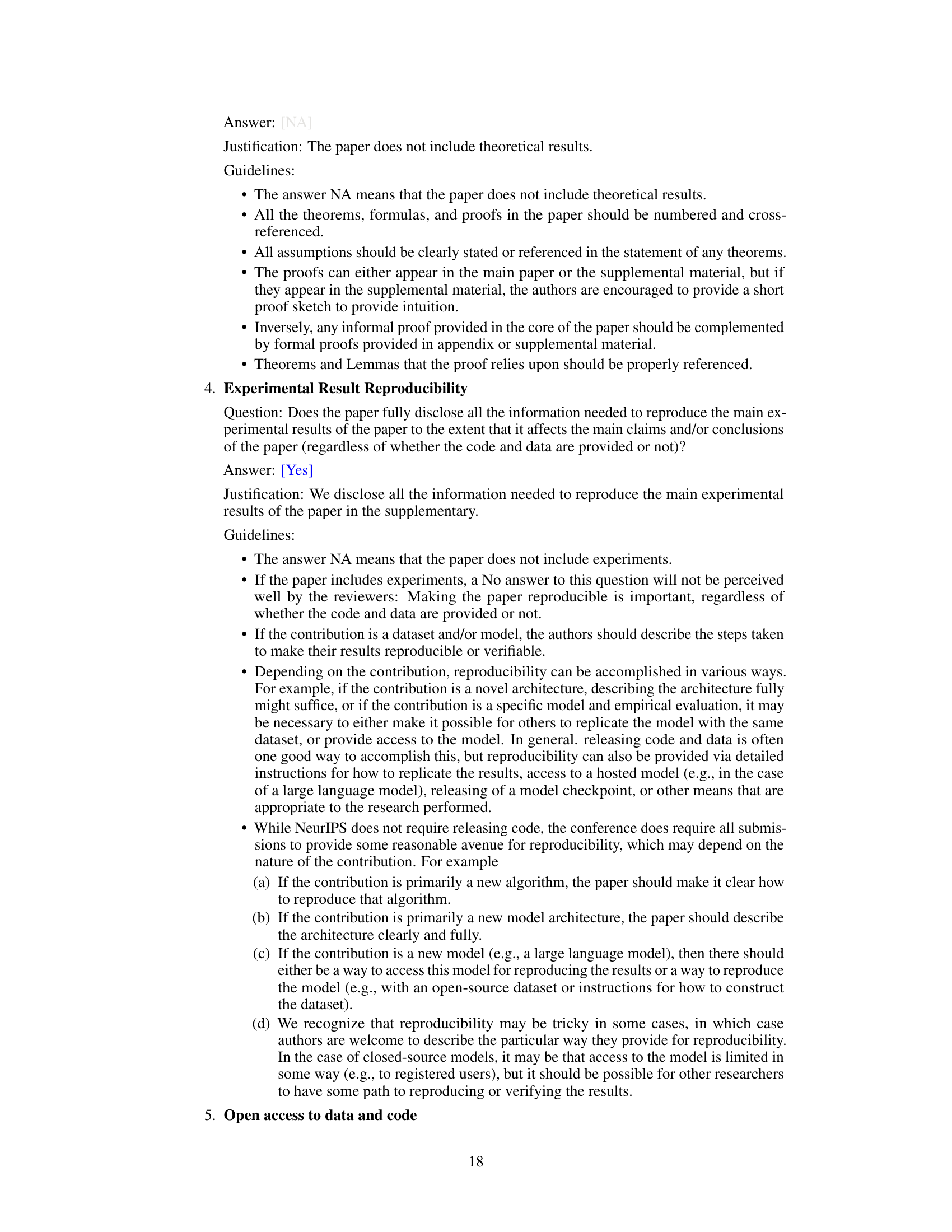

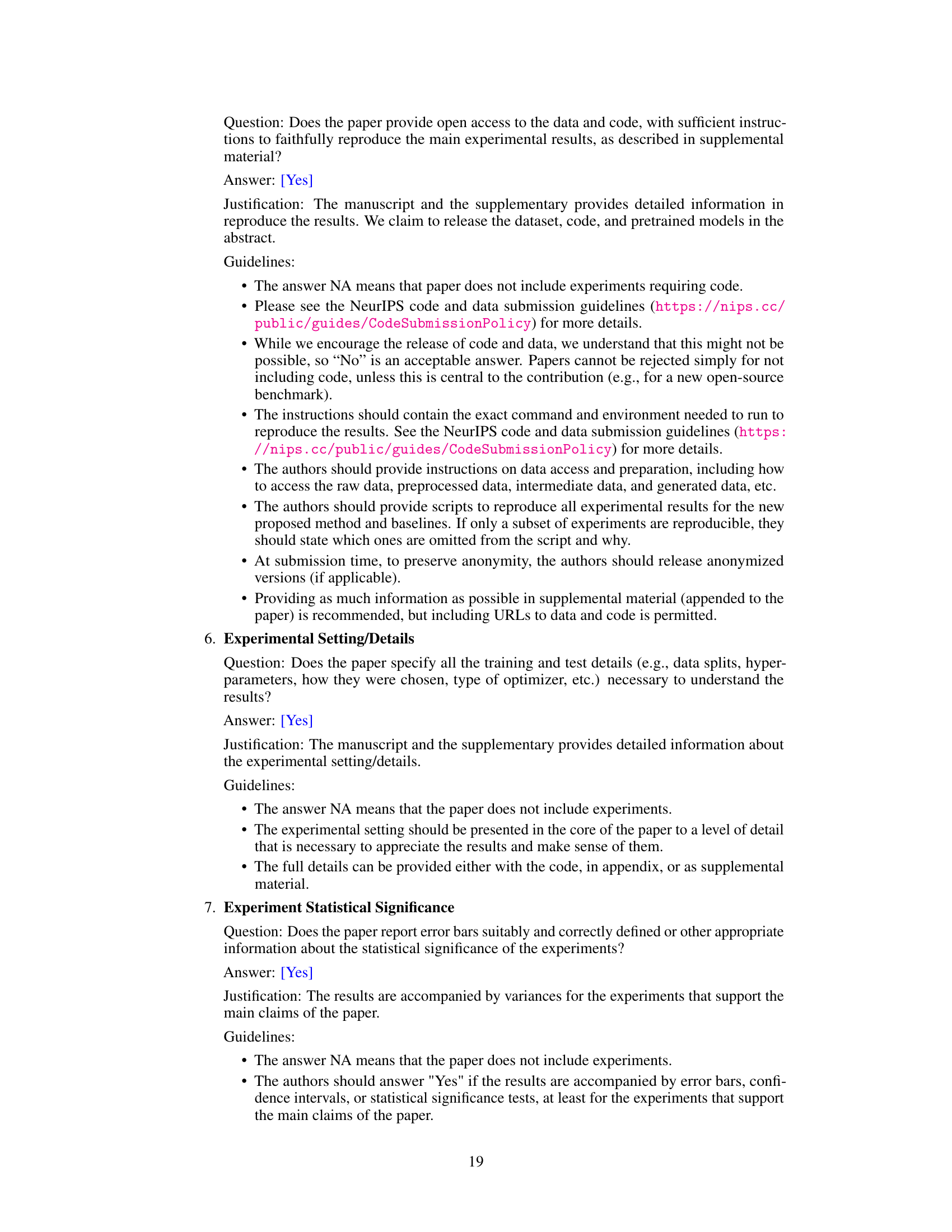

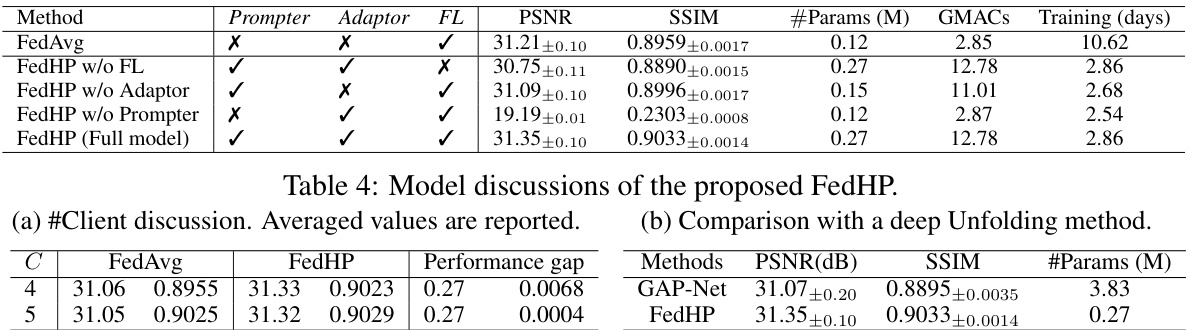

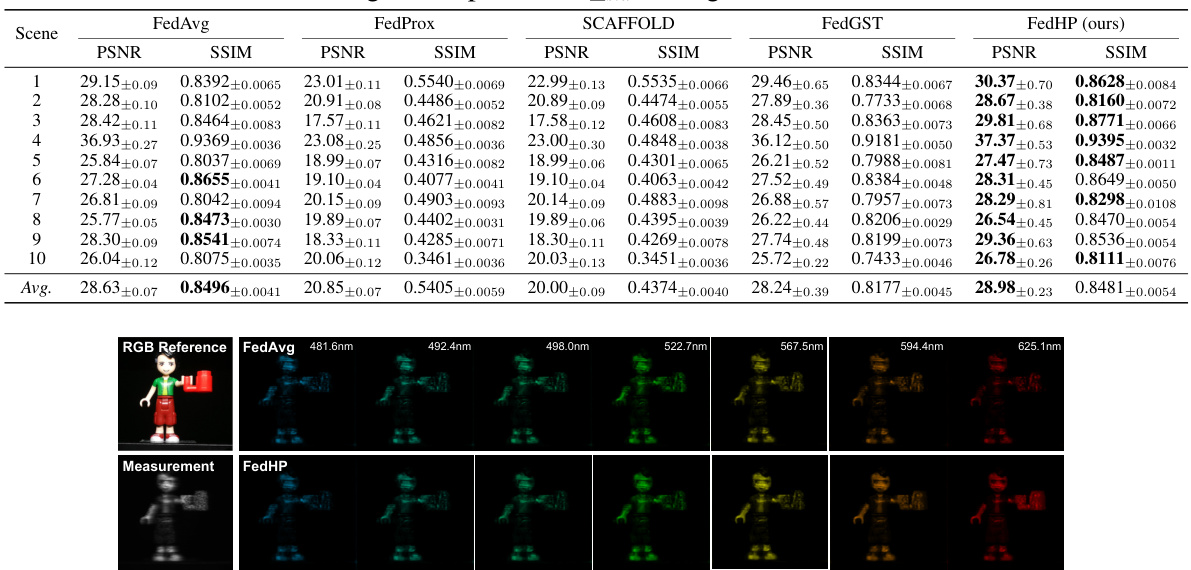

This table compares the performance (PSNR and SSIM) of different federated learning methods (FedAvg, FedProx, SCAFFOLD, FedGST, and FedHP) for snapshot compressive imaging (SCI) reconstruction. The experiments use different numbers of clients, all sampling non-overlapping masks from the same distribution for training, and then test on unseen masks randomly sampled from all clients. The results show the average PSNR and SSIM values across multiple trials, demonstrating the relative effectiveness of each method.

In-depth insights#

FedHP Framework#

The proposed FedHP (Federated Hardware-Prompt) framework innovatively addresses the challenges of cross-hardware training in snapshot compressive imaging (SCI) systems. It tackles the limitations of existing federated learning approaches by directly addressing data heterogeneity stemming from inconsistent hardware configurations, rather than solely focusing on gradient rectification. The core of FedHP is a hardware-conditioned prompt module that aligns inconsistent data distributions across different clients. This aligns input data before reconstruction learning, enabling effective cooperation among diverse hardware without compromising data privacy. Unlike traditional methods that only operate on the learning manifold, FedHP works directly in the input space, improving model adaptability. Furthermore, the framework leverages pre-trained reconstruction backbones, enhancing training efficiency. The introduction of the prompt network, combined with adaptors, enables the model to generalize well across varying hardware setups, demonstrating significantly improved performance compared to standard federated learning techniques. The framework’s efficacy is supported by experimental results and the development of a heterogeneous dataset, showcasing its potential for real-world applications in distributed SCI environments.

Data Heterogeneity#

The concept of ‘Data Heterogeneity’ in the context of snapshot compressive imaging (SCI) is explored in this paper. The authors highlight the significant challenge posed by variations in hardware configurations across different SCI systems. This heterogeneity manifests in the inconsistency of data distributions. The input data, measurements produced by various hardware components (e.g., coded apertures), exhibit differing characteristics. Directly applying standard federated learning (FL) methods is ineffective because these methods often struggle with heterogeneous data. The core issue lies in the fact that differences in hardware produce inconsistencies in data distributions, hindering the learning process of a unified model. This paper proposes a novel approach to address this by introducing a hardware-conditioned prompter. This component helps in aligning inconsistent data distributions across various hardware configurations, enabling cooperative learning while preserving data privacy. The paper’s exploration of data heterogeneity provides critical insights into the challenges and limitations of employing standard FL techniques in SCI, leading to the development of more robust methods. This improved methodology could further enhance the wider adoption of SCI technologies.

Cross-Hardware SCI#

Cross-hardware snapshot compressive imaging (SCI) tackles the challenge of generalizing SCI models trained on a single hardware instance to diverse hardware configurations. This is crucial because real-world SCI deployments often involve variations in hardware components, leading to performance degradation if the model is not robust. Existing approaches either collect data from multiple hardware setups for centralized training (impractical due to data privacy concerns) or utilize federated learning (FL), but struggle with the inherent heterogeneity of the input data. A key innovation is to address this heterogeneity directly through a hardware-conditioned prompter. This mechanism aligns inconsistent data distributions from different hardware, facilitating cooperative learning among various hardware instances without compromising data privacy. The effectiveness of this approach is demonstrated through extensive experiments, significantly outperforming conventional FL methods. The development of a novel Snapshot Spectral Heterogeneous Dataset further strengthens the study’s contribution and sets a benchmark for future research in robust and adaptable SCI systems.

Cooperative Learning#

The concept of cooperative learning in the context of snapshot compressive imaging (SCI) systems addresses the challenge of training robust reconstruction models that generalize well across diverse hardware configurations. Centralized training, while offering improved performance, is often impractical due to data privacy concerns and hardware heterogeneity. Federated learning presents a promising alternative. However, the inherent heterogeneity of SCI data, stemming from variations in coded apertures and other hardware parameters, significantly hinders the effectiveness of standard federated learning frameworks. This necessitates innovative approaches like Federated Hardware-Prompt Learning (FedHP), which focuses on aligning inconsistent data distributions across different hardware instances rather than merely rectifying gradients. This is achieved by introducing a hardware-conditioned prompt module that adjusts the input data to reduce inconsistencies. The successful development of the FedHP framework demonstrates that cooperative learning in SCI is indeed achievable, resulting in improved performance and addressing essential privacy and practical implementation challenges.

Future of FedHP#

The future of FedHP (Federated Hardware-Prompt learning) in snapshot compressive imaging (SCI) looks promising. Addressing data heterogeneity across diverse hardware remains crucial. Future work could explore more sophisticated prompt engineering techniques to handle even greater variations in hardware configurations. Investigating different prompt architectures beyond the current approach could improve data alignment and enhance performance. Furthermore, exploring the theoretical aspects of FedHP’s convergence properties and developing efficient optimization strategies would solidify its foundation. Scaling FedHP to larger-scale federated settings with many clients and complex hardware distributions will be critical for real-world deployment. Finally, applying FedHP to different SCI modalities beyond hyperspectral imaging, such as RGB or multispectral imaging, would broaden its impact and demonstrate its versatility.

More visual insights#

More on figures

This figure illustrates the learning process of Federated Hardware-Prompt Learning (FedHP) framework. It shows the four steps involved in one global round: initialization, local prompt update, local adaptor update, and aggregation. Each client utilizes a pre-trained reconstruction backbone which remains frozen. A prompt network adjusts the input data based on hardware configurations, and adaptors enhance learning. The process aims for cooperative learning across multiple hardware setups.

This figure compares five different hyperspectral reconstruction learning strategies. The first strategy uses a single hardware for training, showing poor performance on other hardware. The second and third strategies jointly train or self-tune a single model with data from multiple hardware configurations, but they are centralized methods which suffer from privacy concerns and may not generalize well. The fourth and fifth strategies use FedAvg and the proposed FedHP, which are federated learning methods that address privacy issues and heterogeneity across different hardware. The results show that FedHP, which learns a hardware-conditioned prompter to align inconsistent data distributions, significantly outperforms the other methods.

This figure compares five different hyperspectral reconstruction learning strategies. The first strategy uses a single hardware for training, demonstrating poor performance on other hardware configurations. The next two strategies are centralized learning approaches (Jointly train and Self-tuning) attempting to improve performance by training on data from multiple hardware instances. The remaining two strategies (FedAvg and FedHP) use federated learning to address the data privacy and hardware heterogeneity issues. The figure highlights the performance improvement achieved by FedHP over other methods, particularly when dealing with unseen hardware configurations. The performance gain is visualized via average peak signal-to-noise ratio (PSNR) values.

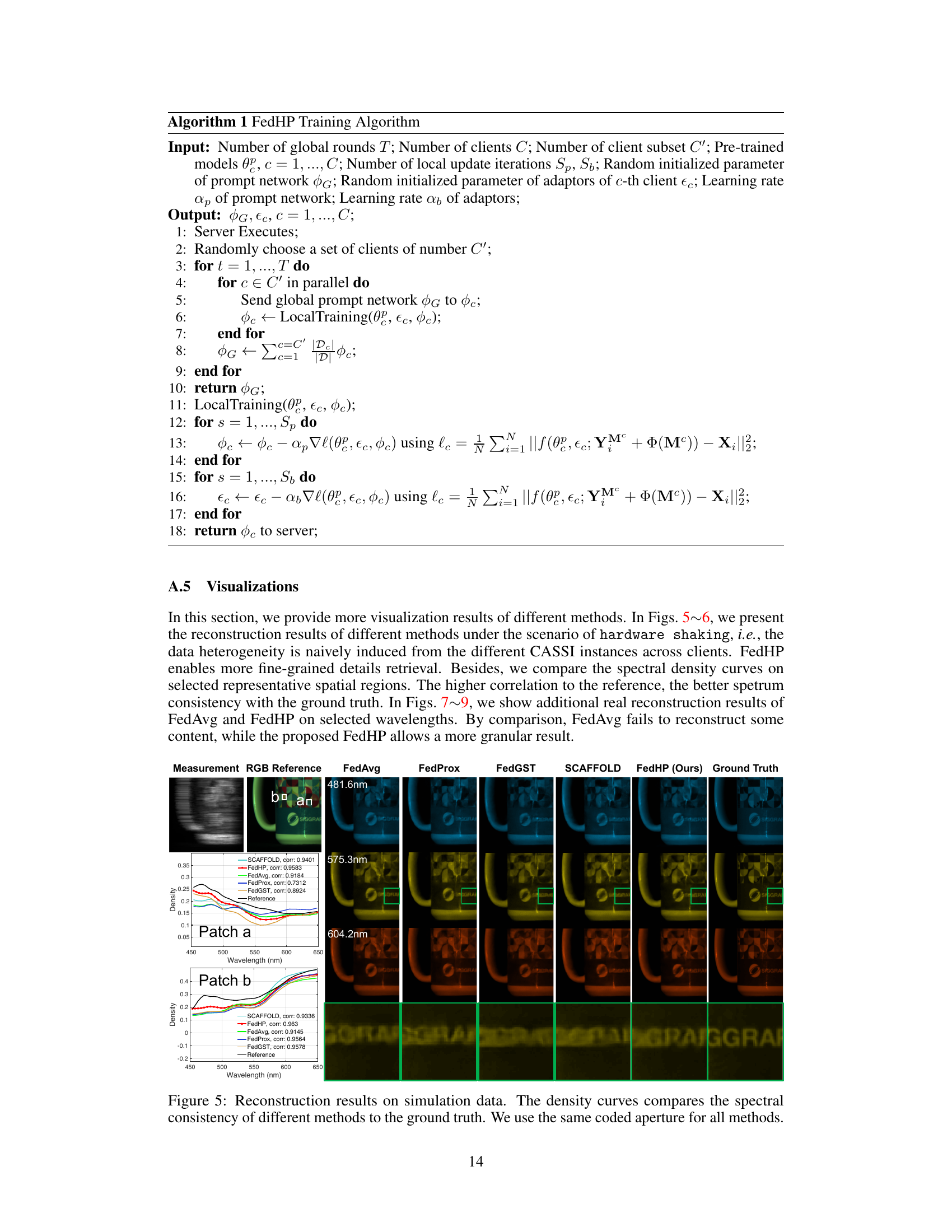

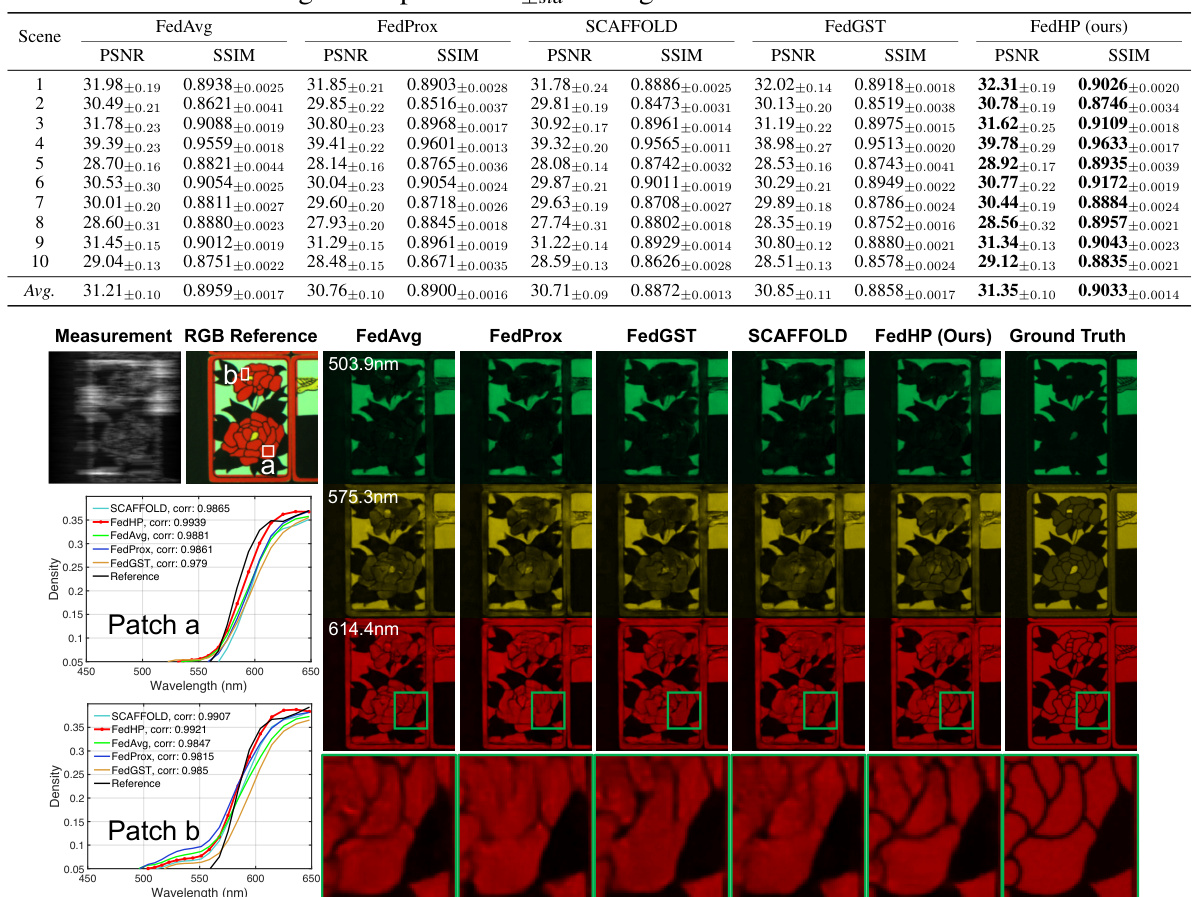

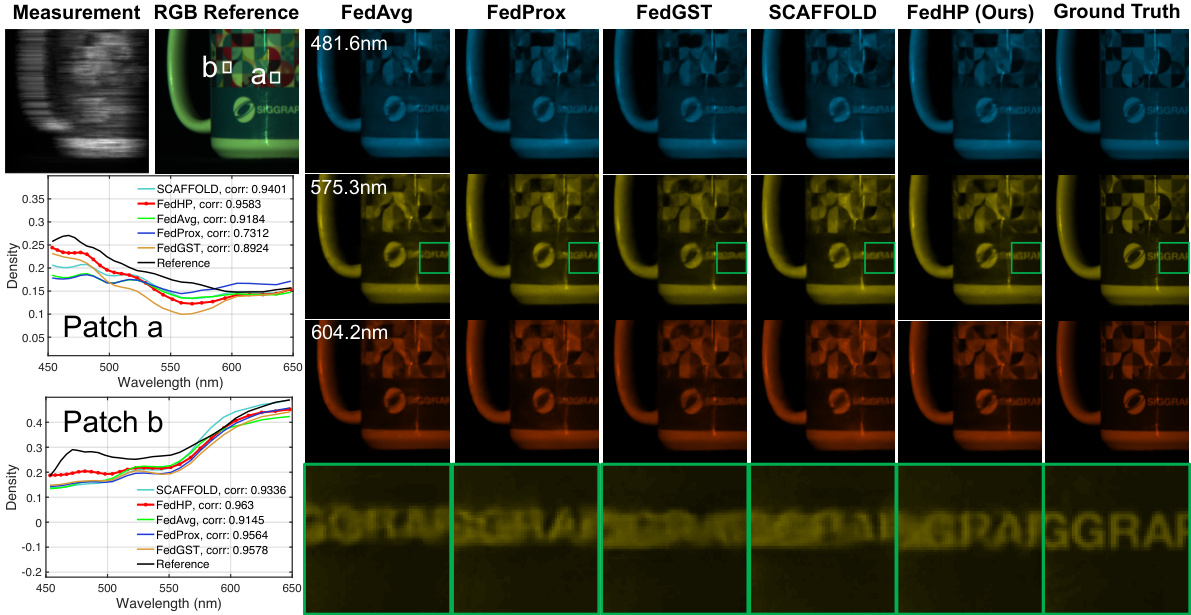

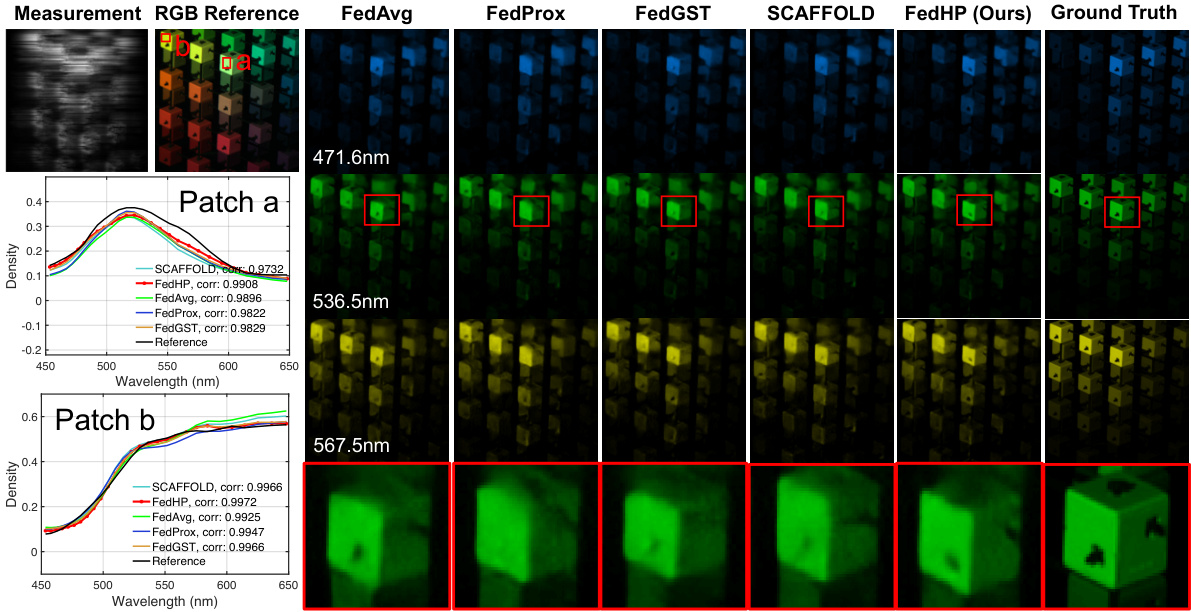

This figure compares the performance of different hyperspectral image reconstruction methods using simulation data. The spectral consistency (how well the reconstructed spectra match the ground truth) is evaluated using density curves for different wavelengths. The key takeaway is that the proposed method (FedHP) exhibits the best spectral consistency compared to FedAvg, FedProx, FedGST, and SCAFFOLD, indicating its superior performance in accurately reconstructing the hyperspectral data.

This figure compares the spectral consistency of different hyperspectral image reconstruction methods with the ground truth. It shows the density curves for the spectral distribution of reconstructed images for two example patches (a and b) using several methods (FedAvg, FedProx, FedGST, SCAFFOLD, and FedHP) and the ground truth. The methods are evaluated using the same coded aperture, allowing for a direct comparison of their ability to accurately reconstruct the spectral information.

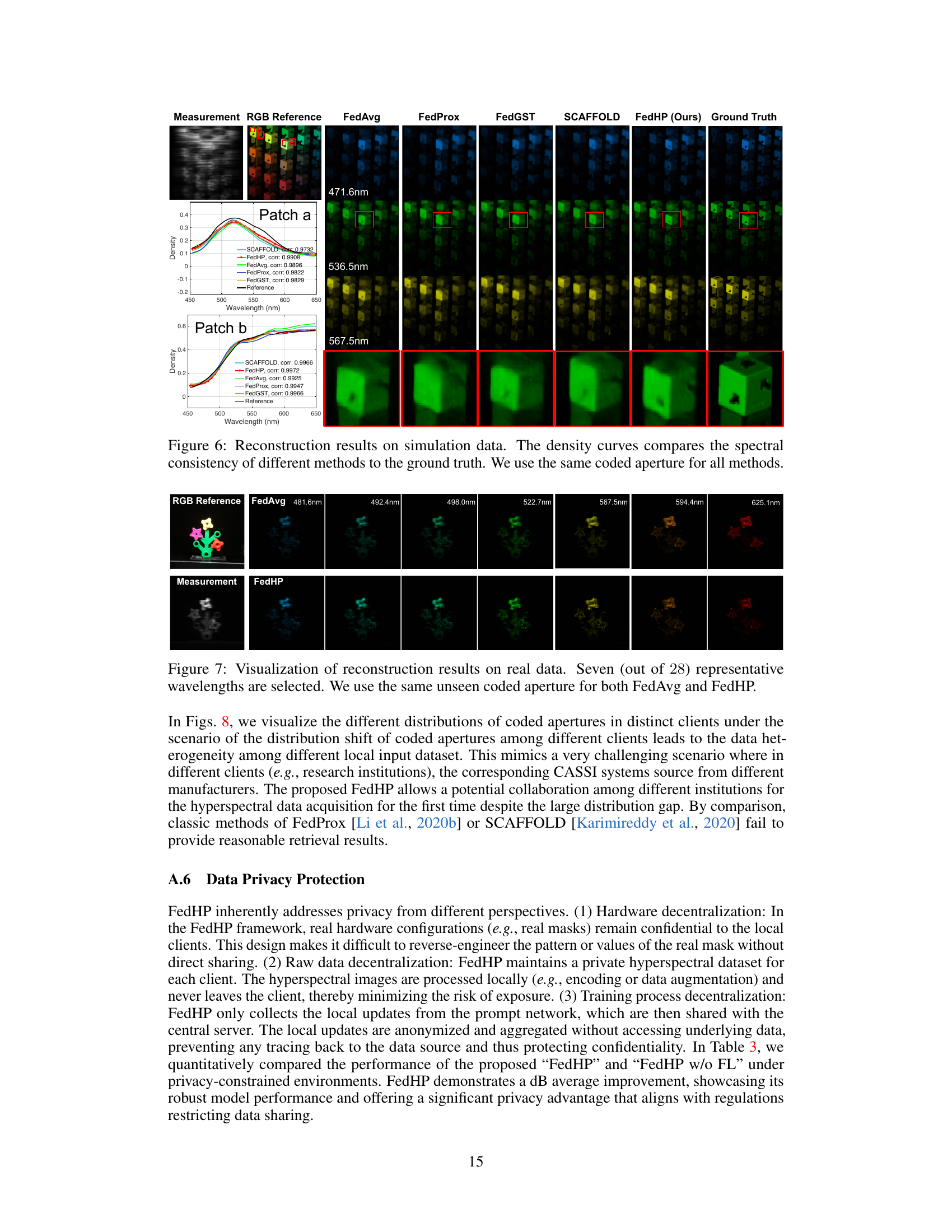

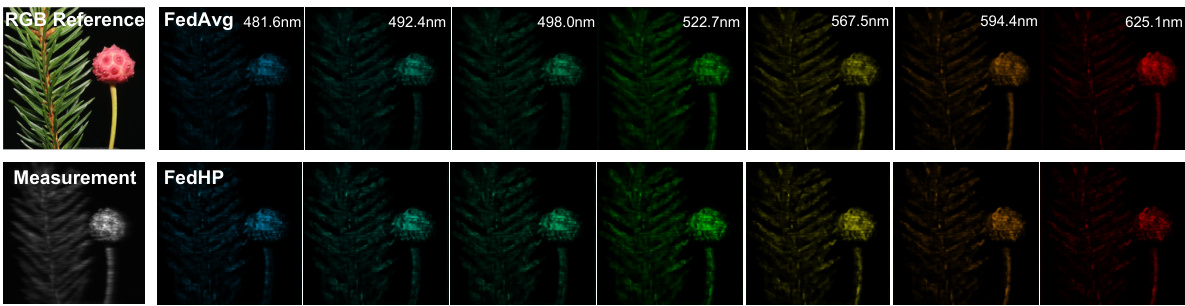

This figure visualizes the reconstruction results of FedAvg and FedHP on real data. It uses six representative wavelengths (out of the 28 available) to compare the performance of the two methods. Importantly, both methods used the same unseen coded aperture for a fair and consistent comparison. The image shows that FedHP yields superior reconstruction quality in terms of spectral consistency compared to FedAvg.

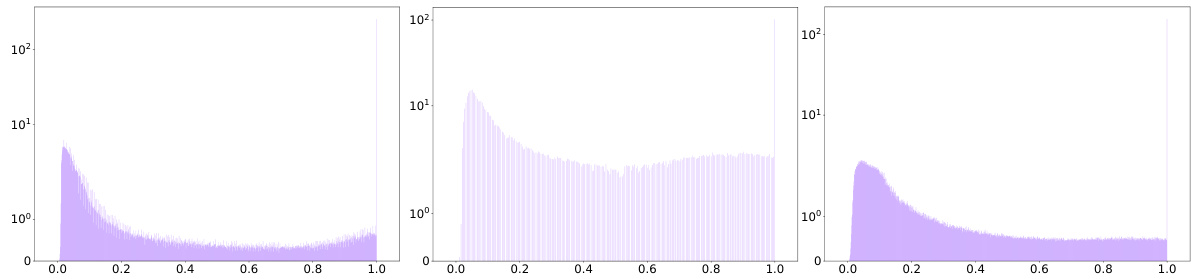

This figure visualizes the distributions of coded apertures across three different clients in a scenario where the hardware originates from distinct manufacturers. The x-axis represents the values of the coded aperture, and the y-axis represents the frequency of those values. The three subplots show the distributions for each client. The purpose is to illustrate the data heterogeneity resulting from different hardware configurations, which is one of the key challenges addressed by the proposed Federated Hardware-Prompt (FedHP) method.

This figure visualizes the reconstruction results of FedAvg and FedHP on real data. It showcases six representative wavelengths (out of the total 28) from the reconstructed hyperspectral images. Notably, the same unseen coded aperture (not used in training) was employed for both methods to ensure a fair comparison of their performance on unseen data.

Full paper#