↗ OpenReview ↗ NeurIPS Proc. ↗ Chat

TL;DR#

Multiclass transductive online learning faces challenges when the number of labels is large or unbounded. Previous research primarily focused on binary or finite label spaces, leaving the unbounded case as an open question. This limited understanding hinders the development of effective learning algorithms for real-world scenarios involving vast data and complex categories. This paper tackles this challenge directly.

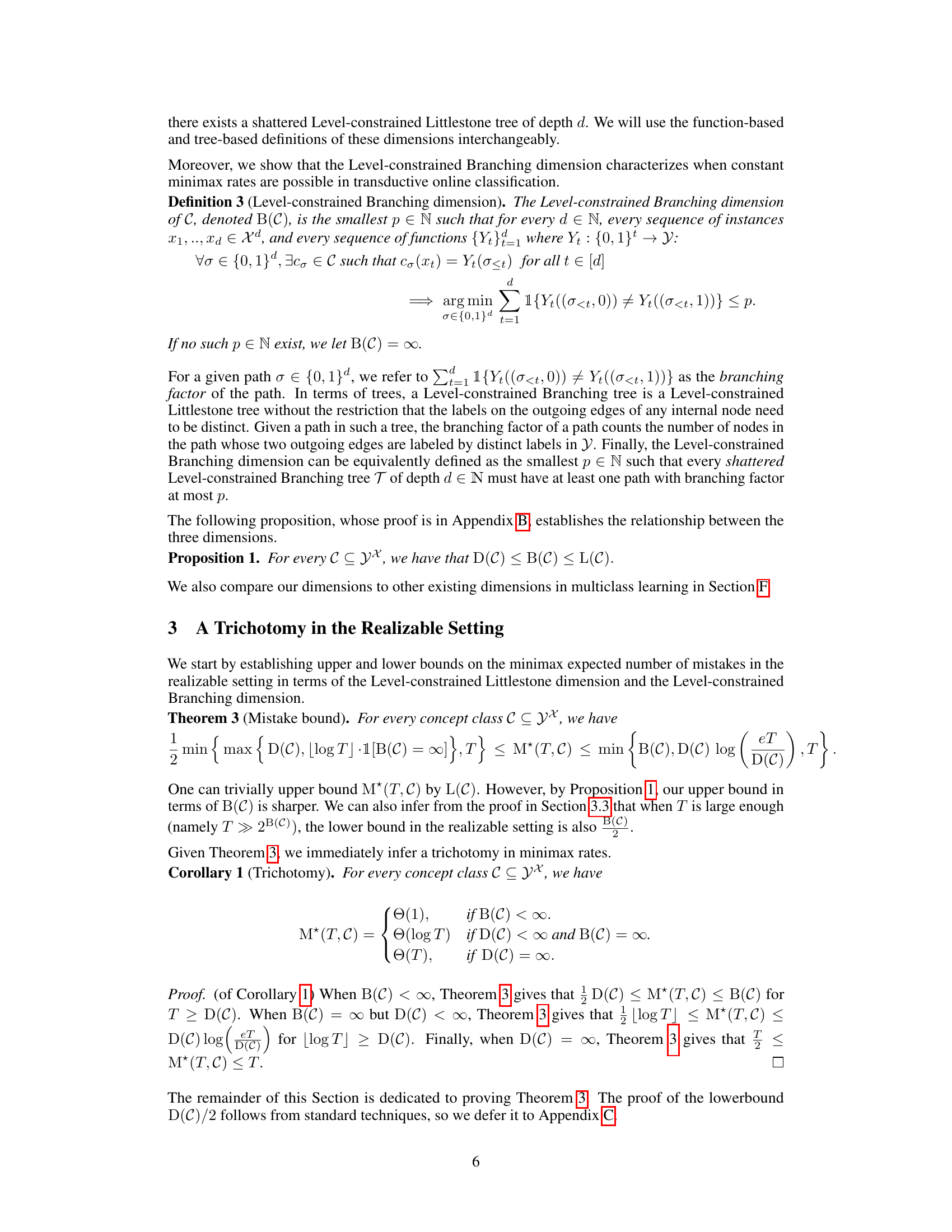

This research introduces new combinatorial parameters (Level-constrained Littlestone and Branching dimensions) to characterize online learnability. Using these parameters, the study establishes a trichotomy of minimax rates for expected mistakes, extending previous results to unbounded label spaces. Furthermore, the paper presents novel algorithms surpassing previous multiclass upper bounds by eliminating label set size dependence. These contributions provide a refined theoretical understanding and improved algorithmic tools for multiclass transductive online learning in scenarios with a potentially unbounded number of labels.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in online learning and machine learning. It solves a longstanding open problem concerning multiclass transductive online learning with unbounded label spaces, offering improved upper bounds and a novel theoretical framework. This work opens new avenues for research in handling large or unbounded label spaces, particularly relevant to modern applications with vast data and complex categorizations, like image recognition and language modeling. The refined understanding of learnability provided here impacts algorithm design and theoretical understanding of learning complexity.

Visual Insights#

Full paper#