↗ OpenReview ↗ NeurIPS Proc.

TL;DR

Multi-label learning, in which each instance is associated with several labels at once, is challenging because of the complex relationships between labels. Label-Specific Representation Learning (LSRL) tackles this by constructing a separate representation for each label, which improves performance in practice. How well LSRL generalizes to unseen data, however, has remained poorly understood: existing theoretical bounds do not adequately explain its empirical success.
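To make the idea of one representation per label concrete, here is a minimal sketch in Python. It is an illustration only and not the construction analyzed in the paper: the function `label_specific_scores`, the per-label linear maps in `projections`, the per-label scorers in `weights`, and the `tanh` nonlinearity are all assumptions chosen for brevity.

```python
import numpy as np

def label_specific_scores(X, projections, weights):
    """Score each label from its own representation of the input.

    X:           (n, d) feature matrix
    projections: list of c arrays of shape (d, k); hypothetical per-label maps
    weights:     list of c arrays of shape (k,); hypothetical per-label scorers
    """
    scores = []
    for P_j, w_j in zip(projections, weights):
        Z_j = np.tanh(X @ P_j)       # representation used only for label j
        scores.append(Z_j @ w_j)     # real-valued score for label j
    return np.stack(scores, axis=1)  # shape (n, c)

# Toy data: 5 instances, 8 features, 3-dimensional representations, 4 labels.
rng = np.random.default_rng(0)
n, d, k, c = 5, 8, 3, 4
X = rng.normal(size=(n, d))
projections = [rng.normal(size=(d, k)) for _ in range(c)]
weights = [rng.normal(size=k) for _ in range(c)]

scores = label_specific_scores(X, projections, weights)
predicted = scores > 0  # boolean (n, c) matrix: one decision per label
```

In this sketch each label's score depends only on that label's own projection, so changing the representation for one label leaves the scores of all other labels untouched. A shared-representation model would instead use a single projection for every label.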

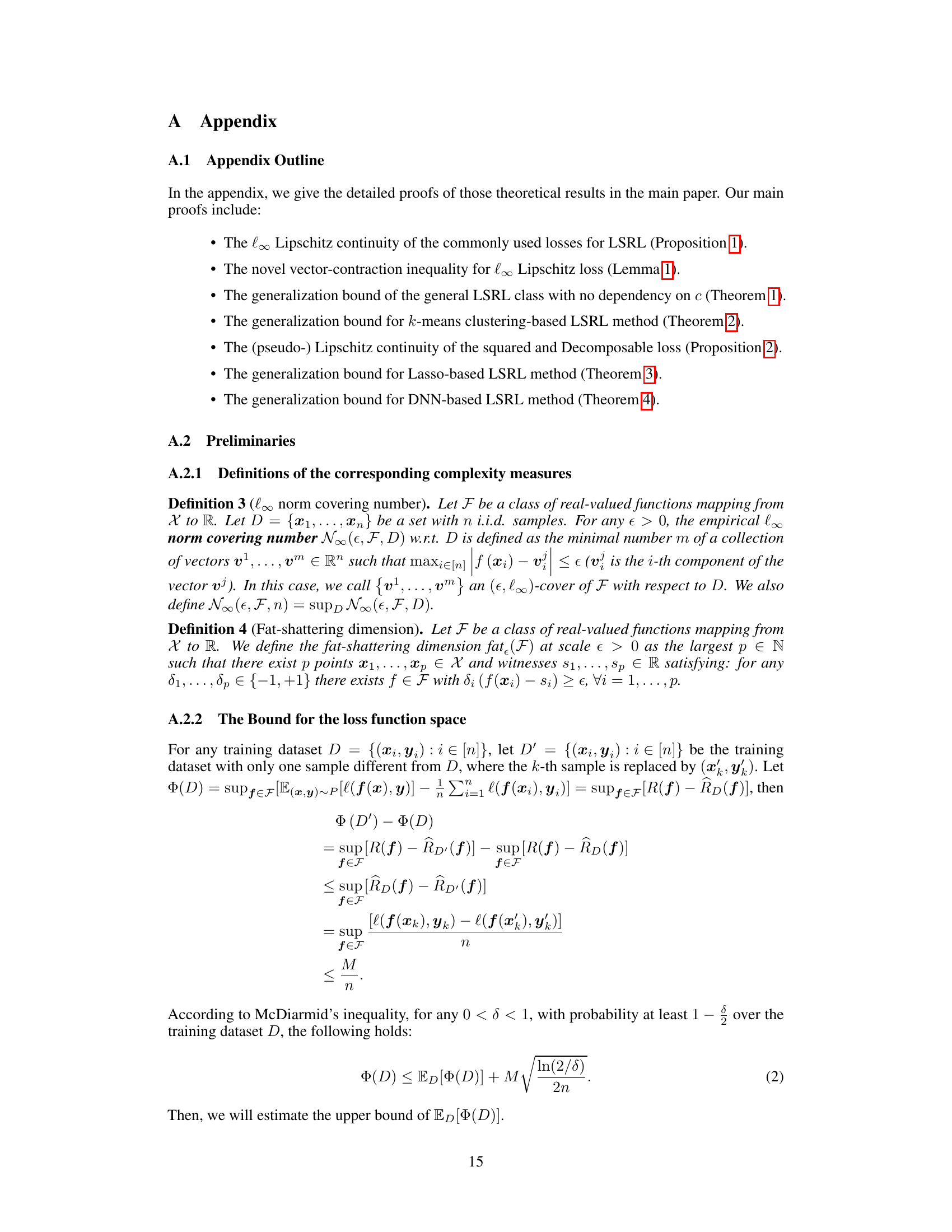

This paper addresses that gap by developing a new theoretical framework and proving generalization bounds for LSRL. The bounds show a substantially weaker dependency on the number of labels. The paper also analyzes typical LSRL methods to show how different label-specific representation strategies affect generalization, which can inform the design of more effective and efficient LSRL algorithms. The findings are relevant beyond LSRL: they also contribute to the theory of multi-label learning and of vector-valued functions.

Key Takeaways

Why does it matter?

This paper matters because it advances the understanding of generalization in label-specific representation learning (LSRL), an important area of multi-label learning. Its generalization bounds offer guidance for designing and improving LSRL methods and help explain their empirical success. The work also introduces theoretical tools and inequalities that apply beyond LSRL. Finally, its analysis of typical LSRL methods shows how different representation techniques affect generalization, which opens avenues for developing better algorithms.

Visual Insights

Full paper