↗ OpenReview ↗ NeurIPS Proc. ↗ Chat

TL;DR#

The research addresses three key problems: fast attention in large language models (LLMs), positive definite kernels, and metric transforms. Current fast attention methods rely on approximating the softmax function using low-degree polynomials. Positive definite kernels and metric transforms are well-understood for Euclidean distances but are largely unexplored for other distance metrics, such as the Manhattan distance. The lack of understanding in these areas creates limitations in the application of kernel methods and metric transforms.

The researchers developed a new linear-algebraic tool based on group representation theory to solve these issues. They prove that low-degree polynomials are the only piecewise continuous functions that preserve the low-rank property of matrices essential to fast attention. They also provide a complete classification of positive definite kernels that are functions of the Manhattan distance and fully classify functions that transform Manhattan distances to Manhattan distances. This work is significant as it provides a deeper theoretical understanding of these fundamental concepts, and may lead to the development of more efficient machine learning algorithms.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in machine learning and algorithm design. It significantly advances our understanding of fast attention mechanisms, kernel methods, and metric transforms by providing novel theoretical results and powerful new techniques. The findings open up new avenues for developing more efficient and effective algorithms and could lead to breakthroughs in various applications.

Visual Insights#

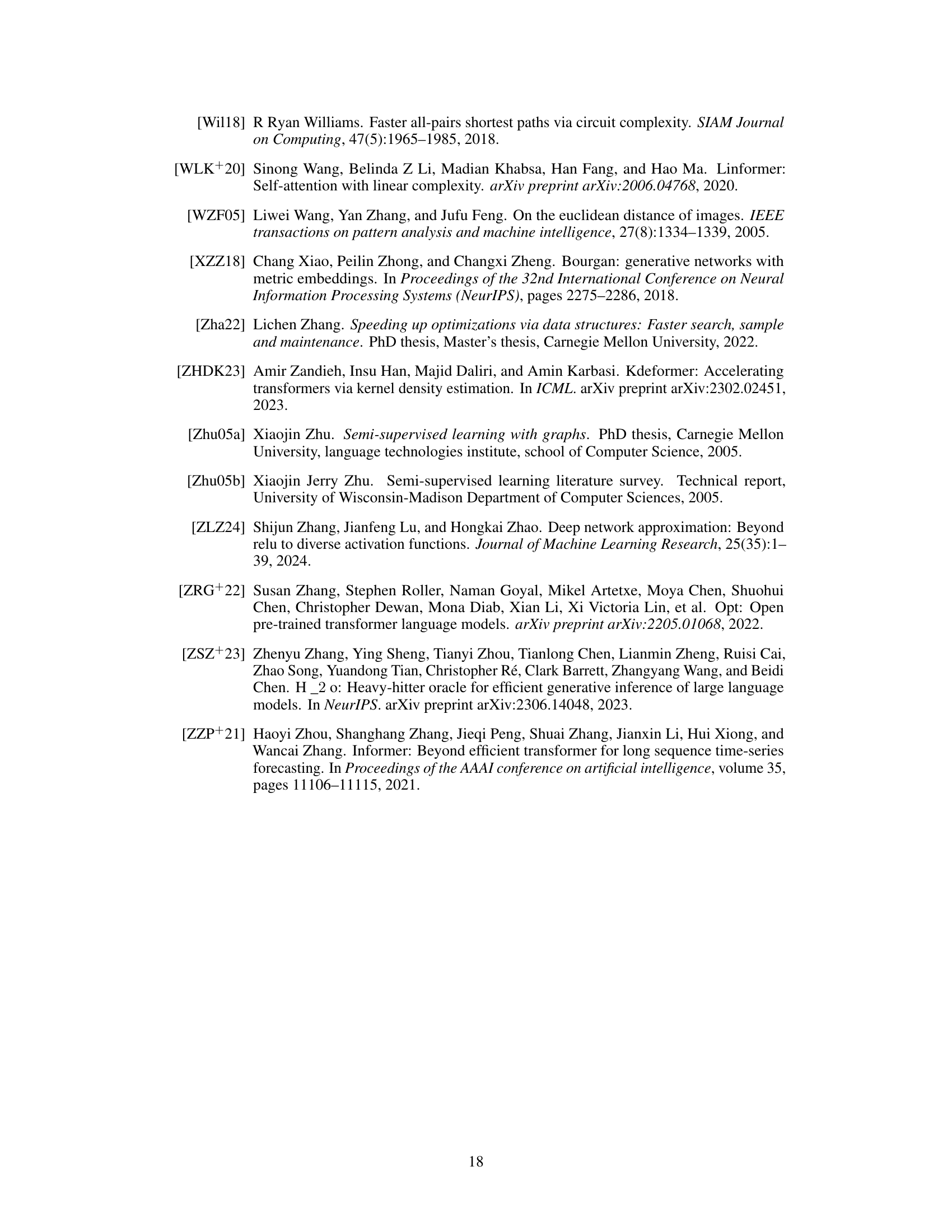

Full paper#