↗ OpenReview ↗ NeurIPS Proc. ↗ Chat

TL;DR#

Fairly allocating indivisible goods online is challenging because items arrive sequentially, and future items are unknown. Existing methods often fail to optimize social welfare while ensuring fairness. This paper tackles this challenge by modeling the problem as a variation of the multi-armed bandit problem, where each ‘arm’ represents allocating an item to a specific player. This approach allows for efficient learning of player preferences while upholding fairness constraints.

The paper introduces a novel explore-then-commit algorithm that addresses the limitations of existing approaches. The algorithm leverages unique properties of fairness constraints to achieve a regret of Õ(T²/³), meaning its performance approaches that of an optimal algorithm that knows the player values in advance. Crucially, the algorithm maintains either envy-freeness or proportionality in expectation, thus ensuring fairness. The authors also prove a lower bound of Ω(T²/³) regret, showing that their algorithm is close to optimal.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in online fair division and multi-armed bandits. It bridges these two important areas by framing fair online allocation as a multi-armed bandit problem, introducing novel fairness machinery based on fundamental properties of envy-freeness and proportionality, and providing a tight algorithm with a proven regret bound. This opens exciting avenues for exploring fairness in dynamic resource allocation, leading to more practical and equitable algorithms.

Visual Insights#

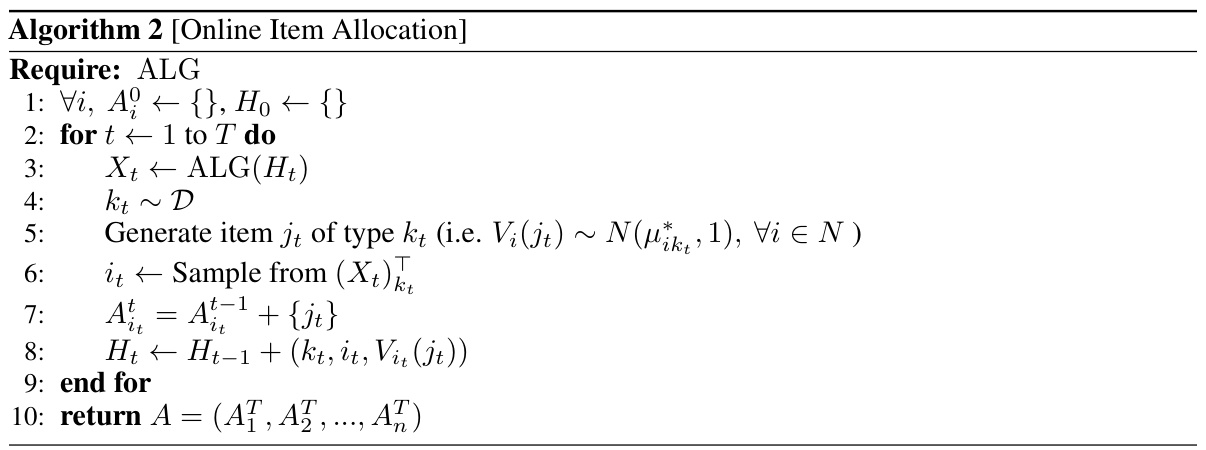

Algorithm 2 provides a pseudocode representation of the online item allocation process within the paper’s model. It details how, at each time step t, an algorithm (ALG) uses the history (Ht) of past allocations to produce a fractional allocation (Xt). An item type (kt) is sampled from a distribution (D), and then the item is allocated to a player (it) according to the probabilities in Xt. The algorithm maintains the allocation (Ai) for each player and the history (Ht). Finally, the complete allocation A is returned after all T items have been allocated.

Full paper#