↗ OpenReview ↗ NeurIPS Proc. ↗ Chat

TL;DR#

Autonomous driving heavily relies on accurate motion forecasting of surrounding agents. Current methods process each driving scene independently, ignoring the crucial temporal context between consecutive scenes. This limitation leads to suboptimal and inefficient forecasting.

RealMotion tackles this by introducing two integrated streams: a scene context stream that progressively accumulates historical scene information, and an agent trajectory stream that sequentially refines predictions. The framework also includes a data reorganization strategy to enhance the realism of the datasets. RealMotion demonstrates state-of-the-art performance on various Argoverse benchmarks, highlighting its effectiveness and efficiency in real-world autonomous driving scenarios.

Key Takeaways#

Why does it matter?#

This paper is important because it addresses the limitations of existing motion forecasting methods in autonomous driving by considering the temporal relationships between successive driving scenes. This is a significant contribution because motion forecasting is crucial for safe and reliable autonomous driving, and the proposed framework, RealMotion, demonstrates state-of-the-art performance with efficient real-world inference. The data reorganization strategy introduced to bridge the gap between existing benchmarks and real-world applications is also valuable for researchers.

Visual Insights#

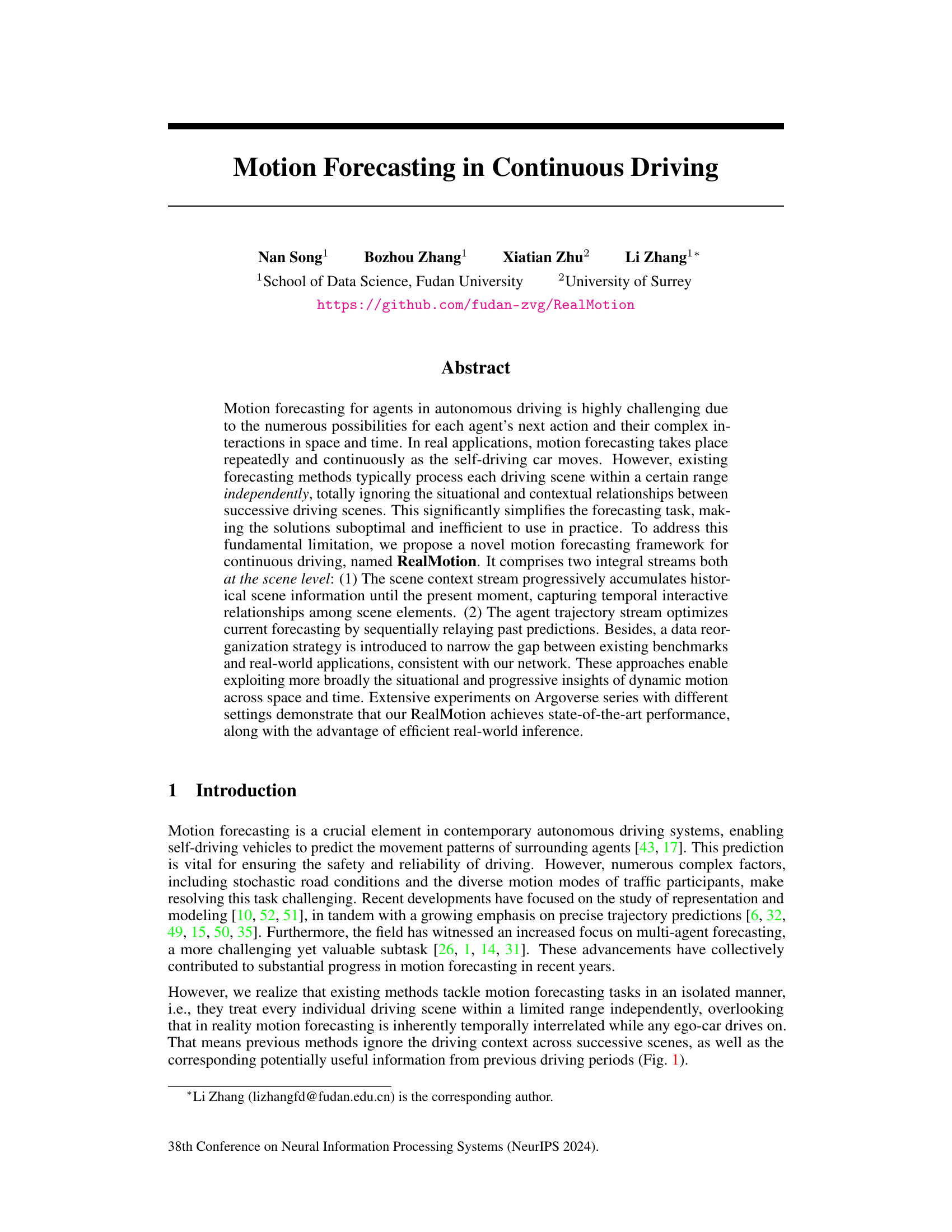

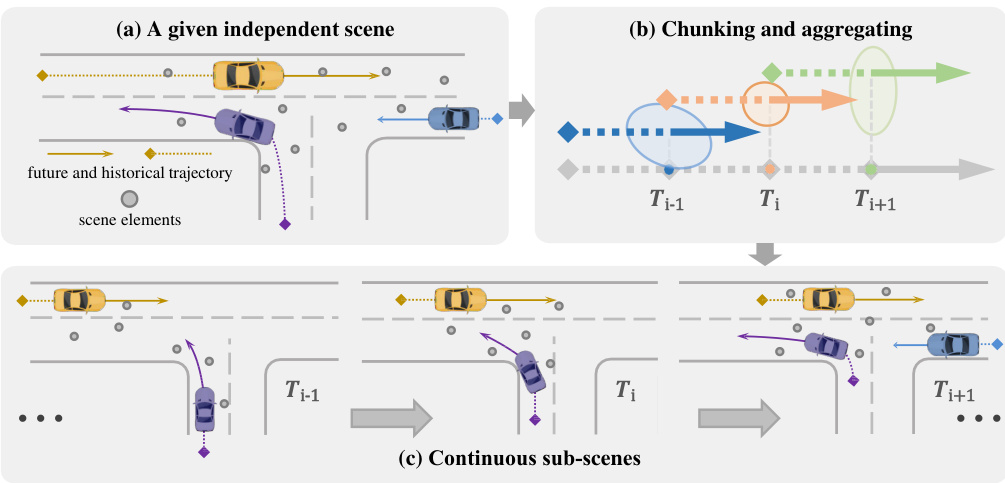

This figure compares traditional motion forecasting methods with the proposed RealMotion approach. (a) shows how existing methods typically process each driving scene in isolation, ignoring the temporal relationships between scenes. (b) illustrates RealMotion’s approach of continuously accumulating historical information across scenes, allowing it to capture temporal interactions and relationships between scene elements. (c) provides a specific example demonstrating RealMotion’s ability to predict the actions of agents that may currently be out of view, such as a pedestrian hidden behind an obstacle, by leveraging historical information and context from previous scenes.

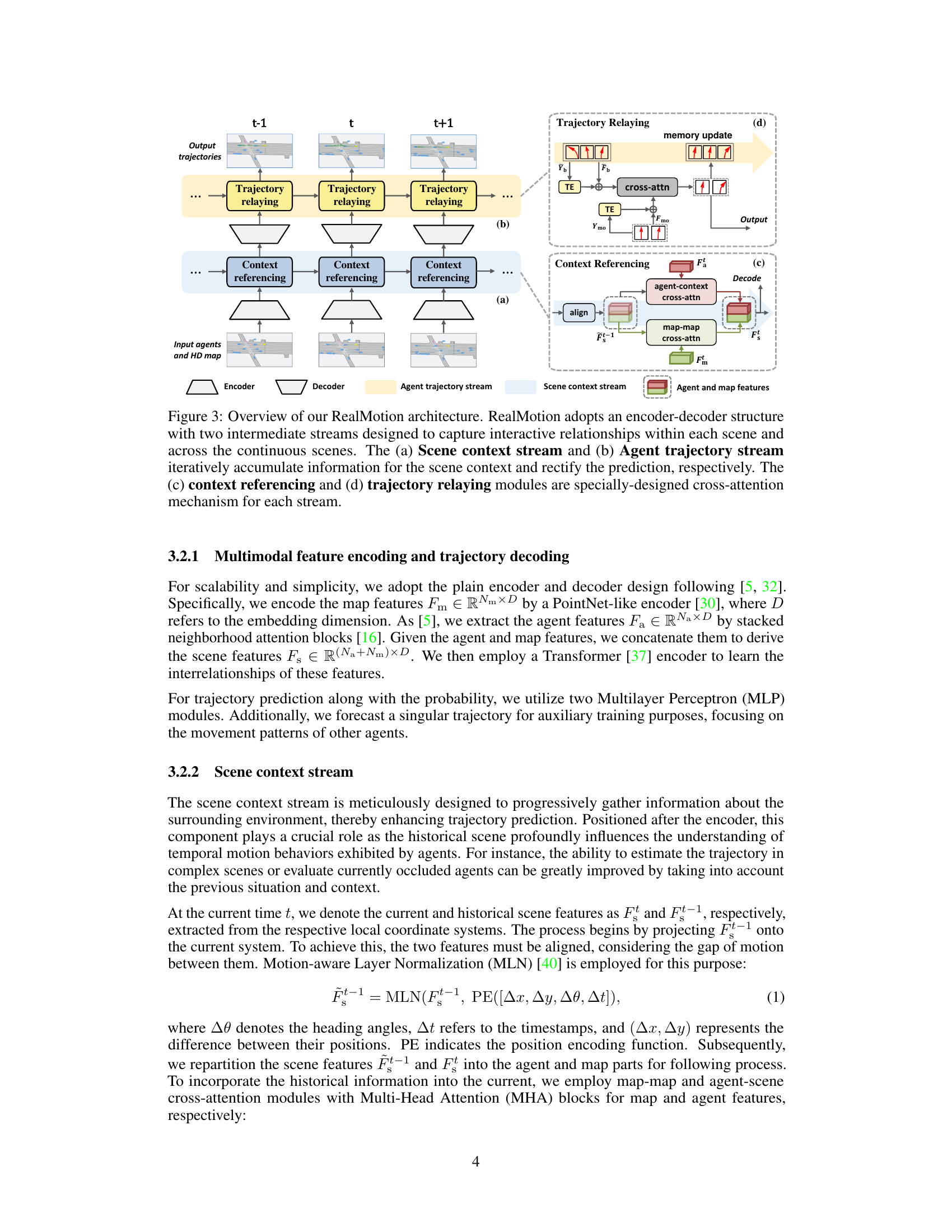

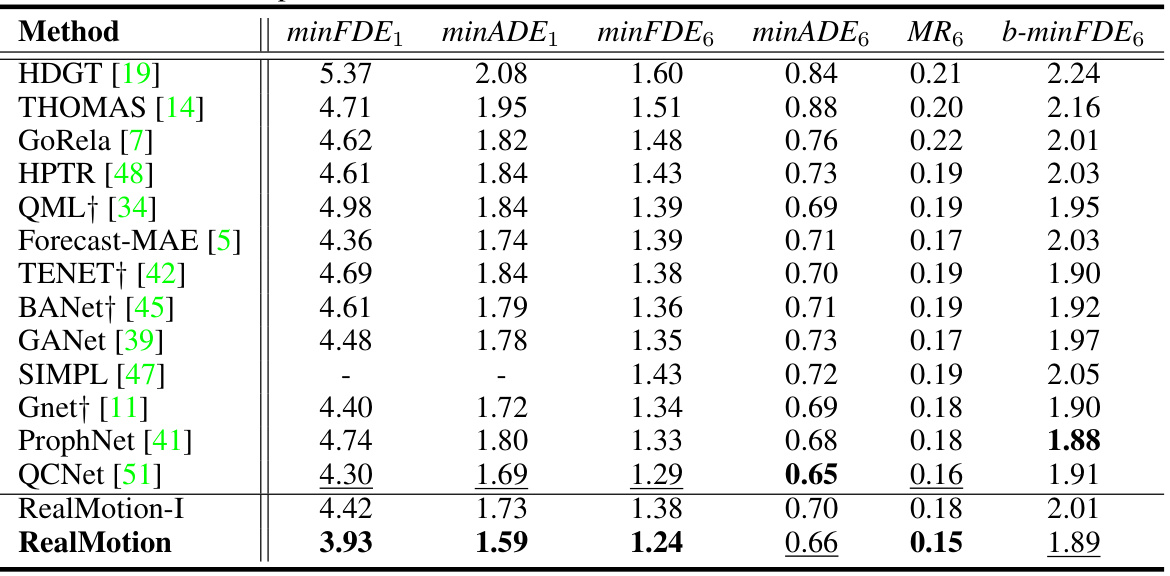

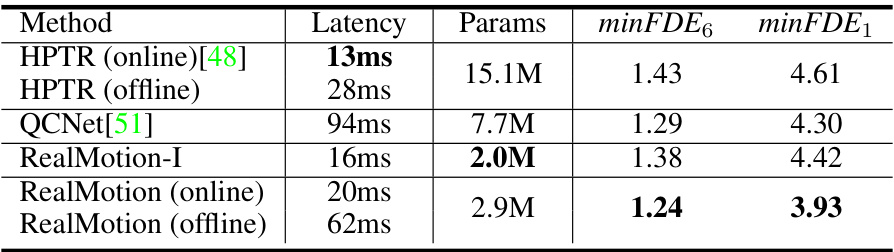

This table compares the performance of the proposed RealMotion model with other state-of-the-art methods on the Argoverse 2 motion forecasting benchmark. It shows minimum final displacement error (minFDE), minimum average displacement error (minADE), miss rate (MR), and a modified minFDE (b-minFDE) for forecasting horizons of 1 and 6 seconds. RealMotion-I is a baseline version of the model that doesn’t use the novel temporal modeling components.

In-depth insights#

Temporal Motion#

Analyzing temporal motion in the context of autonomous driving necessitates a deep understanding of how agents move and interact over time. RealMotion’s strength lies in its ability to accumulate historical scene information and context across consecutive driving scenarios, unlike traditional methods. This progressive accumulation is crucial for predicting future trajectories, especially when dealing with complex interactions and occlusions. The temporal stream in RealMotion not only leverages past predictions to refine current forecasts but also explicitly captures temporal relationships between agents and the environment. The continuous driving context addressed by RealMotion improves the quality of predictions, particularly in challenging situations such as crowded intersections. The method’s reliance on temporal information emphasizes the inherent interconnectedness of motion over time in real-world driving, providing a more robust and adaptable approach to motion forecasting than isolated, scene-by-scene methods.

RealMotion Model#

The RealMotion model is presented as a novel motion forecasting framework designed for continuous driving scenarios. It directly addresses limitations of existing methods which process individual driving scenes independently, ignoring temporal relationships between successive scenes. The model’s architecture is based on two integral streams: a scene context stream which progressively accumulates historical scene information, capturing temporal interactions; and an agent trajectory stream which optimizes current forecasting by sequentially using past predictions. A key aspect is the data reorganization strategy implemented to bridge the gap between existing benchmarks and real-world continuous driving data. RealMotion’s strengths lie in its ability to leverage both progressive and situational information across time and space, resulting in state-of-the-art performance and efficient real-world inference. The model is thoroughly evaluated on the Argoverse datasets demonstrating significant performance improvements over existing methods. However, further research may address model limitations concerning complex scenarios and subjective driving behavior.

Data Reorg#

The heading ‘Data Reorg,’ implying data reorganization, highlights a crucial preprocessing step. It addresses a key limitation of existing motion forecasting methods by transitioning from independently processing individual scenes to handling continuous driving scenarios. This transformation is vital because real-world driving involves temporally linked events, where understanding the context across consecutive scenes significantly improves prediction accuracy. The method likely involves segmenting trajectories and aggregating surrounding elements from past and future frames, creating temporally coherent sub-scenes. This process explicitly incorporates the temporal relationships crucial for realistic prediction and narrows the gap between benchmark datasets and real-world continuous driving data. The effectiveness of this data reorganization suggests a significant improvement in model performance and generalizability, moving beyond the limitations of isolated scene-based approaches.

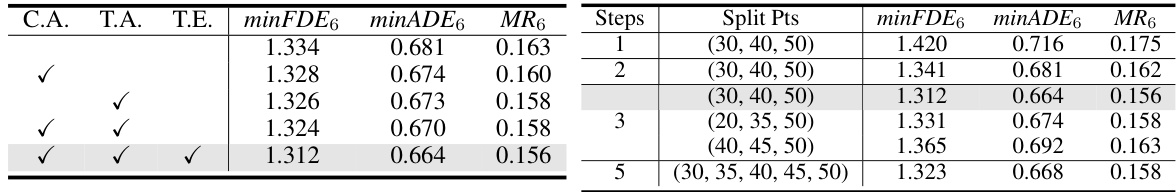

Ablation Study#

An ablation study systematically removes components of a model to assess their individual contributions. In the context of a motion forecasting model, this might involve disabling parts of the architecture, such as the scene context stream or agent trajectory stream. By observing the performance degradation after each removal, the importance of each component in achieving overall accuracy is revealed. This analysis provides crucial insights for model optimization and understanding, by demonstrating the strength of each module and identifying potential weaknesses. For instance, if removing the scene context stream significantly reduces accuracy, it suggests that integrating historical scene information is crucial for robust prediction. Conversely, a minimal performance drop upon removal of a specific component could indicate redundancy or areas for simplification to improve efficiency. Ultimately, a well-executed ablation study strengthens the paper’s findings by providing a clear picture of the model’s design choices and effectiveness, leading to a better understanding of what features are essential for achieving state-of-the-art performance.

Future Works#

Future research directions stemming from this work could involve several key areas. Extending the temporal modeling capabilities of RealMotion to handle even longer sequences and more complex traffic scenarios is crucial. This could involve exploring more sophisticated recurrent neural networks or transformer architectures. Improving the model’s ability to handle occluded agents is another important direction, potentially through the incorporation of advanced sensor fusion techniques or more robust representation methods. Addressing the challenges posed by subjective driving behaviors also warrants further investigation, perhaps by incorporating driver intention models or advanced behavioral analysis techniques. Finally, enhancing the model’s generalization capabilities across diverse geographical locations and driving conditions should be prioritized. Thoroughly evaluating the model’s performance in real-world scenarios will reveal strengths and limitations that can guide future model enhancements and broader applicability.

More visual insights#

More on figures

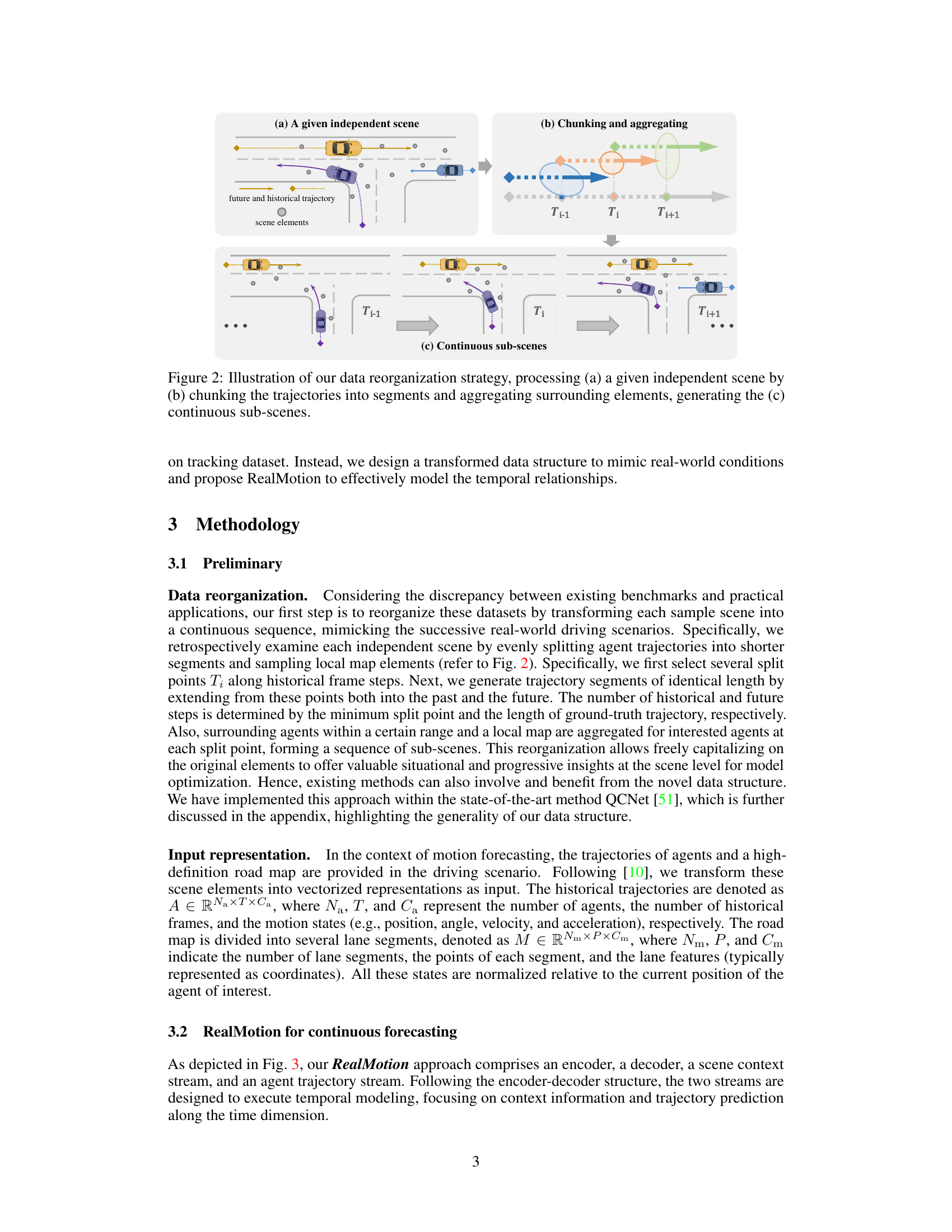

This figure illustrates the data reorganization strategy used in the RealMotion framework. It shows how a single, independent scene (a) is transformed into a sequence of continuous sub-scenes (c). This is done by dividing the trajectories into shorter segments (b) and including surrounding elements at each segment’s start point. The resulting continuous sub-scenes mimic real-world driving scenarios and provide historical and temporal context for more effective motion forecasting.

The figure shows the architecture of the RealMotion model, which consists of an encoder, decoder, scene context stream, and agent trajectory stream. The scene context stream progressively accumulates historical scene information to capture temporal interactions, while the agent trajectory stream optimizes current forecasting by sequentially relaying past predictions. Both streams utilize cross-attention mechanisms to process information and generate predictions.

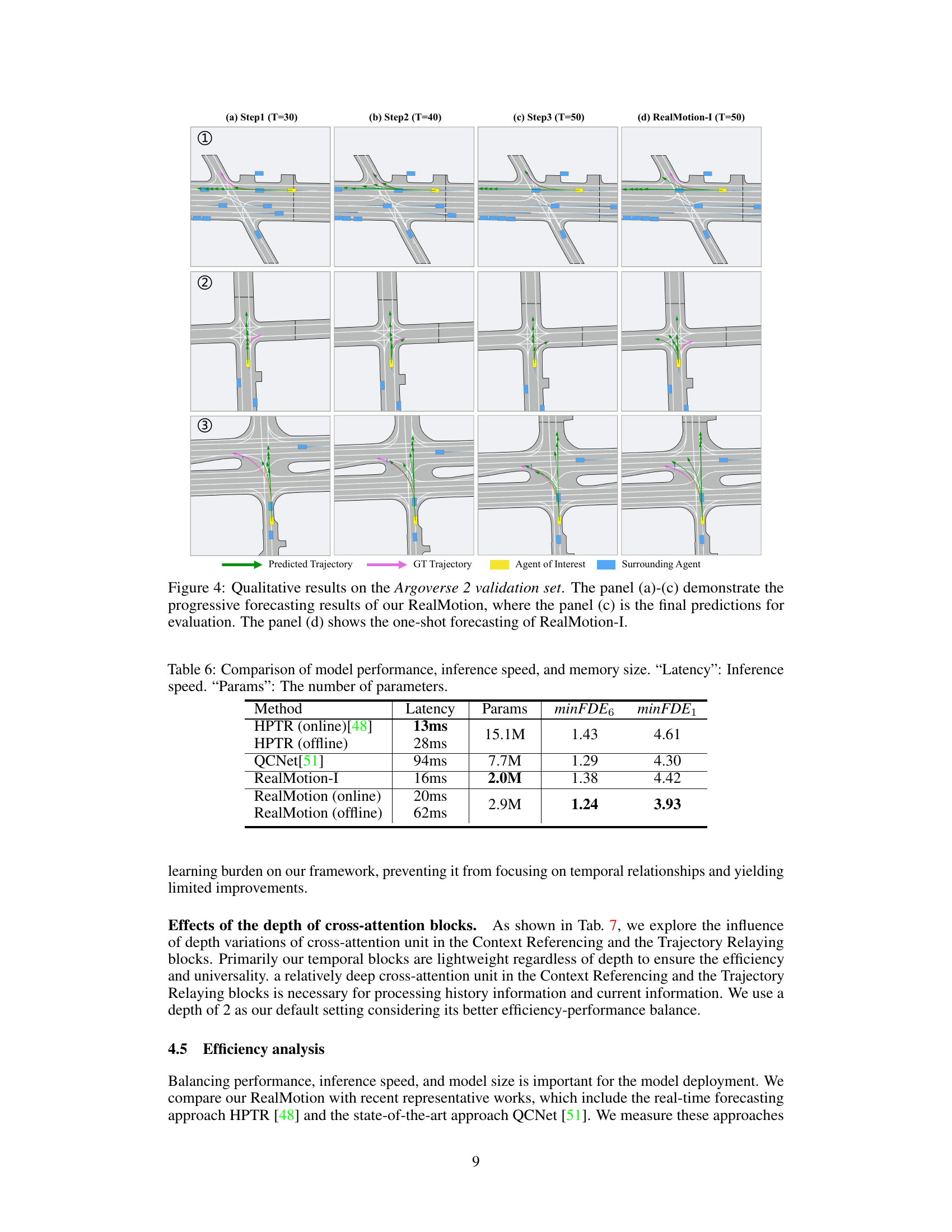

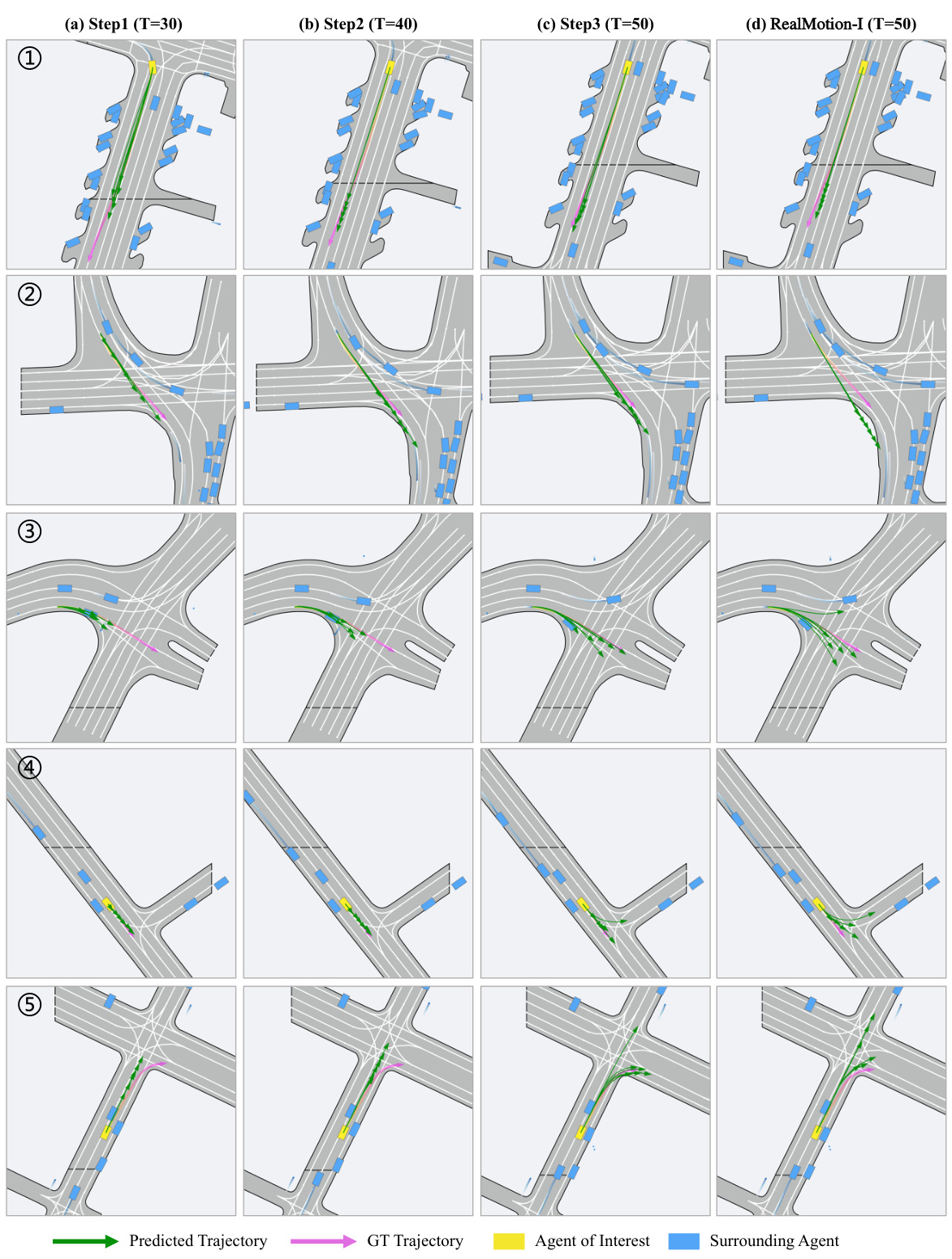

This figure shows a qualitative comparison of the RealMotion model’s performance against its independent variant (RealMotion-I). It presents four panels, each depicting a different stage of trajectory prediction for multiple agents in a driving scene. Panels (a), (b), and (c) show RealMotion’s progressive refinement of predictions over time, culminating in the final prediction in panel (c). In contrast, panel (d) illustrates the single-step prediction of RealMotion-I, highlighting the improvement achieved through RealMotion’s iterative approach.

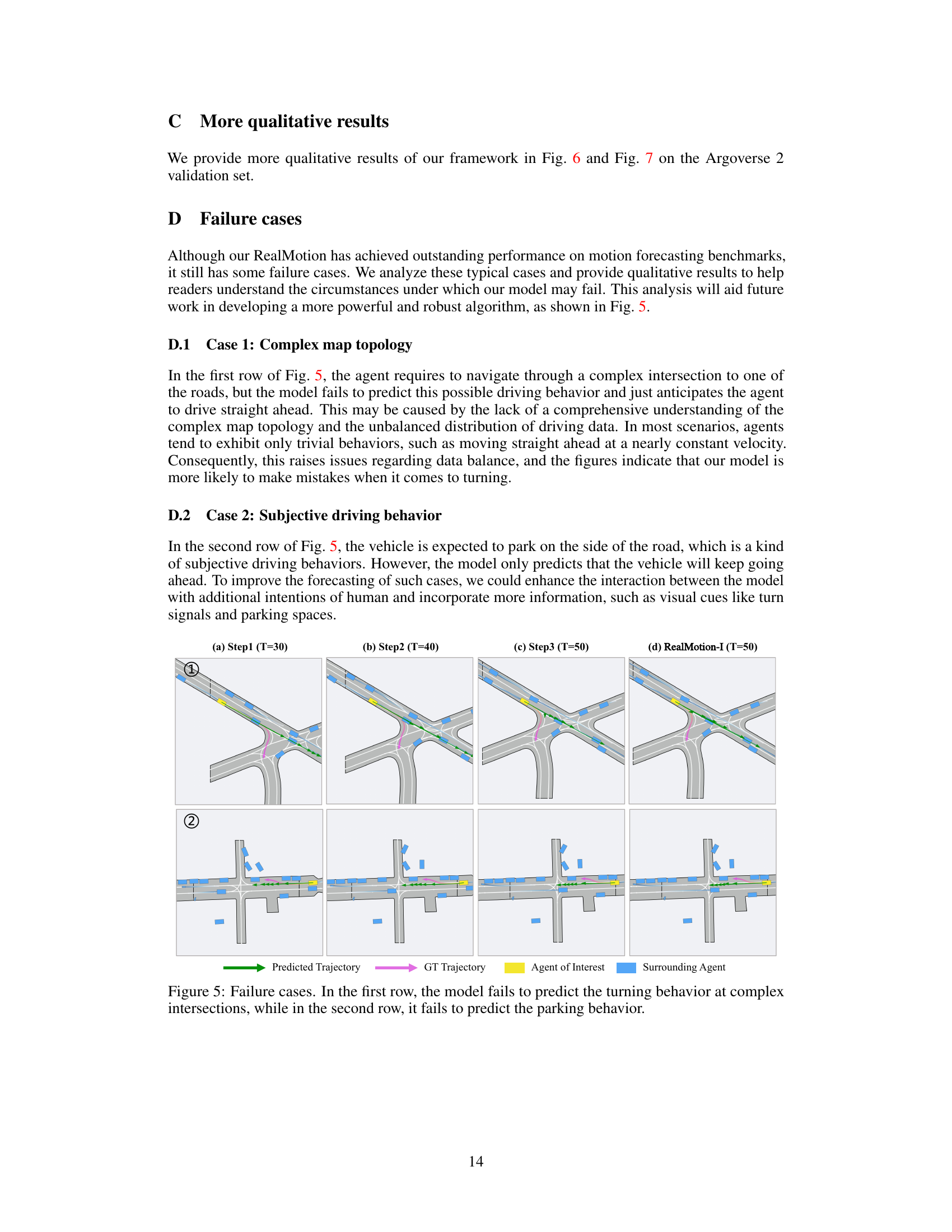

This figure shows two failure cases of the RealMotion model. The first case demonstrates the model’s inability to accurately predict turning maneuvers at complex intersections due to potentially insufficient training data or an incomplete understanding of the map’s topology. The second case highlights the model’s difficulty in predicting subjective driving behaviors, such as parking, indicating a lack of representation for these less frequent actions in the training data.

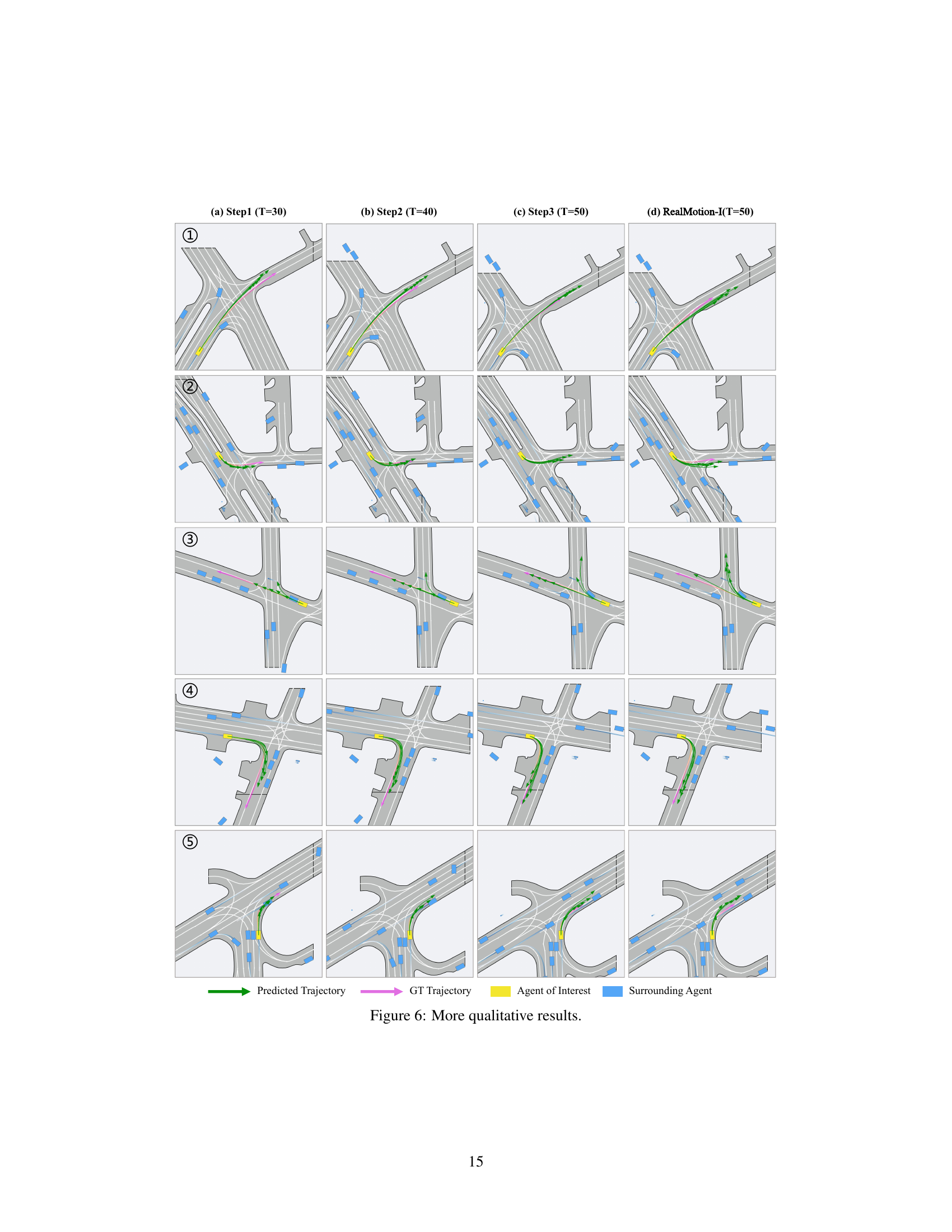

This figure presents a qualitative comparison of the RealMotion model’s performance against its independent variant (RealMotion-I). It shows the progressive refinement of trajectory predictions over time steps (a-c) for RealMotion, highlighting its ability to capture temporal relationships. In contrast, RealMotion-I performs one-shot forecasting, lacking the progressive refinement seen in RealMotion. The results are visualized on sample scenes from the Argoverse 2 validation set.

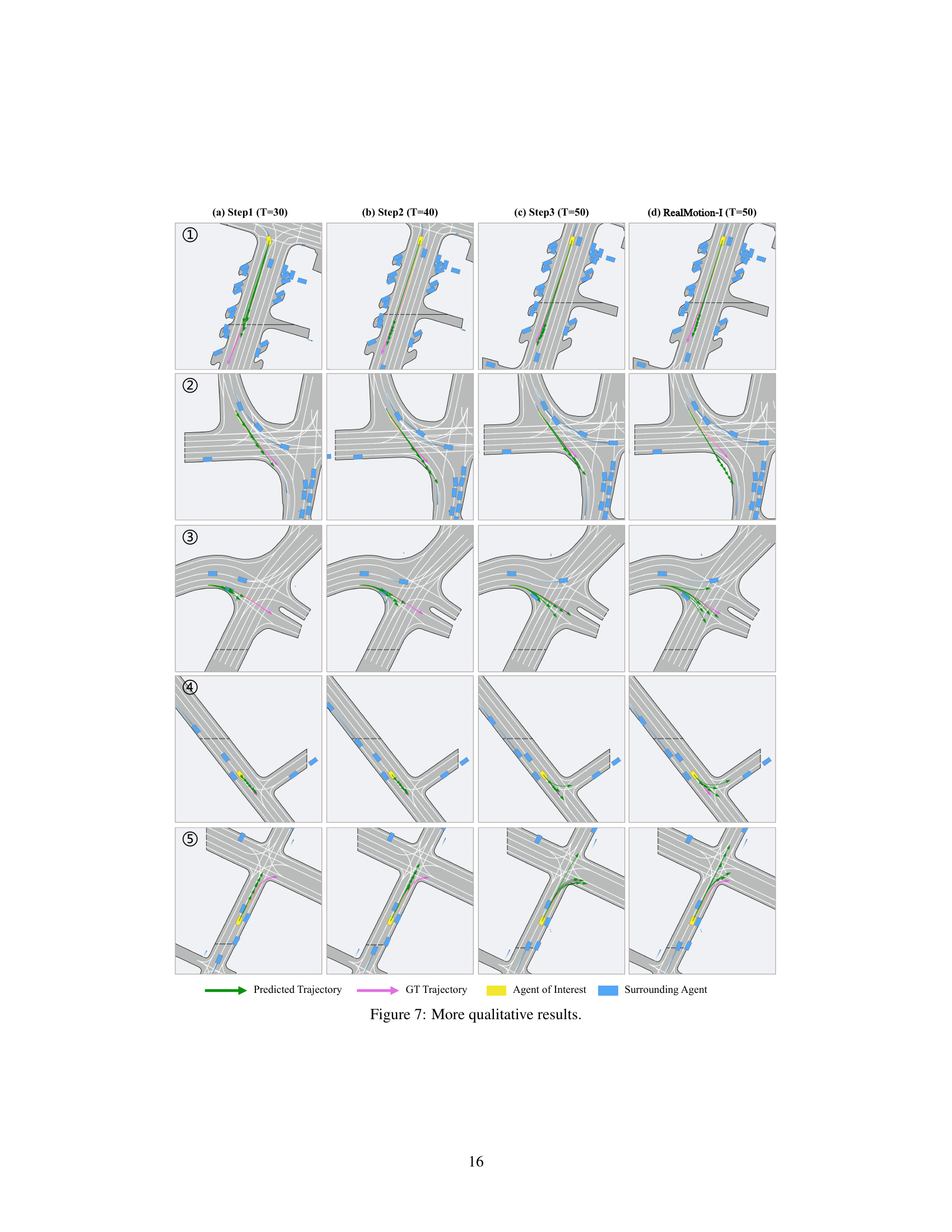

This figure shows a qualitative comparison of the RealMotion model’s performance against its independent variant (RealMotion-I). It displays the progressive forecasting results of RealMotion across three time steps, showcasing its ability to refine predictions over time. In contrast, RealMotion-I provides only a single, one-shot prediction, highlighting the advantage of RealMotion’s iterative approach.

More on tables

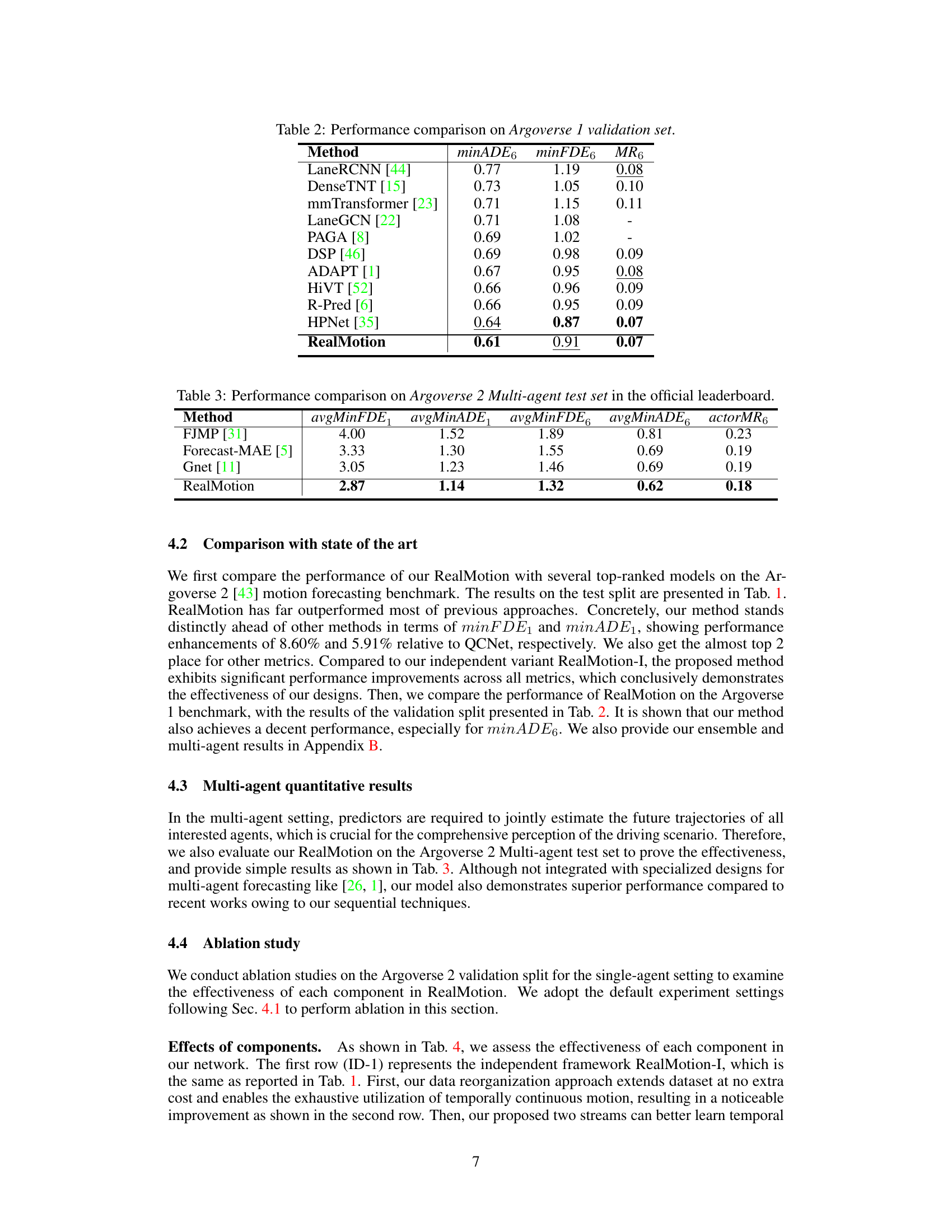

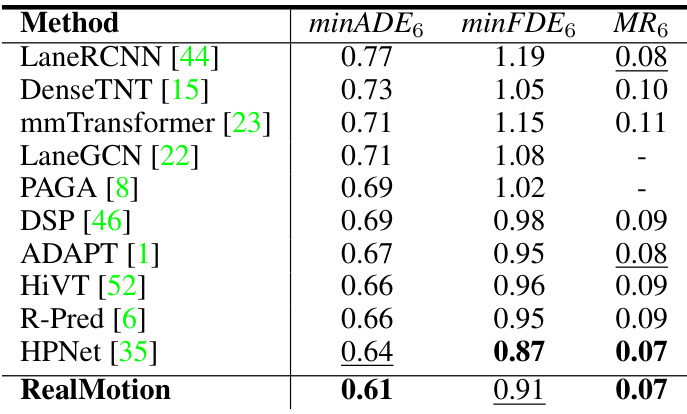

This table compares the performance of RealMotion against other state-of-the-art methods on the Argoverse 1 validation dataset. The metrics used are minimum Average Displacement Error (minADE6), minimum Final Displacement Error (minFDE6), and Miss Rate (MR6). Lower values for minADE6 and minFDE6 indicate better performance, while a lower MR6 indicates fewer missed predictions.

This table compares the performance of RealMotion against other state-of-the-art methods on the Argoverse 2 Multi-agent test set. The metrics used are average minimum final displacement error (avgMinFDE), average minimum average displacement error (avgMinADE), and actor miss rate (actorMR). Subscripts 1 and 6 indicate the metrics are calculated at 1 and 6 seconds into the future, respectively. The results demonstrate RealMotion’s superior performance in multi-agent motion forecasting.

This table presents the ablation study results on the core components of the proposed RealMotion model. It shows the impact of using continuous data, the scene context stream, and the agent trajectory stream on the model’s performance, measured by several metrics (minFDE1, minADE1, minFDE6, minADE6, MR6, b-minFDE6) on the Argoverse 2 validation set. Each row represents a different configuration, with checkmarks indicating the inclusion of a specific component. The results demonstrate the individual and combined contributions of these components to the overall model performance.

This table compares the performance of RealMotion against other state-of-the-art motion forecasting methods on the Argoverse 2 test dataset. It shows various metrics such as minimum final displacement error (minFDE), minimum average displacement error (minADE), and miss rate (MR), at prediction horizons of 1 and 6 seconds. A version of RealMotion without the temporal modeling components (RealMotion-I) is also included for comparison, highlighting the contribution of the proposed novel architecture.

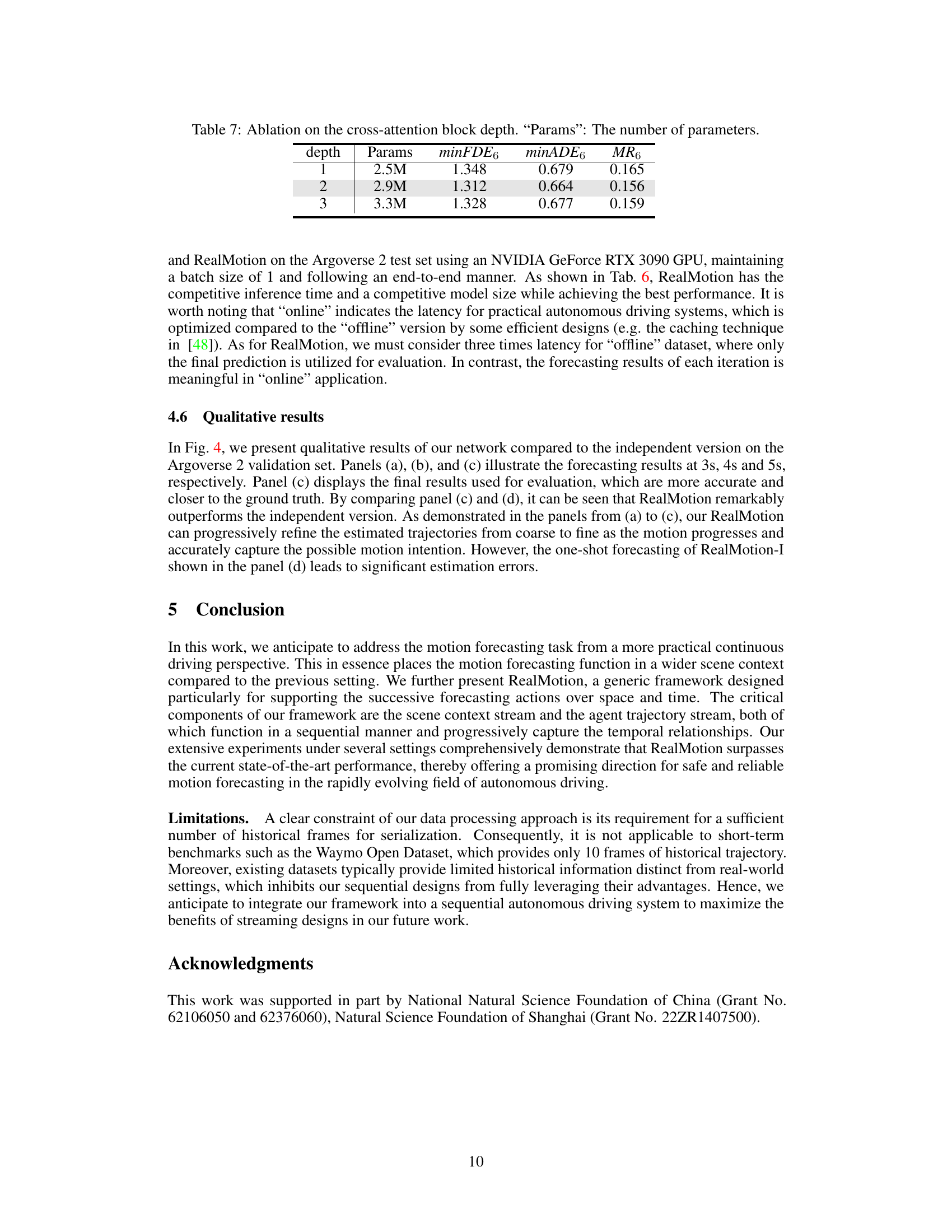

This table compares the performance (minFDE6 and minFDE1), inference speed (Latency), and model size (Params) of different motion forecasting models: HPTR (online and offline), QCNet, and RealMotion (with independent variant and online/offline versions). It highlights RealMotion’s efficiency in achieving state-of-the-art performance.

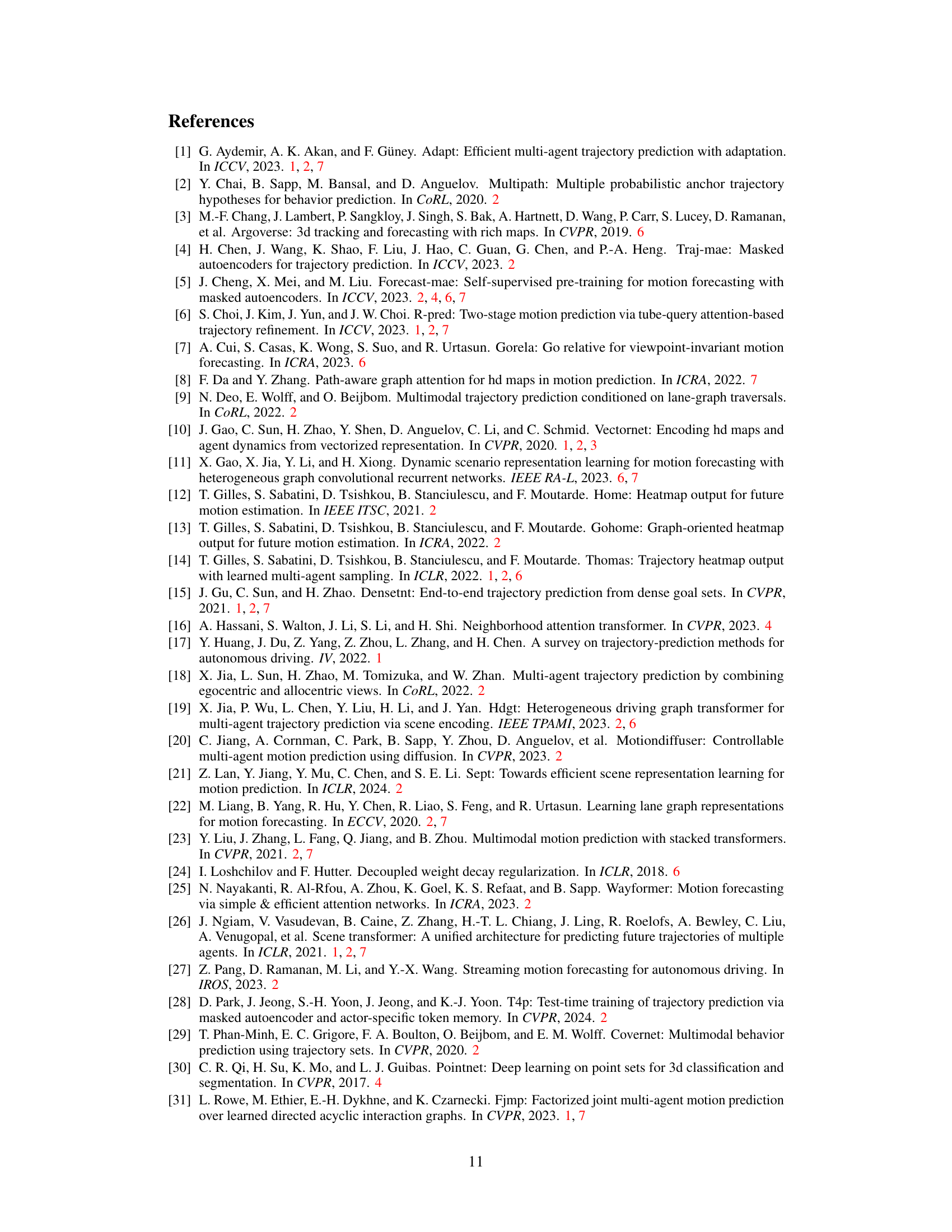

This table presents the ablation study on the cross-attention block depth, showing the impact of varying the depth (1, 2, and 3) on the model’s performance metrics (minFDE6, minADE6, and MR6). The number of parameters (Params) for each depth is also listed. The results indicate the optimal depth for balancing performance and model complexity.

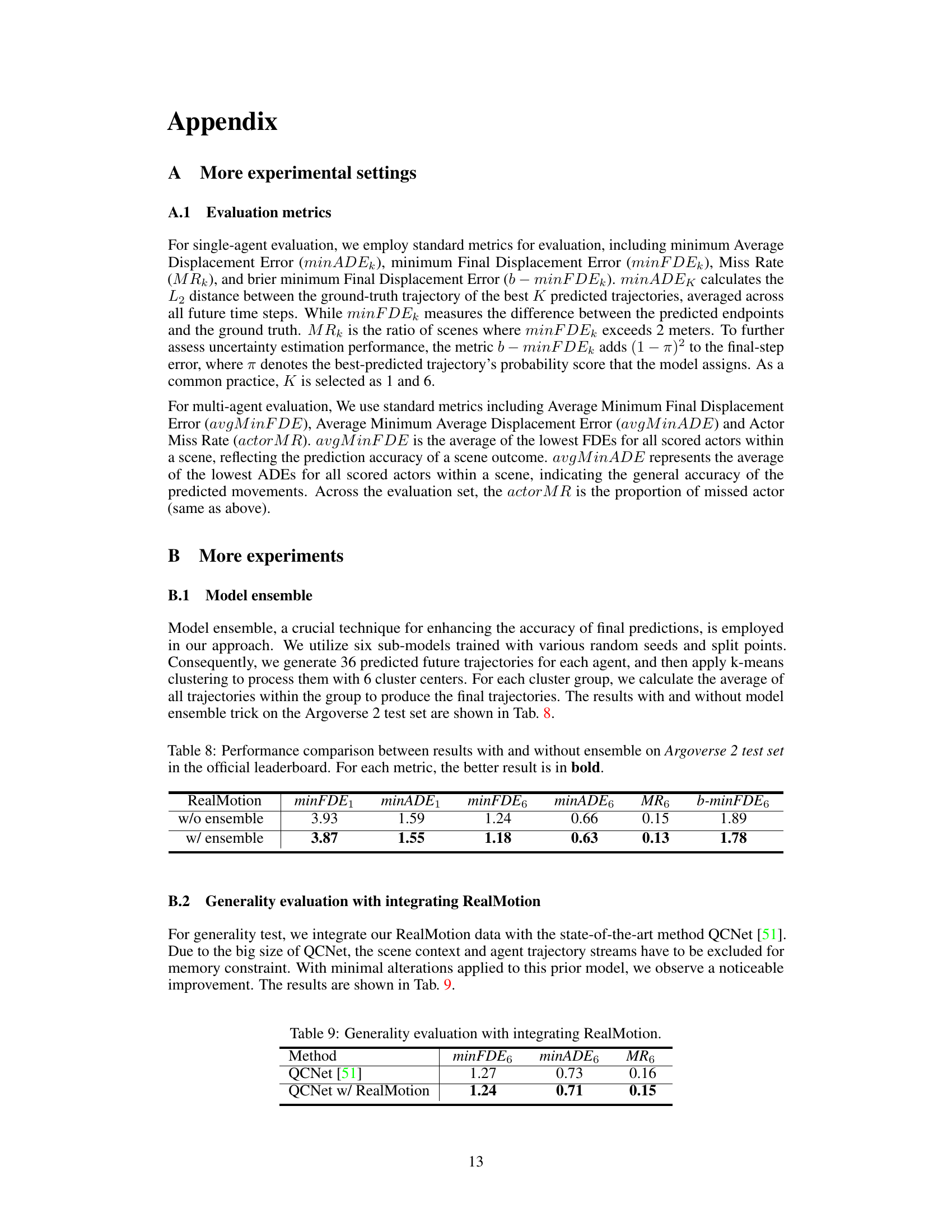

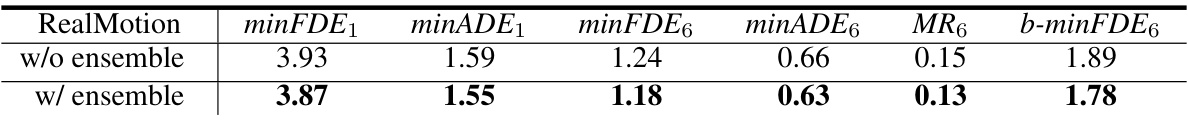

This table compares the performance of the RealMotion model with and without model ensembling on the Argoverse 2 test dataset. Model ensembling is a technique where multiple models are trained independently and their predictions are combined to improve overall accuracy. The table shows that using model ensembling (w/ ensemble) leads to better performance across all metrics (minFDE1, minADE1, minFDE6, minADE6, MR6, b-minFDE6). The metrics measure the accuracy of trajectory prediction for autonomous driving. Lower values are better.

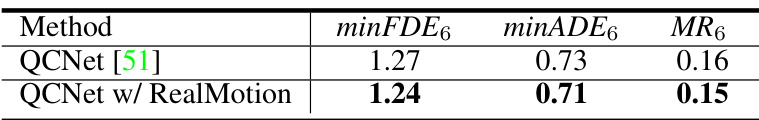

This table demonstrates the improved performance of integrating the RealMotion data reorganization and stream modules with the existing QCNet model. The results show a noticeable improvement across all three metrics (minFDE6, minADE6, MR6), indicating the effectiveness of RealMotion in enhancing trajectory prediction accuracy.

Full paper#