↗ OpenReview ↗ NeurIPS Proc. ↗ Chat

TL;DR#

Many brain regions respond selectively to specific semantic categories like faces or bodies. However, some out-of-category images also activate neurons in these regions, likely due to shared visual features. This paper addresses the challenge of visualizing these shared features using a deep-learning approach. The existing methods do not explicitly highlight shared features between within-category and out-of-category images.

The proposed method identifies a reference image with similar feature activation patterns from the preferred category. Then it applies parallel backpropagation to highlight image regions containing shared features driving the neuron’s response, while enhancing shared and attenuating non-shared dimensions. This approach was applied to body-selective regions in macaque IT cortex, successfully visualizing the shared features that drive neural responses to objects and providing insights into neural preferences.

Key Takeaways#

Why does it matter?#

This paper is important because it introduces a novel method for visualizing the features that drive neural responses in category-selective brain regions. This is crucial for understanding how these regions process visual information and could lead to new insights into the neural mechanisms underlying object recognition and categorization. The method is also widely applicable and could be used to analyze various visual perception processes.

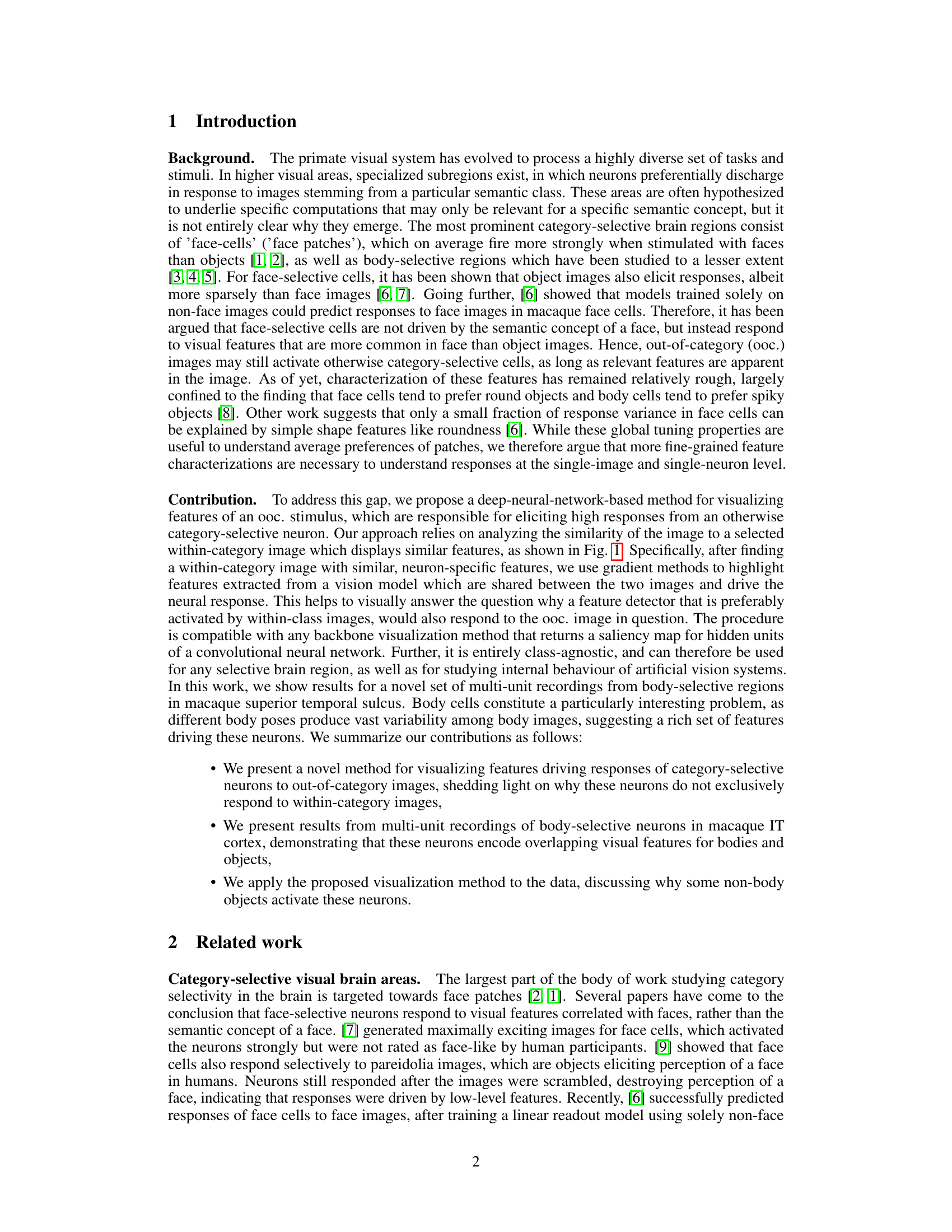

Visual Insights#

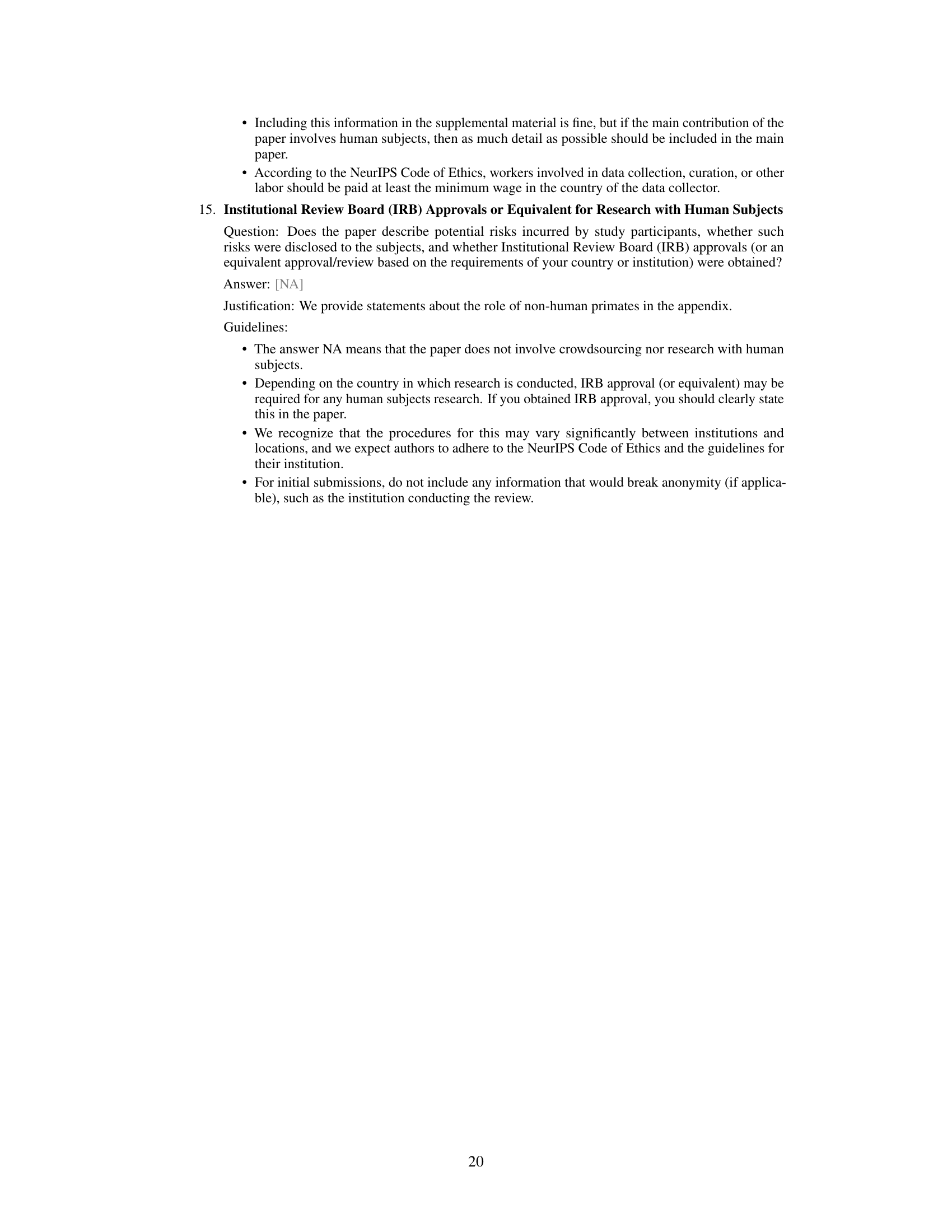

This figure illustrates the workflow of the proposed method. It starts with an out-of-category image that strongly activates a neuron selective for a specific category (e.g., bodies). The method computes the latent CNN activations for this image and compares it to a set of within-category reference images to find the most similar one. Finally, a parallel backpropagation method highlights the shared features between the out-of-category image and the most similar within-category image, visually explaining why the neuron responds to the out-of-category stimulus.

In-depth insights#

Shared-Feature Vis#

The heading “Shared-Feature Vis” suggests a visualization technique focusing on shared visual features between different image categories. The core idea is likely to identify and highlight the common visual aspects that cause a neuron (or a model) to respond to seemingly unrelated images. This method moves beyond simple category-based analysis, delving into the underlying feature representations. By visualizing these shared features, the technique likely aims to improve our understanding of neural selectivity and the factors contributing to the activation of neurons in response to out-of-category stimuli. It offers insights into the generalization capabilities of neural networks and how they bridge the gap between specific and broader visual interpretations. The approach could provide more nuanced explanations of how specific neurons respond to diverse stimuli and contribute valuable insights into the nature of neural processing. Successfully implemented, this technique could offer more detailed interpretations than average category response profiles by focusing on specific shared features, revealing how certain parts of objects might trigger responses in neurons tuned to a particular category.

Parallel Backprop#

The heading ‘Parallel Backprop’ suggests a novel method for visualizing neural network activations. The core idea likely involves simultaneously backpropagating gradients from multiple layers or branches within the network, perhaps focusing on shared features between different images. This parallelization likely accelerates the computation of gradient-based saliency maps, which are used to highlight image regions that strongly influence a neuron’s response. The method may leverage this to compare activations between an out-of-category image (one that unexpectedly activates a neuron) and an in-category image, aiming to identify common features driving the response. The benefit is improved visualization of the shared underlying features responsible for the unexpected activation, leading to greater understanding of neural preferences. This is especially beneficial for high-level visual areas, where neuron selectivity can be complex and influenced by shared features across different categories. The method’s efficiency and interpretability are potentially enhanced by parallel processing, allowing researchers to probe the neural representations of complex visual concepts more effectively.

Macaque IT Study#

The macaque IT study is a crucial component of the research, providing neurophysiological data that validates the computational model. The study carefully selected neurons from body-selective regions in macaque IT cortex, ensuring the data’s relevance to the research question. The detailed experimental setup, including stimuli presentation and data acquisition, is described to guarantee reproducibility. The results of this study show a generalization of the model’s predictions from within-category (body) images to out-of-category images, indicating that the model’s identified features are not solely specific to bodies but may be present and relevant in other object categories. This finding supports the core argument of the paper and strengthens the conclusions drawn from the computational model. The careful analysis of the neural responses to both body and object images offers valuable insights into the neural representation of object features, particularly within body-selective regions.

Model Generalizes#

The heading ‘Model Generalizes’ suggests a key finding: the model’s ability to extrapolate beyond its training data. This is crucial because a model’s true value lies not in memorizing training examples but in its capacity to generalize to unseen data. The authors likely demonstrate that a model trained on a specific category (e.g., body images) successfully predicts neural responses to images outside that category (e.g., objects). This generalization implies that the model has learned underlying features shared by both categories, rather than simply memorizing specific instances. The success of this generalization validates the model’s ability to capture fundamental visual representations and highlights the shared visual features that drive neural responses, providing insights into neural coding principles and brain function. A successful generalization strengthens the study’s implications for understanding brain mechanisms underlying object recognition and demonstrates the power of the proposed methodology.

Future Directions#

Future research could explore several promising avenues. Expanding the methodology to other brain regions known for category selectivity, such as face-selective areas, would validate the approach’s generalizability and reveal potential differences in the types of features driving neural responses across different categories. Investigating the impact of different CNN architectures on the visualizations would help determine the method’s robustness and identify optimal model choices for specific applications. Exploring variations in neural responses across different individuals, and the effect of individual variations in brain anatomy and connectivity on shared features and preference patterns, could improve understanding of the inherent biological variability. Finally, combining the visualization technique with behavioral experiments could generate valuable insights into the relationship between neural preferences, visual perception, and conscious experience.

More visual insights#

More on figures

This figure illustrates the parallel backpropagation method used in the paper to visualize shared features between two images. First, a pre-trained Convolutional Neural Network (CNN) extracts latent feature activations from both images. These activations are then backpropagated to the pixel level, resulting in gradient maps for each image. A weighted Hadamard product, incorporating the neuron’s specific readout weights, combines these gradient maps. Finally, a weighted sum of the resulting gradients generates the final pixel saliency maps highlighting the shared features driving the neuron’s response.

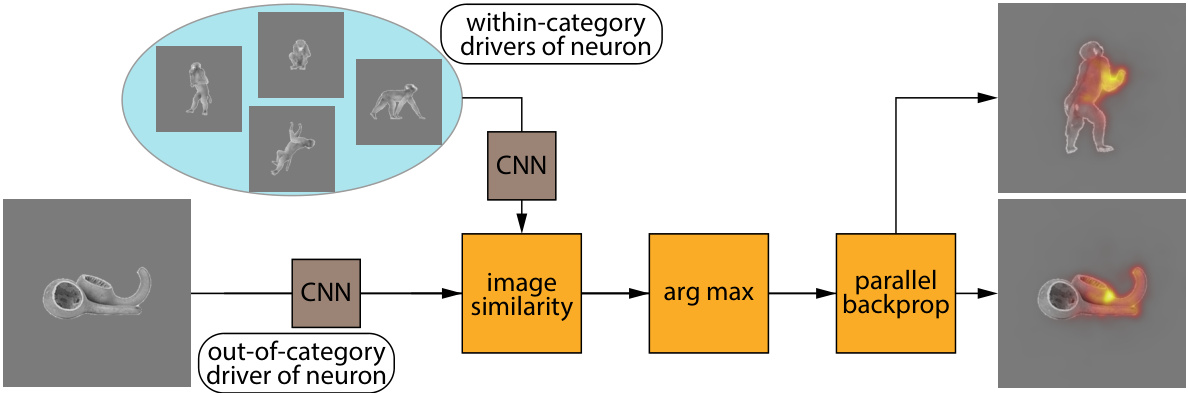

Figure 3 shows the results of testing how well the model generalizes from body images to object images. Part (a) shows a strong positive correlation between predicted and recorded neural responses for object images, demonstrating that the features predictive of body image responses also predict object image responses. The correlation is higher for body images, and the consistency of responses across recording sessions is demonstrated. Part (b) shows that neural responses to the objects are higher than average for body and object images in most cases.

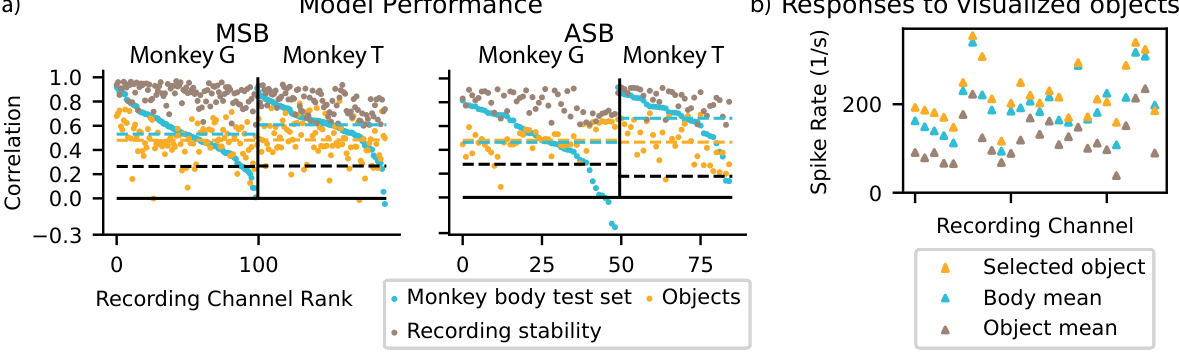

This figure shows the results of applying the parallel backpropagation method to visualize shared features between out-of-category objects and within-category body images for multi-unit recordings from the posterior region of the macaque STS. Each subplot displays a pair of images: an out-of-category object image (left) that strongly activates a particular neuron, and the most similar within-category body image (right) based on a neuron-specific similarity metric. The overlayed heatmaps highlight the shared image regions that contribute to the neural response.

This figure displays the results of applying the parallel backpropagation method to visualize shared features between out-of-category objects and within-category body images for neurons in the macaque STS posterior region. Each subplot represents a different recording channel. The left image in each subplot shows an object that strongly activates the neuron, while the right image shows the most similar body image based on a neuron-specific similarity metric. The heatmaps overlayed on the images highlight the shared features driving the neural response.

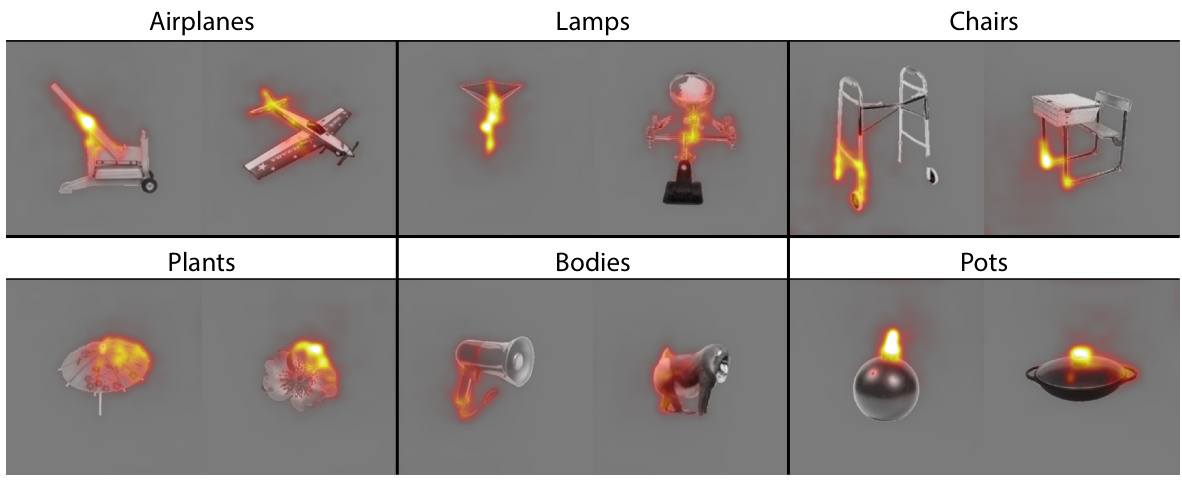

This figure shows the results of applying the parallel backpropagation method on six synthetic, category-selective neurons. Each row represents a different neuron, with its preferred category labeled above. The visualizations highlight features shared between within-category and out-of-category (ooc) images, explaining why ooc images activate the neuron.

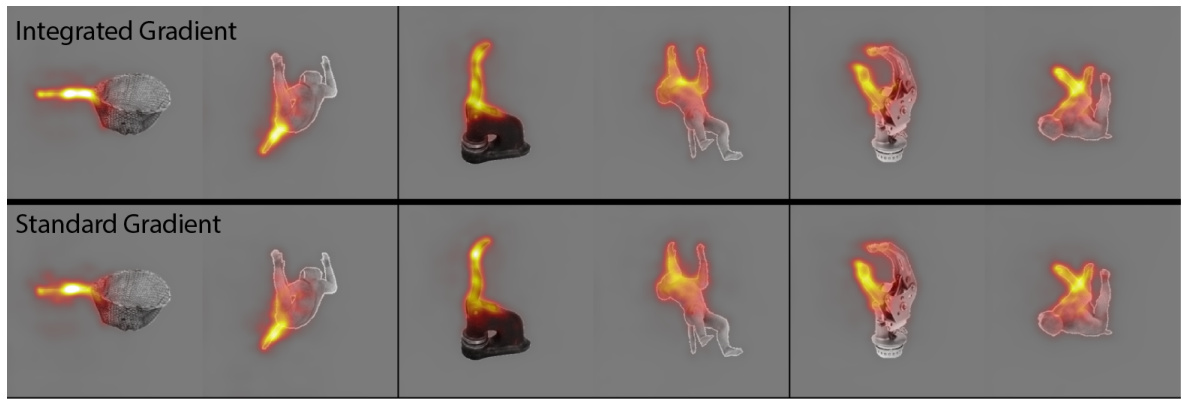

This figure compares the results of using two different methods for computing the Jacobian of the latent features with respect to image pixels: Integrated Gradients and the standard gradient method. The top row displays visualizations generated using Integrated Gradients, while the bottom row shows visualizations obtained using the standard gradient method. Both methods aim to highlight image regions that are highly relevant for predicting neural responses. The similarity in the visualizations suggests that the choice of method has a minimal effect on the overall results.

Full paper#