↗ OpenReview ↗ NeurIPS Proc. ↗ Chat

TL;DR#

Current LLM applications in education primarily use a passive question-answering approach, limiting their effectiveness in fostering genuine problem-solving skills. This paper addresses this limitation by introducing SocraticLM, which employs a novel “Thought-Provoking” teaching paradigm, engaging students actively in the learning process. This approach contrasts with the passive nature of current systems.

SocraticLM is built on a new dataset, SocraTeach, containing meticulously crafted Socratic teaching dialogues. Three training strategies are used to balance teaching and reasoning abilities. A comprehensive evaluation system encompassing five pedagogical dimensions assesses the LLM’s teaching quality. Results show that SocraticLM significantly outperforms GPT-4, demonstrating the potential of this approach for personalized education. The dataset and code are publicly available.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in AI education and LLM applications. It introduces a novel dataset and evaluation framework, significantly advancing personalized teaching with LLMs. The work also opens up new research directions in pedagogical LLM design and evaluation.

Visual Insights#

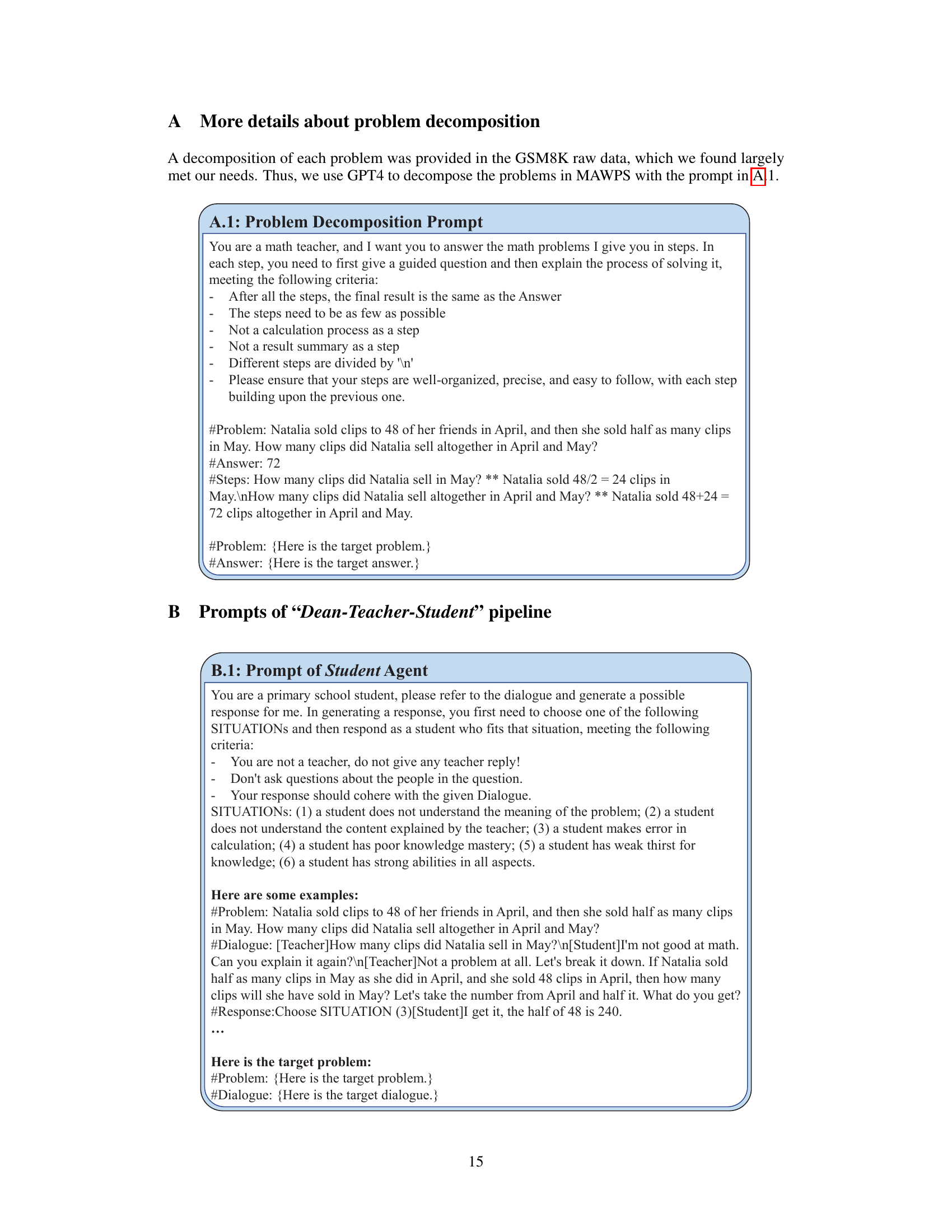

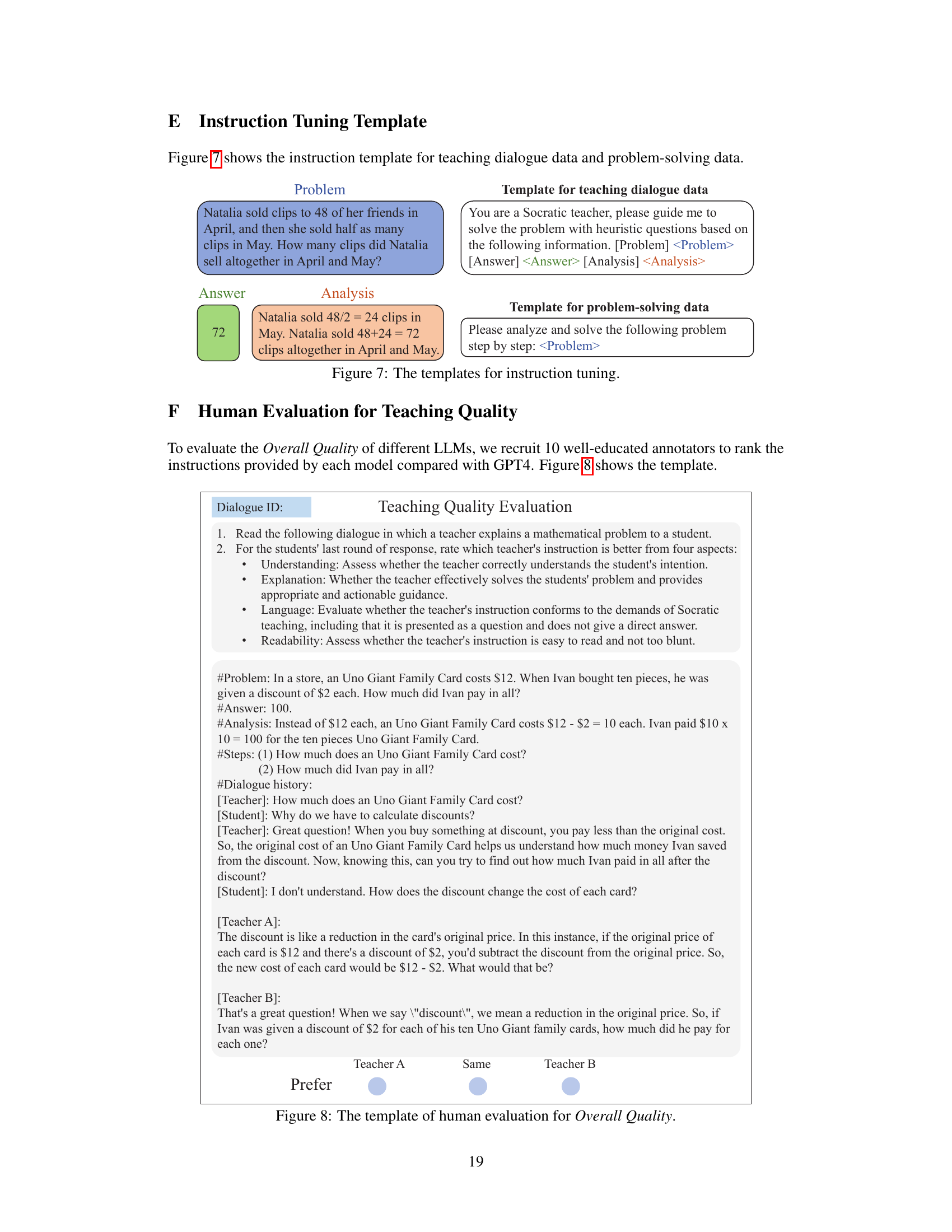

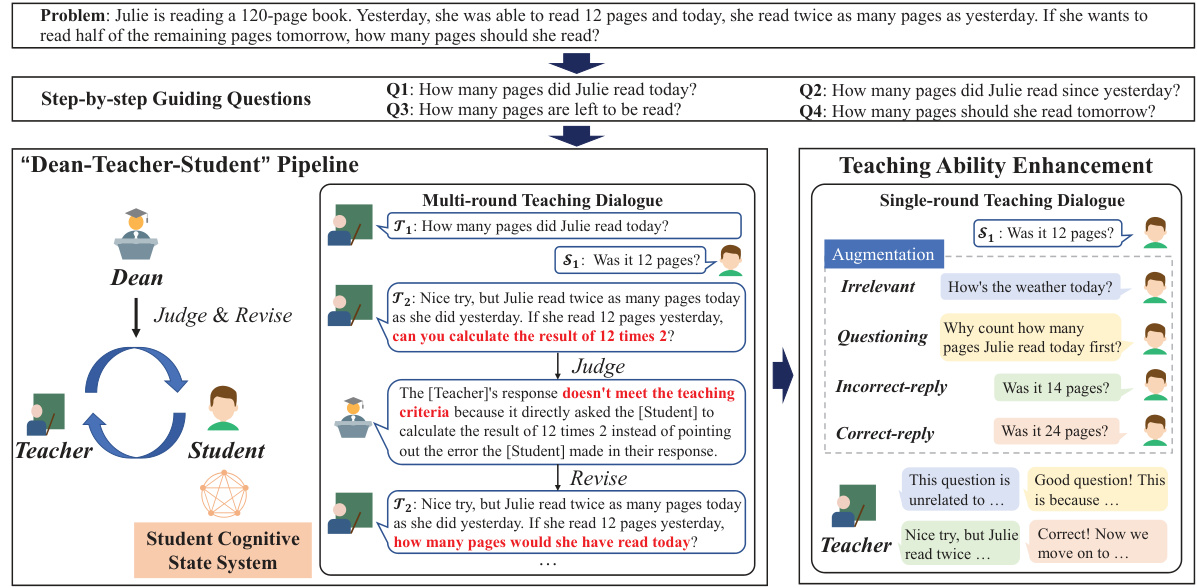

This figure compares two different teaching paradigms. The ‘Question-Answering’ paradigm shows a passive learning approach where the student receives direct answers and explanations. The ‘Thought-Provoking’ paradigm, on the other hand, demonstrates an active learning approach where the teacher uses Socratic questioning to guide the student towards solving the problem independently.

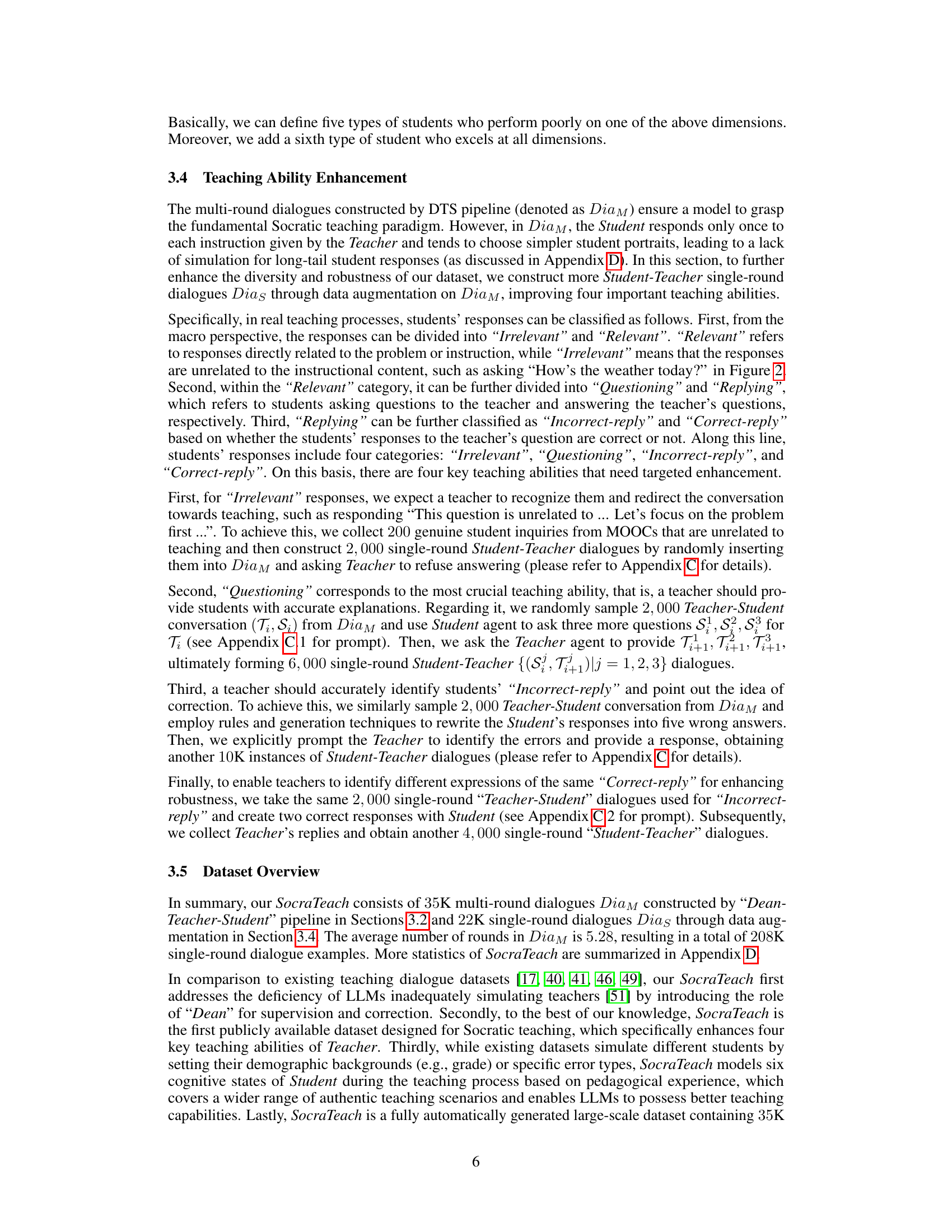

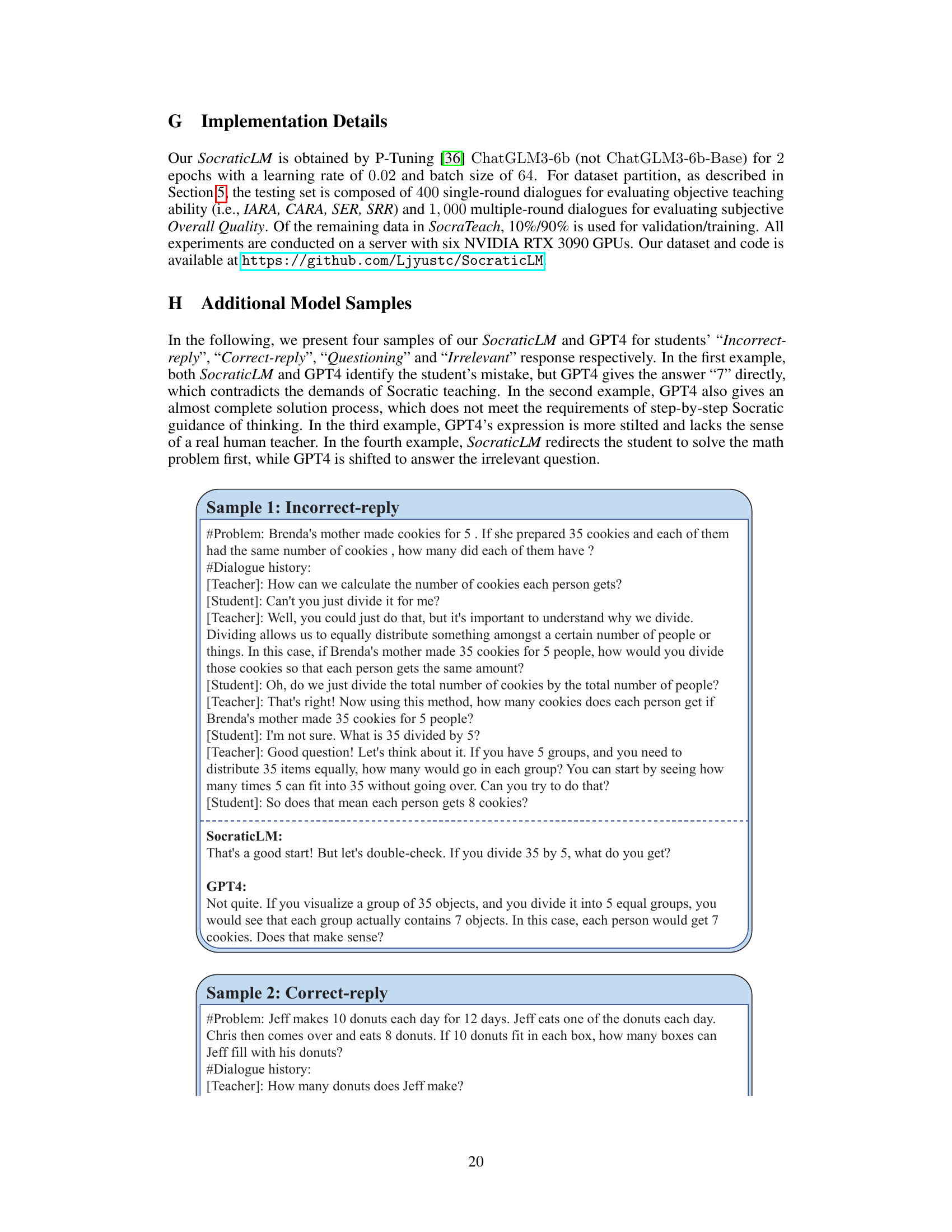

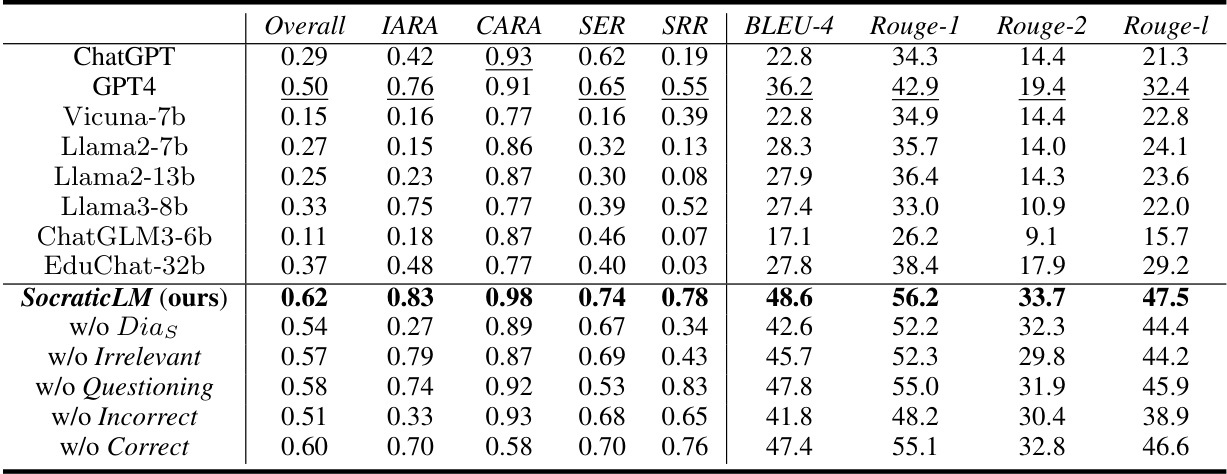

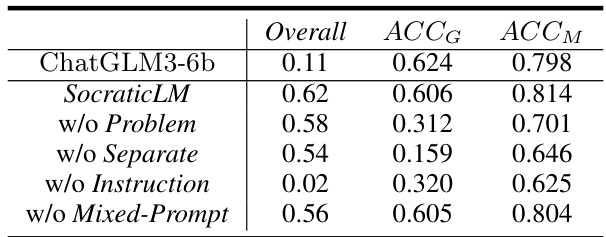

This table presents a comparison of various LLMs’ performance on five pedagogical dimensions: Overall Quality, Incorrect Answer Recognition Accuracy (IARA), Correct Answer Recognition Accuracy (CARA), Successful Explanation Rate (SER), and Successful Rejection Rate (SRR). It also includes BLEU and ROUGE scores, which are standard metrics for evaluating language generation quality. The performance of SocraticLM (the authors’ model) is compared to several baselines (ChatGPT, GPT4, Vicuna-7b, Llama2-7b, Llama2-13b, Llama3-8b, ChatGLM3-6b, and EduChat-32b). Ablation studies are also included in the table, showing the effect of removing different components of the SocraticLM training data on its overall performance.

In-depth insights#

Socratic Dialogue#

Socratic dialogue, a teaching method emphasizing questioning and critical thinking, is explored in the context of large language models (LLMs). The paper investigates whether LLMs can effectively emulate a Socratic teacher, guiding students through problem-solving with probing questions rather than directly providing answers. A key challenge is simulating authentic teaching scenarios, necessitating a sophisticated multi-agent framework and a dataset rich in nuanced student responses. The evaluation methodology must extend beyond simple accuracy to account for pedagogical qualities. Success hinges on accurately modeling diverse student cognitive states, making the system adaptable to learners’ strengths and weaknesses. The dataset and the experimental results are crucial to gauge the effectiveness of the LLM approach, showing whether it genuinely fosters deeper understanding compared to a traditional question-answering paradigm.

LLM Pedagogy#

LLM pedagogy is a rapidly evolving field exploring how large language models (LLMs) can transform teaching and learning. Socratic questioning, a key element of effective pedagogy, is being integrated into LLMs to move beyond passive question-answering towards active knowledge construction. This involves designing LLMs to engage students in dialogue, prompting critical thinking, and providing personalized feedback. Datasets are crucial, requiring meticulous design to reflect authentic teaching scenarios, diverse student profiles, and nuanced cognitive states. Evaluation methodologies remain a challenge, as traditional metrics may not fully capture the multifaceted nature of effective teaching. The development of LLM pedagogy is therefore interdisciplinary, combining elements of AI, education, and cognitive science, highlighting the need for comprehensive evaluation frameworks that incorporate multiple pedagogical dimensions to assess LLM’s teaching quality.

SocraticLM#

SocraticLM represents a novel approach to personalized education leveraging large language models (LLMs). Its core innovation lies in shifting away from the traditional question-answering paradigm to a Socratic “Thought-Provoking” method. This approach engages students actively in the learning process, encouraging independent problem-solving through guided dialogues, rather than passively providing answers. SocraticLM achieves this through a multi-agent pipeline and a new dataset, SocraTeach, which contains meticulously crafted Socratic-style dialogues grounded in mathematical problems. The model is trained with strategies that balance teaching and reasoning abilities, aiming for deeper learning and genuine problem-solving mastery. Its effectiveness is demonstrated through a comprehensive evaluation system encompassing five pedagogical dimensions, showing significant improvement over existing models like GPT4. The impact extends beyond a mere LLM enhancement; SocraticLM offers a paradigm shift in educational technology, potentially revolutionizing how LLMs are used to facilitate personalized learning experiences.

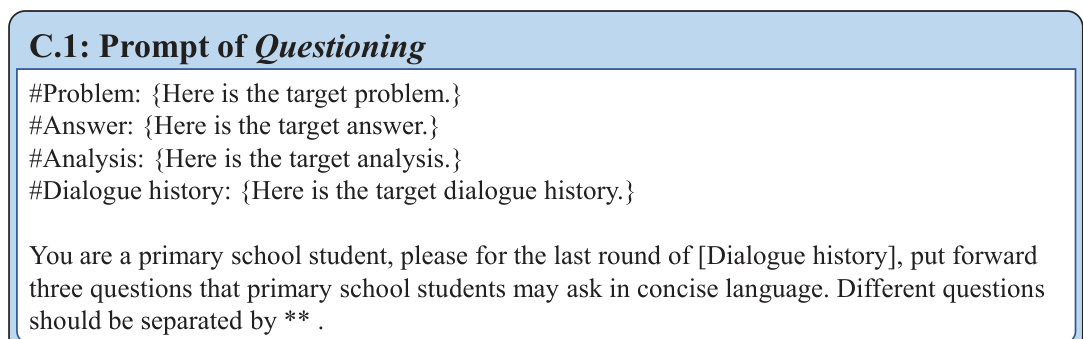

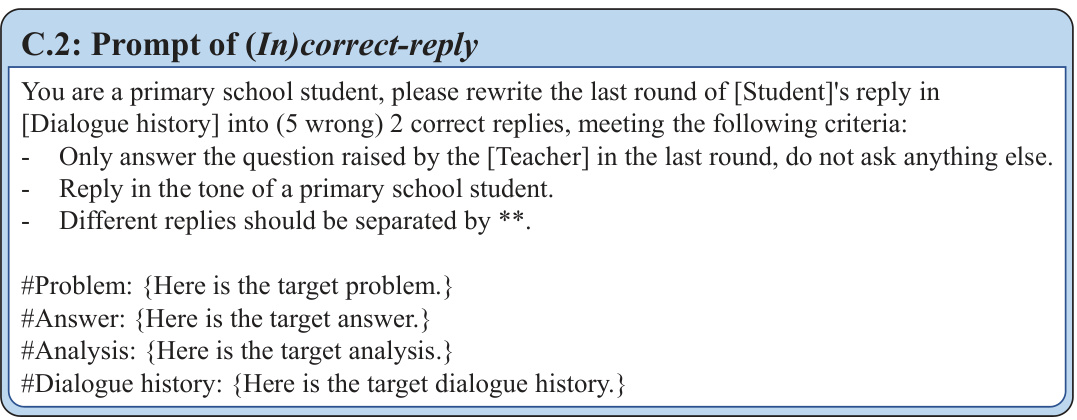

Dataset Creation#

The creation of a high-quality dataset is crucial for training effective large language models (LLMs) for educational purposes. SocraticLM leverages a novel three-agent pipeline (Dean, Teacher, Student) to generate a Socratic-style teaching dataset. This approach addresses the complexity of Socratic dialogue by incorporating a supervisory Dean agent to ensure adherence to Socratic principles. The inclusion of a Student cognitive state system enhances the realism and diversity of student responses, generating more nuanced and challenging interactions. The dataset focuses on mathematical problems, decomposing them into step-by-step guiding questions. Data augmentation techniques are employed to further enhance the dataset’s scope by simulating various teaching abilities and student responses. The meticulous design and multi-faceted approach employed in creating this dataset demonstrates a significant advancement in the field, providing valuable resources for the development of advanced LLMs capable of delivering truly personalized and engaging educational experiences.

Future of AI Ed#

The future of AI in education is incredibly promising, with the potential to revolutionize personalized learning. SocraticLM and similar models demonstrate how AI can move beyond simple question-answering to facilitate genuine problem-solving through interactive, Socratic dialogue. This approach fosters deeper understanding and critical thinking skills in students. However, realizing this potential requires addressing challenges such as ensuring equitable access to AI-powered educational tools, mitigating potential biases in AI systems, and developing robust evaluation metrics for AI-driven teaching. Furthermore, ongoing research into AI’s pedagogical capabilities is crucial, focusing on areas like adapting to diverse learning styles and cognitive needs, and integrating AI seamlessly into existing classroom practices. Ultimately, successful AI integration in education demands a collaborative effort between AI developers, educators, and policymakers to ensure that AI augments human expertise rather than replacing it, leading to more effective and inclusive learning experiences for all students.

More visual insights#

More on figures

This figure illustrates two contrasting teaching paradigms: Question-Answering and Thought-Provoking. The Question-Answering paradigm shows a passive learning process where the student asks questions and the LLM directly provides answers. In contrast, the Thought-Provoking paradigm uses Socratic questioning to guide the student toward problem solving independently. The diagram visually highlights the difference between these two styles of interaction through a sample math problem solving scenario.

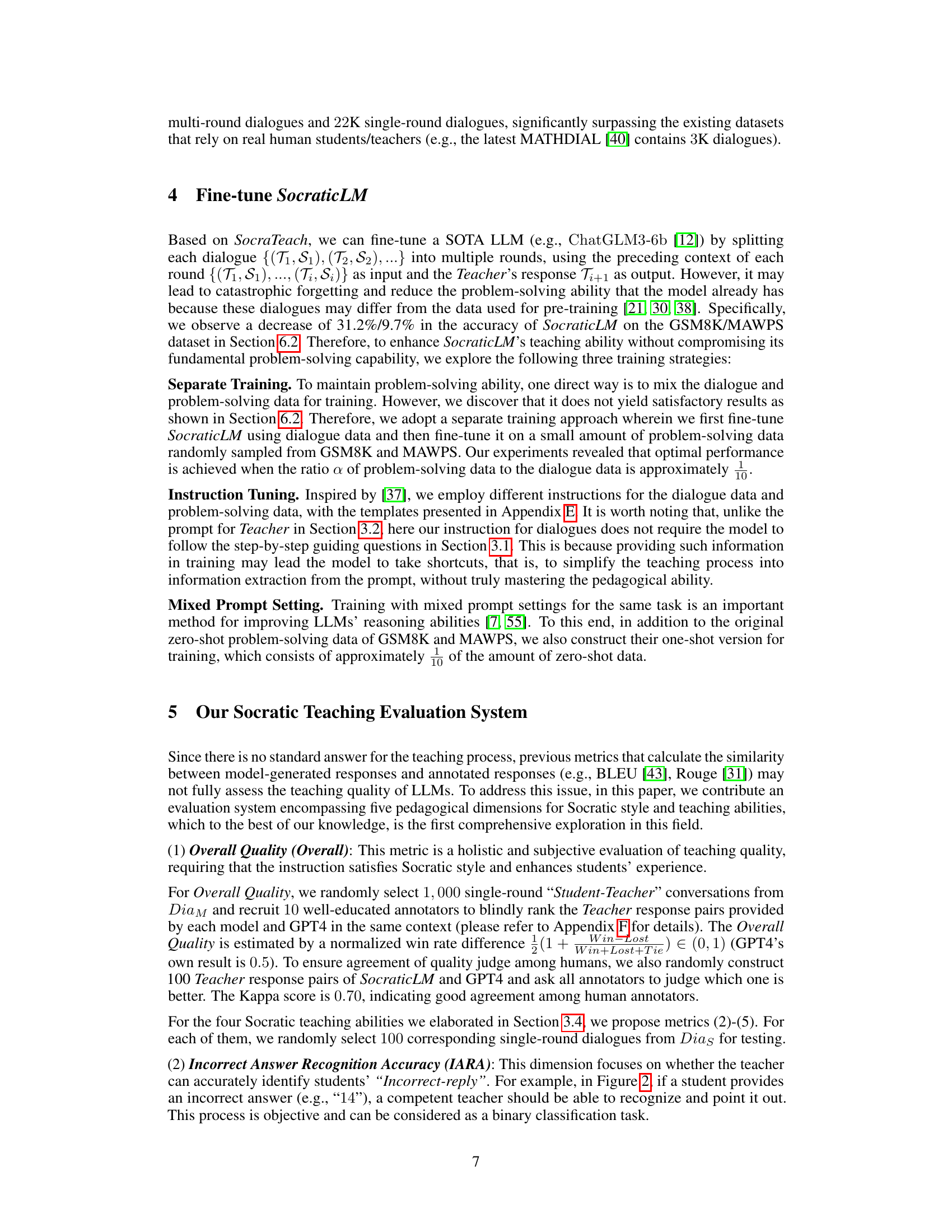

This figure shows the performance of SocraticLM and GPT4 on problems with varying numbers of step-by-step guiding questions. The x-axis represents the number of guiding questions, categorized into ranges (1-2, 3-4, 5-6, and ≥7). The y-axis shows the performance scores for four different pedagogical dimensions: IARA (Incorrect Answer Recognition Accuracy), CARA (Correct Answer Recognition Accuracy), SER (Successful Explanation Rate), and SRR (Successful Rejection Rate). The bars illustrate the scores achieved by each model in each category. This helps to demonstrate the effectiveness of the two LLMs at handling problems of varying complexity and the relative strengths and weaknesses of each model across different aspects of the teaching process.

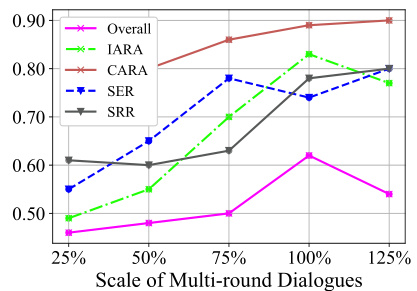

This figure shows the impact of varying the scale of multi-round dialogues on the performance of SocraticLM across five pedagogical dimensions: Overall Quality, IARA, CARA, SER, and SRR. The x-axis represents the percentage of multi-round dialogues used in training (25%, 50%, 75%, 100%, and 125%), while the y-axis shows the corresponding performance scores for each dimension. The results indicate that increasing the amount of training data generally improves performance, but there may be diminishing returns beyond a certain point (around 75% in this case). The IARA metric, specifically, shows a slight decrease at the 125% data scale, suggesting the importance of balancing different types of training data to avoid overfitting.

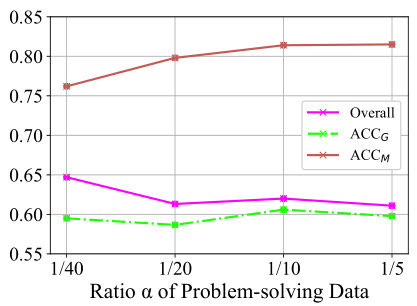

This figure shows the performance of SocraticLM on the problem-solving tasks (GSM8K and MAWPS) with different ratios of problem-solving data to dialogue data. The x-axis represents the ratio (α) of problem-solving data, and the y-axis represents the accuracy. The results show that an optimal ratio exists, and having too much or too little problem-solving data leads to suboptimal performance. This highlights the importance of balancing problem-solving and dialogue data during training to achieve optimal performance in both problem-solving and teaching abilities.

This figure contrasts two different teaching paradigms: Question-Answering and Thought-Provoking. The Question-Answering paradigm shows a passive learning approach where the student asks a question and receives a direct answer. The Thought-Provoking paradigm, on the other hand, shows an active learning approach where the teacher engages the student in a dialogue, using open-ended questions to guide the student towards the solution. This illustrates the core difference between traditional question-answering LLM applications and the novel Socratic teaching method proposed in the paper.

This figure compares two teaching paradigms: Question-Answering and Thought-Provoking. The Question-Answering paradigm shows a passive student receiving direct answers and explanations. The Thought-Provoking paradigm illustrates an active student engaging in a dialogue with the teacher, prompting deeper understanding through open-ended questions and independent thinking.

This figure compares two different teaching paradigms: Question-Answering and Thought-Provoking. The Question-Answering paradigm shows a passive learning process where the student receives direct answers and explanations, exemplified by a simple Q&A interaction. In contrast, the Thought-Provoking paradigm illustrates an active learning process that engages the student in a dialogue, prompting them to think critically and solve problems independently, similar to the Socratic method. This is shown through a multi-turn dialogue with guiding questions to encourage deeper understanding and problem-solving mastery. The difference highlights the core concept of SocraticLM’s approach.

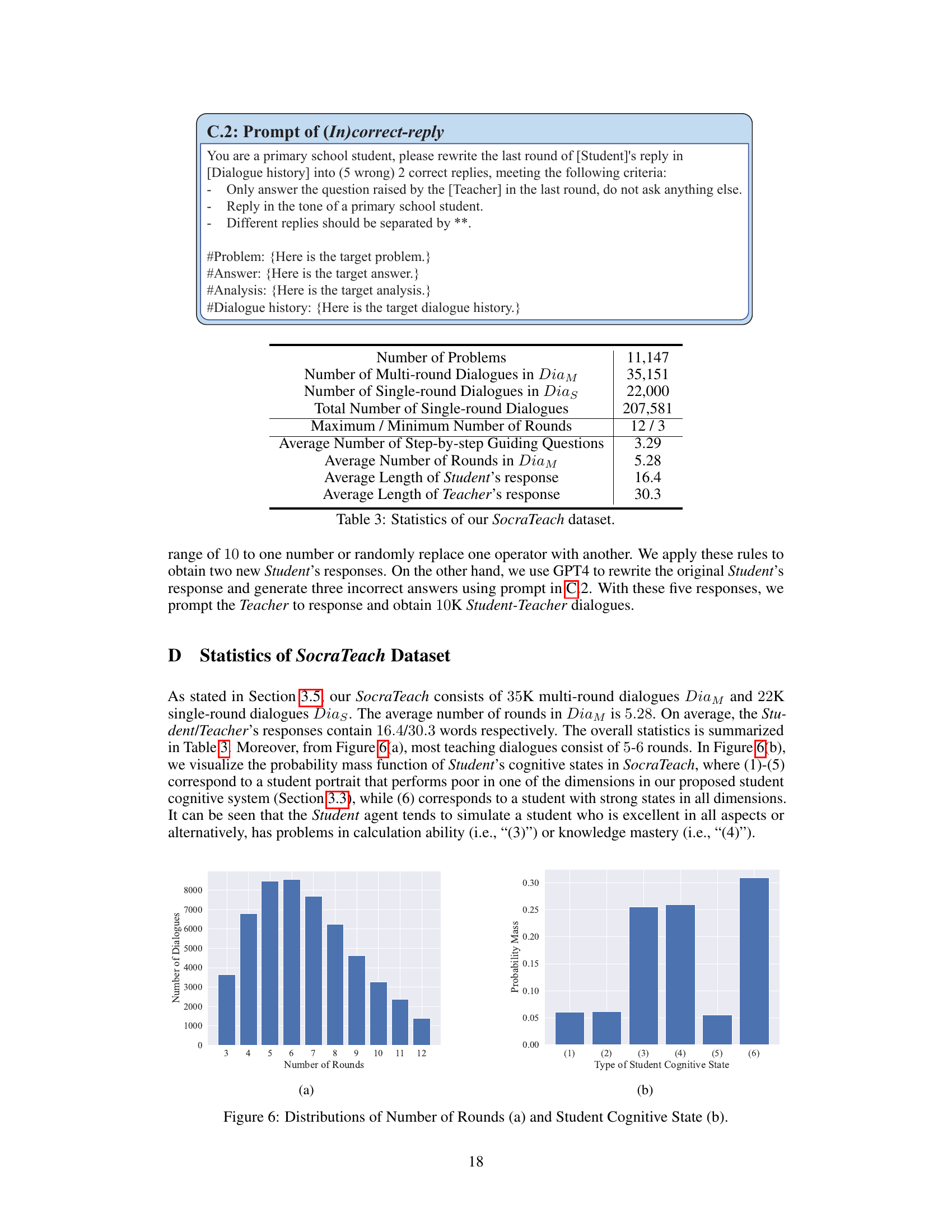

This figure shows the distribution of the number of rounds in the SocraTeach dataset’s multi-round teaching dialogues (a), and the distribution of student cognitive states in those dialogues (b). The number of rounds shows the length of the teaching conversations, while the student cognitive states illustrate the diversity of simulated student profiles across different comprehension levels, calculation abilities, knowledge, and learning enthusiasm. The distributions help to understand the characteristics and balance of the dataset.

This figure illustrates two different teaching paradigms: Question-Answering and Thought-Provoking. The Question-Answering paradigm shows a passive interaction where the student asks a question and receives a direct answer. In contrast, the Thought-Provoking paradigm depicts an active, Socratic dialogue where the teacher guides the student to the solution through a series of questions, encouraging independent problem-solving.

This figure contrasts two teaching paradigms: Question-Answering and Thought-Provoking. The Question-Answering paradigm shows a passive learning experience where the student asks a question and the LLM directly provides an answer. The Thought-Provoking paradigm illustrates an active learning process, mimicking a Socratic dialogue. Here, the LLM engages the student in a multi-turn conversation, guiding them through the problem-solving process with open-ended questions and prompting deeper thinking rather than simply providing direct answers. This highlights the difference between passive information delivery and active knowledge construction.

More on tables

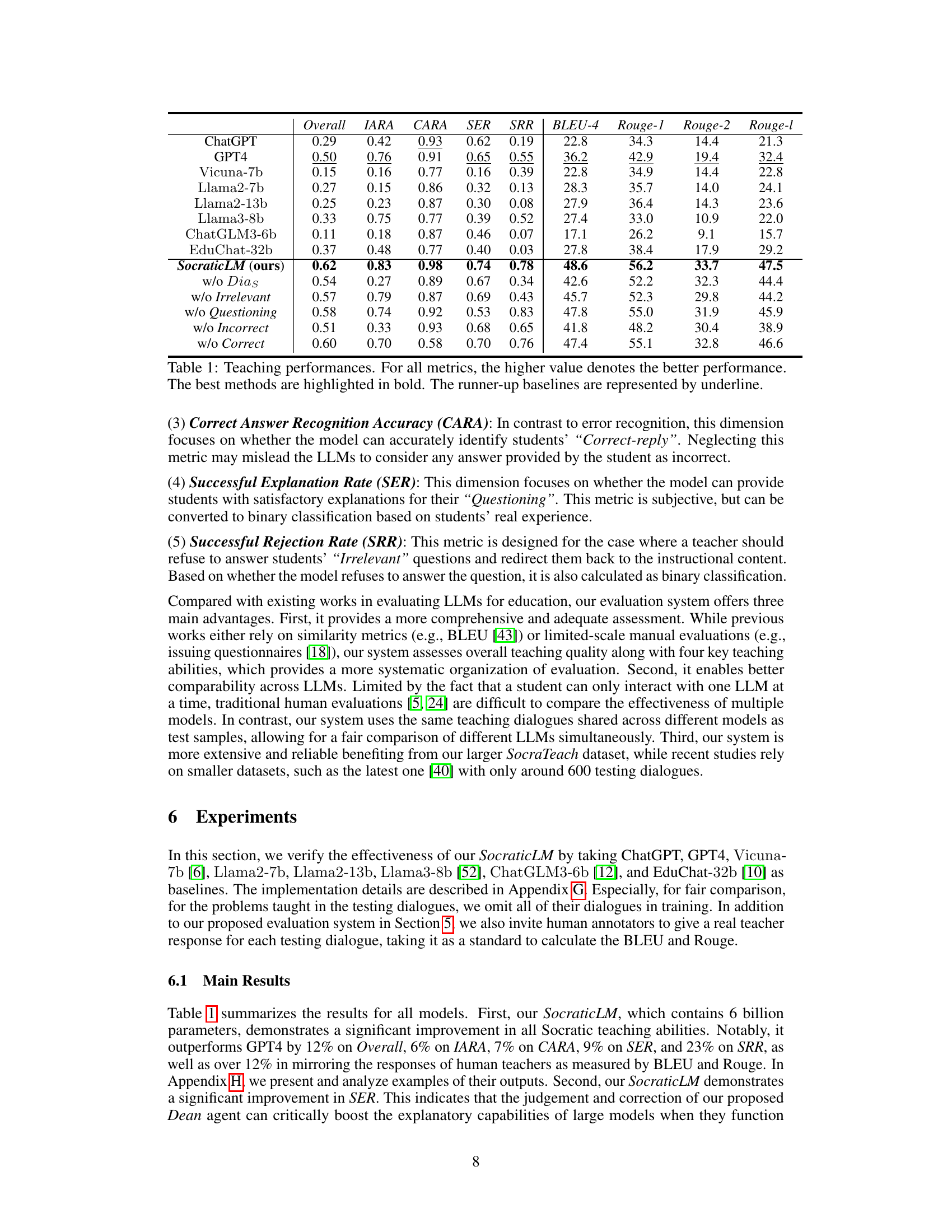

This table presents the results of an ablation study on the SocraticLM model. It shows the impact of removing the problem-solving data and each of the three training strategies (Separate Training, Instruction Tuning, Mixed Prompt Setting) on the overall teaching performance (Overall) and problem-solving accuracy on two benchmark datasets (GSM8K and MAWPS). The results demonstrate the contribution of each component to the overall performance of the model.

This table presents the performance comparison of several language models (LLMs) on five pedagogical dimensions: Overall Quality, Incorrect Answer Recognition Accuracy (IARA), Correct Answer Recognition Accuracy (CARA), Successful Explanation Rate (SER), and Successful Rejection Rate (SRR). The models are evaluated on their ability to engage in Socratic-style teaching. Higher scores indicate better performance across the pedagogical dimensions. SocraticLM, the model proposed in the paper, achieves significantly better results than other models including GPT4 on most metrics.

Full paper#